Introduction

Scientific research is facing increasing pressures: massive datasets, tight deadlines, complex challenges, and the need for faster breakthroughs. Artificial intelligence (AI) is becoming an indispensable ally for researchers across the globe, helping them accelerate discoveries, streamline workflows, and make data-driven decisions.

This guide explores the top AI use cases in research that are delivering real-world impact, from drug discovery to climate predictions, and the strategic considerations for adopting these technologies.

What is AI and Why Does It Matter in Research?

Definition of AI and Its Core Technologies

AI refers to systems capable of performing tasks that typically require human intelligence, such as learning from data, recognizing patterns, making decisions under uncertainty, and adapting over time. Core technologies include machine learning, deep learning, natural language processing (NLP), computer vision, and predictive analytics.

In research, AI means applying these technologies to automate and optimize tasks that were traditionally manual, such as data analysis, hypothesis generation, and experimental design. This enables researchers to gain deeper insights from their data, predict outcomes, and make faster decisions, ultimately accelerating the pace of discovery across various scientific disciplines.

The Growing Role of AI in Transforming Research

AI is transforming research from a traditional, manual, and time-consuming process into a streamlined, data-driven, and highly efficient one. In the past, research was often slow, relying on trial-and-error methods and static processes. Today, AI is replacing these methods with intelligent systems that continuously adapt to real-time data, enabling researchers to make faster, more accurate decisions, reduce variability, and optimize experimental outcomes.

From drug discovery and climate modeling to academic research and medical diagnostics, AI is accelerating progress across many fields. AI-powered tools can rapidly analyze vast amounts of data, identify hidden patterns, and generate new hypotheses, enabling researchers to move forward faster. Machine learning models also help researchers better predict outcomes, reducing uncertainty and increasing the reliability of results.

Key Statistics and Trends in AI Adoption in Research

AI adoption in research is accelerating as institutions and organizations seek more efficient, accurate, and scalable solutions to drive innovation. According to MarketsandMarkets, the global AI in research market was valued at $3.5 billion in 2020 and is projected to reach $12.3 billion by 2027, growing at a CAGR of 19.4%. This growth is driven by the increasing demand for faster discoveries, more precise data analysis, and enhanced research capabilities across various disciplines.
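
As a quick sanity check on the figures above, the compound annual growth rate can be recomputed from the two quoted market values. This is only a minimal sketch: the function name `cagr` is illustrative, and the seven-year span assumes growth is counted from the 2020 base year to 2027.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text: $3.5B (2020) growing to $12.3B (2027).
implied = cagr(3.5, 12.3, 2027 - 2020)
print(f"Implied CAGR from the two market values: {implied:.1%}")

# Projecting forward from the 2020 base at the quoted 19.4% rate instead.
projected_2027 = 3.5 * (1 + 0.194) ** 7
print(f"2027 value at a 19.4% CAGR: ${projected_2027:.1f}B")
```

The implied rate comes out slightly above the quoted 19.4%, which is plausibly explained by rounding or by a different base year in the original report.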

Furthermore, machine learning and deep learning account for approximately 50% of AI technologies used in research, underscoring the shift toward intelligent data processing, automated analysis, and improved decision-making. These technologies are pivotal for tasks like analyzing complex datasets, predicting research outcomes, and optimizing experimental processes.

With growing investments in digital infrastructure, especially in North America, Europe, and Asia-Pacific, AI is increasingly becoming a core tool for research institutions looking to stay competitive and innovative. Cloud-based solutions, edge computing, and AI-powered research platforms are making it easier for institutions to scale their AI adoption, even in remote or data-intensive environments.

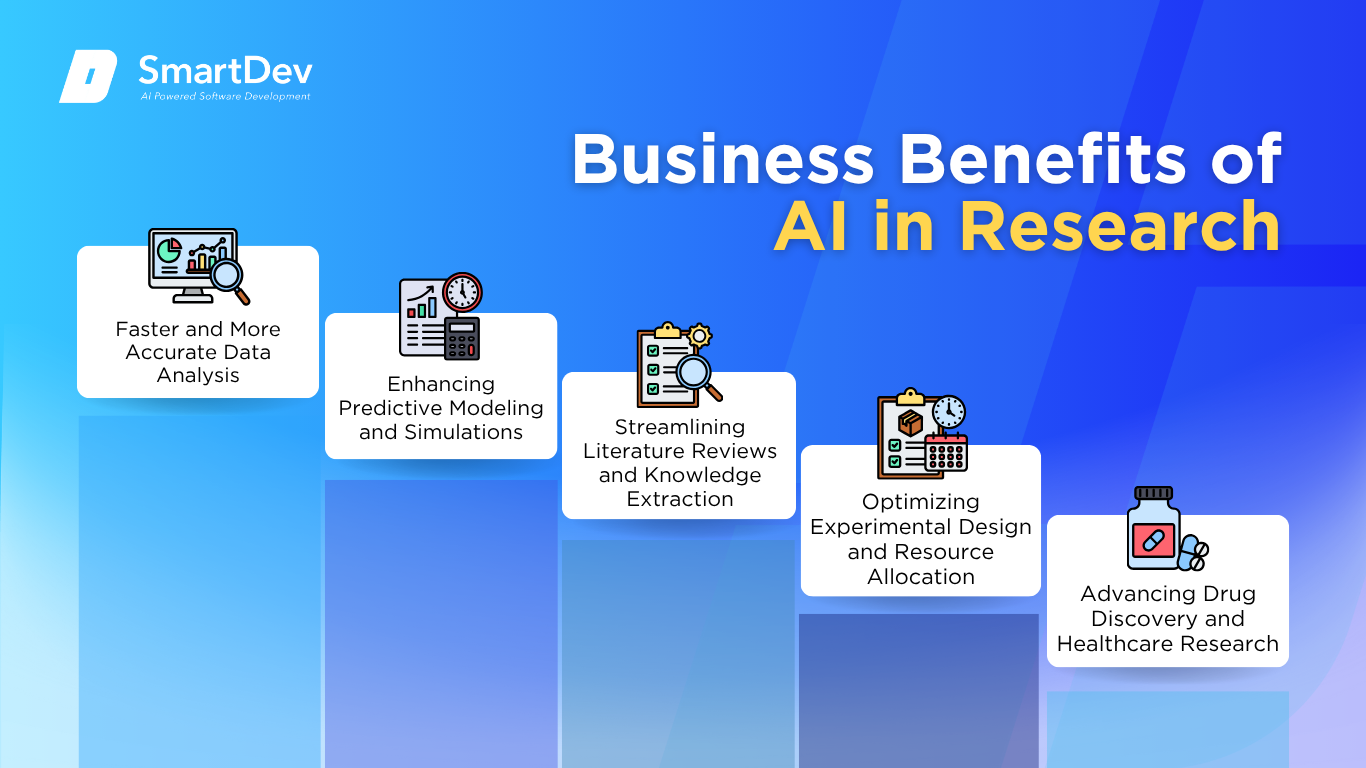

Business Benefits of AI in Research

AI is solving foundational challenges in research, ranging from data overload and time-consuming analyses to resource inefficiencies and limited scalability. Below are five key areas where AI is delivering measurable, transformative value in the world of research:

1. Faster and More Accurate Data Analysis

In research, particularly in fields like healthcare, climate science, and physics, vast amounts of data are generated every day. Traditional methods of data analysis can be slow, error-prone, and resource-intensive. AI is helping researchers overcome these hurdles by automating data analysis and enabling faster, more accurate results.

Machine learning models and deep learning algorithms can process and analyze large datasets with precision, detecting patterns that might be invisible to human researchers. These insights allow scientists to draw conclusions faster and more reliably, accelerating the pace of discovery. Whether analyzing clinical trial data, environmental models, or astronomical observations, AI reduces the time and effort needed to extract meaningful insights from complex datasets.

2. Enhancing Predictive Modeling and Simulations

Predictive modeling is essential in research fields like climate science, epidemiology, and drug discovery, where researchers need to anticipate future events or outcomes based on historical data. Traditional methods can struggle with the complexity and volume of data involved, often leading to inaccurate predictions or slow results.

AI is revolutionizing predictive modeling by applying machine learning algorithms that can handle vast datasets and complex variables. In drug discovery, for example, AI models can predict how certain compounds will interact with biological systems, vastly speeding up the discovery of new drugs. In climate research, AI-powered models can predict long-term environmental changes, helping policymakers make informed decisions about sustainability and conservation efforts.

3. Streamlining Literature Reviews and Knowledge Extraction

Literature reviews are a critical part of research, yet they are often time-consuming and exhausting. Researchers may have to manually read and analyze thousands of academic papers, making the process slow and inefficient. AI is solving this problem by automating the extraction of relevant information from academic literature.

NLP algorithms can scan large volumes of research papers, extracting key findings, identifying trends, and summarizing essential insights. This capability allows researchers to stay up-to-date on the latest studies without spending countless hours sifting through papers. By enabling faster access to critical knowledge, AI enhances researchers’ ability to build on existing work, identify gaps in knowledge, and generate new hypotheses.
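
To make the idea of scanning papers for relevance concrete, here is a small sketch that ranks abstracts against a query using a TF-IDF style score. It is a deliberately simple stand-in for production NLP pipelines, not the method of any particular tool; the function names, paper IDs, and abstracts are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def rank_by_relevance(query: str, abstracts: dict[str, str]) -> list[tuple[str, float]]:
    """Score each abstract by summed TF-IDF weight of the query terms it contains."""
    docs = {pid: Counter(tokenize(text)) for pid, text in abstracts.items()}
    n_docs = len(docs)

    # Document frequency: in how many abstracts does each term appear?
    doc_freq = Counter()
    for counts in docs.values():
        doc_freq.update(counts.keys())

    query_terms = set(tokenize(query))
    scores = []
    for pid, counts in docs.items():
        score = 0.0
        for term in query_terms:
            if term in counts:
                # Smoothed inverse document frequency: rarer terms weigh more.
                idf = math.log((1 + n_docs) / (1 + doc_freq[term])) + 1
                score += counts[term] * idf
        scores.append((pid, score))
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical abstracts, for illustration only.
abstracts = {
    "paper_a": "Deep learning predicts protein structure from amino acid sequence.",
    "paper_b": "Satellite imagery reveals deforestation trends in tropical forests.",
    "paper_c": "Machine learning models rank drug candidates by predicted protein binding.",
}
ranking = rank_by_relevance("protein structure prediction", abstracts)
for paper_id, score in ranking:
    print(paper_id, round(score, 3))
```

Exact word matching is the main limitation here: "prediction" in the query does not match "predicts" in an abstract, which is one reason real systems add stemming or embedding-based similarity.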

4. Optimizing Experimental Design and Resource Allocation

Experimentation is a cornerstone of scientific research, but designing effective experiments can be a lengthy and costly process. AI helps by optimizing experimental designs through predictive models that analyze past research data to identify the most promising variables to test.

For example, in material science, AI can predict which combinations of materials will produce the desired properties, reducing the number of experiments needed. In clinical research, AI can optimize trial designs by predicting patient responses based on genetic data, minimizing the need for large, costly trials. With AI models recommending the most efficient and impactful experimental setups, researchers can allocate their resources more effectively, saving time, money, and effort.

5. Advancing Drug Discovery and Healthcare Research

AI is having a profound impact on healthcare research, particularly in drug discovery and medical diagnostics. Traditional drug discovery is a slow and costly process with a high failure rate. AI helps researchers identify promising drug candidates faster and with more precision.

AI algorithms can analyze vast chemical libraries, genetic data, and clinical trial results to predict how different compounds will affect human biology. By identifying molecular patterns and potential interactions early in the process, AI significantly accelerates the identification of viable drug candidates.

Challenges Facing AI Adoption in Research

Despite its growing impact, AI adoption in research comes with significant challenges that can hinder its full integration and limit its effectiveness. Below are five of the most pressing obstacles that researchers and institutions face when implementing AI in their workflows:

1. Data Quality, Availability and Integration

AI systems rely heavily on high-quality, structured, and consistent data. However, in many research fields, especially in healthcare and environmental sciences, data can be fragmented, incomplete, or inconsistent. Research data is often stored in diverse formats across multiple platforms, making it difficult to integrate and analyze efficiently.

To overcome this, researchers must invest in building robust data infrastructure and implement standardized data collection practices. Without proper integration across systems and disciplines, AI models can provide incomplete, misleading, or inaccurate insights, slowing down the potential for breakthroughs.

2. Lack of AI Expertise in Research Domains

While AI holds great promise for research, its integration requires specialized knowledge in both the domain of study and AI itself. Many researchers are experts in their respective fields, but may lack the technical skills required to implement AI tools effectively. This creates a gap between researchers’ expertise and the technical knowledge needed to deploy AI systems.

As a result, AI adoption often requires collaboration between domain experts and data scientists or AI specialists. This interdisciplinary approach can be challenging due to the need for effective communication between researchers with different technical backgrounds. Additionally, the rapid pace of AI development means that ongoing training and upskilling are necessary for research teams to stay current with evolving tools and technologies.

3. High Implementation Costs

While AI has the potential to significantly improve research outcomes, the initial investment required for AI tools can be substantial. Developing and deploying AI models often requires access to high-performance computing infrastructure, specialized software, and skilled personnel—all of which can be expensive.

For many research institutions, especially those with limited budgets, the costs associated with adopting AI can be prohibitive. Smaller organizations may struggle to compete with larger institutions or private companies that have more resources to invest in AI infrastructure and talent. Furthermore, there are additional costs related to data collection, cleaning, and integration, as well as ongoing maintenance of AI systems.

4. Ethical Concerns and Data Privacy

AI in research, particularly in healthcare and social sciences, raises significant ethical concerns. These include issues related to data privacy, informed consent, and potential biases in AI algorithms. Furthermore, AI models can inadvertently reinforce existing biases present in the data they are trained on.

Addressing these challenges requires strong governance frameworks, transparent AI model development processes, and ethical guidelines to ensure that AI is used responsibly and fairly in research. Research institutions must prioritize data security, minimize bias, and ensure that ethical standards are adhered to throughout the AI development lifecycle.

5. Resistance to Change and Institutional Inertia

Adopting AI in research requires a shift in mindset and a willingness to embrace change. However, many research institutions are rooted in traditional methods and have established workflows that can be difficult to disrupt. Resistance to AI adoption may come from researchers who are skeptical of AI’s effectiveness or fear that AI could replace human judgment and expertise.

Moreover, institutional inertia can slow the pace of AI implementation, as many academic and research organizations are structured in ways that prioritize conservative approaches to innovation. The time and effort required to integrate AI into established research processes can be seen as a barrier, especially when researchers are already under pressure to meet publication deadlines and funding requirements.

Specific Applications of AI in Research

1. AI in Data Analysis and Pattern Recognition

AI-driven data analysis is revolutionizing research by allowing scientists to process massive datasets quickly and accurately. Machine learning algorithms can uncover hidden patterns, correlations, and anomalies that human researchers might miss, especially in complex or unstructured data. This application is crucial in fields like genomics, where it can identify genetic variations linked to diseases, or in social science, where it helps researchers detect emerging social trends from vast datasets.

Furthermore, AI models allow for continuous refinement of research methods as new data is incorporated. By feeding new data into existing models, researchers can improve predictions and adapt to changing conditions in real-time. This iterative approach helps to optimize the accuracy of predictions, making AI indispensable in long-term studies like climate change or drug development.
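
One simple way to picture this iterative refinement is a model whose estimate is updated each time a new batch of results arrives. The sketch below uses a Beta-Bernoulli update for a success probability; it is an illustrative technique chosen for its simplicity, not the approach used by any system named in this guide, and the class name and outcome batches are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SuccessRateModel:
    """Beta-Bernoulli estimate of a success probability, refined as data arrives."""

    alpha: float = 1.0  # prior pseudo-count of successes (uniform prior)
    beta: float = 1.0   # prior pseudo-count of failures

    def update(self, outcomes: list[bool]) -> None:
        """Fold a new batch of pass/fail outcomes into the running estimate."""
        successes = sum(outcomes)
        self.alpha += successes
        self.beta += len(outcomes) - successes

    @property
    def mean(self) -> float:
        """Current posterior mean of the success probability."""
        return self.alpha / (self.alpha + self.beta)


# Hypothetical batches of experimental outcomes arriving over time.
model = SuccessRateModel()
estimates = [model.mean]
for batch in ([True, False, True], [True, True, True, False], [True, True]):
    model.update(batch)
    estimates.append(model.mean)

print([round(e, 3) for e in estimates])
```

Each batch shifts the estimate without retraining from scratch, which is the property that makes incremental updating attractive in long-running studies.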

Real-World Example: In structural biology, DeepMind’s AlphaFold uses AI to predict protein folding, a critical area of biomedical research. Trained on large collections of known protein structures, AlphaFold has successfully predicted the 3D structures of proteins, accelerating drug discovery and helping scientists understand complex diseases more effectively. This breakthrough has opened up new avenues for designing treatments and understanding the underlying causes of various medical conditions.

2. AI in Drug Discovery and Healthcare Research

AI has dramatically accelerated the pace of drug discovery by analyzing vast chemical and biological datasets to identify potential drug candidates. Using machine learning, AI models can predict the interaction between compounds and biological systems, helping researchers identify promising drugs faster than traditional methods. This reduces the time and cost of the lengthy drug development process, which can take many years and cost enormous sums.

Moreover, AI-driven tools allow for the identification of biomarkers and personalized treatment plans. By analyzing patient data, AI can predict which treatment options are most likely to work for an individual based on their unique genetic profile. This personalized approach enhances the effectiveness of treatments and minimizes the risk of side effects, bringing precision medicine to the forefront of healthcare.

Real-World Example: Insilico Medicine uses AI to identify new drug candidates and optimize clinical trial designs. Their AI-driven platform has already been used to discover a novel compound for fibrosis, which has entered clinical trials. The platform analyzes vast chemical and biological data, significantly reducing the time to identify new therapeutic opportunities.

3. AI in Environmental Science and Climate Change Research

AI is transforming environmental science by helping researchers model and predict climate patterns more accurately. Machine learning algorithms can process vast amounts of environmental data, such as temperature changes, atmospheric conditions, and carbon emissions, to identify trends and forecast future conditions. These predictions are crucial for understanding and mitigating the impacts of climate change, particularly in areas such as sea-level rise, deforestation, and biodiversity loss.

Additionally, AI-driven optimization techniques can help in resource management, improving the efficiency of renewable energy production and conservation efforts. By predicting energy demand and optimizing the use of renewable sources like wind or solar power, AI helps reduce carbon footprints and promotes sustainable practices. This technology also aids in monitoring deforestation or pollution levels, providing real-time insights that can inform policy and conservation strategies.

Real-World Example: Google’s AI for Earth initiative uses machine learning to predict and mitigate the impact of climate change. One of their projects involves using AI to analyze satellite imagery and track deforestation in real time, allowing for faster intervention and policy changes. By combining AI with environmental monitoring, Google is helping drive more effective climate action.

4. AI in Social Sciences and Behavioral Research

In the social sciences, AI is used to analyze large-scale human behavior data, providing insights into patterns and societal trends. Natural language processing (NLP) models, for example, can analyze social media posts, surveys, and online discussions to gauge public opinion on various issues. This allows researchers to better understand human behavior, political sentiment, and societal changes over time, offering real-time data for studies on social movements or political dynamics.

Additionally, AI models can help identify biases in social research, supporting more balanced and representative studies. By analyzing demographic data, AI can reveal hidden biases in surveys or datasets, such as groups that are over- or under-represented relative to the population being studied. This helps keep research outcomes from being skewed by unacknowledged biases, especially in sensitive areas like public health or criminology.
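
The most basic version of such a bias check compares each group's share of a sample with its share of the reference population. The sketch below shows that comparison only; the function name, the 5-point tolerance, the age bands, and all counts are hypothetical values chosen for illustration.

```python
def representation_gaps(
    sample_counts: dict[str, int],
    population_shares: dict[str, float],
    tolerance: float = 0.05,
) -> dict[str, float]:
    """Return groups whose sample share differs from the population share
    by more than `tolerance` (positive = over-represented)."""
    total = sum(sample_counts.values())
    gaps = {}
    for group, population_share in population_shares.items():
        sample_share = sample_counts.get(group, 0) / total
        difference = sample_share - population_share
        if abs(difference) > tolerance:
            gaps[group] = round(difference, 3)
    return gaps


# Hypothetical survey respondents by age band, and assumed population shares.
sample_counts = {"18-34": 520, "35-54": 330, "55+": 150}
population_shares = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}

gaps = representation_gaps(sample_counts, population_shares)
print(gaps)
```

A flagged gap does not by itself prove the findings are biased, but it tells researchers where reweighting or additional sampling may be needed.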

Real-World Example: The Behavioral Insights Team (BIT) uses AI to analyze data from various sources, including social media and surveys, to better understand human behavior. Their AI-powered tools help governments and organizations design policies that promote positive behavioral changes, such as encouraging healthy eating or boosting vaccination rates. This data-driven approach has led to more effective public policy interventions worldwide.

5. AI in Automation and Experimentation

AI-driven automation is accelerating research by reducing the time spent on repetitive tasks such as data collection and analysis. In experimental research, AI can automate laboratory processes like sample testing, data logging, and analysis, freeing up researchers to focus on more complex aspects of their work. This automation not only increases the speed of experiments but also reduces the likelihood of human error, ensuring more consistent and reliable results.

Additionally, AI is used to optimize experimental design by predicting which conditions are most likely to yield successful results. By analyzing historical data from previous experiments, AI models can suggest optimal testing parameters or new experimental approaches. This helps researchers prioritize their time and resources more effectively, improving overall research efficiency and outcomes.

Real-World Example: Labster, a company that offers virtual labs powered by AI, provides students and researchers with automated experiments that simulate real-world laboratory settings. Their AI-driven platform helps users conduct experiments remotely, saving time and resources while offering instant feedback and results. This innovation is transforming how both educational and professional researchers approach experimentation.

6. AI in Scientific Collaboration and Knowledge Discovery

AI is enhancing scientific collaboration by facilitating the sharing and synthesis of research findings across disciplines. Knowledge graphs and AI-driven recommendation systems can suggest relevant papers, researchers, or datasets to help connect researchers from different fields. This ability to discover new connections and insights fosters cross-disciplinary collaboration, which is increasingly important for tackling complex, global challenges like pandemics or climate change.

Furthermore, AI models can help identify gaps in existing research, guiding scientists toward unexplored areas or emerging topics. By analyzing the body of existing research, AI can pinpoint under-researched topics and suggest novel research directions. This encourages more efficient and innovative scientific discovery by highlighting areas that require further exploration.

Real-World Example: Microsoft’s Project Hanover uses AI to assist researchers in oncology by analyzing large datasets of clinical trials, research papers, and patient data. The AI system helps identify novel cancer therapies by connecting disparate data points, which has led to the discovery of new treatment pathways. This collaborative AI platform significantly accelerates the pace of cancer research and improves the chances of breakthrough discoveries.

Examples of AI in Research

Real-World Case Studies

1. DeepMind: AlphaFold and Protein Structure Prediction

To overcome the long-standing challenge of predicting protein structures, DeepMind developed AlphaFold, an AI system capable of modeling protein folding with unprecedented accuracy. The goal was to accelerate biomedical research and drug discovery by providing reliable 3D structures of proteins, which are critical for understanding disease mechanisms. By leveraging deep learning models trained on vast datasets of known protein structures, AlphaFold can predict how a protein folds from its amino acid sequence alone.

The system analyzes patterns in protein sequences and uses attention-based neural networks to model the spatial arrangements of atoms within proteins. Millions of experimental and simulated protein data points were used to train the model, allowing it to generalize to previously unseen proteins. Researchers integrate these predictions into drug design workflows and structural biology studies to guide experiments and prioritize targets efficiently.

As a result, AlphaFold has predicted the structures of nearly all human proteins and of proteins from many other organisms. This breakthrough has dramatically reduced the time required to obtain a working structural model, which previously depended on slow experimental methods, accelerating research in genetics, drug discovery, and disease understanding. The system is now widely used by academic and pharmaceutical researchers worldwide, exemplifying AI’s transformative impact on scientific discovery.

2. IBM Watson Health: Accelerating Drug Discovery

IBM Watson Health applied AI to process vast medical literature and patient data to identify potential drug candidates and optimize clinical trial designs. The initiative aimed to reduce the time-consuming and costly traditional drug discovery process, where analyzing research papers, trial outcomes, and chemical interactions manually could take years. Watson uses natural language processing and machine learning to extract meaningful insights from unstructured medical data, making connections between diseases, treatments, and biomarkers.

The platform integrates clinical data, genomic datasets, and published research to suggest new therapeutic approaches and predict patient responses. Machine learning algorithms rank drug candidates based on efficacy, safety, and novelty while identifying potential side effects early in development. This AI-driven system enables research teams to focus on the most promising avenues, reducing trial-and-error in the lab.

Watson Health has helped pharmaceutical companies identify novel compounds faster than conventional methods. By prioritizing drug candidates and refining trial designs, AI has reduced R&D costs and shortened development cycles. This case demonstrates how AI accelerates biomedical research and improves the efficiency of healthcare innovation.

3. Google AI for Earth: Environmental Research

Google AI for Earth leverages AI to monitor environmental changes and inform conservation strategies, addressing challenges such as deforestation, biodiversity loss, and climate change. The initiative uses satellite imagery, sensor networks, and climate datasets to detect patterns and predict ecological trends. AI models can identify illegal logging activities, monitor ecosystem health, and track wildlife populations in real time, providing actionable insights to researchers and policymakers.

Machine learning algorithms process petabytes of remote sensing data to detect subtle environmental changes that humans might miss. AI models integrate temporal and spatial data to forecast trends like forest degradation or habitat loss, guiding targeted conservation actions. The system also supports climate modeling and resource management, helping scientists and governments make data-driven decisions to preserve natural ecosystems.

Through AI-assisted monitoring, Google has enabled faster detection of deforestation hotspots in regions like the Amazon rainforest. Conservation organizations use these insights to respond proactively, protecting endangered habitats and species. The initiative highlights AI’s ability to enhance environmental research and support sustainable management of natural resources.

Innovative AI Solutions

AI-Driven Innovations Transforming Research

Emerging Technologies in AI for Research

Generative AI is reshaping how researchers conceive, draft, and iterate on studies. Large language models (LLMs) can help generate hypotheses, outline structure, and even suggest literature links by scanning thousands of documents in seconds. This reduces the cognitive load on researchers and accelerates the transition from idea to draft. For example, researchers have used LLMs to assist in literature synthesis and writing, enabling faster iterations of sections like introductions or discussions.

Beyond text, computer vision and multimodal AI are enabling new modes of analysis. In fields like neuroscience, ecology, materials science, and medicine, image-based data (microscopy, satellite images, scans) are abundant. AI models can detect patterns, segment features, and flag anomalies at a scale that manual inspection cannot match, and often with greater consistency. For instance, algorithms in biomedical research detect tumor boundaries or cell substructures automatically.

Agentic and autonomous AI agents are also emerging in research workflows. These systems don’t just assist — they act, coordinating tasks like data collection, experiment scheduling, or adaptive parameter tuning in simulations. Over time, they learn from prior experiments and dynamically adjust strategy, making scientific workflows more adaptive and responsive. This push toward autonomy is still nascent, but prototypes in lab automation and robotics show the direction.

AI’s Role in Sustainability Efforts

AI plays a critical role in making research itself more sustainable and efficient. In large experimental domains (e.g. climate modeling, materials discovery, genomics), computing and resource consumption are significant. Predictive models can optimize which experiments to run to maximize information gain, reducing wasted computing or lab time. In that sense, AI helps “prune” unnecessary experiments, focusing resources on high-impact experiments.

More broadly, by speeding up research cycles and reducing trial‑and‑error, AI helps reduce the waste of reagents, computing cycles, and human effort. This is especially important in fields where experiments are costly or materials are rare. When AI accelerates “fail fast, learn fast” cycles, it helps conserve both capital and ecological resources.
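
The idea of pruning experiments by expected information can be sketched with a simple uncertainty heuristic: run the experiments whose predicted outcome is least certain, since a near-certain result teaches little. This uses the entropy of a predicted pass/fail outcome as a rough proxy for information gain, which is a simplification; the candidate names and predicted probabilities are hypothetical.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy in bits of a yes/no outcome with success probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def prioritize(predicted_success: dict[str, float], budget: int) -> list[str]:
    """Pick the `budget` experiments whose outcomes the model is least sure about."""
    ranked = sorted(
        predicted_success,
        key=lambda name: binary_entropy(predicted_success[name]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical model predictions for four candidate experiments.
predicted_success = {
    "alloy_A": 0.98,  # almost certainly works: little to learn
    "alloy_B": 0.55,  # close to a coin flip: most informative
    "alloy_C": 0.03,  # almost certainly fails: little to learn
    "alloy_D": 0.30,
}
to_run = prioritize(predicted_success, budget=2)
print(to_run)
```

In practice the selection rule would also weigh cost and expected payoff, but even this crude ranking shows how a predictive model can steer a limited experimental budget.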

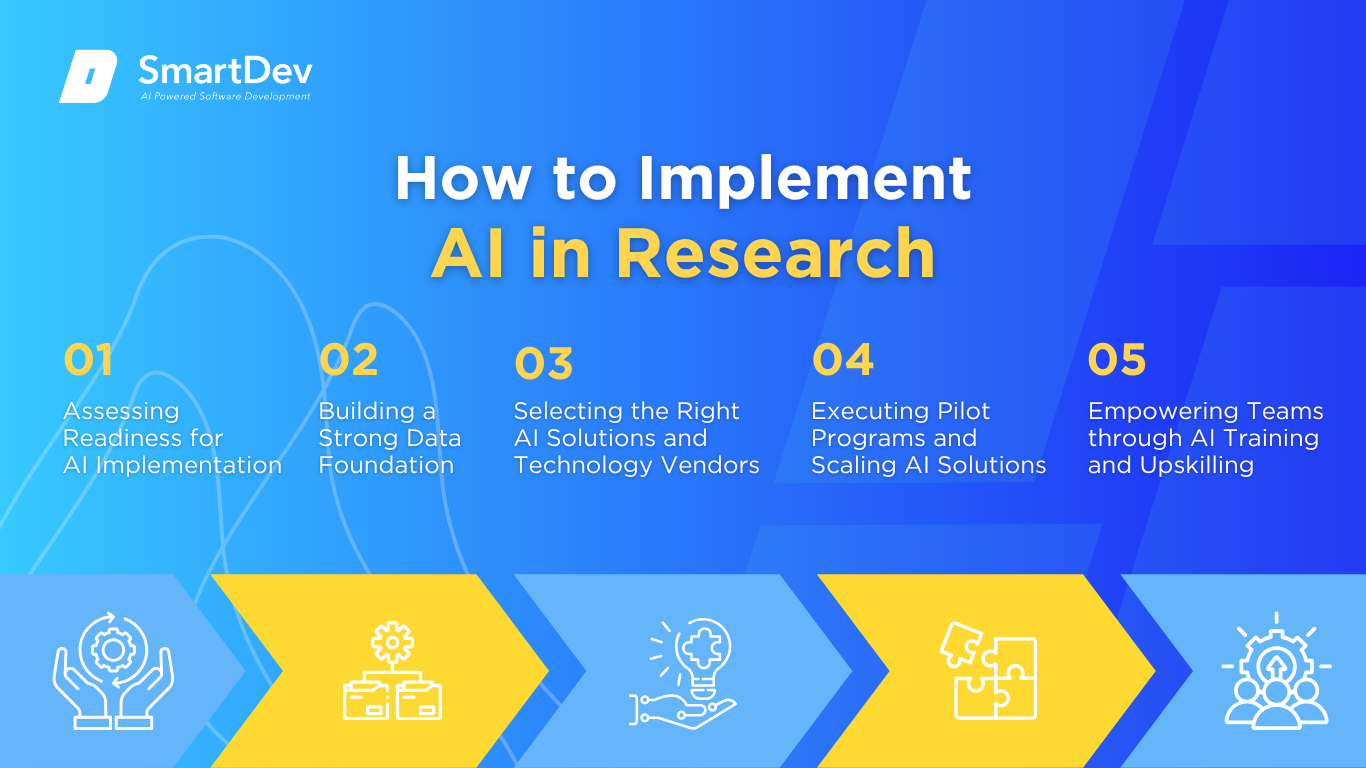

How to Implement AI in Research

Step 1: Assessing Readiness for AI Adoption

Before introducing AI into research workflows, it’s essential to pinpoint areas where AI can deliver measurable improvements. Begin by identifying phases of the research cycle that are data-intensive, repetitive, or involve time-consuming analysis. These often include literature reviews, data annotation, statistical modeling, and experiment optimization, domains where AI can significantly accelerate outcomes or uncover patterns hidden in complex datasets.

Readiness assessment also requires a comprehensive look at infrastructure, culture, and collaboration. Evaluate whether your current systems can support AI tools, from data formats and storage architecture to compute resources and internet connectivity. Equally important is assessing team readiness: are researchers open to integrating AI into their workflow, or is there skepticism? Including scientists, data engineers, and institutional IT leaders early in the process ensures buy-in and sets the groundwork for long-term success.

Step 2: Building a Strong Data Foundation

AI models are only as reliable as the data they’re built on. For research institutions, this begins with collecting, cleaning, and organizing data from diverse sources, be it lab sensors, observational logs, survey results, or legacy databases. Data should be structured, labeled, and timestamped in formats that AI systems can interpret easily and consistently.

Establishing strong data governance is equally crucial. This includes setting standards for data quality, access permissions, version control, and compliance, especially in sensitive research areas like genomics, social science, or healthcare. Whether training an AI model to predict molecular behavior or automate document classification, a well-managed data ecosystem ensures your insights are not just accurate but replicable and ethically sound.
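
The structured, labeled, and timestamped records described in this step could look something like the sketch below, which also rejects malformed rows before they reach a model. The `Observation` schema, its field names, and the sample rows are assumptions made for illustration; a real institution would define its own standard.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Observation:
    """One measurement in a hypothetical standardized research record format."""

    source: str            # e.g. "lab_sensor", "survey", "legacy_db"
    variable: str          # what was measured
    value: float
    unit: str
    label: str             # annotation used for training or filtering
    recorded_at: datetime  # must carry a timezone

    def __post_init__(self) -> None:
        if not all([self.source, self.variable, self.unit, self.label]):
            raise ValueError("source, variable, unit and label must be non-empty")
        if isinstance(self.value, bool) or not isinstance(self.value, (int, float)):
            raise ValueError("value must be numeric")
        if self.recorded_at.tzinfo is None:
            raise ValueError("recorded_at must be timezone-aware")


def validate_batch(rows: list[dict]) -> tuple[list[Observation], list[str]]:
    """Split raw rows into valid observations and human-readable error messages."""
    valid, errors = [], []
    for index, row in enumerate(rows):
        try:
            valid.append(Observation(**row))
        except (TypeError, ValueError) as exc:
            errors.append(f"row {index}: {exc}")
    return valid, errors


# Hypothetical rows: one clean, one with a naive timestamp, one missing its unit.
rows = [
    {"source": "lab_sensor", "variable": "temperature", "value": 21.4, "unit": "C",
     "label": "control", "recorded_at": datetime(2024, 5, 1, 9, 0, tzinfo=timezone.utc)},
    {"source": "lab_sensor", "variable": "temperature", "value": 22.1, "unit": "C",
     "label": "control", "recorded_at": datetime(2024, 5, 1, 9, 5)},
    {"source": "survey", "variable": "response_time", "value": 3.2,
     "label": "pilot", "recorded_at": datetime(2024, 5, 2, 14, 0, tzinfo=timezone.utc)},
]
valid, errors = validate_batch(rows)
print(len(valid), "valid;", errors)
```

Collecting errors instead of stopping at the first bad row gives data stewards a complete list of what needs fixing in each batch.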

Step 3: Choosing the Right Tools and Vendors

Finding the right AI tools for research means balancing flexibility, transparency, and domain alignment. Many open-source platforms provide robust support for customizing models to fit specific research goals, such as NLP for academic literature mining or computer vision for imaging datasets. Choose tools that allow for rapid prototyping, reproducibility, and easy integration with existing workflows like Jupyter Notebooks or institutional data lakes.

When evaluating vendors, look for those with a track record in scientific applications—not just general-purpose AI. Ask how their tools support collaboration, data privacy, and continuous learning. Some research units may benefit from modular AI solutions, while others might require full-stack development partnerships. A clear understanding of licensing costs, hardware compatibility, and support requirements helps prevent costly misalignments or underutilized technologies.

Step 4: Pilot Testing and Scaling Up

Piloting is the safest way to validate AI’s impact without disrupting the broader research process. Start with one or two high-value use cases, such as automating figure labeling in published papers or using AI to suggest citations. Choose areas where results can be easily quantified, like time saved, accuracy improvements, or expanded analytical capacity.

Once a pilot demonstrates tangible value, scaling becomes a structured process of integration and replication. Expand across departments by tailoring models to new datasets, disciplines, or experimental conditions. Document learnings from early rollouts to inform protocols and reduce friction in future deployments. As research projects evolve, your AI systems should be designed to adapt and iterate with them.

Step 5: Training Teams for Successful Implementation

People determine whether AI adoption thrives. Training your teams ensures AI becomes a trusted part of the research process rather than an opaque tool. Focus on practical education: teach researchers how to evaluate model outputs, manage data pipelines, and integrate AI into their existing analysis routines. Avoid overly technical training unless it’s tied directly to research needs.

Interdisciplinary collaboration is vital. Encourage dialogue between domain experts and technical staff to co-design AI applications that reflect real-world constraints and goals. Peer-led workshops, collaborative pilot reviews, and shared repositories can help foster a culture of learning. Empowering teams through continuous training not only boosts AI adoption—it also turns researchers into champions of innovation.

Measuring the ROI of AI in Research

Key Metrics to Track Success

Measuring the return on AI in research means connecting improvements in workflow efficiency, discovery speed, and scientific impact to tangible outcomes. One of the most immediate indicators is time saved across critical phases—literature reviews completed faster, data annotated with fewer manual hours, or models trained and deployed with reduced computational overhead. These time gains free up researchers to focus on hypothesis building and experimentation, directly boosting output and quality.

Cost reduction also plays a pivotal role in assessing ROI. AI helps minimize wasted experiments, reduces the need for redundant data collection, and streamlines lab operations. Predictive models in materials science or biomedical research, for example, can flag unlikely candidates before costly physical testing begins. These efficiencies lower the cost per discovery and increase research throughput, ultimately allowing institutions to stretch grant funding or R&D budgets further without sacrificing rigor or impact.
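The two metrics above, cost per discovery and overall return, can be written down as simple arithmetic. The following is a minimal sketch under stated assumptions: the function names, the definition of ROI as net savings divided by investment, and every figure are illustrative choices, not values from a real institution.

```python
def cost_per_discovery(total_cost: float, discoveries: int) -> float:
    """Total research spend divided by the number of validated discoveries."""
    if discoveries <= 0:
        raise ValueError("discoveries must be positive")
    return total_cost / discoveries


def simple_roi(savings: float, ai_investment: float) -> float:
    """Net savings relative to what was spent on AI: (savings - cost) / cost."""
    if ai_investment <= 0:
        raise ValueError("ai_investment must be positive")
    return (savings - ai_investment) / ai_investment


# Hypothetical yearly figures for one lab (assumptions for illustration).
baseline_cpd = cost_per_discovery(500_000.0, 10)
with_ai_cpd = cost_per_discovery(450_000.0, 15)

hours_saved = 1_200           # researcher hours freed by automation
hourly_cost = 60.0            # loaded cost per researcher hour
avoided_experiments = 40_000.0  # spend avoided on unlikely candidates
savings = hours_saved * hourly_cost + avoided_experiments

roi = simple_roi(savings, ai_investment=80_000.0)
print(baseline_cpd, with_ai_cpd, roi)
```

How a "discovery" is counted and which costs are attributed to AI are judgment calls. Whatever definitions are chosen should be fixed before the comparison is made.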

Case Studies Demonstrating ROI

Real-world applications of AI in research consistently deliver high returns when thoughtfully implemented. In one case, the Chan Zuckerberg Biohub used machine learning to accelerate cell classification from microscopy images. The AI model reduced the processing time from days to minutes, enabling faster experimental turnaround and freeing up valuable human expertise for higher-order analysis, effectively transforming the lab’s productivity model.

In computational biology, DeepMind’s AlphaFold transformed protein structure prediction, producing in hours or less structural models that could take months or years to determine experimentally. This breakthrough has not only saved countless research hours but also opened new avenues for drug discovery and disease understanding. Pharmaceutical companies leveraging AlphaFold’s insights report faster target validation and preclinical development timelines, translating directly into financial and clinical ROI.

Common Pitfalls and How to Avoid Them

Despite the promise, AI in research can stumble if deployed without clear direction or foundational support. A frequent issue is launching AI initiatives with vague objectives or no aligned KPIs. This often leads to underutilized tools or projects that never move beyond the experimental stage. The solution is to start with specific, measurable challenges, such as reducing analysis time or increasing data reproducibility, and build from there.

Another common pitfall is poor data quality. In research, where datasets are often heterogeneous, incomplete, or biased, rushing into model training without proper data preparation can compromise outcomes and credibility. Ensuring strong data hygiene, transparent documentation, and ethical oversight is essential. Failing to do so not only reduces ROI but can also damage institutional trust in AI and delay broader adoption across departments.
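A basic data-hygiene check can run before any model training. The sketch below reports how often each field is missing and how the labels are distributed, two quick signals of incomplete or skewed data. The `audit_records` helper, the field names, and the sample records are all hypothetical. A real audit would go further, for example by checking units, duplicates, and provenance.

```python
from collections import Counter


def audit_records(records: list[dict], label_key: str) -> dict:
    """Report per-field missing rates and the share of each label."""
    if not records:
        raise ValueError("no records to audit")
    n = len(records)
    # Every field seen in any record; absent keys count as missing values.
    fields = sorted({key for rec in records for key in rec})
    missing_rate = {
        f: sum(1 for rec in records if rec.get(f) is None) / n for f in fields
    }
    labels = Counter(
        rec[label_key] for rec in records if rec.get(label_key) is not None
    )
    labeled = sum(labels.values())
    label_share = {lab: c / labeled for lab, c in labels.items()} if labeled else {}
    return {"missing_rate": missing_rate, "label_share": label_share}


# Made-up assay records: one explicit None and one absent "ph" field.
records = [
    {"sample": "s1", "ph": 7.1, "label": "active"},
    {"sample": "s2", "ph": None, "label": "inactive"},
    {"sample": "s3", "ph": 6.8, "label": "inactive"},
    {"sample": "s4", "label": "inactive"},
]
report = audit_records(records, "label")
print(report)
```

A high missing rate or a heavily skewed label share does not make a dataset unusable, but it is worth documenting before results are reported.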

Future Trends of AI in Research

Predictions for the Next Decade

AI is poised to redefine the research landscape, evolving from a supporting tool to a core driver of scientific inquiry. Over the next 5 to 10 years, we can expect AI to move beyond automation and into active collaboration, where machine learning models assist in designing experiments, forming hypotheses, and even suggesting new theoretical frameworks. Research institutions will adopt intelligent systems that adapt in real time, learning from every iteration to improve accuracy, speed, and discovery potential.

This evolution will coincide with advancements in multimodal AI, quantum computing, and synthetic data generation. Together, these innovations will enable simulations of complex systems, from drug interactions to climate dynamics, with unprecedented precision. We’ll also see deeper integration of AI with lab robotics and digital lab assistants, creating self-updating workflows where data is collected, analyzed, and acted upon autonomously. AI won’t just help scientists research faster; it will redefine how research itself is conducted, making it more iterative, interconnected, and dynamic.

How Businesses Can Stay Ahead of the Curve

To lead in this rapidly evolving space, research institutions must shift from ad-hoc AI experimentation to long-term AI strategy. This begins with institutional leadership embedding AI into the core research agenda as a lasting capability rather than a series of one-off projects. Success hinges on empowering interdisciplinary teams, where domain experts and AI specialists co-create solutions tailored to specific research questions, rather than adopting generic tools.

Staying competitive also means investing in digital infrastructure that supports scalability and agility. As AI becomes more central to scientific workflows, issues like reproducibility, model transparency, and research ethics will take center stage. Institutions that proactively establish clear guidelines for AI governance and foster a culture of responsible innovation will be better positioned to navigate future disruptions.

Ultimately, those who view AI not just as a technical add-on but as a transformative force in the research process will lead the next generation of discovery. In a world where knowledge cycles are accelerating, the ability to adapt, scale, and innovate continuously will distinguish tomorrow’s scientific leaders.

Conclusion

Key Takeaways

AI is reshaping the research ecosystem by accelerating discovery, increasing accuracy, and enhancing the reproducibility of scientific work, all while delivering measurable returns in efficiency and cost. From AI-powered literature review and data annotation to automated hypothesis testing and lab optimization, artificial intelligence is rapidly becoming an indispensable asset in the modern research toolkit.

Organizations that lay strong data foundations, invest in interdisciplinary collaboration, and align AI initiatives with clearly defined research goals are already seeing transformative benefits. As AI systems mature and take on more adaptive, agentic roles, they will shift from being analytical assistants to co-investigators, driving deeper insight, faster breakthroughs, and more impactful science.

Research institutions stand at a pivotal point. The frameworks, infrastructure, and talent strategies adopted today will determine their ability to lead and innovate in a world where knowledge creation is increasingly shaped by intelligent systems.

Moving Forward: A Strategic Approach to AI in Research

If your organization is ready to harness AI as a strategic asset in your research operations, now is the time to act with intention. Start by identifying high-friction areas where AI can deliver rapid improvements, whether that’s automating data labeling, improving analysis workflows, or accelerating manuscript drafting. Assess your existing data landscape and team capabilities to ensure you’re building on solid ground.

Partnering with experienced AI vendors such as SmartDev, or joining academic-industry alliances, can accelerate adoption while reducing operational risk. These collaborations help align AI investments with your institutional mission and scientific priorities, making implementation smoother and outcomes more reliable. Whether you’re aiming to boost publication output, accelerate experimental cycles, or expand the frontiers of knowledge, a well-defined AI roadmap will keep your initiatives grounded and scalable.

Don’t wait for the research world to change around you. Lead the transformation with SmartDev. Begin exploring how AI can amplify every phase of the research process and ensure your institution remains at the forefront of innovation, insight, and global impact.
