Introduction

DevOps is facing unprecedented complexity: faster release cycles, infrastructure sprawl, and escalating demands for system reliability are pushing teams to their operational limits. AI is quickly becoming a strategic enabler, automating decision-making, streamlining pipelines, and reducing human toil. In this guide, we explore how AI is transforming DevOps by delivering predictive insights, enhanced automation, and real-time system intelligence.

What is AI and Why Does It Matter in DevOps?

Definition of AI and Its Core Technologies

AI refers to machines that simulate aspects of human intelligence: learning from data, making decisions, and improving over time without being explicitly programmed for each task. Key AI technologies relevant to DevOps include machine learning (ML), natural language processing (NLP), and deep neural networks.

In DevOps, AI enables systems to automatically detect anomalies, suggest optimized deployment paths, and generate infrastructure code from context. By embedding intelligence into CI/CD workflows and infrastructure operations, AI helps teams move from reactive troubleshooting to proactive optimization.

AI systems analyze massive volumes of logs, metrics, and user behavior in real time—detecting issues before they affect users and recommending fixes. This intelligence transforms DevOps into a data-driven, predictive discipline that scales with modern application demands.

The Growing Role of AI in Transforming DevOps

AI is transforming DevOps by bringing intelligence and automation to complex, high-stakes workflows. Predictive analytics tools can now detect performance issues before they cause outages, enabling teams to address potential failures with minimal disruption. These capabilities are especially valuable in environments where uptime is mission-critical.

Development teams are increasingly using AI to streamline their CI/CD pipelines. AI-powered assistants can automatically generate build scripts, test cases, and deployment configurations—reducing setup time and minimizing human error. This allows DevOps teams to ship features faster and with greater confidence in code quality.

AI is also reshaping incident response. Intelligent systems aggregate logs and metrics from across distributed systems, identifying root causes and recommending remediations in real time. This dramatically reduces mean time to resolution (MTTR) and enables smaller teams to manage larger, more complex infrastructures.

Key Statistics and Trends in AI Adoption in DevOps

AI adoption in DevOps is gaining momentum as teams seek to improve speed and stability. According to Techstrong Research, 33% of DevOps teams already use AI tools, and another 42% are actively exploring them. These tools are being integrated into workflows for monitoring, testing, deployment, and security automation.

GitLab’s 2024 DevSecOps report shows that over 70% of developers now rely on AI tools daily—for tasks like code generation, vulnerability detection, and pipeline optimization. This surge in usage reflects a shift toward AI as a routine part of the DevOps toolkit, not just an experimental add-on.

Market projections reinforce this trend. The global market for AI in DevOps is expected to grow from $2.9 billion in 2023 to $24.9 billion by 2033, with a CAGR of 24%. This growth is driven by demand for scalable automation, faster incident recovery, and intelligent resource management across cloud-native environments.
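As a quick sanity check on the projection above, the growth rate can be recomputed from the two market sizes. This is a minimal sketch; the function name `cagr` is an illustrative choice, and the only inputs are the figures quoted in the paragraph.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.24 means 24%)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Figures quoted above: $2.9B in 2023 growing to $24.9B by 2033 (10 years).
rate = cagr(2.9, 24.9, 10)
print(f"Implied CAGR: {rate:.1%}")
```

The result rounds to roughly 24%, which is consistent with the quoted CAGR.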

Business Benefits of AI in DevOps

AI is delivering concrete improvements in DevOps by automating routine tasks, accelerating delivery pipelines, and enhancing system reliability. Below are five key benefits that demonstrate how AI creates value across the software development and operations lifecycle.

1. Faster Incident Resolution

AI systems continuously scan logs, metrics, and traces to detect anomalies and surface root causes in real time. This allows teams to identify and respond to issues before they impact end users or critical services. By reducing time spent on diagnosis, teams can accelerate their response and minimize service disruptions.

These capabilities directly lower mean time to resolution (MTTR), improving overall system uptime and reliability. Faster recovery also relieves pressure on on-call engineers and reduces the operational cost of incidents. As reliability improves, so do customer satisfaction and confidence in the platform.

2. Accelerated CI/CD Pipelines

AI tools automatically generate and optimize CI/CD workflows by analyzing codebase structures and historical deployment data. They identify misconfigurations, recommend improvements, and streamline complex setup processes. This reduces manual effort and shortens the cycle from commit to production.

With faster and more consistent pipelines, engineering teams can deploy updates frequently and with confidence. This speed helps organizations stay competitive in fast-moving markets. It also improves feedback loops, enabling faster innovation and delivery of customer value.

3. Intelligent Testing and Quality Assurance

AI analyzes code changes to generate targeted test cases and flag untested paths. It can detect flaky tests and reduce test execution time by prioritizing high-risk areas. These improvements help teams catch bugs earlier and ship higher-quality software.
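One simple signal behind flaky-test detection can be sketched without any model at all: a test that both passes and fails on the same commit is suspect, because the code did not change between runs. The record format and the name `find_flaky_tests` below are assumptions made for illustration, not the behavior of any particular tool.

```python
from collections import defaultdict

def find_flaky_tests(runs):
    """Return test names that both passed and failed on the same commit.

    `runs` is an iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    return sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})

history = [
    ("test_login", "a1b2c3", True),
    ("test_login", "a1b2c3", False),     # same code, different result
    ("test_checkout", "a1b2c3", True),
    ("test_checkout", "d4e5f6", False),  # failed only after a code change
]
flaky = find_flaky_tests(history)
```

Here only `test_login` is flagged; `test_checkout` failed on a different commit, which may be a genuine regression rather than flakiness.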

Better testing coverage means fewer production issues and reduced time spent on bug fixes. It also builds trust in automation and ensures faster delivery without compromising reliability. Over time, AI-driven QA significantly boosts engineering productivity.

4. Scalable Security Integration (DevSecOps)

AI embeds automated security scans and policy enforcement directly into CI/CD pipelines. It flags vulnerabilities, misconfigurations, and compliance violations as code is written and deployed. This continuous approach helps teams shift security left in the development lifecycle.

By identifying risks early, AI reduces the cost and complexity of remediation. Security teams benefit from real-time visibility and prioritized alerts, enabling faster response. This allows organizations to maintain high security standards without slowing delivery.

5. Optimized Resource Usage and Cost Efficiency

AI tracks infrastructure usage patterns and predicts scaling needs based on traffic and historical behavior. It identifies idle or over-provisioned resources and suggests adjustments for optimal performance. This ensures systems remain responsive while minimizing waste.

By automating resource allocation, teams reduce cloud costs without compromising availability. These optimizations are especially valuable in large-scale or hybrid environments. Over time, AI enables smarter budgeting and better infrastructure ROI.

Challenges Facing AI Adoption in DevOps

Despite its clear benefits, adopting AI in DevOps introduces complex implementation hurdles. From fragmented data to trust and security concerns, organizations must overcome several technical and cultural barriers to integrate AI effectively into their workflows. Addressing these challenges early is essential to unlocking AI’s full potential without disrupting stability or team performance.

1. Inconsistent and Siloed Data

DevOps environments often involve fragmented data across tools like monitoring, source control, and ticketing systems. AI models need consistent, structured data to deliver reliable insights. When data is incomplete or siloed, AI outputs become inaccurate or misleading.

Organizations must invest in unified observability platforms and enforce data standards across teams. Without a reliable data foundation, AI tools cannot reach their full potential. This challenge is often underestimated but is critical to long-term success.

2. Lack of Trust in AI Outputs

AI-generated recommendations can be opaque or inconsistent, leading to skepticism among engineers. When models suggest incorrect fixes or generate false alerts, confidence quickly erodes. Teams need to understand how and why AI made a decision.

Explainability features like confidence scores and reasoning traces help validate AI suggestions. Building trust also requires testing AI outputs in controlled environments. Transparency is essential for adoption, especially in high-risk workflows.
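A confidence score becomes useful once it decides what happens to a suggestion. The sketch below routes a recommendation by its score; the `Recommendation` fields, the thresholds, and the route names are all hypothetical and would need tuning against a team's own risk tolerance.

```python
from dataclasses import dataclass

@dataclass
class Recommendation:
    action: str
    confidence: float  # model-reported score in [0, 1]
    reasoning: str     # short trace shown to the engineer

def route(rec: Recommendation,
          staging_threshold: float = 0.9,
          review_threshold: float = 0.6) -> str:
    """Decide how a suggestion is handled, based on its confidence score."""
    if rec.confidence >= staging_threshold:
        return "apply_in_staging"   # still validated in a controlled environment
    if rec.confidence >= review_threshold:
        return "needs_human_review"
    return "discard"

rec = Recommendation("restart payments-api", 0.72, "error rate rose after last deploy")
decision = route(rec)
```

Keeping the reasoning trace alongside the score lets a reviewer see why the suggestion was made, not just how confident the model was.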

3. Skills Gaps in AI and ML Technologies

Most DevOps teams are not trained in machine learning or prompt engineering, making AI integration a steep learning curve. This gap limits the ability to fine-tune models or interpret their outputs effectively. As a result, teams may underuse or misuse available tools.

Addressing this requires targeted upskilling and better documentation from AI vendors. Simplified interfaces and no-code options can help bridge the divide. Successful AI adoption depends on empowering teams, not overwhelming them.

4. Workflow Disruption and Resistance to Change

Integrating AI into DevOps often changes how teams work—automating tasks, suggesting decisions, or redirecting workflows. These shifts can feel disruptive, especially if not clearly explained or aligned with goals. Resistance may grow if teams view AI as a replacement rather than a collaborator.

To mitigate this, change management efforts must highlight how AI reduces manual burden and improves outcomes. Involving engineers in tool selection and pilot phases builds ownership and confidence. Adoption rises when teams see AI as a partner, not a threat.

5. Security and Compliance Risks

AI tools can inadvertently introduce new vulnerabilities through autogenerated scripts or misinterpreted inputs. Without guardrails, these tools may expose sensitive systems or bypass compliance policies. The risks grow as AI handles more critical tasks.

Governance is crucial—teams must monitor AI behavior, audit decisions, and enforce strict access controls. Security testing must extend to AI-generated assets and actions. Failing to manage these risks can outweigh the benefits AI promises to deliver.

Specific Applications of AI in DevOps

1. Incident Detection & Automated Remediation

Incident detection and automated remediation tackle one of DevOps’ biggest pain points: real-time monitoring and rapid issue resolution. Using AI, these systems continuously analyze logs, metrics, and traces to detect anomalies and preemptively respond to system failures. They reduce downtime by triggering predefined actions, such as restarting services or scaling up infrastructure, without human intervention.

The AI behind this use case often includes unsupervised learning and time-series anomaly detection. It uses telemetry data from observability tools, ingests it into models trained to recognize normal patterns, and flags deviations. These tools integrate with incident management platforms like PagerDuty or ServiceNow, allowing automated workflows that escalate or resolve issues based on severity.
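The idea of learning a normal pattern and flagging deviations can be illustrated with a rolling z-score, one of the simplest time-series anomaly detectors. This is a sketch under stated assumptions: the window size, the threshold, and the sample latency series are made up, and production systems typically use more robust models.

```python
from statistics import mean, stdev

def rolling_zscore_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates from the trailing window mean
    by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Flat baseline: any change at all counts as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

latency_ms = [100, 102, 98, 101, 99, 100, 103, 97, 250, 101, 99]
spikes = rolling_zscore_anomalies(latency_ms)
```

In this series only the 250 ms sample (index 8) is flagged. The samples right after the spike are not flagged, because the spike itself inflates the baseline's standard deviation; that is a known weakness of this simple approach.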

IBM adopted Watson AIOps to enhance its internal DevOps operations by automating incident triage and root cause analysis. Leveraging machine learning and NLP, the system filters alerts, correlates events, and recommends actions. As a result, IBM reported a 30% reduction in mean time to resolution (MTTR) and improved overall system reliability.

2. Predictive Analytics & Self-Healing Infrastructure

Predictive analytics and self-healing infrastructure provide DevOps teams with foresight into potential system failures before they occur. These AI systems analyze historical performance data, resource consumption, and user behaviors to forecast issues like memory leaks, server overloads, or latency spikes. They automate proactive measures, allowing environments to self-correct with minimal disruption.

This use case relies on supervised learning models trained on system metrics and logs over time. By recognizing degradation patterns, the models can initiate remediation tasks—such as restarting a failing service or adjusting workload distribution. These tools are often embedded within Kubernetes environments and cloud-native platforms to facilitate seamless orchestration.
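As a much simpler stand-in for the trained models described above, a least-squares trend line can estimate when a steadily growing metric, such as memory used by a leaking process, will hit its limit. The function name, the sample data, and the 1000 MB limit are illustrative assumptions.

```python
def hours_until_limit(samples, limit):
    """Fit a least-squares line to (hour, usage) samples and estimate how many
    hours after the last sample usage reaches `limit`.

    Returns None when there is too little data or usage is flat or falling.
    """
    n = len(samples)
    if n < 2:
        return None
    xs = [x for x, _ in samples]
    ys = [y for _, y in samples]
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    denom = sum((x - x_mean) ** 2 for x in xs)
    if denom == 0:
        return None
    slope = sum((x - x_mean) * (y - y_mean) for x, y in samples) / denom
    if slope <= 0:
        return None
    intercept = y_mean - slope * x_mean
    crossing_hour = (limit - intercept) / slope
    return max(0.0, crossing_hour - xs[-1])

memory_mb = [(0, 500), (1, 520), (2, 540), (3, 560), (4, 580)]
eta = hours_until_limit(memory_mb, limit=1000)
```

With usage growing by 20 MB per hour from 500 MB, the estimate is 21 hours after the last sample, which leaves time to schedule a restart before the limit is reached.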

Navya CloudOps built predictive analytics into its DevOps platform to prevent outages and support self-healing pipelines. The system uses time-series models to detect deviations and trigger container restarts or capacity adjustments automatically. The result has been a noticeable reduction in downtime incidents and smoother CI/CD workflows.

3. AI-Powered Code Review & Security Scanning

AI-powered code review and security scanning play a critical role in integrating DevSecOps into CI/CD pipelines. These tools automatically detect bugs, vulnerabilities, and coding standard violations by analyzing source code during commit and merge stages. They reduce manual code review time and help enforce secure coding practices at scale.

These platforms use natural language processing and static analysis algorithms, along with machine learning models trained on vast codebases. They detect common issues like SQL injection, hardcoded credentials, or performance bottlenecks. Integrating with repositories such as GitHub or Bitbucket, they provide real-time feedback and halt non-compliant builds.
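To make the kinds of findings concrete, here is a deliberately small rule-based scan for two of the issues named above. It is not how ML-assisted scanners work internally; the two regular expressions and the rule names are illustrative assumptions, and real tools rely on far larger rule sets and data-flow analysis.

```python
import re

# Illustrative patterns only; they will miss many cases and can produce false positives.
RULES = {
    "hardcoded_credential": re.compile(
        r"""(password|passwd|secret|api_key)\s*=\s*["'][^"']+["']""", re.IGNORECASE
    ),
    "sql_string_concat": re.compile(
        r"""execute\(\s*["'].*["']\s*\+""", re.IGNORECASE
    ),
}

def scan_source(source: str):
    """Return (line_number, rule_name) pairs for every rule that matches a line."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for rule_name, pattern in RULES.items():
            if pattern.search(line):
                findings.append((line_no, rule_name))
    return findings

sample = '''db_password = "hunter2"
cursor.execute("SELECT * FROM users WHERE id = " + user_id)
timeout = 30
'''
findings = scan_source(sample)
```

A CI step could fail the build whenever `findings` is non-empty, which is the "halt non-compliant builds" behavior in its simplest form.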

SonarQube and GitHub’s CodeQL have become popular in many DevOps pipelines for automatic vulnerability scanning. At companies like BMW and eBay, these tools identify security flaws early in the development cycle. Teams have reported faster delivery cycles and a significant drop in post-release issues due to early detection.

4. CI/CD Pipeline Optimization

Optimizing CI/CD pipelines with AI increases the efficiency and reliability of build and deployment workflows. AI models analyze pipeline execution history to detect bottlenecks, flaky tests, and misconfigurations that delay deployments. They also help prioritize test execution and optimize resource utilization during builds.

This approach leverages reinforcement learning and regression models to predict optimal build sequences and identify recurring failures. Integrated into DevOps tools like Jenkins, GitLab CI, or Azure Pipelines, the AI learns from past executions to suggest configuration tweaks and faster workflows. It can even auto-tune test suites based on coverage data and success rates.
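Test prioritization can be approximated with a plain heuristic before any learning is involved: run first the tests most likely to fail per second of runtime. The scoring formula and the sample history below are assumptions for illustration, not the method used by any specific CI tool.

```python
def prioritize_tests(stats):
    """Order tests so those most likely to fail per second of runtime run first.

    `stats` maps test name -> (runs, failures, avg_seconds).
    """
    def score(item):
        _, (runs, failures, avg_seconds) = item
        failure_rate = failures / runs if runs else 0.0
        return failure_rate / max(avg_seconds, 0.001)  # avoid division by zero

    return [name for name, _ in sorted(stats.items(), key=score, reverse=True)]

history = {
    "test_payment_flow": (200, 30, 12.0),  # fails often, but slow
    "test_login": (200, 10, 1.0),          # fails less often, but fast
    "test_static_pages": (200, 0, 0.5),    # has never failed
}
order = prioritize_tests(history)
```

Under this scoring, `test_login` runs first because it surfaces failures fastest per second spent, and the never-failing test runs last.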

LinkedIn used AI to streamline its CI/CD process, cutting build times by analyzing test flakiness and dependency resolution. By reprioritizing tests and isolating slow components, deployment speed improved by 25%. This has enabled more frequent and stable releases for its massive production environment.

5. Observability & Root-Cause Analysis

AI enhances observability by connecting logs, traces, and metrics to reveal the root causes of application issues. These systems use pattern recognition and causal inference to trace fault origins across microservices. This reduces manual debugging time and improves the speed and accuracy of incident response.

Machine learning models—often built on graph analytics and time-series analysis—process data streams from monitoring tools like Prometheus, Grafana, or Elastic Stack. By mapping service interactions and anomaly timelines, AI can highlight the most likely source of a disruption. Some tools also offer visual root-cause maps to aid operator decision-making.
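Mapping service interactions to find the likely source can be sketched on a small dependency graph. The rule used here is a simplification: an alerting service whose own dependencies are all healthy is treated as a probable origin. The service names and the function `likely_root_causes` are hypothetical.

```python
def likely_root_causes(dependencies, anomalous):
    """Return anomalous services none of whose dependencies are anomalous.

    `dependencies` maps a service to the list of services it calls.
    """
    roots = []
    for service in sorted(anomalous):
        called = dependencies.get(service, [])
        if not any(dep in anomalous for dep in called):
            roots.append(service)
    return roots

deps = {
    "frontend": ["checkout", "catalog"],
    "checkout": ["payments", "inventory"],
    "payments": ["postgres"],
    "catalog": [],
    "inventory": [],
    "postgres": [],
}
alerts = {"frontend", "checkout", "payments", "postgres"}
roots = likely_root_causes(deps, alerts)
```

Four services are alerting, but only `postgres` has no alerting dependency, so it is surfaced as the place to look first. Real tools also weigh anomaly timing and the strength of each signal.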

Dynatrace’s Davis AI engine exemplifies this use case by performing automatic root-cause analysis across distributed environments. The platform detected over 90% of performance anomalies before customers reported them. Its implementation has cut investigation times by more than 60% in enterprises using microservices and cloud-native stacks.

6. Cloud Cost Optimization

AI in DevOps enables smarter cloud cost management by analyzing infrastructure usage and recommending adjustments. These solutions detect underutilized resources, suggest better instance types, and automatically shut down idle workloads. The goal is to optimize cloud spending without sacrificing performance or availability.

This use case combines usage telemetry, billing data, and predictive analytics to suggest savings opportunities. Some tools use clustering algorithms to identify usage patterns and outliers, and integrate with platforms like AWS Cost Explorer or Google Cloud Billing. Optimization recommendations can be executed manually or set to auto-deploy in real time.
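The simplest form of this analysis is a threshold check over usage telemetry, shown below in place of the clustering the paragraph mentions. The 20% CPU threshold, the seven-day minimum, and the instance records are illustrative assumptions.

```python
def underutilized(instances, cpu_threshold=20.0, min_days=7):
    """Return names of instances whose daily average CPU never reached
    `cpu_threshold` percent over at least `min_days` of history."""
    flagged = []
    for inst in instances:
        cpu = inst["daily_avg_cpu"]
        if len(cpu) >= min_days and max(cpu) < cpu_threshold:
            flagged.append(inst["name"])
    return flagged

fleet = [
    {"name": "api-1", "daily_avg_cpu": [55, 60, 48, 70, 65, 58, 62]},
    {"name": "batch-2", "daily_avg_cpu": [4, 6, 5, 3, 7, 5, 4]},
    {"name": "new-3", "daily_avg_cpu": [2, 3]},  # too little history to judge
]
candidates = underutilized(fleet)
```

Only `batch-2` is flagged. Requiring a minimum amount of history keeps newly launched instances from being downsized before their real load is known.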

Deloitte’s internal DevOps teams leveraged AI tools to reduce cloud expenditures across multiple business units. By automatically rightsizing VMs and consolidating storage, they achieved a 31% reduction in total cloud costs. These savings were reinvested into innovation and digital transformation initiatives across departments.

Examples of AI in DevOps

Beyond specific applications, it is worth examining how leading organizations have used AI to drive measurable business outcomes in DevOps. These case studies show AI’s impact on software delivery, operational resilience, and cost optimization, and offer practical lessons for engineering leaders.

Real-World Case Studies

1. IBM: Automated Incident Resolution with Watson AIOps

IBM, a global leader in enterprise technology, aimed to improve the efficiency of its DevOps operations by reducing incident response time and minimizing alert fatigue. Traditional monitoring tools generated high volumes of unprioritized alerts, which overwhelmed support teams and slowed resolution during outages. IBM needed a smarter, context-aware solution to reduce noise and speed up triage.

To address this, IBM deployed Watson AIOps, a machine learning-driven platform that ingests logs, metrics, and topology data to detect anomalies and surface root causes. The system uses NLP to correlate events and provide remediation suggestions in real time. This resulted in a 30% reduction in mean time to resolution (MTTR) and significantly improved system uptime across IBM’s global infrastructure.

2. JPMorgan Chase: AI-Powered Coding Assistants

JPMorgan Chase sought to enhance developer productivity across its engineering teams managing thousands of applications and services. The manual development process—especially for boilerplate code and repetitive tasks—was time-consuming and constrained innovation. The firm needed a scalable way to augment its developer workforce without compromising quality or speed.

The bank introduced AI coding assistants, powered by generative AI models such as GitHub Copilot and internal LLMs, to support tasks like code generation, unit testing, and documentation. These tools were integrated into development environments to offer real-time code suggestions and automated QA support. As a result, JPMorgan saw a 20% boost in developer efficiency and identified over $1 billion in potential productivity gains.

3. Shell: Predictive DevOps in Industrial Systems

Shell, a global energy company, faced challenges in maintaining high availability and performance of its refinery systems managed via complex DevOps pipelines. Traditional monitoring was reactive and failed to capture early signals of system degradation, leading to unexpected downtime and high maintenance costs. Shell sought a proactive, AI-driven solution to manage its critical infrastructure more intelligently.

By partnering with Applied Computing, Shell implemented predictive DevOps models that monitored telemetry and process data in real time. The AI system predicted component wear and suggested preemptive interventions to avoid system failures. This approach led to a 10% reduction in energy consumption and minimized unplanned outages, demonstrating the operational impact of AI in industrial DevOps environments.

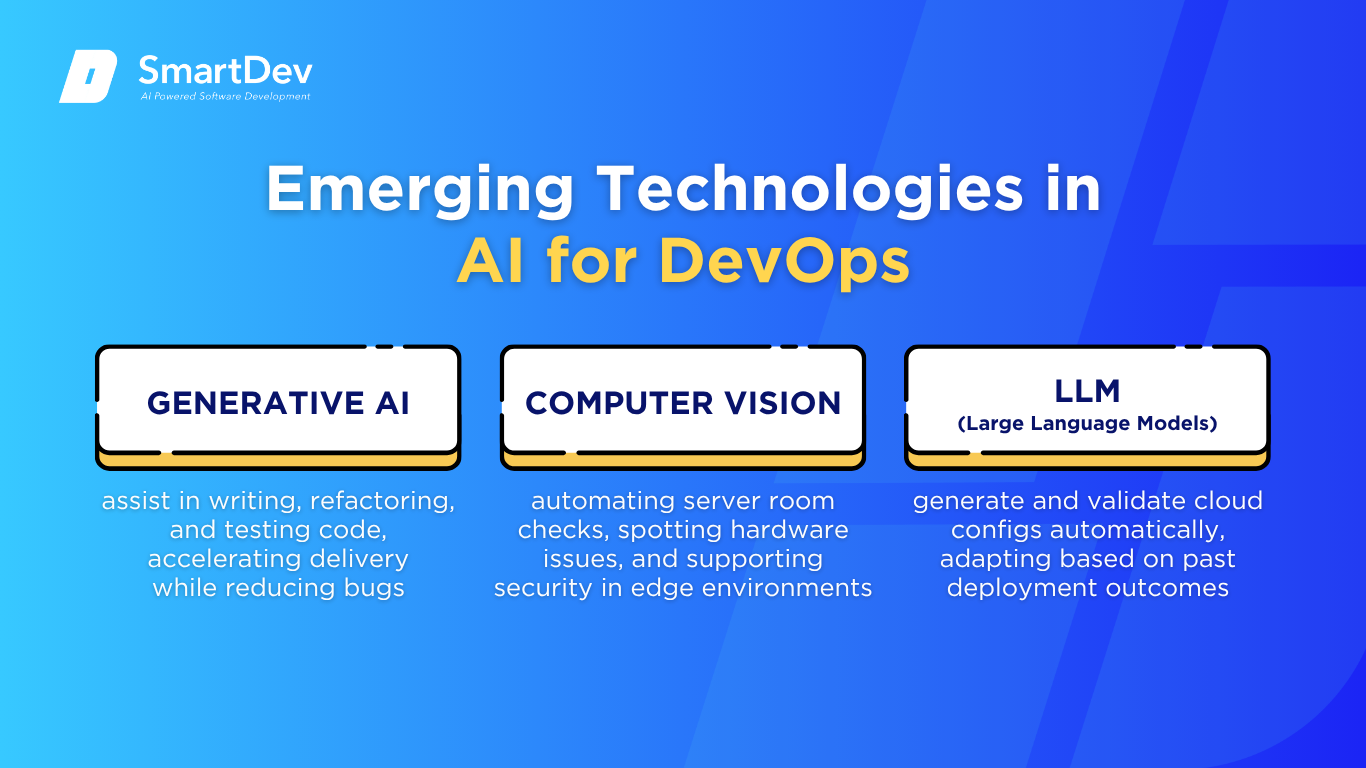

Innovative AI Solutions

Beyond these case studies, several emerging technologies are shaping the future of AI in DevOps. These innovations are unlocking new possibilities in automation, developer experience, and continuous intelligence across the software delivery lifecycle.

One major trend is the rise of AIOps platforms that unify observability, automation, and decision-making. These platforms ingest telemetry data across systems and apply causal inference to detect, diagnose, and resolve incidents autonomously. Tools like Moogsoft and Dynatrace’s Davis AI are evolving to act as proactive agents, offering real-time guidance and reducing alert fatigue in complex environments.

Platform engineering is emerging as a strategic framework powered by AI to enhance internal developer platforms (IDPs). AI helps auto-generate service templates, analyze developer friction points, and ensure compliance with governance policies. This shift supports a self-service model that empowers teams, reduces ticket overhead, and ensures secure, scalable delivery pipelines.

AI-Driven Innovations Transforming DevOps

Emerging Technologies in AI for DevOps

AI is reshaping DevOps by automating repetitive tasks and making systems more adaptive. Generative AI tools like GitHub Copilot now assist in writing, refactoring, and testing code, accelerating delivery while reducing bugs. These models learn from your project context to suggest smarter solutions, allowing engineers to focus on innovation rather than boilerplate.

New platforms powered by large language models are also transforming infrastructure management. They generate and validate cloud configs like Terraform scripts automatically, adapting based on past deployment outcomes. This means fewer errors, faster rollouts, and smarter orchestration across environments.

While not yet widespread, computer vision is emerging in physical infrastructure monitoring—automating server room checks, spotting hardware issues, and supporting security in edge environments. As IT stacks blend digital and physical systems, visual AI will play a bigger role in system reliability and compliance.

AI’s Role in Sustainability Efforts

AI is driving sustainability in DevOps by optimizing resource usage and reducing waste. Through predictive analytics, AIOps platforms anticipate system demand and auto-scale infrastructure accordingly—minimizing idle servers and energy consumption. This not only cuts costs but also lowers carbon footprints in cloud-native environments.
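Once a demand forecast exists, turning it into a capacity decision is simple arithmetic. The sketch below assumes a forecast in requests per second and a known per-replica capacity; the 20% headroom and all names are hypothetical.

```python
import math

def replicas_needed(forecast_rps, rps_per_replica, headroom=0.2, min_replicas=1):
    """Replicas required to serve the forecast load plus a safety margin."""
    required = forecast_rps * (1 + headroom) / rps_per_replica
    return max(min_replicas, math.ceil(required))

# Forecast of 900 requests/s, each replica handling about 100 requests/s.
peak = replicas_needed(900, 100)
overnight = replicas_needed(40, 100)
```

Scaling down to the overnight figure instead of holding peak capacity around the clock is where the idle-server and energy savings come from.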

Smart automation also improves release efficiency. By detecting potential failures before they happen, AI reduces rollbacks and redundant deployments—saving compute cycles and developer time. At scale, these efficiencies translate into significantly leaner operations.

In data centers and hybrid IT environments, AI-powered energy management tools analyze usage patterns and recommend adjustments in real time. From managing cooling systems to balancing workloads, these systems help DevOps teams run greener without sacrificing performance—aligning operational goals with sustainability targets.

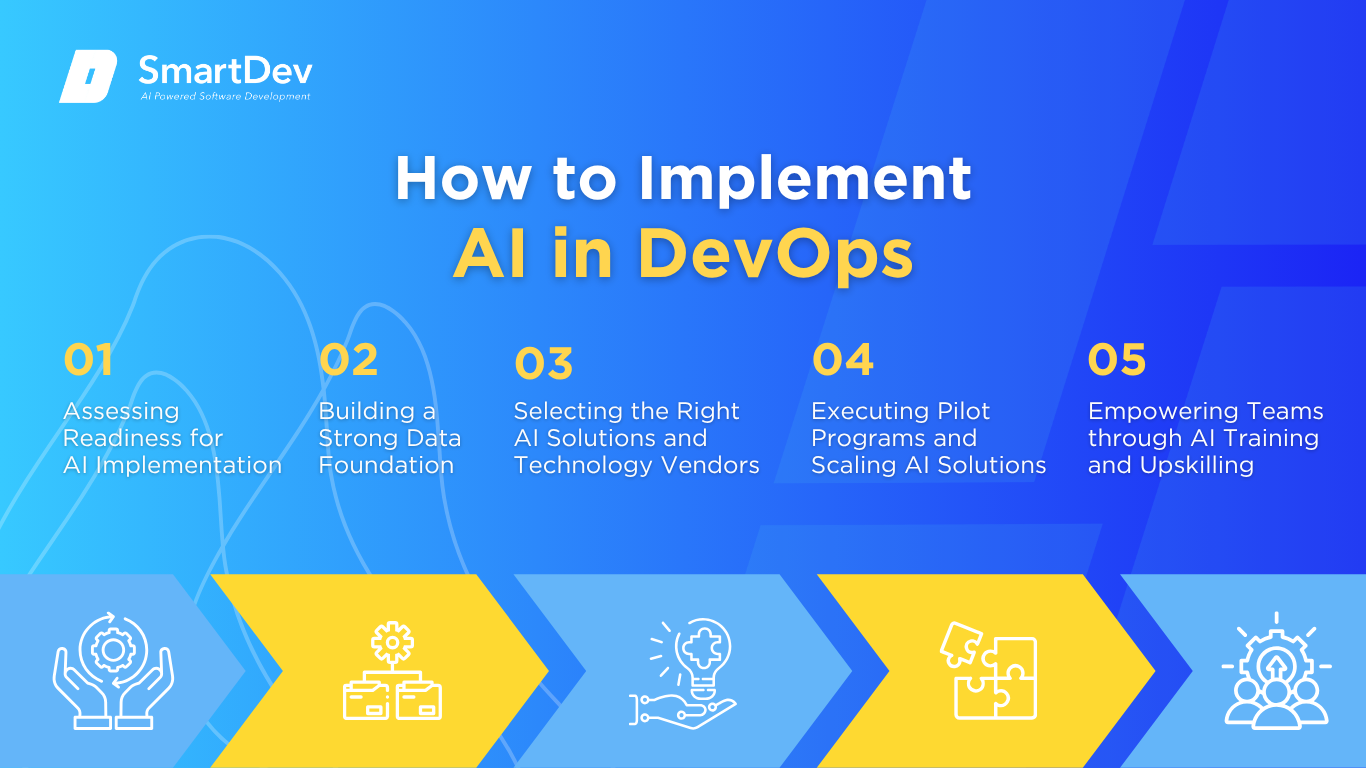

How to Implement AI in DevOps

Step 1: Assessing Readiness for AI Adoption

Before integrating AI into DevOps, businesses must evaluate their current DevOps maturity—CI/CD pipelines, incident response, infrastructure management—and identify areas that suffer from manual toil or slow feedback loops. This readiness check helps ensure AI investments target high-impact use cases like release automation or predictive monitoring.

Equally important is reviewing the existing tech stack and data infrastructure. Successful AI in DevOps relies on clean logs, consistent monitoring data, and cloud platforms that support AI model integration. Companies should verify they have the necessary telemetry, storage, and compute power to scale AI capabilities reliably.

Step 2: Building a Strong Data Foundation

AI-driven DevOps needs consistent, high-quality operational data—system logs, deployment metrics, performance indicators, and incident reports. This data must be collected from across the DevOps toolchain, from code commits to production environments, to support accurate AI decision-making.

Maintaining data hygiene is critical. Outdated logs or incomplete event traces can lead to flawed AI insights. Implementing data validation, tagging standards, and regular audits ensures your AI tools detect real issues, not noise, and drive meaningful automation at scale.
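A validation step of this kind can be as small as a schema check applied before records reach any model. The required fields and allowed levels below are an assumed logging standard, chosen only to illustrate the idea.

```python
REQUIRED_FIELDS = {"timestamp", "service", "level", "message"}
ALLOWED_LEVELS = {"DEBUG", "INFO", "WARN", "ERROR"}

def validate_log_record(record: dict) -> list:
    """Return a list of problems found in one log record (empty if valid)."""
    problems = []
    missing = REQUIRED_FIELDS - set(record)
    if missing:
        problems.append("missing fields: " + ", ".join(sorted(missing)))
    level = record.get("level")
    if level is not None and level not in ALLOWED_LEVELS:
        problems.append(f"unknown level: {level}")
    return problems

good = {"timestamp": "2024-05-01T10:00:00Z", "service": "api",
        "level": "INFO", "message": "request served"}
bad = {"service": "api", "level": "TRACE"}
good_problems = validate_log_record(good)
bad_problems = validate_log_record(bad)
```

Tracking the share of rejected records over time gives a rough data-quality metric that a regular audit can review.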

Step 3: Choosing the Right Tools and Vendors

Choosing AI tools tailored for DevOps is essential. Look for platforms offering AIOps capabilities such as anomaly detection, root cause analysis, and intelligent automation that integrate with existing tools like Kubernetes, Jenkins, and Git. Compatibility with observability platforms like Prometheus or Datadog is also vital.

Working with experienced vendors who understand DevOps pipelines can accelerate implementation. Whether adopting AI incident managers or ML-driven deployment tools, the right partner helps fine-tune models, ensure security compliance, and scale with confidence.

Step 4: Pilot Testing and Scaling Up

Start small. Pilot AI in a focused area like alert fatigue reduction or automated test case generation and monitor its impact on team productivity and service reliability. Use this phase to validate performance, collect user feedback, and refine integrations.

Once pilot goals are met, expand gradually across the toolchain. Roll out AI to additional teams or workflows while ensuring consistent monitoring and governance. This phased approach minimizes risk and builds organizational confidence in AI as a core DevOps enabler.

Step 5: Training Teams for Successful Implementation

People are the key to successful AI adoption. Upskill DevOps engineers, SREs, and platform teams on how AI tools work, where to apply them, and how to interpret results. Training should be continuous and aligned with evolving toolsets and roles.

Encourage collaboration between developers, operations staff, and data engineers to ensure AI tools solve real DevOps pain points. Create feedback loops where teams refine AI recommendations based on day-to-day realities—turning AI from a black box into a trusted co-pilot.

Measuring the ROI of AI in DevOps

Key Metrics to Track Success

To evaluate the ROI of AI in DevOps, companies should track critical DevOps performance indicators that directly reflect efficiency and reliability. Key metrics include reduced mean time to detection (MTTD) and mean time to resolution (MTTR), increased deployment frequency, and fewer failed releases. AI-powered anomaly detection and predictive alerting significantly reduce downtime, enabling faster incident triage and more stable systems.
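MTTD and MTTR can be computed directly from incident timestamps, which makes before-and-after comparisons straightforward. In this sketch both metrics are measured from the moment the incident started; teams that measure MTTR from detection instead would adjust the subtraction. The field names and sample incidents are illustrative.

```python
from datetime import datetime

def mean_minutes(deltas):
    """Average a list of timedeltas and return the result in minutes."""
    return sum(d.total_seconds() for d in deltas) / len(deltas) / 60

def incident_metrics(incidents):
    """Return (MTTD, MTTR) in minutes, both measured from incident start."""
    mttd = mean_minutes([i["detected"] - i["started"] for i in incidents])
    mttr = mean_minutes([i["resolved"] - i["started"] for i in incidents])
    return mttd, mttr

incidents = [
    {"started": datetime(2024, 5, 1, 10, 0),
     "detected": datetime(2024, 5, 1, 10, 5),
     "resolved": datetime(2024, 5, 1, 10, 45)},
    {"started": datetime(2024, 5, 2, 14, 0),
     "detected": datetime(2024, 5, 2, 14, 15),
     "resolved": datetime(2024, 5, 2, 15, 15)},
]
mttd, mttr = incident_metrics(incidents)
```

For these two incidents the averages are 10 minutes to detect and 60 minutes to resolve. Running the same calculation on incidents before and after an AI rollout gives a concrete baseline for the comparison.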

Cost savings also play a major role. Automating repetitive tasks like code testing, configuration validation, or alert management reduces manual labor and accelerates delivery cycles. Organizations can quantify ROI by comparing pre- and post-AI productivity gains, such as hours saved per sprint or the reduction in service disruptions. Improvements in developer experience and reduced burnout—often intangible—are increasingly recognized as long-term ROI drivers.

Case Studies Demonstrating ROI

A global e-commerce company integrated AI-driven testing and release automation into its DevOps pipeline. Within four months, they reduced deployment errors by 35% and increased their release velocity by 50%. Developers reported fewer manual rework cycles, freeing them to focus on feature innovation rather than firefighting.

Another enterprise used AIOps to consolidate monitoring tools and apply AI to identify root causes across infrastructure layers. This led to 60% faster resolution of critical incidents and saved the operations team hundreds of hours in manual log analysis. By reducing service downtime and operational load, the company significantly lowered its total cost of ownership.

Common Pitfalls and How to Avoid Them

One major challenge in realizing AI ROI in DevOps is poor data readiness. Inconsistent logs, fragmented observability, or missing metadata can lead to unreliable AI predictions. To avoid this, teams must establish strong data pipelines and enforce standards for logging, monitoring, and tagging—long before AI implementation begins.

Another common issue is the mismatch between AI capabilities and DevOps needs. Tools that overpromise or don’t integrate seamlessly into existing workflows can stall productivity. Organizations must involve engineering teams early in the vendor evaluation process and prioritize tools that enhance—not replace—their day-to-day processes. Clear expectations, robust training, and a phased rollout help minimize resistance and maximize value.

Future Trends of AI in DevOps

Predictions for the Next Decade

The next decade will push AI in DevOps far beyond automation toward self-healing systems and autonomous orchestration. Large language models will evolve into intelligent agents capable of understanding context, adjusting configurations on the fly, and resolving incidents with minimal human input. AI will move from being a passive advisor to an active collaborator, automating full CI/CD pipelines, optimizing infrastructure based on usage patterns, and orchestrating multi-cloud environments with human-like intuition.

Agentic AI systems will collaborate across development, security, and operations, making decisions in real-time, learning from outcomes, and evolving deployment strategies autonomously. Predictive analytics will become proactive, not just flagging anomalies but fixing them before they surface. AI will also handle policy enforcement, cost control, and compliance checks—turning DevOps into an intelligent, continuous flow of innovation.

How Businesses Can Stay Ahead of the Curve

To stay ahead, companies must start investing in AI literacy across engineering and operations teams. Teams should be trained not only to use AI tools but to co-create with them—writing prompts, debugging outputs, and integrating models into workflows. Organizations should also unify DevOps and MLOps pipelines to avoid silos that slow adoption and limit model visibility.

Early partnerships with AI-driven platforms and participation in open-source projects or tech communities will give businesses a front-row seat to emerging innovations. Developing internal frameworks for ethical AI use, monitoring, and fail-safes ensures these powerful tools remain trustworthy as they scale.

Conclusion

Key Takeaways

AI is revolutionizing DevOps—accelerating code delivery, boosting infrastructure resilience, and transforming operations from reactive to predictive. From generative coding and automated testing to intelligent monitoring and cloud orchestration, the value of AI is measurable and growing. Companies that align AI tools with real-world DevOps pain points gain faster time-to-market, reduced operational overhead, and improved developer productivity.

As AI technologies like agentic systems and orchestration frameworks mature, the future of DevOps will be defined by smart collaboration between machines and humans. Those who lead in adoption today will define the standards of agility and reliability tomorrow.

Moving Forward: A Strategic Approach to AI in DevOps

If your organization is ready to unlock the full potential of AI in DevOps, the time to act is now. Begin with a maturity assessment and identify the high-friction points in your software delivery lifecycle where AI can create immediate value. Then, pilot AI tools that integrate smoothly with your existing DevOps toolchain and culture.

By partnering with SmartDev, you can reduce the complexity of implementation and fast-track your AI strategy with proven, enterprise-grade solutions. Whether your goal is to optimize infrastructure, accelerate deployments, or reduce outages, our experts will help you harness AI responsibly and effectively.

Get in touch to explore how SmartDev can help your teams build a smarter, faster, and more resilient DevOps pipeline—backed by AI. Let’s define the future of software delivery together.

References:

- The Great Merge: Why AI-DevOps Hybrids Are Outpacing Traditional Tech Teams | Andela
- 3 Surprising Findings from Our 2024 Global DevSecOps Survey | GitLab
- CodeQL: Performance and Coverage Improvements in Recent Releases | GitHub Blog
- DevOps at Scale: Deloitte’s Point of View | Deloitte Netherlands
- Shell Uses Azure and Databricks for AI in Mining and Oil | Microsoft