Introduction

Artificial intelligence continues to attract massive investment across industries, yet failure rates remain high. Multiple studies estimate that up to 70–80% of AI projects never make it into production or fail to deliver expected value. The core reason is not lack of ambition or talent, but persistent AI execution issues.

Organizations often rush from idea to implementation without validating feasibility. Business leaders expect rapid ROI, while technical teams struggle with data limitations, unclear objectives, and unrealistic timelines. Without structured validation, AI initiatives accumulate risk early and collapse late.

AI Proofs of Concept, or PoCs, exist to break this cycle. When executed properly, they reduce uncertainty, expose constraints early, and provide evidence for informed decision making. More importantly, they de-risk both technical and business feasibility before large-scale investment occurs.

What an AI Proof of Concept really is

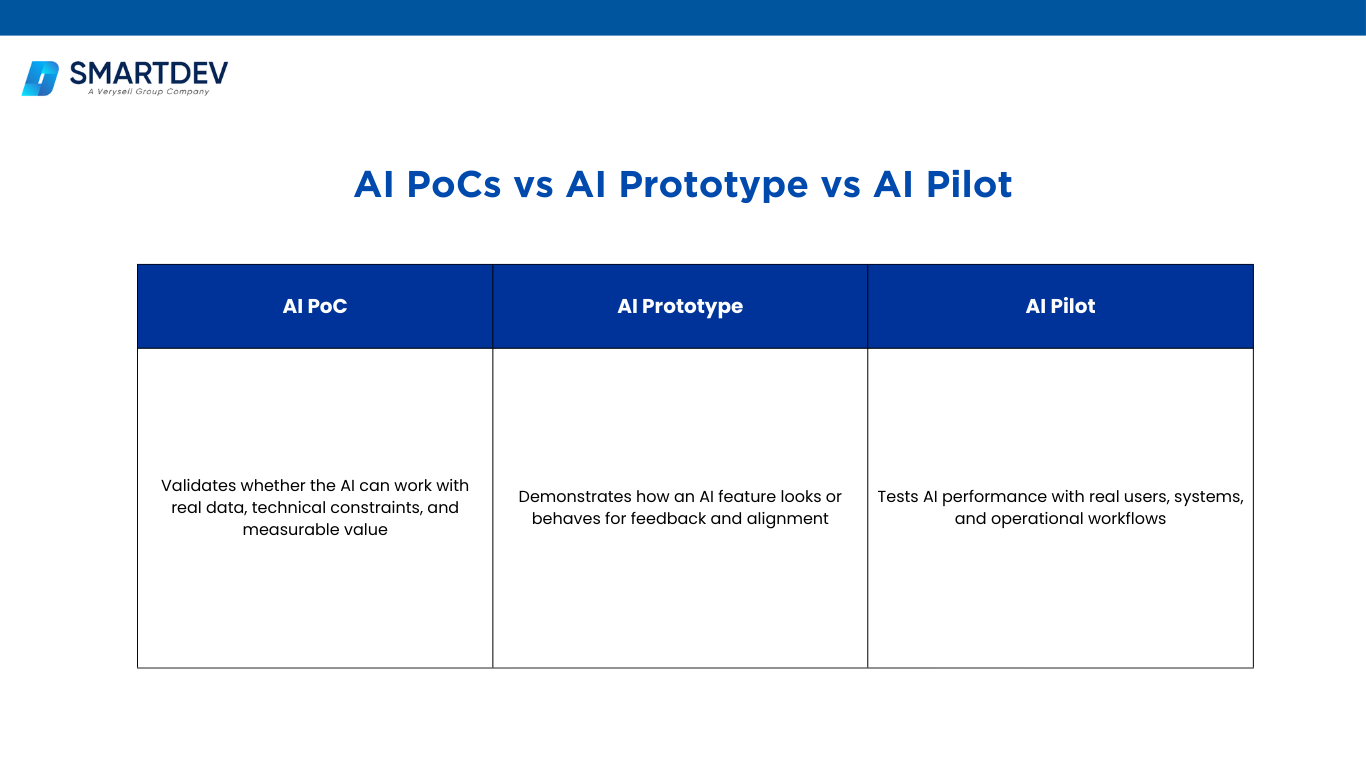

PoC vs prototype vs pilot

An AI Proof of Concept is a small, controlled experiment with a very specific goal. It answers one core question: can this AI approach work with our data, within our technical constraints, and deliver measurable value?

This is fundamentally different from a prototype or a pilot.

A prototype is primarily a demonstration. It shows how an AI-powered feature might look or behave, often to support stakeholder buy-in or gather user feedback. A pilot comes later and focuses on limited real-world deployment. It tests how the solution performs with real users, live systems, and operational processes.

A PoC sits at the earliest stage and focuses strictly on feasibility. It tests whether key assumptions are valid before significant resources are committed. When this step is skipped, AI execution issues emerge quickly. SmartDev highlights that organizations moving directly from idea to pilot often waste 30 to 50% of development effort due to rework caused by incorrect assumptions around data availability, model accuracy, or integration complexity. Industry research supports this, showing that nearly 70% of AI projects fail to reach production largely because feasibility is never properly validated upfront.

The role of PoCs in modern AI delivery

AI behaves differently from traditional software. Its performance is probabilistic, not deterministic. Even well-designed models can behave unpredictably when exposed to real data. Because of this, experimentation is not optional.

PoCs provide a low-risk environment to test model performance, assess data quality, evaluate infrastructure limits, and confirm business relevance. They help teams understand what works, what does not, and why.

Just as importantly, PoCs improve communication. Instead of debating assumptions, stakeholders review evidence. SmartDev reports that teams using structured PoCs reach go or no-go decisions up to 40 percent faster, significantly improving AI project planning and reducing strategic uncertainty.

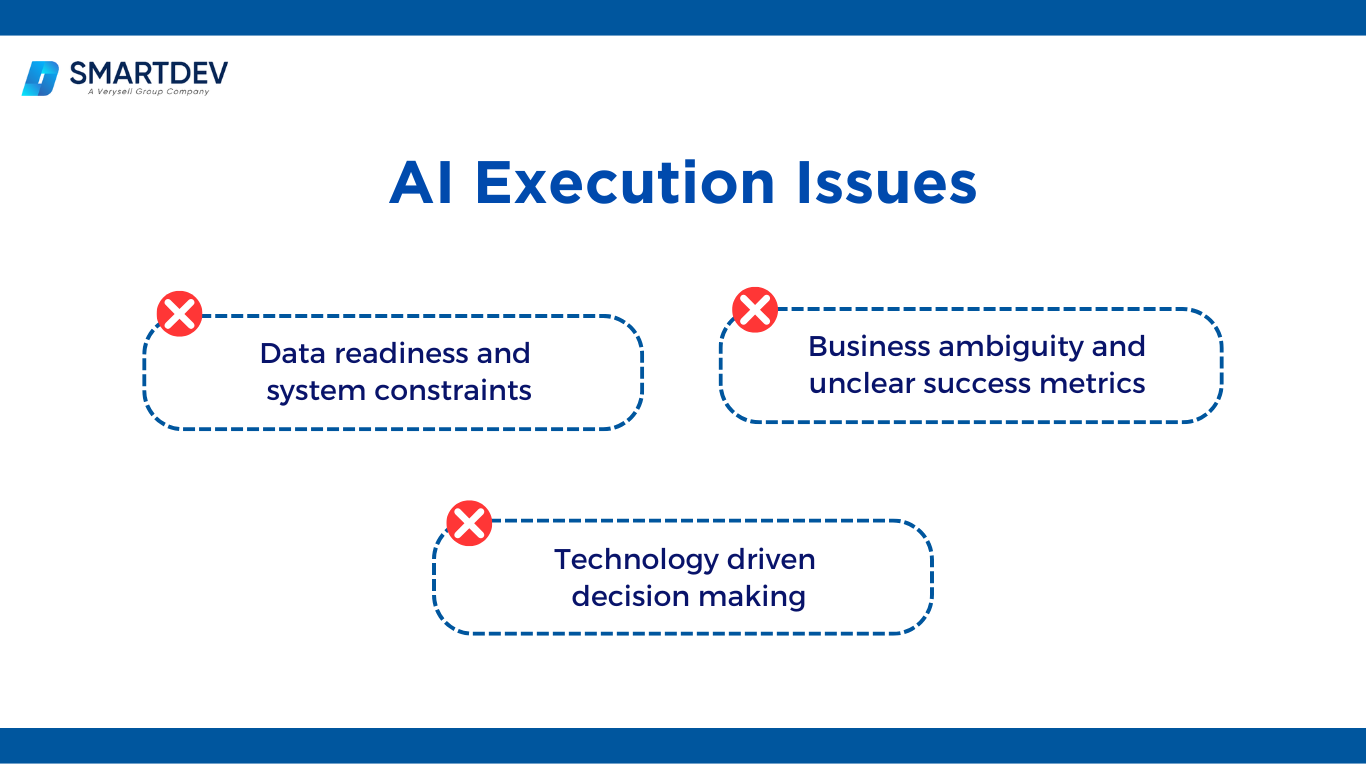

Understanding AI execution issues

1. Data readiness and system constraints

The most common execution failures in AI initiatives start with data. In many enterprises, data is fragmented across departments, inconsistent in structure, or restricted by governance and security policies. Models trained on clean, idealized datasets often perform well in isolation but fail when exposed to real production environments. These gaps are rarely visible until development is already underway, making correction expensive and time-consuming.

This creates serious challenges for AI project risk management. SmartDev notes that many failed AI initiatives underestimate the effort required to prepare data pipelines, integrate legacy systems, and maintain data quality at scale. Common data-related risks include:

- Incomplete, biased, or outdated datasets

- Fragile or manual data pipelines

- Hidden integration dependencies

A PoC acts as an early feasibility study. It validates technical feasibility while risk mitigation is still realistic and affordable.
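As a minimal sketch of what an early data check might look like, the snippet below reports missing values and stale records in a sample of rows. The field names (`customer_id`, `amount`, `updated_at`), the 90-day freshness window, and the function name are illustrative assumptions, not part of any SmartDev tooling.

```python
from datetime import datetime, timedelta


def data_readiness_report(rows, required_fields, max_age_days, now):
    """Summarize missing values and stale records in a sample of rows.

    rows: list of dicts, each with an 'updated_at' datetime (assumed field name).
    """
    total = len(rows)
    if total == 0:
        raise ValueError("no rows to assess")

    # Share of rows where each required field is absent or None.
    missing_rate = {
        field: sum(1 for r in rows if r.get(field) is None) / total
        for field in required_fields
    }

    # Share of rows last updated before the freshness cutoff.
    cutoff = now - timedelta(days=max_age_days)
    stale_rate = sum(1 for r in rows if r["updated_at"] < cutoff) / total

    return {"rows": total, "missing_rate": missing_rate, "stale_rate": stale_rate}


# Hypothetical sample data for illustration only.
sample = [
    {"customer_id": 1, "amount": 10.0, "updated_at": datetime(2024, 5, 1)},
    {"customer_id": 2, "amount": None, "updated_at": datetime(2023, 1, 1)},
    {"customer_id": 3, "amount": 7.5, "updated_at": datetime(2024, 4, 20)},
    {"customer_id": 4, "amount": 3.0, "updated_at": datetime(2024, 5, 10)},
]
report = data_readiness_report(
    sample, ["customer_id", "amount"], max_age_days=90, now=datetime(2024, 6, 1)
)
print(report)
```

Running a check like this against a sample of real production data, rather than a cleaned extract, is what surfaces incomplete or outdated datasets while they are still cheap to fix.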

2. Business ambiguity and unclear success metrics

Another major source of failure is business ambiguity. Teams are often instructed to “apply AI” without clearly defined outcomes. Accuracy targets, acceptable costs, timelines, and business KPIs are vague or missing altogether. As a result, teams optimize models without knowing whether improvements translate into real value.

Without clear metrics, projects drift and stakeholder confidence erodes. A PoC enforces clarity by requiring explicit success criteria before development begins. Common PoC evaluation metrics include:

- Minimum acceptable model accuracy or error rates

- Cost per inference or operational savings

- Impact on revenue, efficiency, or customer experience

This structured approach enables early validation of business feasibility and improves overall project viability.
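One way to make success criteria explicit is to write them down as thresholds before any model is built and then check measured results against them. The sketch below assumes three criteria that mirror the metrics listed above; the class name, field names, and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    min_accuracy: float            # lowest acceptable accuracy on held-out data
    max_cost_per_inference: float  # highest acceptable cost per prediction (USD)
    min_monthly_savings: float     # lowest operational saving worth pursuing (USD)


def check_criteria(measured, criteria):
    """Return a pass/fail flag per criterion for measured PoC results."""
    return {
        "accuracy": measured["accuracy"] >= criteria.min_accuracy,
        "cost_per_inference": (
            measured["cost_per_inference"] <= criteria.max_cost_per_inference
        ),
        "monthly_savings": (
            measured["monthly_savings"] >= criteria.min_monthly_savings
        ),
    }


# Illustrative thresholds agreed before development, and example measurements.
criteria = SuccessCriteria(
    min_accuracy=0.90, max_cost_per_inference=0.002, min_monthly_savings=5000.0
)
measured = {"accuracy": 0.93, "cost_per_inference": 0.0035, "monthly_savings": 6200.0}
results = check_criteria(measured, criteria)
print(results)
```

In this example the model is accurate enough and the savings are sufficient, but the cost per inference exceeds the agreed ceiling, which is exactly the kind of gap a PoC should expose before scaling.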

3. Technology-driven decision making

A third execution issue arises when AI adoption is driven primarily by technology trends rather than real business needs. Many organizations pursue AI because competitors are doing so or because leadership feels pressure to innovate. This results in solutions being built without a clearly defined problem, leading to weak justification and poor long-term outcomes.

These initiatives often struggle to scale because value was never proven. PoCs reverse this pattern by anchoring development in a business hypothesis. They test whether AI is actually the right tool and whether it meaningfully improves outcomes. By linking experimentation to measurable impact, PoCs strengthen AI project risk management, validate both technical feasibility and business feasibility, and prevent resources from being spent on low-viability initiatives.

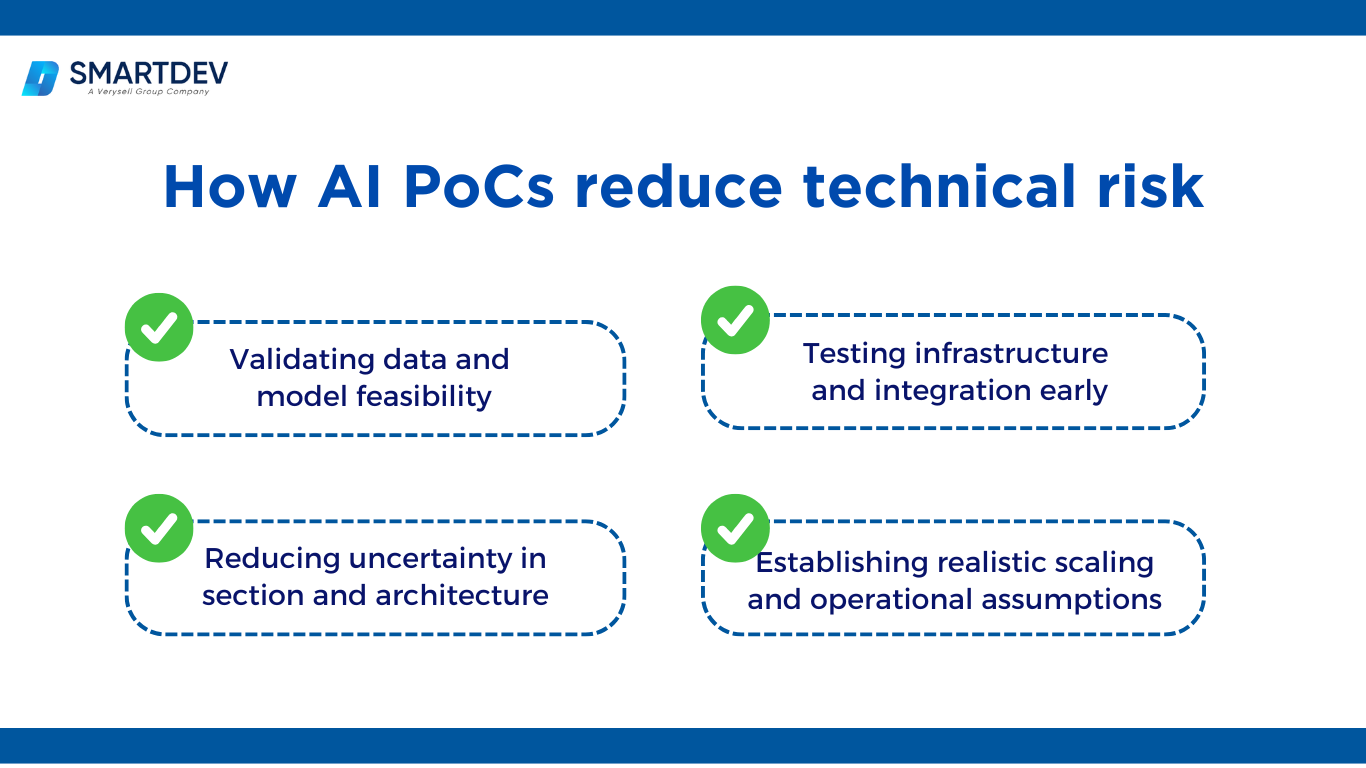

How AI PoCs reduce technical risk

1. Validating data and model feasibility

At a technical level, PoCs reduce uncertainty by answering critical questions early, before systems are locked in or scaled. Teams validate whether the available data is usable, whether models can achieve acceptable performance, and whether latency and cost constraints can realistically be met in a production context.

This early validation is a core pillar of AI project risk management. Instead of assuming feasibility, PoCs function as a structured feasibility study that tests real conditions rather than theoretical designs. According to industry benchmarks, early validation through PoCs can reduce downstream AI development costs by up to 50 percent by preventing rework, stalled initiatives, and abandoned projects.

PoCs reduce technical risk by exposing issues such as:

- Insufficient data volume or poor data quality

- Model performance gaps under real-world variability

- High inference latency or unexpected compute costs

- Limitations in model explainability or robustness

By addressing these factors early, teams gain a realistic view of technical feasibility and avoid false confidence that often undermines project viability later.
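Latency is one of the easier constraints to measure during a PoC. The sketch below times repeated calls to a prediction function and reports nearest-rank percentiles. `fake_predict` is a placeholder standing in for a real model call, and the helper names are assumptions made for illustration.

```python
import math
import time


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def measure_latencies(predict, inputs):
    """Time each call to predict() and return the durations in milliseconds."""
    durations = []
    for item in inputs:
        start = time.perf_counter()
        predict(item)
        durations.append((time.perf_counter() - start) * 1000)
    return durations


def fake_predict(x):
    # Placeholder for a real model call (assumption for this sketch).
    return x * 2


latencies = measure_latencies(fake_predict, range(200))
print(f"p50={percentile(latencies, 50):.4f} ms, p95={percentile(latencies, 95):.4f} ms")
```

Tail percentiles such as p95 are usually more informative than the mean here, because a small share of slow requests can be enough to break a production latency budget.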

2. Testing infrastructure and integration early

AI solutions rarely operate as standalone components. They must integrate with existing applications, data sources, APIs, security layers, and deployment environments. PoCs test these integrations at a small scale, revealing constraints that are often invisible during design.

SmartDev’s six-layer AI platform approach emphasizes validating infrastructure assumptions during PoCs to avoid scalability traps and architectural rework. Common technical risks identified during PoCs include:

- Bottlenecks in data ingestion or processing pipelines

- Compatibility issues with legacy systems

- Deployment limitations across cloud or on-prem environments

- Security and access control constraints

By surfacing these risks early, PoCs enable proactive risk mitigation. They also clarify whether scaling the solution is realistic within existing constraints, supporting both business feasibility and long-term AI project risk management.

3. Reducing uncertainty in model selection and architecture

Another way PoCs reduce technical risk is by allowing teams to experiment with multiple modeling approaches and architectures before committing to one. Choosing the wrong model or architecture too early often leads to performance ceilings or costly refactoring later.

Through PoCs, teams can compare alternatives such as:

- Classical machine learning vs deep learning

- Pretrained models vs custom-trained models

- Batch processing vs real-time inference architectures

This experimentation improves decision quality and ensures that architectural choices align with both technical feasibility and long-term maintainability. It also reduces dependency on assumptions that may not hold at scale, strengthening overall AI project risk management.
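A comparison like this is only fair if every candidate is scored on the same held-out examples. The following sketch shows that idea with toy rule-based "models" standing in for real ones; the candidate names, the data, and the choice of accuracy as the only metric are all illustrative assumptions.

```python
def accuracy(predict, examples):
    """Fraction of (features, label) pairs that predict() labels correctly."""
    correct = sum(1 for features, label in examples if predict(features) == label)
    return correct / len(examples)


def compare_candidates(candidates, examples):
    """Score every candidate on the same examples and rank them, best first."""
    scores = {name: accuracy(fn, examples) for name, fn in candidates.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Toy hold-out set: flag a transaction (label 1) based on its amount.
holdout = [(120, 1), (15, 0), (300, 1), (80, 0), (95, 1), (40, 0)]

# Stand-ins for real candidates such as a classical model or a pretrained one.
candidates = {
    "always_negative_baseline": lambda amount: 0,
    "threshold_100": lambda amount: int(amount > 100),
    "threshold_90": lambda amount: int(amount > 90),
}

ranking = compare_candidates(candidates, holdout)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

Including a trivial baseline is a deliberate choice: if a complex candidate cannot clearly beat it, the added architecture cost is hard to justify.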

4. Establishing realistic scaling and operational assumptions

PoCs also help teams understand what it will take to operate AI systems reliably over time. This includes monitoring, retraining, and handling performance degradation as data evolves. Without this insight, projects appear viable initially but fail during operationalization.

By simulating scaled usage patterns during PoCs, teams can assess:

- Infrastructure costs under increased load

- Model retraining frequency and effort

- Monitoring and alerting requirements

These insights are critical for evaluating project viability. By linking early experimentation to long-term operational realities, PoCs ensure that only initiatives with proven technical feasibility and business feasibility move forward, making them a cornerstone of disciplined AI project risk management.
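A rough cost projection can be produced from numbers measured during the PoC. The sketch below assumes a deliberately simple linear cost model (a fixed monthly cost, a per-request inference cost, and a per-run retraining cost); real pricing is often tiered or non-linear, and all figures shown are made up for illustration.

```python
def project_monthly_cost(requests_per_day, cost_per_request, fixed_monthly_cost,
                         retrains_per_month, cost_per_retrain, days=30):
    """Estimate monthly operating cost under a simple linear cost model."""
    inference = requests_per_day * days * cost_per_request
    retraining = retrains_per_month * cost_per_retrain
    return fixed_monthly_cost + inference + retraining


# Project cost at the PoC load and at 10x and 100x that load (hypothetical values).
projections = {}
for multiplier in (1, 10, 100):
    projections[multiplier] = project_monthly_cost(
        requests_per_day=1_000 * multiplier,
        cost_per_request=0.002,
        fixed_monthly_cost=400.0,
        retrains_per_month=2,
        cost_per_retrain=150.0,
    )
    print(f"{multiplier:>3}x load: ${projections[multiplier]:,.2f} per month")
```

Even a crude model like this makes it visible when inference cost, rather than fixed infrastructure, starts to dominate as load grows.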

Why AI proof of concept fails to scale

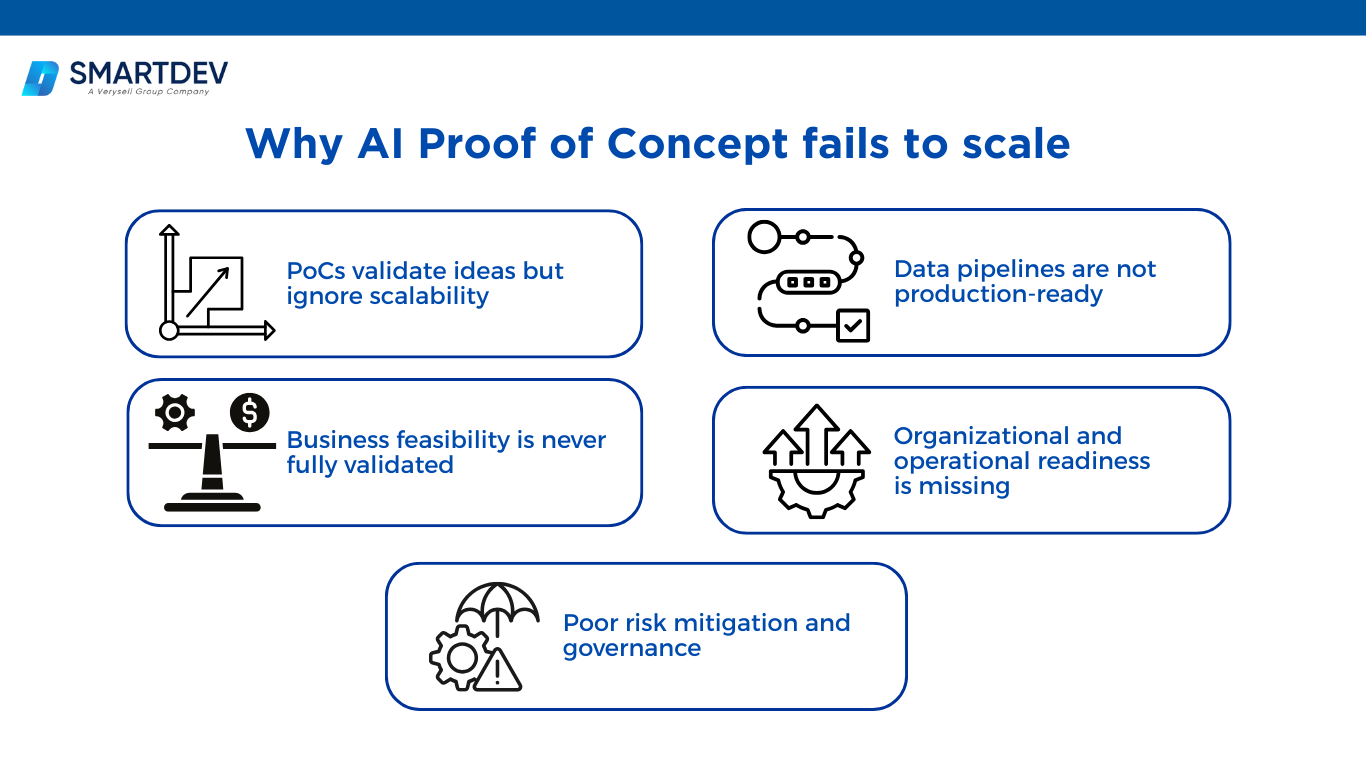

Despite their value, many PoCs never make the leap to production. Understanding why AI proofs of concept fail to scale is essential for effective AI project risk management. Below are the most common reasons.

1. PoCs validate ideas but ignore scalability

Many PoCs are built to prove that something can work, not that it can work at scale. Teams use simplified datasets, limited users, and temporary infrastructure. When scaling begins, performance drops or costs spike.

Industry data shows that over 60 percent of AI PoCs fail during scaling due to infrastructure and performance constraints that were not tested early. This reflects poor assessment of technical feasibility beyond the PoC stage.

2. Data pipelines are not production-ready

PoCs often rely on manually prepared or static datasets. In production, data is dynamic, noisy, and continuously changing. Without robust pipelines, models degrade quickly.

SmartDev highlights that data engineering and integration account for up to 70 percent of AI project effort, yet are commonly underestimated during PoCs. This gap directly impacts project viability.

3. Business feasibility is never fully validated

Some PoCs demonstrate technical success but fail to show meaningful business impact. Accuracy improves, but ROI remains unclear. As a result, leadership hesitates to fund scaling.

Research indicates that nearly 50 percent of AI projects stall after PoC because business value is not clearly quantified. This reflects weak business feasibility validation.

4. Organizational and operational readiness is missing

Scaling AI requires ownership, monitoring, retraining processes, and user adoption. PoCs that ignore operational realities create false confidence.

According to industry surveys, lack of organizational readiness contributes to failure in more than 40 percent of AI scaling efforts.

5. Poor risk mitigation and governance

PoCs often bypass governance, security, and compliance. These issues resurface later and block deployment entirely.

Without early governance planning, scaling becomes risky and slow. This is why PoCs must function as a true feasibility study, addressing technical feasibility, business feasibility, and long-term risk mitigation together.

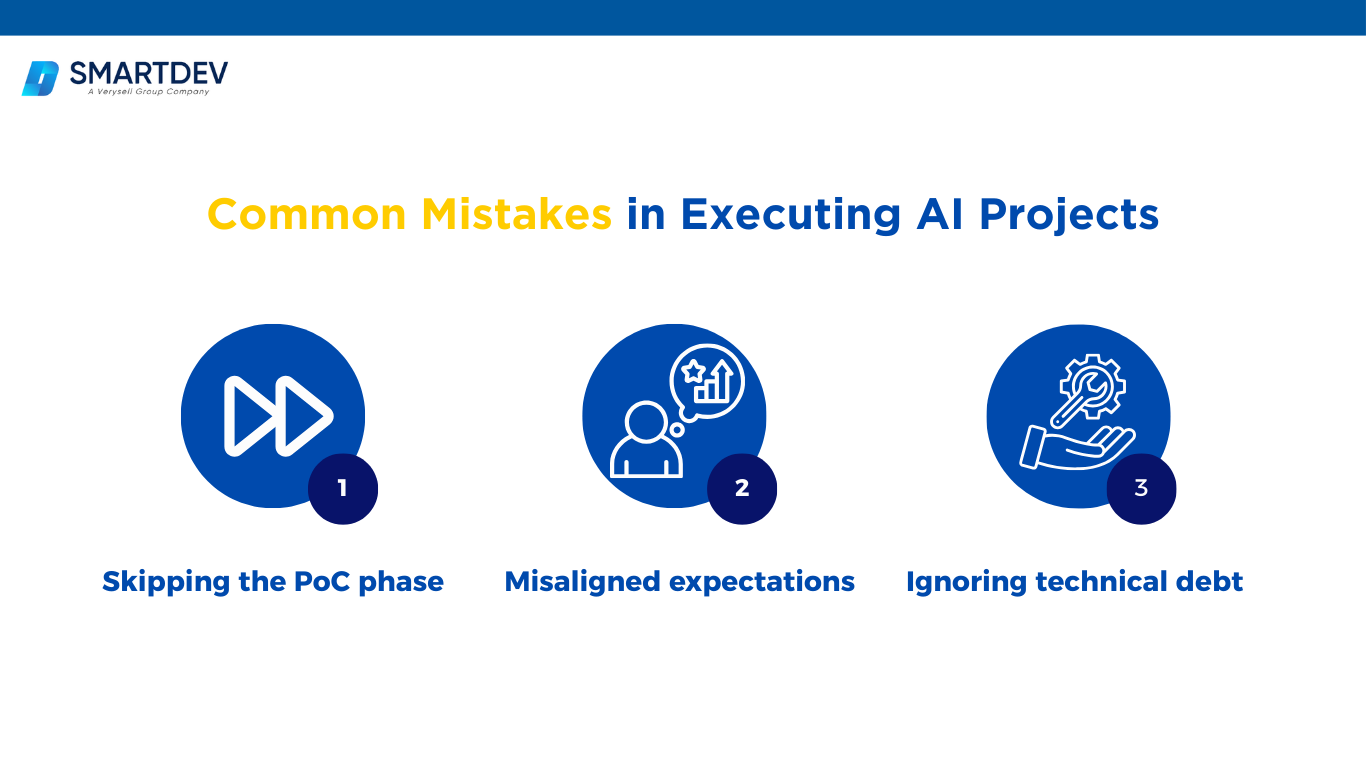

Common Mistakes in Executing AI Projects and Solutions

Some errors in AI initiatives are so common that they have become clichés. Yet they continue to undermine otherwise promising efforts. Understanding these mistakes, and how to address them, is essential for effective AI project risk management and long-term success.

1. Skipping the PoC Phase

One of the most damaging mistakes is skipping or delaying the Proof of Concept phase to “save time.” In reality, this shortcut often leads to larger losses later. Without a PoC, teams commit to architectures, datasets, and use cases that have never been validated.

This approach increases uncertainty around technical feasibility and business feasibility, making failure more likely once real constraints appear. The solution is straightforward. Treat the PoC as a mandatory feasibility study, not an optional step. A well-scoped PoC reduces risk early, enables informed go or no-go decisions, and protects overall project viability.

2. Misaligned expectations

Another frequent mistake is unrealistic expectations. Stakeholders may assume AI will deliver immediate, near-perfect results or solve complex problems end to end. When limitations are not clearly communicated, early PoC results can be misunderstood, leading teams to scale prematurely.

This misalignment creates pressure, erodes trust, and often results in failed deployments. The solution is proactive expectation management. PoCs should explicitly document assumptions, limitations, and trade-offs. By grounding discussions in evidence, teams improve risk mitigation and ensure alignment across business and technical stakeholders.

3. Ignoring technical debt

PoCs are often built quickly, with shortcuts taken in code quality, architecture, and documentation. When these experimental systems are later pushed toward production, technical debt becomes a major blocker.

The solution is to design PoCs with evolution in mind. Modular architectures, clean interfaces, and basic maintainability standards help ensure that successful PoCs can transition smoothly into scalable solutions. This approach strengthens technical feasibility and reduces costly rework, supporting sustainable AI project risk management.

Guidelines for How to Structure AI Projects for Success

Successful AI deployment is a journey, not a single delivery milestone. Proofs of Concept are an early but crucial step in that journey, helping organizations validate assumptions while laying the groundwork for scale. To succeed, AI initiatives must be structured deliberately, with attention to execution discipline and long-term sustainability.

1. Modular, layered development

AI projects should be built using modular, layered architectures rather than monolithic systems. Separating concerns across layers such as data ingestion, data processing, model training, model inference, and application integration allows each component to be tested and evolved independently.

This structure simplifies debugging, supports incremental scaling, and reduces the risk of widespread failure when changes are introduced. Modular design also makes it easier to replace or upgrade models as data and requirements evolve, strengthening long-term technical feasibility and reducing rework.
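The layering described above can be sketched as a few small functions with a pluggable model. This is a minimal illustration under assumed names and a made-up `id,amount` input format, not a reference architecture; the point is that the model can be swapped without touching ingestion or processing.

```python
from typing import Callable, Iterable, List

Record = dict


def ingest(raw_lines: Iterable[str]) -> List[Record]:
    """Ingestion layer: parse 'id,amount' lines into records."""
    records = []
    for line in raw_lines:
        record_id, amount = line.strip().split(",")
        records.append({"id": record_id, "amount": float(amount)})
    return records


def process(records: List[Record]) -> List[Record]:
    """Processing layer: drop invalid rows and add a derived feature."""
    return [
        {**r, "is_large": r["amount"] > 100}
        for r in records
        if r["amount"] >= 0
    ]


def run_pipeline(raw_lines: Iterable[str],
                 model: Callable[[Record], int]) -> List[dict]:
    """Application layer: wire the layers together around a pluggable model."""
    return [
        {"id": r["id"], "prediction": model(r)}
        for r in process(ingest(raw_lines))
    ]


def rule_model(record: Record) -> int:
    # First inference layer: relies on the derived feature.
    return int(record["is_large"])


def stricter_model(record: Record) -> int:
    # Replacement inference layer: same interface, different logic.
    return int(record["amount"] > 250)


raw = ["a1,120.0", "a2,-5.0", "a3,300.0"]
first = run_pipeline(raw, rule_model)
second = run_pipeline(raw, stricter_model)
print(first)
print(second)
```

Because each layer has a narrow interface, each can also be tested on its own, which is what keeps a change in one layer from causing failures elsewhere.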

2. Cross-functional team collaboration

AI initiatives require collaboration across business, engineering, data, and operations teams. When AI projects are driven by a single function, misalignment quickly emerges. Business goals may be unclear to engineers, while technical constraints may be invisible to leadership.

Involving cross-functional stakeholders early ensures shared understanding of objectives, limitations, and trade-offs. This alignment accelerates decision-making, improves adoption, and enhances overall AI project risk management by continuously validating both business feasibility and technical feasibility throughout the project lifecycle.

3. Governance, ethics, and compliance

Trustworthy AI requires more than performance. Governance, ethics, and compliance must be embedded from the beginning. Data privacy, model explainability, bias detection, and regulatory alignment should be considered even during PoCs.

Addressing these concerns early reduces downstream risk and prevents late-stage deployment blockers. It also strengthens project viability by ensuring AI systems can be safely and responsibly scaled.

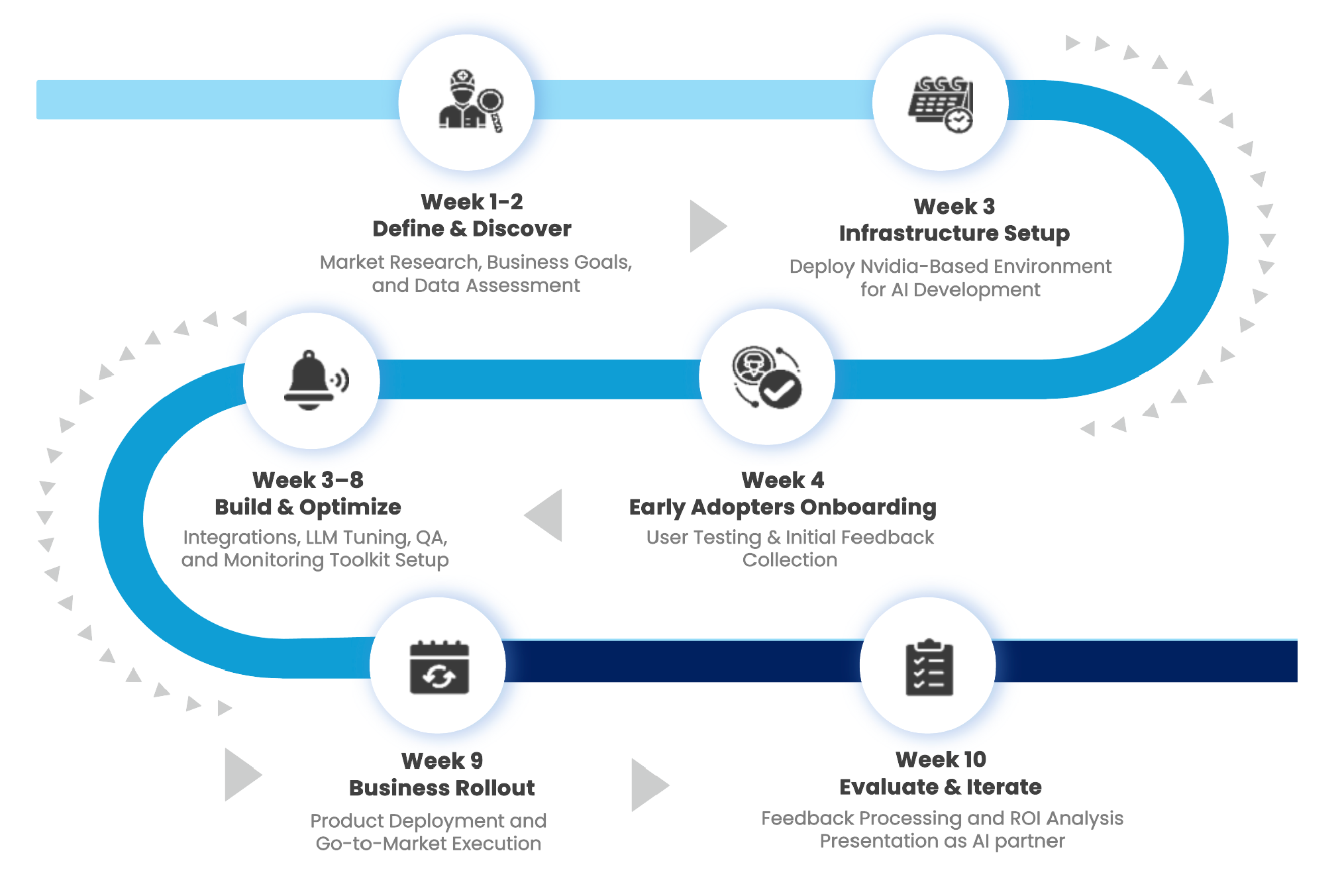

Accelerating AI Delivery Through SmartDev’s 10-Week AI Product Factory

SmartDev’s 10-week AI Product Factory demonstrates how structured delivery can accelerate AI outcomes without sacrificing rigor or control. Instead of treating PoCs, architecture design, and product development as disconnected phases, the model integrates them into a single, disciplined execution framework. This ensures that early validation directly informs how solutions are built and scaled.

Phase 1. Define & Discover (Weeks 1–2). Clarifying Technical Feasibility

Phase 1 removes early ambiguity that often leads to AI execution failure. Instead of starting with models, teams define what technical success actually means. AI ideas are translated into testable hypotheses that expose feasibility risks upfront.

During this phase, teams:

- Define concrete success criteria tied to model performance and system constraints

- Identify data availability, quality, and integration assumptions

- Establish a technical feasibility hypothesis for the AI PoC

- Surface early risks that could block implementation or scaling

By the end of Phase 1, the PoC is no longer exploratory. It is framed around clear technical constraints, reducing uncertainty before development begins.

Phase 2. Prototype Development (Weeks 3–8). Testing Feasibility in Real Conditions

Phase 2 focuses on proving technical feasibility through execution. Models are built and tested within real workflows rather than isolated environments, exposing issues early.

Development is deliberately focused on:

- Validating model performance with real data variability

- Testing system integration, latency, and reliability

- Identifying operational constraints that affect deployment

Continuous testing and feedback ensure that feasibility risks are surfaced during execution, not after delivery. This significantly reduces late-stage AI execution issues.

Phase 3. Rollout & Evaluation (Weeks 9–10). Feasibility-Based Scale Decisions

The final phase converts technical results into clear scale decisions. Performance is evaluated against predefined feasibility thresholds.

During this phase, teams:

- Validate whether technical criteria are consistently met

- Assess scalability risks and infrastructure limits

- Decide whether the solution is ready to scale, refine, or stop

The outcome is a clear, evidence-based view of technical feasibility. AI PoCs move from assumption to proof, ensuring scaling decisions are grounded in execution reality rather than optimism.
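The scale, refine, or stop decision can be expressed as a simple rule over repeated evaluation runs. The sketch below is one possible rule, not SmartDev's actual method: the metric names, the thresholds, and the 80 percent pass rate required for "scale" are all assumptions chosen for illustration.

```python
def scale_decision(runs, thresholds, min_pass_rate=0.8):
    """Return 'scale', 'refine', or 'stop' from repeated evaluation runs.

    runs: list of dicts with 'accuracy' and 'p95_latency_ms' (assumed names).
    """
    def passes(run):
        return (
            run["accuracy"] >= thresholds["min_accuracy"]
            and run["p95_latency_ms"] <= thresholds["max_p95_latency_ms"]
        )

    pass_rate = sum(1 for run in runs if passes(run)) / len(runs)
    if pass_rate >= min_pass_rate:
        return "scale"   # criteria are met consistently
    if pass_rate > 0:
        return "refine"  # criteria are reachable but not yet reliable
    return "stop"        # criteria were never met


# Hypothetical thresholds and evaluation runs.
thresholds = {"min_accuracy": 0.90, "max_p95_latency_ms": 200}
runs = [
    {"accuracy": 0.93, "p95_latency_ms": 150},
    {"accuracy": 0.91, "p95_latency_ms": 180},
    {"accuracy": 0.92, "p95_latency_ms": 260},  # fails the latency threshold
    {"accuracy": 0.94, "p95_latency_ms": 170},
    {"accuracy": 0.95, "p95_latency_ms": 160},
]
decision = scale_decision(runs, thresholds)
print(decision)
```

Evaluating several runs instead of a single best result reflects the requirement that criteria be met consistently, not just once.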

Within the 10-week cycle, teams move quickly from feasibility study to implementation by working in short, focused iterations. This minimizes rework and avoids the common scenario where a successful PoC cannot be operationalized. SmartDev emphasizes that disciplined execution alongside early validation significantly improves project viability, as decisions are based on evidence rather than assumptions.

By enforcing stakeholder alignment, continuous feedback, and architectural discipline, the AI Product Factory model enables organizations to scale only those AI initiatives that have proven value. This structured approach ensures effective risk mitigation while delivering tangible results within a predictable timeframe.

Conclusion

Most AI initiatives fail due to execution challenges rather than lack of potential. Poor planning, unclear objectives, and late discovery of constraints continue to undermine AI investments, making strong AI project risk management essential.

Structured AI Proofs of Concept address these problems directly. By acting as a focused feasibility study, PoCs validate technical feasibility and business feasibility early, enabling effective risk mitigation while costs are still low. They help organizations make evidence-based decisions and avoid committing resources to low-viability projects.

When integrated into disciplined AI project planning, PoCs become a foundation for scalable success. Organizations that treat PoCs as strategic tools rather than experiments are far better positioned to convert AI ambition into real, sustainable value.

Talk to our AI experts to assess your AI PoC readiness and de-risk your next AI initiative.
