Introduction

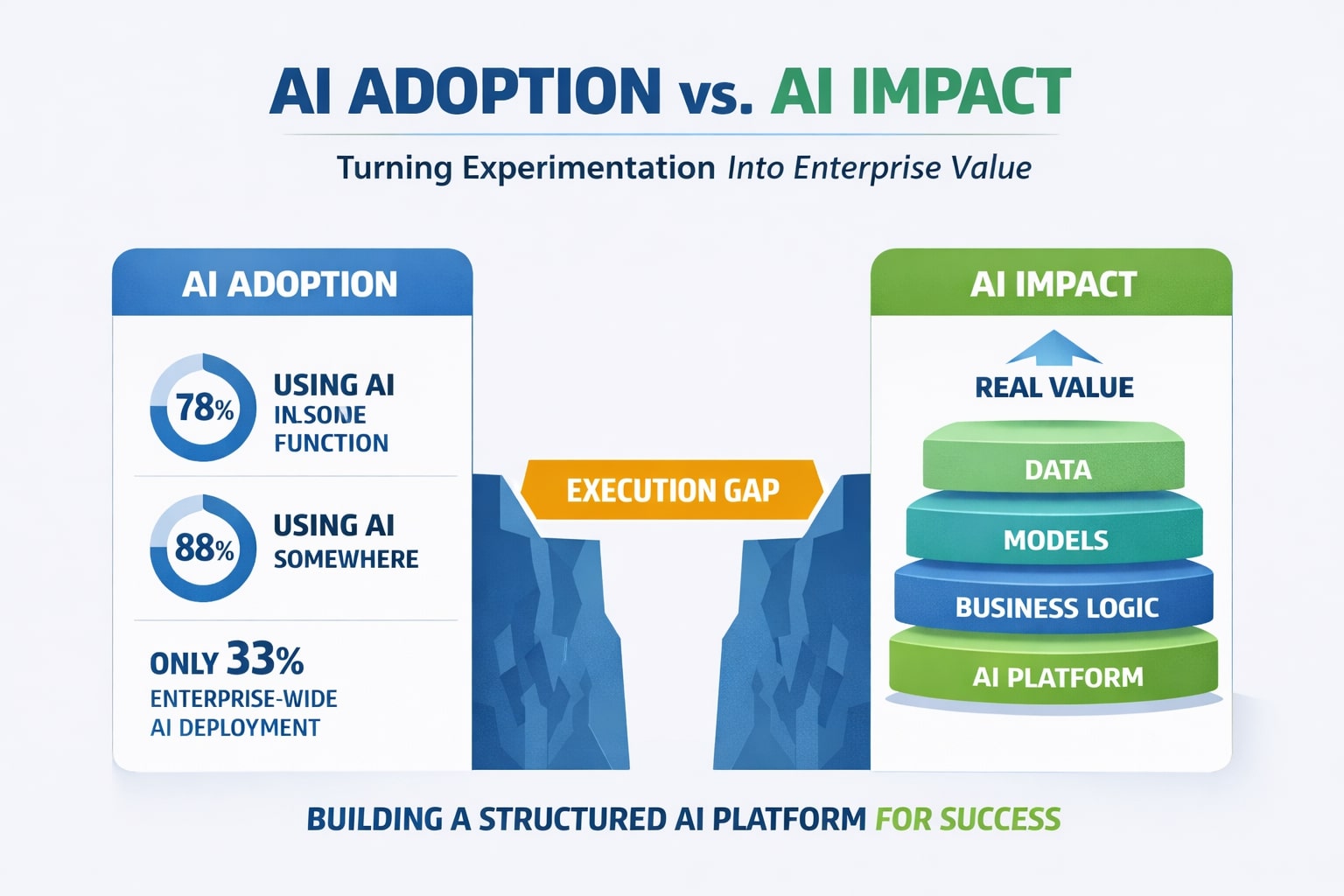

As enterprise leaders increasingly explore AI initiatives, many find themselves stuck between ambition and execution. According to McKinsey's State of AI research, 78% of organizations now use AI in at least one business function, up sharply from 55% a year earlier, indicating a decisive shift from experimental to operational adoption.

However, adoption alone doesn’t guarantee measurable business value. While most companies have begun using AI, only a subset are scaling it meaningfully across workflows. A subsequent McKinsey survey found that while 88% of organizations use AI somewhere, only 33% have deployed it enterprise-wide, and fewer than 6% report performance improvements of more than 5%.

This gap highlights a core reality: AI can no longer be treated as a collection of isolated innovation projects. What enterprises need is an AI platform built on six core layers: a structured foundation that turns data, models, and business logic into real value rather than technology buzzwords. This blog explores how SmartDev applies a 5-sprint, 10-week AI Product Factory to deliver precisely that.

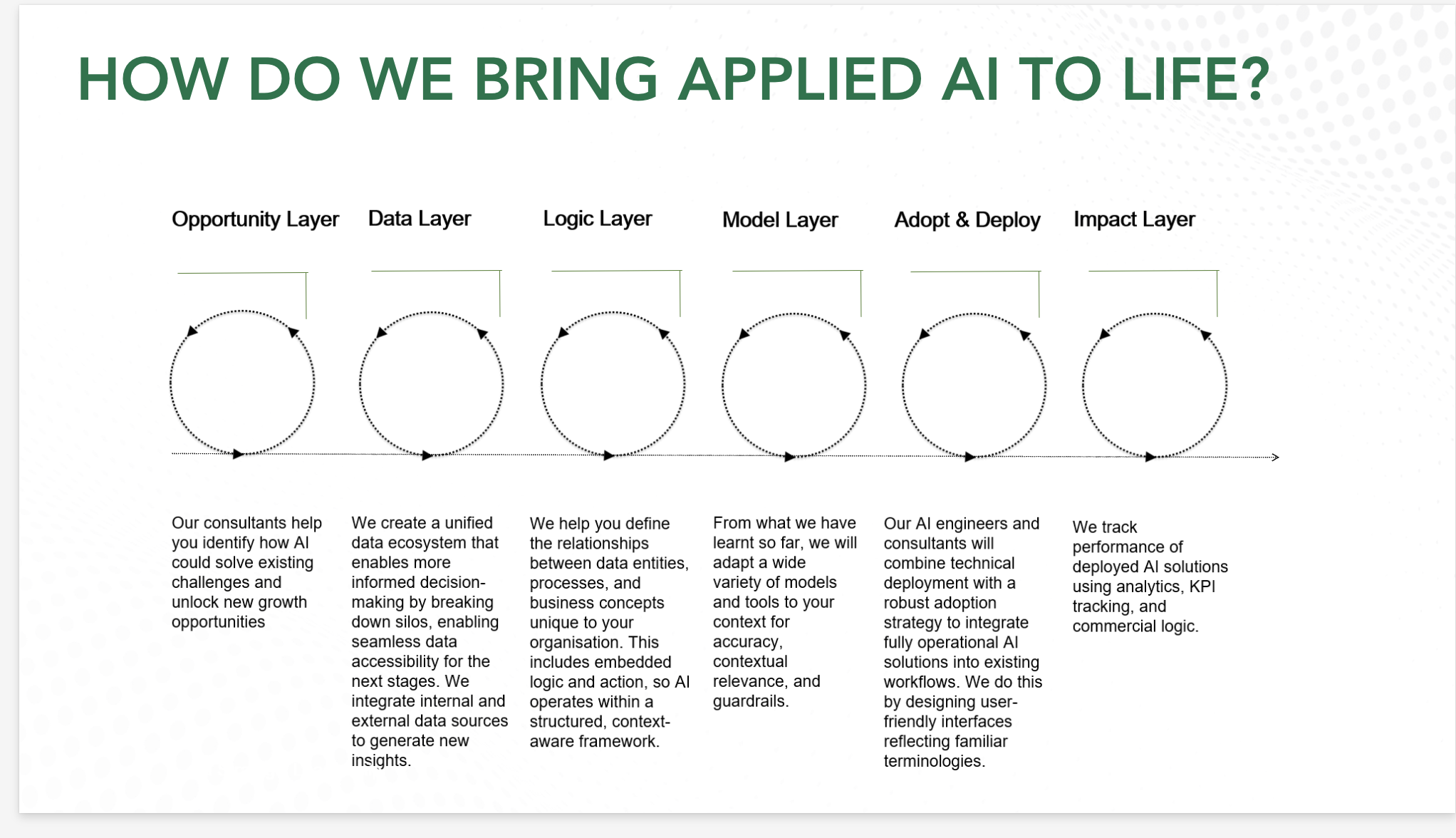

From Strategy to Execution: How SmartDev Brings Applied AI to Life

Many organizations know AI matters, but struggle to operationalize it. A common cause of failure is treating AI as a set of projects rather than as an integrated platform. McKinsey reports that while executive confidence in AI is high, only 38% of companies have an AI governance framework to support enterprise-wide deployment.

Without a robust platform, investments in models and tools often fail to deliver consistent ROI. Generative AI pilots in particular suffer from high failure rates: MIT research reported that as many as 95% of enterprise GenAI pilots yielded no measurable impact on profit and loss, largely because of poor integration with workflows and business context.

SmartDev’s solution is to treat AI as a product and apply a Product Factory mindset. This means:

- Prioritizing business outcomes over models

- Delivering working, iterative versions rather than one-time “big bang” launches

- Embedding feedback loops for validation at every step

This shift from theory to execution helps enterprises overcome the common pitfalls of AI programs and begin realizing measurable impact faster.

The Six Core Layers of an Enterprise AI Platform

A successful AI platform built on six core layers ensures that AI does more than exist: it creates value consistently, securely, and at scale. Each layer addresses a specific dimension of the platform:

Opportunity Layer

Before engineering work begins, enterprise teams must define where AI can actually drive value. Within the enterprise AI platform, this means identifying use cases that are tightly connected to business outcomes rather than pursued as isolated innovation efforts. SmartDev uses structured frameworks to surface high-impact opportunities early, reducing wasted investment and aligning stakeholders from the start on what successful AI platform adoption should look like.

Data Layer

Data is the lifeblood of AI, yet modern enterprises often have fragmented, siloed data systems. Building a unified data foundation, with governance, quality pipelines, access controls, and security, is essential for reliable models downstream. According to industry analyses, the data integration market is expected to grow from $15.24B in 2026 to $47.60B by 2034, largely driven by AI adoption needs.
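
To make "quality pipelines" more concrete, here is a minimal Python sketch of one validation step a pipeline might run before records reach model training. The field names (`customer_id`, `region`, `order_value`) and the rules are hypothetical placeholders, not part of SmartDev's platform; real pipelines often rely on dedicated validation tooling instead of hand-written checks.

```python
from dataclasses import dataclass, field

# Hypothetical schema: fields every record must carry before it is used for training.
REQUIRED_FIELDS = ("customer_id", "region", "order_value")


@dataclass
class QualityReport:
    total: int = 0
    passed: int = 0
    failures: list = field(default_factory=list)  # (record index, reason) pairs

    @property
    def pass_rate(self) -> float:
        return self.passed / self.total if self.total else 0.0


def check_records(records):
    """Validate each record and collect failures instead of silently dropping them."""
    report = QualityReport()
    for i, rec in enumerate(records):
        report.total += 1
        missing = [f for f in REQUIRED_FIELDS if rec.get(f) is None]
        if missing:
            report.failures.append((i, "missing " + ", ".join(missing)))
            continue
        value = rec["order_value"]
        if not isinstance(value, (int, float)) or value < 0:
            report.failures.append((i, "invalid order_value"))
            continue
        report.passed += 1
    return report


if __name__ == "__main__":
    sample = [
        {"customer_id": "C1", "region": "EU", "order_value": 120.0},
        {"customer_id": "C2", "region": None, "order_value": 80.0},
        {"customer_id": "C3", "region": "US", "order_value": -5},
    ]
    report = check_records(sample)
    print(report.pass_rate, report.failures)
```

Keeping the failure reasons, rather than only a pass/fail count, is what makes such a check useful for governance: data owners can see exactly which records were rejected and why.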

Logic Layer

The Logic Layer embeds business-specific decision rules and workflows directly into AI systems. Rather than producing generic predictions, this layer ensures insights are meaningful within real enterprise operations. By codifying business logic explicitly, organizations make AI behavior explainable, traceable, and aligned with operational reality, which is critical for sustainable AI platform adoption.
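
One way to picture an explicit, traceable Logic Layer is a small rules function that sits between a model's score and the final decision and records which rule fired. The sketch below is illustrative only: the credit-review scenario, the thresholds, and the rule IDs (`R1` to `R3`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    action: str
    rule_id: str  # which business rule produced the action, for traceability
    reason: str


# Hypothetical business thresholds, kept as named constants so they can be reviewed and versioned.
AUTO_APPROVE_SCORE = 0.85
MANUAL_REVIEW_AMOUNT = 50_000


def decide(model_score: float, amount: float) -> Decision:
    """Combine a model score with explicit business rules; every outcome names its rule."""
    if amount >= MANUAL_REVIEW_AMOUNT:
        return Decision("manual_review", "R1", f"amount {amount} >= {MANUAL_REVIEW_AMOUNT}")
    if model_score >= AUTO_APPROVE_SCORE:
        return Decision("approve", "R2", f"score {model_score:.2f} >= {AUTO_APPROVE_SCORE}")
    return Decision("manual_review", "R3", "score below auto-approve threshold")


if __name__ == "__main__":
    print(decide(0.91, 12_000))
    print(decide(0.91, 75_000))
```

Because the rule order is explicit, a reviewer can see at a glance that the amount check (`R1`) always overrides the model score, which is the kind of explainability the Logic Layer is meant to provide.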

Model Layer

The Model Layer is where machine learning models are trained, optimized, and evaluated, but only after data and business context are properly prepared. This sequencing avoids a common enterprise pitfall: building models before understanding how they will be used, which often leads to low ROI and limited adoption. When models are developed as part of a broader lifecycle management platform, they can be monitored, updated, and governed over time instead of becoming brittle assets.

Adopt & Deploy Layer

AI must be integrated into daily business processes to deliver value. This layer connects insights to real systems, interfaces, and workflows that users can trust and adopt. Successful AI applications for enterprises are embedded into existing tools and decision flows, reducing friction and accelerating AI platform adoption across teams and departments.

Impact Layer

Finally, platforms must measure outcomes, not just outputs, by tracking KPIs, ROI, and strategic impact. Research suggests that enterprise AI adoption, when executed holistically, can deliver productivity gains of 26–55% and a return of roughly $3.70 per dollar invested.
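
Measuring financial impact ultimately comes down to simple arithmetic. The Python sketch below shows one common way to express return per dollar and net ROI. The input figures are invented for illustration, and treating "return per dollar" as total value divided by total cost is an assumption, since published studies do not always state whether such figures are gross or net.

```python
def return_per_dollar(value_generated: float, total_cost: float) -> float:
    """Gross value returned for each dollar invested (value / cost)."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return value_generated / total_cost


def net_roi(value_generated: float, total_cost: float) -> float:
    """Net ROI as a fraction: (value - cost) / cost."""
    return return_per_dollar(value_generated, total_cost) - 1.0


if __name__ == "__main__":
    # Hypothetical pilot: $200k total cost, $740k of measured value.
    print(return_per_dollar(740_000, 200_000))
    print(net_roi(740_000, 200_000))
```

Under these assumed numbers the pilot returns $3.70 per dollar, which corresponds to a net ROI of about 270%. Stating which definition is used avoids comparing gross and net figures by mistake.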

Together, these layers form the backbone of an AI platform that consistently delivers value — not siloed experiments.

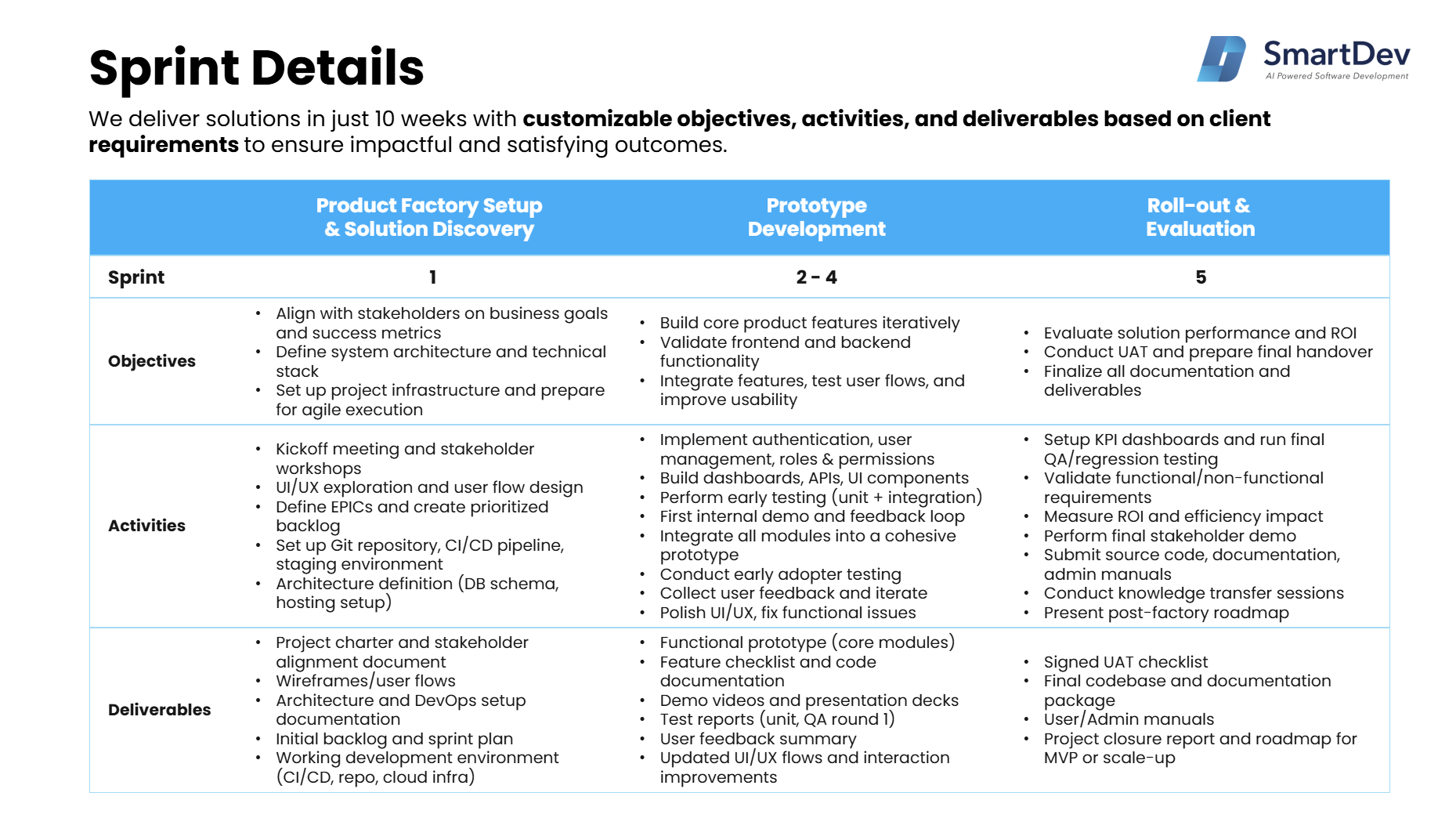

Mapping the Six Core Layers Across Five Strategic Sprints

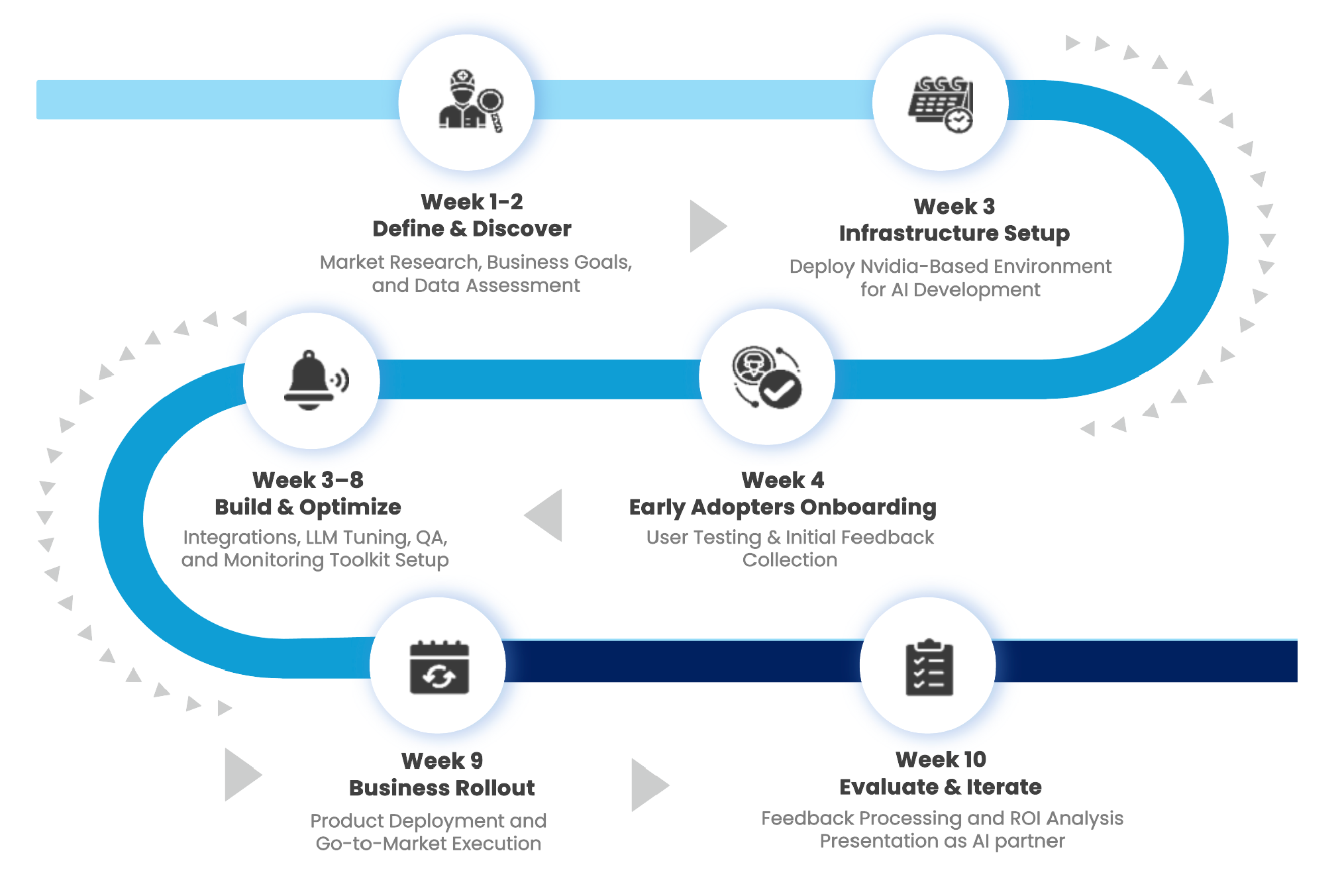

SmartDev delivers AI platforms through five strategic two-week sprints over 10 weeks, each with clear outcomes:

Sprint 1: Discovery and Platform Foundation

This sprint aligns stakeholders, validates opportunities, assesses data maturity, and designs an architecture that supports the six layers. By the end of this phase, the organization has a validated AI roadmap and priorities based on business impact.

Sprints 2 to 4: Build and Optimize

These three sprints focus on:

- Integrating data sources

- Encoding business logic

- Training and validating models

- Developing prototype applications

Each sprint produces working increments, with clear evidence backing each decision. This is a major advantage over traditional, slower-moving AI programs.

What makes this approach powerful is risk containment. Rather than investing heavily upfront without evidence, each sprint validates assumptions and reduces uncertainty step by step.

Sprint 5: Deploy, Adopt, and Measure Impact

The final sprint focuses on full production deployment, driving adoption within teams, and establishing impact measurement dashboards. Enterprise readiness — including security, compliance, and governance — is completed here.

This sprint-based structure ensures that AI initiatives don’t stall after the pilot phase but scale meaningfully across functions.

The 10-Week AI Product Factory: From Idea to Production-Ready AI

One of the biggest challenges enterprises face with AI is not model performance, but proving business value early enough to justify continued investment. Many AI pilots demonstrate technical feasibility, yet fail to produce decision-ready evidence. Leaders are left asking the same question: does this AI initiative actually deliver ROI, and should it be scaled further?

This is the gap SmartDev’s 10-Week AI Product Factory is designed to close. Rather than treating ROI as something to measure after deployment, the Product Factory embeds AI ROI thinking from the very beginning. Every experiment is framed around concrete business questions: What outcome are we trying to improve? How will we measure it? What evidence would justify scaling?

Phase 1. Define & Discover (Weeks 1–2). Framing AI ROI Early

The first phase transforms AI ideas into testable business hypotheses.

Instead of starting with data or models, SmartDev works with stakeholders to define success from a business perspective and to position AI correctly within the broader enterprise platform. This ensures AI initiatives are grounded in real operational needs rather than isolated experimentation.

Key activities in this phase include:

- Aligning on business objectives, constraints, and governance requirements, including early considerations for security and compliance in AI platforms

- Defining AI business impact metrics tied directly to cost reduction, efficiency gains, or revenue growth

- Establishing an initial AI proof-of-concept ROI hypothesis to guide prioritization

- Identifying assumptions, dependencies, and risks that could affect long-term ROI and AI platform adoption

By the end of this phase, AI initiatives are no longer vague experiments. They are clearly scoped around measurable outcomes and enterprise constraints, creating a solid foundation for credible ROI evaluation later.

Phase 2. Prototype Development (Weeks 3–8). Turning Signals into Evidence

During the build phase, AI experiments begin generating real operational signals, not just technical outputs. SmartDev prioritizes high-impact workflows where enterprise AI applications can demonstrate value quickly and visibly. Models are embedded into real environments rather than isolated sandboxes, allowing teams to observe adoption patterns, performance behavior, and integration challenges as they happen.

ROI assumptions are continuously tested using live pilot data. Through iterative demos and structured feedback loops, stakeholders gain early visibility into where value is emerging and where friction exists. This execution-driven approach ensures AI pilot success is measured during delivery rather than retroactively, and that early signals inform future lifecycle-management decisions.

Phase 3. Rollout & Evaluation (Weeks 9–10). From Metrics to Decisions

The final phase converts collected metrics into decision-ready ROI insights. Performance is validated against predefined success criteria, and operational results are translated into financial impact scenarios. Leaders can then make an informed decision: scale the initiative, adjust its direction, or stop with confidence.
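
The scale, adjust, or stop decision becomes far less subjective when success criteria are written down before the pilot starts and measured results are compared against them. The Python sketch below assumes hypothetical metric names and a deliberately simple rule: scale if every criterion is met, stop if none are, otherwise adjust. It illustrates the idea and is not SmartDev's actual evaluation method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    metric: str
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        return observed >= self.target if self.higher_is_better else observed <= self.target


def recommend(criteria: list[Criterion], observed: dict[str, float]) -> str:
    """Return 'scale', 'adjust', or 'stop' based on how many predefined criteria were met.

    A metric missing from `observed` raises KeyError, which surfaces gaps in measurement.
    """
    results = [c.met(observed[c.metric]) for c in criteria]
    if all(results):
        return "scale"
    if not any(results):
        return "stop"
    return "adjust"


if __name__ == "__main__":
    # Hypothetical criteria agreed during Define & Discover.
    criteria = [
        Criterion("hours_saved_per_week", 40),
        Criterion("error_rate", 0.05, higher_is_better=False),
        Criterion("weekly_active_users", 25),
    ]
    pilot = {"hours_saved_per_week": 52, "error_rate": 0.03, "weekly_active_users": 18}
    print(recommend(criteria, pilot))
```

In this made-up pilot, time savings and error rate hit their targets but adoption falls short, so the sketch recommends adjusting rather than scaling. A real evaluation would likely weight criteria and attach financial scenarios to each outcome.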

By the end of the 10 weeks, every AI initiative produces evidence, not optimism. This is what allows enterprises to move beyond experimentation and manage AI investment risk systematically.

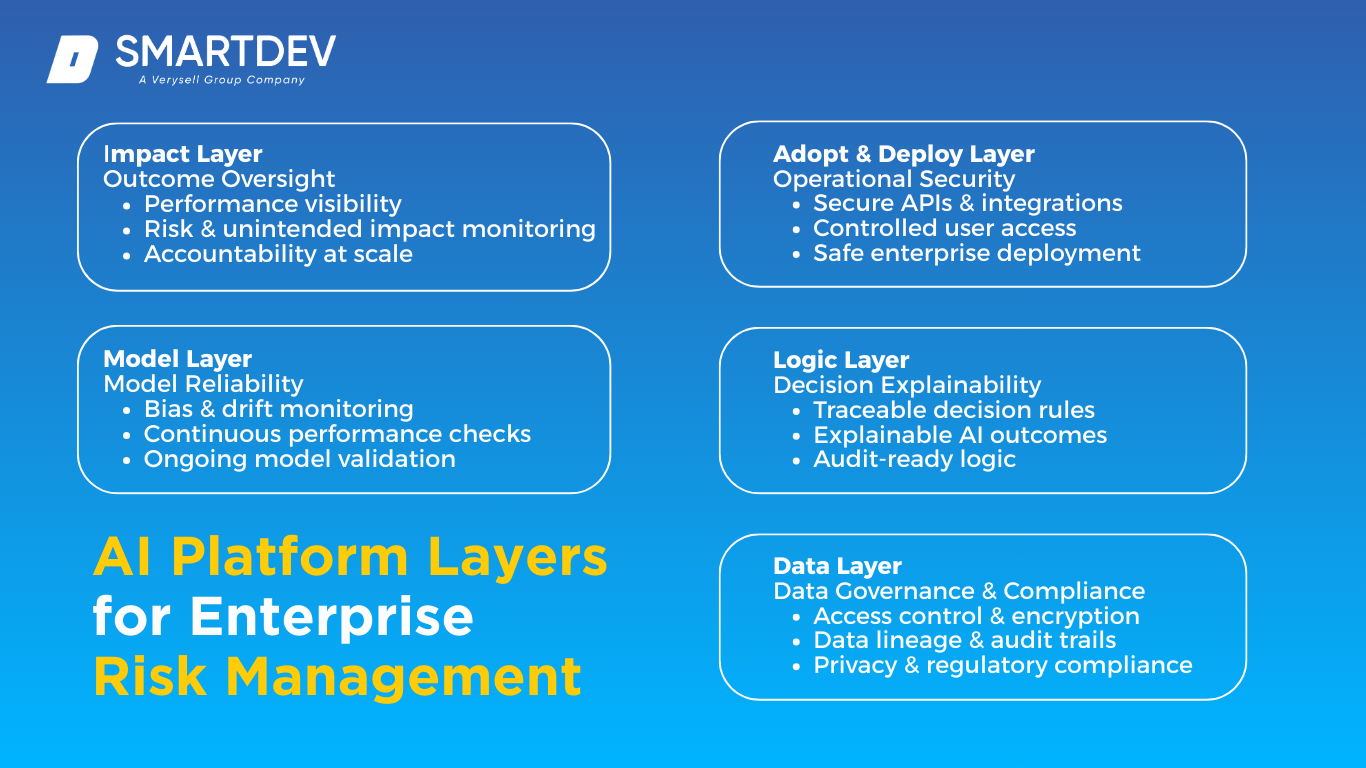

Ensuring Security, Compliance, and Lifecycle Management in AI Platforms

For enterprise AI platforms, security and compliance are not optional requirements. They are foundational design constraints. Many AI initiatives fail to scale not because they lack value, but because governance, risk, and lifecycle concerns surface too late, blocking production deployment. This is why security and compliance in AI platforms must be addressed as core architectural concerns rather than post-deployment controls.

A layered AI platform approach addresses this challenge by distributing governance responsibilities across the system instead of centralizing them in a single control point. By embedding controls directly into each layer of the enterprise AI platform, organizations can support scalability without sacrificing oversight or trust.

Security and Compliance Across the Six Layers

Beyond the Opportunity Layer, where governance requirements are first scoped, each layer of the AI platform plays a specific role in enterprise risk management:

- Data Layer: Enforces access control, data lineage, privacy, and regulatory compliance. Sensitive data is protected through role-based access, encryption, and auditability, forming the foundation for compliant enterprise AI applications.

- Logic Layer: Makes decision rules explicit and traceable, enabling explainability and accountability. This is especially critical in regulated environments where AI-driven decisions must be justified to internal and external stakeholders.

- Model Layer: Supports monitoring for bias, performance degradation, and model drift. Continuous evaluation ensures AI outputs remain reliable, relevant, and aligned with enterprise expectations over time.

- Adopt & Deploy Layer: Secures APIs, integrations, and user access, ensuring AI systems operate safely within enterprise infrastructure and supporting smooth AI platform adoption across teams.

- Impact Layer: Provides transparency into outcomes, enabling oversight of performance, risk, and unintended consequences while reinforcing accountability at scale.

By embedding controls at each layer, enterprises avoid fragile “bolt-on governance” models that often break under scale or evolving regulatory requirements.
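
As an illustration of the Data Layer's role-based access and auditability, the Python sketch below checks a request against a role-to-permission map and appends every decision, allowed or denied, to an audit log. The roles, dataset names, and in-memory log are hypothetical simplifications; a production platform would likely delegate this to its identity provider and a tamper-evident log store.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission map: which roles may read which datasets.
ROLE_PERMISSIONS = {
    "data_scientist": {"sales_features", "product_catalog"},
    "support_agent": {"product_catalog"},
}

AUDIT_LOG: list[dict] = []  # in-memory stand-in for an append-only audit store


def can_read(user: str, role: str, dataset: str) -> bool:
    """Allow access only if the role grants it, and record the decision either way."""
    allowed = dataset in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "dataset": dataset,
        "allowed": allowed,
    })
    return allowed


if __name__ == "__main__":
    print(can_read("ana", "support_agent", "sales_features"))
    print(can_read("ben", "data_scientist", "sales_features"))
    print(len(AUDIT_LOG))
```

Logging denials as well as grants matters for compliance: auditors typically want evidence of attempted access, not only of successful reads. Unknown roles fall back to an empty permission set, so access is denied by default.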

Lifecycle Management as a Core Capability

AI systems are not static. Data distributions change. Business rules evolve. User behavior shifts. Without structured lifecycle management, even high-performing models will degrade and introduce operational risk.

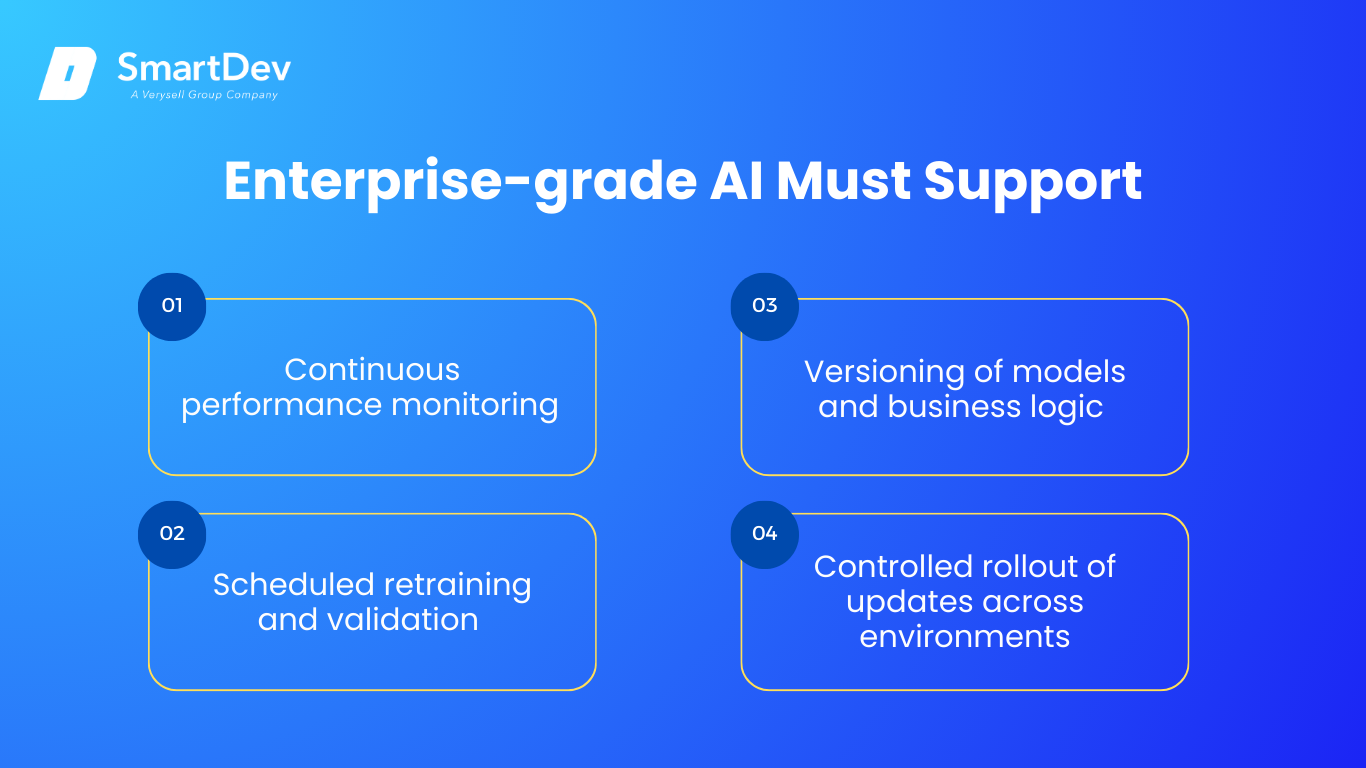

An enterprise-grade AI platform must function as a lifecycle management platform, supporting:

- Continuous performance monitoring

- Scheduled retraining and validation

- Versioning of models and business logic

- Controlled rollout of updates across environments

This discipline, often referred to as ModelOps, is increasingly recognized as a prerequisite for sustainable AI adoption. Effective lifecycle management ensures AI systems remain compliant, accurate, and trusted long after initial deployment.
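
Continuous performance monitoring often starts with checking whether live input data still resembles the training data. The Python sketch below computes the Population Stability Index (PSI), one widely used drift statistic, over pre-binned feature proportions and flags the model for a retraining review above a threshold. PSI is chosen here as an example rather than being a technique named in this article, and the 0.2 threshold is a common rule of thumb, not a universal standard.

```python
import math

# Rule-of-thumb threshold; teams typically tune this per feature and use case.
PSI_RETRAIN_THRESHOLD = 0.2


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (proportions per bin)."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def needs_retraining_review(expected: list[float], actual: list[float]) -> bool:
    """Flag for human review rather than retraining automatically."""
    return psi(expected, actual) > PSI_RETRAIN_THRESHOLD


if __name__ == "__main__":
    training = [0.25, 0.25, 0.25, 0.25]  # feature distribution at training time
    stable = [0.24, 0.26, 0.25, 0.25]
    shifted = [0.05, 0.15, 0.30, 0.50]
    print(round(psi(training, stable), 4), needs_retraining_review(training, stable))
    print(round(psi(training, shifted), 4), needs_retraining_review(training, shifted))
```

Routing the flag to a review step instead of triggering retraining directly keeps a human in the loop, which fits the versioning and controlled-rollout practices listed above.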

Building Long-Term Trust in AI

Ultimately, security, compliance, and lifecycle management are not just technical concerns. They are trust enablers. When leaders can clearly explain how AI decisions are made, monitored, and governed, confidence increases. When users experience consistent, reliable behavior from AI systems, adoption follows naturally. This trust is what allows AI platforms to scale safely and deliver long-term value across the enterprise.

By designing governance and lifecycle management into the platform from day one, enterprises turn AI from a risky experiment into a durable operational capability.

Conclusion

An AI platform built on six core layers is the difference between pilots that fade and AI initiatives that transform. Paired with a 5-sprint, 10-week Product Factory, it gives enterprises a repeatable, proven framework that turns data into value, avoids common pitfalls, and delivers measurable business outcomes.

As adoption accelerates — with nearly 8 out of 10 organizations using AI in business functions — the gap between strategy and execution becomes the defining challenge for digital leaders. An integrated platform approach bridges that gap, turning AI from hype into hard results that scale across the enterprise.