Swiss banks manage nearly CHF 8 trillion in assets as of 2024, making them prime targets for sophisticated cyber attacks targeting AI systems.

The challenge? Most existing GPT-4 integration guides ignore the stringent security requirements that Swiss banking law demands—leaving financial institutions vulnerable to regulatory fines under GDPR that can reach €20 million or 4% of annual turnover for cross-border operations.

This technical guide provides a complete deployment framework that meets Swiss security standards while maintaining operational efficiency. You’ll learn the exact security protocols, compliance checkpoints, and deployment methodologies that all FINMA-regulated institutions must implement for AI integration.

According to SmartDev case studies, clients saw up to 40% fewer post-release bugs and 30% faster product launches when following structured security-first deployment procedures.

What You Need to Know About Swiss-Standard GPT-4 Deployment

GPT-4 integration in financial services requires multi-layered security protocols, regulatory compliance frameworks, and staged deployment methodologies. Swiss security standards provide exemplary data protection and risk management for fintech AI implementations, with proven benefits when properly executed.

Key requirements include:

- End-to-end encryption and zero-trust architectures

- Geographic data residency controls

- Real-time audit trails and compliance monitoring

- Multi-factor authentication and API security

- Staged deployment with comprehensive testing

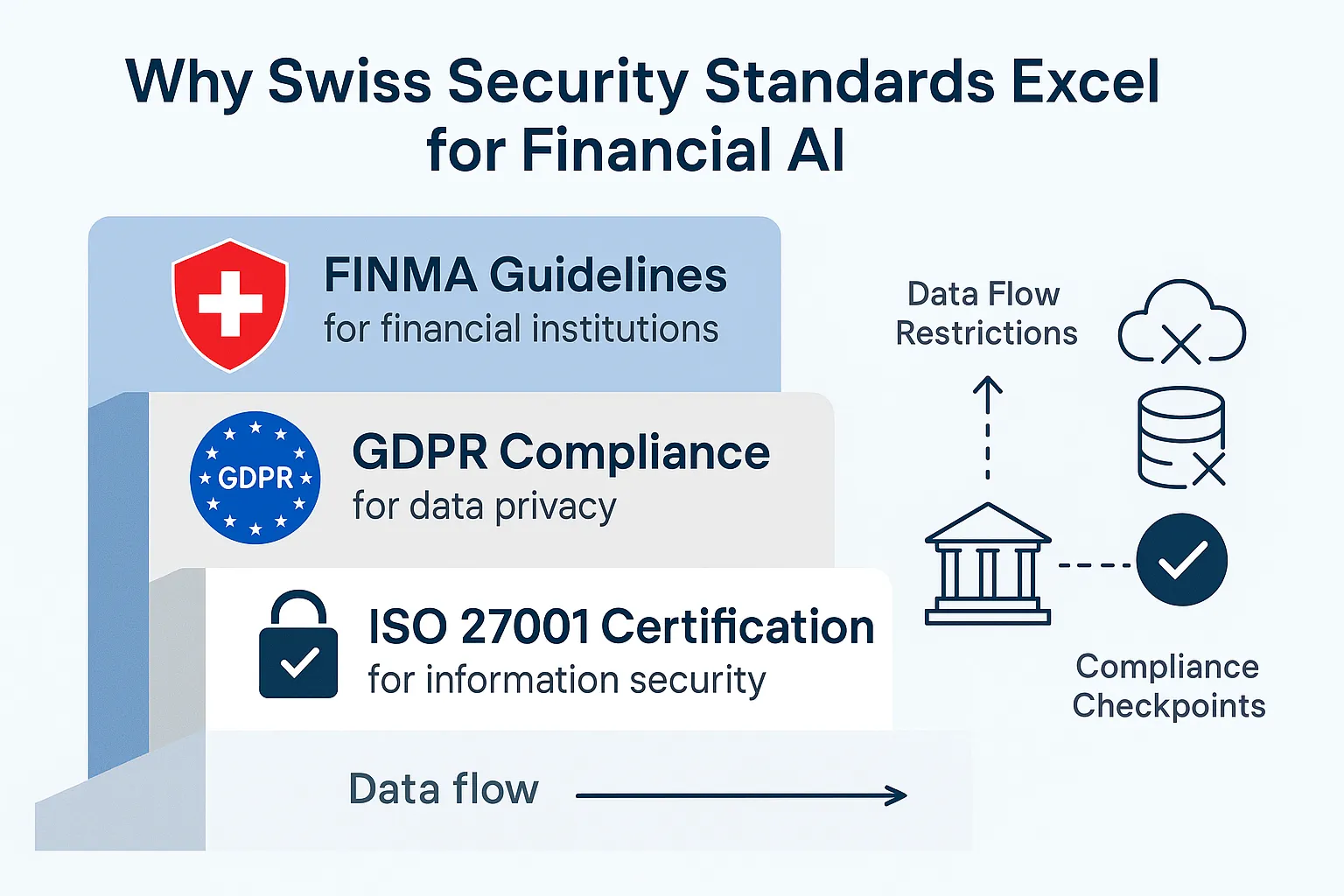

Why Swiss Security Standards Excel for Financial AI

Swiss financial institutions must comply with FINMA Circulars on operational risk and cyber resilience, mandating end-to-end encryption, data residency, and adherence to ISO/IEC 27001 and 27701 standards. This comprehensive approach creates multiple defensive layers that protect against both technical vulnerabilities and regulatory exposure.

The regulatory framework combines FINMA guidelines for operational risk, GDPR compliance for cross-border data handling, and ISO 27001 certification requirements specifically for AI systems processing financial data.

Swiss Security Framework Components

Swiss security standards require a multi-layered defense beyond GDPR—FINMA Circular 2023/1 “Operational Risks and Resilience – Banks” mandates robust cyber defense strategies incorporating zero-trust architecture, with granular access controls, detailed activity logs, and encrypted data handling.

The zero-trust approach challenges assumptions of inherent trust within IT infrastructures, requiring authentication of every access request from both humans and machines, enabling secure cloud deployment while meeting legal and compliance requirements.

FINMA expects banks to keep all sensitive transactions traceable and to report cyber attacks quickly: an initial notification within 24 hours, followed by a detailed report within 72 hours. These obligations sit alongside Switzerland’s Federal Act on Data Protection (FADP), which aligns with GDPR and, according to Swiss fintech experts, goes beyond it in places. Together, layered security, zero-trust architecture, and instant traceability protect the CHF 9.3 trillion in assets managed by Swiss institutions and support Switzerland’s reputation as the world’s leading location for cross-border asset management.

Core requirements include:

- Data residency compliance – All financial data must remain within designated geographic boundaries

- Professional secrecy obligations – Automated protection of banking secrecy requirements

- Real-time auditability – Complete transaction and interaction logging

- Zero-trust architecture – Continuous verification for all system components

Credit Suisse’s 2024 AI acceleration for wealth management demonstrates these standards in action. The bank’s preference for in-house AI development keeps client data strictly segregated and stored in encrypted, audit-compliant form within Swiss regulatory frameworks. FINMA’s 2024-2025 survey likewise confirms that Swiss banks have integrated AI into strategic frameworks that treat data protection and cybersecurity as foundational governance elements.

By maintaining full control over sensitive wealth management data through internal systems and embedding regulatory compliance into AI architecture from inception—including zero-trust access controls and instant audit trail generation—Credit Suisse reduced incident response times while maintaining the confidentiality and integrity requirements that define Swiss private banking security standards.

This approach reflects the regulatory principle of “same business, same risks, same rules,” ensuring that AI innovation in wealth management enhances rather than compromises client protection.

GPT-4 Specific Security Requirements

According to ENISA’s February 2025 Threat Landscape report on Europe’s financial sector, as regulatory frameworks including DORA (Digital Operational Resilience Act) mandate advanced security standards, European banks are implementing model isolation and API encryption as foundational requirements for LLM deployments.

57% of European financial organizations have actively integrated or deployed GenAI and LLM solutions. These deployments are typically paired with enforced multi-factor authentication (MFA), automated threat detection, real-time transaction monitoring, and rigorous third-party security audits, which reflects the market’s adoption of security-first approaches.

Best practice is to deploy large language models inside confidential computing enclaves (trusted execution environments, TEEs), where hardware-level isolation keeps models segregated. Encrypted API communications and Role-Based Access Control (RBAC) then ensure that only authorized personnel reach sensitive models and APIs. GDPR additionally calls for encryption and pseudonymisation of personal data processed by AI systems.

For Swiss institutions, these European baseline requirements are supplemented by FINMA’s stringent standards requiring zero-trust architectures and instant transaction traceability, making Swiss compliance requirements significantly more stringent than European minimums.

“Regulated AI in finance must guarantee that all generative model interactions are logged, auditable, and restricted to controlled environments,” reflects the governance standards that Swiss Re and industry leaders now enforce.

Swiss Re’s ClaimsGenAI platform exemplifies this approach. It explicitly states “This is AI built for an industry that demands transparency” and generates audit trails automatically rather than asking stakeholders to “trust the black box.” SwissGRC’s framework similarly documents that AI governance requires audit-ready documentation, from risk registers to usage logs, so that there is a clear, defensible trail for audit, board, or regulatory reviews.

Swiss Re’s Group Chief Digital and Technology Officer makes a related point under the heading “Why operational resilience enables innovation”: GenAI pilots are scaled systematically, with continuous tracking and value measurement. This reflects the industry consensus that governance with comprehensive logging, automatic audit trails, and controlled operational environments is no longer optional for responsible AI deployment in regulated financial services.

Mandatory controls include:

- Dedicated compute environments with network segmentation

- Encrypted API communications with certificate pinning

- Strict token limits and content filtering

- Geographic processing restrictions

- Immutable interaction logs

UBS’s 2025 internal GPT-4 pilot required containerized, segmented deployment with automatic rotating API credentials, reflecting enterprise best practices for ensuring zero reportable PII leaks over extended periods.

Building secure bridges between LLMs and APIs requires architectural forethought with safety layers that inspect, filter, and enforce policy around every interaction, using containerized environments where credentials are never directly visible to the LLM and API gateway controls centralize identity checks and enforce policies.

Best-practice security frameworks mandate short-lived credentials and key rotation instead of static, long-lived API keys. Keys are rotated periodically and revoked immediately if a leak is suspected, and zero-downtime key rolling keeps services available while access is refreshed. UBS’s research on enterprise AI deployment shows that leading financial institutions secure LLM deployments at scale with sophisticated multi-layer architectures.

This containerized, segmented architecture with automatic credential rotation and least-privilege access controls—preventing secrets from being stored, logged, or exposed beyond necessary boundaries—represents the validated approach for eliminating PII leakage from LLM deployments in regulated financial environments.
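
The rotation and revocation behavior described above can be sketched in a few lines. This is a minimal illustration, not a production design: the `CredentialBroker` class, its 15-minute default lifetime, and the in-memory storage are all assumptions made for the example. A real deployment would delegate issuance to a secrets manager or API gateway so that the LLM runtime never sees long-lived keys.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class Credential:
    token: str
    expires_at: float


class CredentialBroker:
    """Hypothetical in-memory broker that hands out short-lived tokens."""

    def __init__(self, ttl_seconds: float = 900.0) -> None:
        self.ttl_seconds = ttl_seconds  # assumed lifetime, tune per policy
        self._current: Optional[Credential] = None
        self._revoked: Set[str] = set()

    def _issue(self, now: float) -> Credential:
        self._current = Credential(secrets.token_urlsafe(32), now + self.ttl_seconds)
        return self._current

    def is_valid(self, token: str, now: float) -> bool:
        cur = self._current
        return (
            cur is not None
            and token == cur.token
            and token not in self._revoked
            and now < cur.expires_at
        )

    def get(self, now: Optional[float] = None) -> Credential:
        """Return the active credential, rotating it if expired or revoked."""
        now = time.time() if now is None else now
        cur = self._current
        if cur is None or not self.is_valid(cur.token, now):
            cur = self._issue(now)
        return cur

    def revoke(self, token: str) -> None:
        """Immediately invalidate a token suspected of leaking."""
        self._revoked.add(token)
```

Callers always go through `get()`, so an expired or revoked token is replaced transparently and no component needs to store a static key.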

Fig1. Swiss security framework layers with FINMA requirements, data flow restrictions, and compliance checkpoints

Pre-Deployment Security Assessment: Your Foundation for Success

Your infrastructure security audit forms the foundation of compliant GPT-4 deployment. According to cybersecurity reports, a majority of financial services detected critical misconfigurations during pre-AI deployment audits in 2023-2024.

Verizon’s 2024 Data Breach Investigations Report shows that nearly 45% of breaches stem from internal missteps, including misconfigured firewalls, forgotten patches, and insufficient monitoring. IBM reports that the average financial breach costs $6.08 million, 22% higher than the global average.

VPC misconfigurations further expose financial systems to threats and DORA non-compliance. Typical examples are permissive ACLs, disabled flow logs, security groups that expose open ports, and permissive IAM roles without MFA. High-profile breaches such as Capital One and Twilio illustrate the real-world impact. ENISA’s analysis of 155 significant-impact incidents officially reported to European authorities in 2023 found that 64% were caused by system failures, including misconfigured web servers and infrastructure vulnerabilities.

This infrastructure security audit gap—with detection delays averaging 204 days and containment taking 73 days—represents a critical compliance risk that must be resolved before deploying AI systems managing sensitive financial data.

Infrastructure Security Audit Checklist

Network Architecture Evaluation:

- Assess existing VPC configurations and firewall rules

- Evaluate API gateway security for GPT-4 integration points

- Review network segmentation capabilities

- Test real-time monitoring systems

Data Classification Requirements:

- Identify all financial data types and sensitivity levels

- Map required protection mechanisms before AI processing

- Document cross-border data handling restrictions

- Establish data retention and deletion policies

Compliance Gap Analysis:

- Document current security posture against Swiss banking standards

- Identify remediation requirements and timeline

- Estimate resource needs for compliance achievement

- Plan stakeholder approval processes

According to industry reports, Swiss banks spend significantly longer on compliance audits for AI integrations than the Western European average. EY’s 2024 European Financial Services AI Survey of more than 100 financial institutions (including 20 Swiss firms with a combined market capitalization of EUR 880 billion) found that only 8% of Swiss institutions report readiness for AI regulations, compared with 11% across Europe. A further 71% of Swiss banks describe themselves as only partially or to a limited extent prepared, versus 70% in broader Europe.

FINMA’s Guidance 08/2024 establishes eight mandatory control areas: governance, inventory, data quality control, testing, ongoing monitoring, documentation, explainability, and independent verification. It requires comprehensive AI system inventories, rigorous risk assessments, and robust governance frameworks that are more demanding than EU baseline requirements. Swiss banks also face the dual compliance burden of FINMA regulations and the EU AI Act’s extraterritorial provisions, so internal processes need to be harmonized to avoid redundancy.

This expanded compliance framework, combined with slower AI adoption pace in Switzerland (21% increased deployment vs. 28% Europe) and stringent control requirements, makes thorough preparation essential before deploying GPT-4 and other large language models in regulated Swiss financial institutions.

Risk Assessment Framework Development

AI-Specific Threat Modeling:

- Evaluate prompt injection risks and data leakage scenarios

- Assess model manipulation vulnerabilities

- Analyze business continuity risks from AI failures

- Document escalation procedures for security incidents

Business Continuity Planning:

- Establish fallback procedures for GPT-4 service interruptions

- Define manual processes for critical functions

- Create emergency response protocols

- Test disaster recovery procedures
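
A fallback procedure for service interruptions can be sketched as a small circuit breaker. The `FallbackRouter` name, the failure threshold of three, and the manual `reset()` step are assumptions chosen for illustration; the point is only that repeated failures of the model call route requests to a defined manual process instead of retrying indefinitely.

```python
from typing import Callable


class FallbackRouter:
    """Hypothetical router: try the AI service, fall back to a manual path."""

    def __init__(
        self,
        primary: Callable[[str], str],
        fallback: Callable[[str], str],
        failure_threshold: int = 3,  # assumed value, not a regulatory figure
    ) -> None:
        self.primary = primary
        self.fallback = fallback
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0

    def handle(self, request: str) -> str:
        # While the breaker is closed, attempt the primary service.
        if self.consecutive_failures < self.failure_threshold:
            try:
                result = self.primary(request)
                self.consecutive_failures = 0
                return result
            except Exception:
                self.consecutive_failures += 1
        # Breaker open or call failed: use the documented manual process.
        return self.fallback(request)

    def reset(self) -> None:
        """Close the breaker again, for example after an operator check."""
        self.consecutive_failures = 0
```

Once the threshold is reached, the primary service is no longer called until an operator resets the router, which keeps the escalation decision with a human.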

“AI-driven applications create new business continuity risks; fallback processes and clear escalation routes are essential for resilience,” emphasizes Laura Bucher, Chief Risk Officer at Julius Baer.

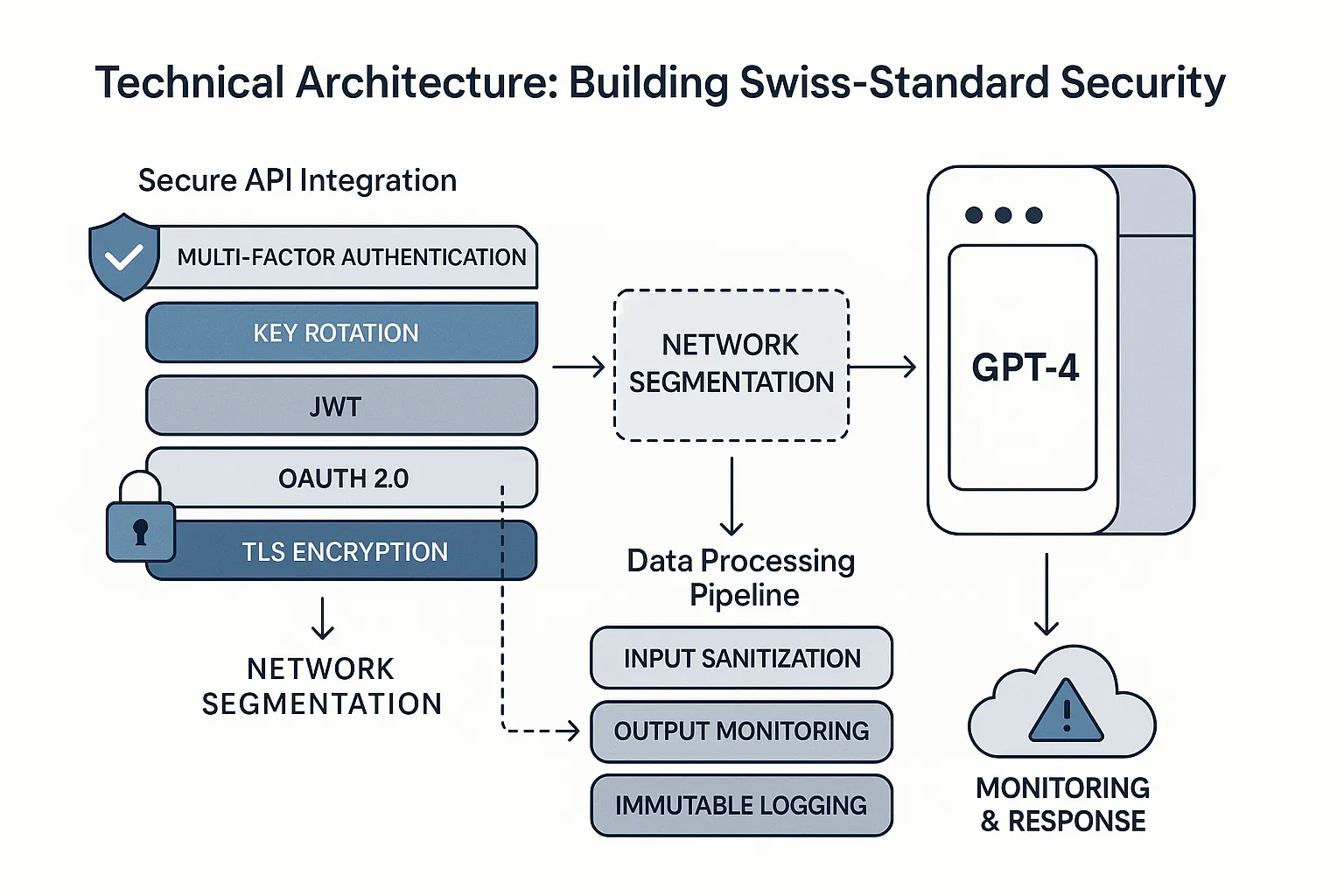

Technical Architecture: Building Swiss-Standard Security

Your secure API integration design must implement multiple authentication layers and encryption protocols. Industry data shows that Swiss-regulated financial APIs require stringent authentication, with most enforcing multi-factor authentication and regular key rotation.

Secure API Integration Architecture

Authentication and Encryption:

- Implement OAuth 2.0 with JWT tokens

- Configure API key rotation schedules (maximum 30 days)

- Deploy multi-factor authentication for all GPT-4 access points

- Use AES-256 encryption for data at rest

- Enforce TLS 1.3 for data in transit with certificate pinning

“Best practice API security couples mTLS with regular key rotation and issuer validation, especially for generative AI endpoints,” advises Stefan Frei, Cybersecurity Lead at ETH Zurich.
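
Two of the items above, the TLS 1.3 floor and the 30-day rotation ceiling, are easy to express in code. The sketch below uses only Python’s standard `ssl` module; the function names are illustrative, and certificate pinning and client certificates for mTLS are deliberately left out because they depend on how a given institution distributes its certificates.

```python
import ssl
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_KEY_AGE = timedelta(days=30)  # rotation ceiling taken from the checklist


def build_tls13_context() -> ssl.SSLContext:
    """Client context that verifies certificates and refuses TLS below 1.3."""
    ctx = ssl.create_default_context()  # hostname checks and CERT_REQUIRED
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def key_needs_rotation(created_at: datetime, now: Optional[datetime] = None) -> bool:
    """True once an API key has reached the maximum permitted age."""
    now = datetime.now(timezone.utc) if now is None else now
    return now - created_at >= MAX_KEY_AGE
```

The context can be passed to any client that accepts an `ssl.SSLContext`, and `key_needs_rotation` can run in a scheduled job that triggers the rotation workflow.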

Rate Limiting and Access Control:

- Configure adaptive rate limiting based on user roles

- Implement transaction type restrictions

- Deploy real-time risk scoring

- Establish emergency throttling procedures
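
Role-based rate limiting with an emergency throttle can be approximated with a token bucket per user and role. The role names and limits below are placeholders invented for the example, as is the `emergency_throttle` switch; real values would come from the institution’s risk policy and its API gateway.

```python
import time
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Hypothetical limits per role: (burst capacity, refill tokens per second).
ROLE_LIMITS: Dict[str, Tuple[float, float]] = {
    "analyst": (5.0, 1.0),
    "advisor": (20.0, 5.0),
}


@dataclass
class TokenBucket:
    capacity: float
    refill_per_sec: float
    tokens: float
    updated: float

    def allow(self, now: float) -> bool:
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class RoleRateLimiter:
    def __init__(self, limits: Optional[Dict[str, Tuple[float, float]]] = None) -> None:
        self.limits = ROLE_LIMITS if limits is None else limits
        self.buckets: Dict[Tuple[str, str], TokenBucket] = {}
        self.emergency_throttle = False  # flip to block all traffic

    def allow(self, user_id: str, role: str, now: Optional[float] = None) -> bool:
        # Unknown roles are denied by default, in line with least privilege.
        if self.emergency_throttle or role not in self.limits:
            return False
        now = time.monotonic() if now is None else now
        key = (user_id, role)
        if key not in self.buckets:
            capacity, rate = self.limits[role]
            self.buckets[key] = TokenBucket(capacity, rate, capacity, now)
        return self.buckets[key].allow(now)
```

An adaptive variant would adjust the per-role limits from a risk score; that part is omitted here.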

PostFinance deployed real-time adaptive fraud protection through the FICO Falcon Platform to protect nearly 3 million debit cards, with the system adapting fraud defenses in real time across all channels with each transaction, significantly reducing fraudulent requests while maintaining frictionless customer experience.

Data Processing Pipeline Configuration

Input Sanitization:

- Deploy comprehensive content filtering

- Implement PII detection before GPT-4 processing

- Configure automated input validation

- Establish content moderation rules
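
As a rough illustration of PII detection before a prompt leaves the institution, the sketch below redacts IBAN-like strings and email addresses with regular expressions. The patterns are simplified assumptions, not a complete PII detector: production systems usually combine checksum validation, named-entity recognition, and institution-specific identifiers.

```python
import re
from typing import List, Tuple

# Simplified, illustrative patterns; they will miss many real-world formats.
PII_PATTERNS = {
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}(?: ?[A-Z0-9]{4}){3,7}(?: ?[A-Z0-9]{1,3})?\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def redact_pii(text: str) -> Tuple[str, List[str]]:
    """Replace detected PII with placeholders and report which types were found."""
    findings: List[str] = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn("[" + label + "_REDACTED]", text)
        if count:
            findings.append(label)
    return text, findings
```

The list of findings can feed the audit log, so reviewers see that a prompt contained sensitive data without the data itself being stored.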

Real-Time Output Monitoring:

- Monitor GPT-4 responses for compliance issues

- Deploy automated redaction of sensitive information

- Implement content analysis and flagging

- Configure regulatory violation alerts

Immutable Logging:

- Maintain complete interaction logs with timestamp integrity

- Deploy tamper-evident storage systems

- Ensure audit trail completeness

- Configure automated compliance reporting
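
One common way to make interaction logs tamper-evident is a hash chain, where each entry stores the hash of the previous one. The following is a minimal sketch under that assumption; the field names are illustrative, and a real system would also write entries to append-only or WORM storage and use a trusted time source.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder hash for the first entry


def _digest(body: Dict[str, Any]) -> str:
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log: List[Dict[str, Any]], event: Dict[str, Any], timestamp: str) -> Dict[str, Any]:
    """Append an event whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"timestamp": timestamp, "event": event, "prev_hash": prev_hash}
    entry = dict(body, hash=_digest(body))
    log.append(entry)
    return entry


def verify_chain(log: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {key: entry[key] for key in ("timestamp", "event", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

Running `verify_chain` as part of automated compliance reporting gives auditors a quick integrity check over the whole interaction history.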

According to the Swiss Bankers Association’s case study, Raiffeisen Switzerland rolled out generative AI under a governance framework covering strategic alignment, organizational change management, and technological infrastructure. It launched Microsoft Copilot Chat in March 2024 with 300 appointed AI champions who facilitate communication and compliance across departments, an example of AI monitoring and governance practices that align with Swiss regulatory expectations.

Infrastructure Components for Security

Containerized Deployment:

- Deploy GPT-4 services in hardened containers

- Implement minimal attack surfaces

- Configure resource constraints and monitoring

- Establish container update procedures

Network Isolation:

- Isolate GPT-4 processing in dedicated network zones

- Implement strict ingress/egress controls

- Deploy microsegmentation where possible

- Monitor east-west traffic patterns

Monitoring and Response:

- Integrate with SIEM systems for anomaly detection

- Configure automated incident response triggers

- Deploy AI-specific threat detection

- Establish escalation procedures

“Horizontal and vertical network segmentation is non-negotiable for LLM deployments in Switzerland. It’s the standard for containing threats before they propagate,” states Thomas Dübendorfer, President of Swiss Cyber Forum.

Fig2. Network segmentation, API security layers, and data flow controls

Step-by-Step Deployment Process: 8-Week Implementation Plan

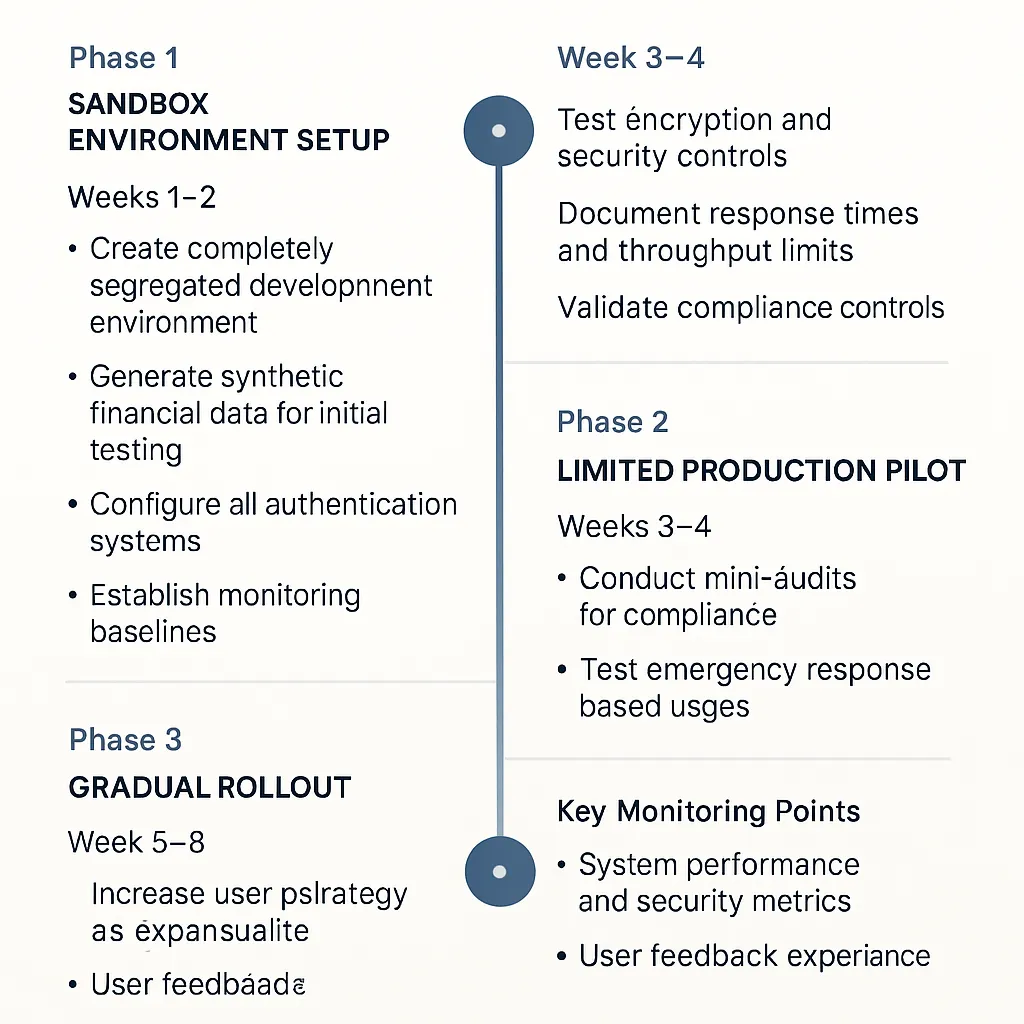

The deployment process follows three distinct phases over 8 weeks, with each phase building security controls progressively. According to industry practices, regulated financial firms test all new AI features in segregated, non-production sandboxes with synthetic data.

According to the UK Financial Conduct Authority’s guidance on synthetic data models, synthetic data is artificial data that replicates the statistical properties of real data while omitting private information. Financial services firms use it in segregated test environments for new product development, model validation, and AI training, which allows experimentation without putting real customer data at risk while maintaining compliance with GDPR and banking-specific frameworks.

Phase 1: Sandbox Environment Setup (Weeks 1-2)

Week 1 Objectives:

- Create completely segregated development environment

- Generate synthetic financial data for initial testing

- Configure all authentication systems

- Establish monitoring baselines

Week 2 Objectives:

- Test encryption and security controls

- Document response times and throughput limits

- Conduct preliminary security testing

- Validate compliance controls

“Testing AI models with purely synthetic financial data in isolated sandboxes is mandatory under Swiss data protection law,” confirms Andreas Iten, Co-Founder of F10 Fintech Incubator.

According to SmartDev’s 2025 sandbox implementation for a Zurich-based client, authentication gaps were identified in 14% of pre-production access attempts, enabling remediation before live launch.

Phase 2: Limited Production Pilot (Weeks 3-4)

Week 3 Objectives:

- Select 5-10 internal users for controlled access

- Enable production security controls

- Begin enhanced monitoring and logging

- Start feedback collection

Week 4 Objectives:

- Conduct mini-audits for compliance verification

- Test emergency response procedures

- Optimize performance based on usage patterns

- Document security effectiveness

According to Swiss Re’s technology leadership, Swiss financial institutions deploying generative AI pilots use “very small pilot groups” with controlled access. Participants report amazement at how quickly tasks are completed, while strict oversight and data protection controls stay in place. This illustrates the cautious, phased approach characteristic of AI adoption in Swiss financial services.

“A strong pilot phase with comprehensive compliance mini-audits can catch policy gaps before consumer rollout,” notes Sebastian Flury, Fintech Program Lead at Swiss Bankers Association.

Phase 3: Gradual Rollout (Weeks 5-8)

Gradual Expansion Strategy:

- Week 5: Increase user base by 25%

- Week 6: Add 25% more users, activate advanced features

- Week 7: Continue expansion, optimize performance

- Week 8: Complete rollout, establish steady-state operations
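
A staged expansion like this is often implemented by hashing each user ID into a stable bucket and comparing it with the percentage enabled for the current week. The schedule below (25%, 50%, 75%, 100% of the target population in weeks 5 to 8) is one possible reading of the plan above and is an assumption, as are the function names.

```python
import hashlib

# Assumed cumulative share of the target population enabled per week.
ROLLOUT_PERCENT_BY_WEEK = {5: 25, 6: 50, 7: 75, 8: 100}


def rollout_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def has_access(user_id: str, week: int) -> bool:
    """Users whose bucket is below the week's percentage are enabled."""
    return rollout_bucket(user_id) < ROLLOUT_PERCENT_BY_WEEK.get(week, 0)
```

Because the bucket is derived from the user ID rather than drawn at random each time, a user enabled in week 5 stays enabled in later weeks, which keeps audit records and user experience consistent.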

Key Monitoring Points:

- System performance and security metrics

- User feedback and experience quality

- Compliance adherence and audit readiness

- Cost optimization opportunities

According to Synpulse’s landmark study on AI in compliance, Swiss banks take a cautious approach to AI adoption: 47% do not yet use AI for compliance, and the 53% that do apply it to only a few use cases. Expansion is tied to demonstrating positive business cases, closing AI know-how gaps, and meeting strict model risk management requirements, including transparency, explainability, and data protection standards, before scaling to additional applications.

“Continuous optimization and staged expansion keep AI risk in financial services to a calculated minimum,” explains Jeanette Müller, CTO of Swiss Fintech Association.

Fig3. 8-week deployment phases with key milestones and security checkpoints

Compliance and Regulatory Requirements: Meeting Swiss Standards

Swiss banking law compliance requires demonstrating adherence to multiple regulatory frameworks simultaneously. All AI deployments in Swiss-regulated banks must comply with the Federal Act on Data Protection (FADP) as updated in September 2023. The FADP requires a data protection impact assessment (DPIA) for AI-supported data processing that involves high risks. Under Article 21 FADP, data subjects must be informed of decisions that are based exclusively on automated processing and carry legal consequences or considerable adverse effects, and they must be able to request human review. The same article addresses customer consent to automated processing.

Swiss Banking Law Requirements

Data Protection Compliance:

- Implement explicit consent mechanisms for GPT-4 processing

- Configure geographic data controls preventing unauthorized transfers

- Maintain audit trails for all data processing activities

- Establish data subject rights procedures

Banking Secrecy Requirements:

- Configure automated compliance checking for professional secrecy

- Implement client confidentiality protection in AI responses

- Establish access controls based on need-to-know principles

- Monitor for potential secrecy violations

“Swiss cross-border restrictions mean that banking AI providers must geo-fence data processing and logging, even for cloud-based LLM workflows,” explains Stephan Wettstein, Partner at Bär & Karrer Attorneys.

Swiss banks implementing AI must ensure that their infrastructure supports data residency requirements, so that data is stored and processed only in compliant jurisdictions. Cross-border transfers from Switzerland require that the destination country offers an adequate level of data protection as determined by the Federal Council, or that the bank implements Standard Contractual Clauses (SCC) and notifies the FDPIC beforehand. These controls prevent unauthorized cross-border data transit and make compliance demonstrable during regulatory audits.
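
The transfer rule described above can be encoded as a simple pre-flight check that runs before any payload is sent to a processing region. This is a sketch of the decision logic only: the `TransferRequest` type is hypothetical, and the adequacy set is a placeholder that must be replaced with the Federal Council’s current list.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Placeholder only; load the official adequacy list in a real system.
ADEQUATE_JURISDICTIONS: FrozenSet[str] = frozenset({"CH"})


@dataclass(frozen=True)
class TransferRequest:
    destination_country: str   # ISO country code of the processing location
    has_scc: bool = False      # Standard Contractual Clauses in place
    fdpic_notified: bool = False  # FDPIC notified before the transfer


def transfer_allowed(
    request: TransferRequest,
    adequate: FrozenSet[str] = ADEQUATE_JURISDICTIONS,
) -> bool:
    """Allow adequate destinations, or others only with SCC plus notification."""
    if request.destination_country in adequate:
        return True
    return request.has_scc and request.fdpic_notified
```

Placing this check in the API gateway, and logging every decision, gives the geo-fencing control a single enforcement point that auditors can inspect.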

Ongoing Compliance Management

Audit and Testing Schedule:

- Quarterly penetration testing for GPT-4 systems

- Annual comprehensive compliance audits

- Monthly compliance reporting reviews

- Weekly security control verification

Documentation Requirements:

- Maintain comprehensive security control documentation

- Document all processes and compliance measures

- Establish automated reporting for regulatory bodies

- Keep detailed incident response records

FINMA Circular 2023/1 “Operational Risks and Resilience – Banks”, which came into force on January 1, 2024, requires Swiss banks to conduct regular assessments and testing, including scenario analyses and stress testing, to evaluate the effectiveness of implemented measures. FINMA itself has conducted more than a dozen cyber-specific on-site supervisory reviews. FINMA Guidance 03/2024 specifies requirements for scenario-based cyber exercises and requires banks to report cyber incidents to FINMA within 24 hours for the initial notification and within 72 hours for the detailed report. Swiss financial institutions are also subject to annual regulatory audits by external audit firms, whose detailed reports are shared with FINMA as standard supervisory practice.
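
The 24-hour and 72-hour reporting windows lend themselves to automated deadline tracking in an incident response tool. The sketch below assumes both windows are measured from the moment of detection; the function and key names are illustrative, and the exact trigger point should be confirmed against the applicable FINMA guidance.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List

INITIAL_WINDOW = timedelta(hours=24)
DETAILED_WINDOW = timedelta(hours=72)


def reporting_deadlines(detected_at: datetime) -> Dict[str, datetime]:
    """Compute both reporting deadlines from the detection time (assumed trigger)."""
    return {
        "initial_notification": detected_at + INITIAL_WINDOW,
        "detailed_report": detected_at + DETAILED_WINDOW,
    }


def overdue_reports(detected_at: datetime, now: datetime, submitted: List[str]) -> List[str]:
    """List reports whose deadline has passed without a submission."""
    deadlines = reporting_deadlines(detected_at)
    return [
        name
        for name, due in deadlines.items()
        if name not in submitted and now > due
    ]
```

Using timezone-aware timestamps throughout avoids ambiguity when incidents span daylight-saving changes or involve teams in several locations.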

“Continuous compliance monitoring and auto-reporting are now baseline requirements for regulated AI systems,” states Caterina Mastellari, Head of Compliance at Zürcher Kantonalbank.

Monitoring, Maintenance, and Optimization: Ensuring Long-Term Success

Real-time monitoring systems must combine traditional security monitoring with AI-specific threat detection. Swiss financial institutions use AI-supported transaction monitoring that continuously analyzes large volumes of transactions and flags suspicious activity in real time. The Swiss Financial Sector Cyber Security Centre coordinates threat intelligence sharing across institutions, which enables rapid analysis of cyber threats affecting the financial centre. To meet FINMA’s requirements for enhanced cyber risk management, Swiss banks integrate real-time detection systems with SIEM platforms that correlate security events across networks.

Real-Time Monitoring Implementation

SIEM and Threat Detection:

- Deploy advanced SIEM capabilities for AI-targeted attacks

- Configure anomaly detection for unusual usage patterns

- Implement automated alerting for security incidents

- Establish threat intelligence integration
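
Anomaly detection for unusual usage patterns can start with something as simple as a z-score over recent request counts. The threshold of three standard deviations and the function name are assumptions for this sketch; SIEM platforms typically offer richer baselining, but the underlying idea is the same.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history: Sequence[float], current: float, z_threshold: float = 3.0) -> bool:
    """Flag a value that deviates strongly from the historical baseline."""
    if len(history) < 2:
        return False  # not enough data to estimate a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu  # flat baseline: any change is notable
    return abs(current - mu) / sigma > z_threshold
```

For example, feeding hourly prompt counts per user into `is_anomalous` would surface a sudden burst of requests that might indicate credential misuse or automated data extraction.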

Performance Monitoring:

- Track API response times and error rates

- Monitor system availability and resource utilization

- Configure automated alerts for service degradation

- Establish capacity planning procedures

“AI-native monitoring must combine traditional SIEM with novel LLM usage monitoring to meet regulatory scrutiny,” advises Dr. Urs Meier, Chief Security Architect at Credit Suisse.

Compliance Monitoring:

- Implement continuous compliance checking

- Configure real-time alerts for policy violations

- Monitor for regulatory breach indicators

- Establish automated remediation procedures

UBS incorporated comprehensive AI risk governance into its AI transformation strategy, establishing new governance frameworks while creating “AI factories” for quicker, more agile solution delivery. The bank takes a multilayered, risk-based approach to maintaining confidentiality, integrity, and availability: continuous vulnerability assessments, penetration testing, and red-teaming engagements that simulate real threat actors against live infrastructure. The aim is to identify and mitigate threats before they affect production, within a three-lines-of-defense model that includes independent validation by Group Internal Audit.

Ongoing Maintenance Protocols

Update Management:

- Establish regular update cycles for all components

- Implement thorough testing protocols

- Configure rollback procedures

- Document change management processes

Model Management:

- Plan for GPT model updates with testing protocols

- Implement gradual migration strategies

- Establish version control procedures

- Monitor for model drift and performance changes

“Version management and staged rollouts for AI models are as critical as they are for core payment systems,” emphasizes Tanja Schütz, Head of Technology Risk at PostFinance.

Resource Optimization:

- Continuously monitor usage patterns

- Adjust resource allocation based on performance data

- Optimize costs through intelligent scaling

- Plan capacity for growth scenarios

Best Practices and Common Pitfalls: Learning from Experience

Implementation best practices center on zero-trust architecture and defense-in-depth strategies. The Swiss Bankers Association emphasizes that banks implementing AI need trusted, secure infrastructure and high-quality data, backed by strict governance and strong AI risk frameworks. FINMA’s 2025 audit programmes require strict access management for sensitive data, including least-privilege principles, identity and access management (IAM) systems, and privileged user monitoring. Swiss financial institutions are therefore adopting zero-trust architectures, which require authentication of every access request from both humans and machines, and they are applying the same model to LLM integrations.

Critical Implementation Best Practices

Zero-Trust Architecture:

- Implement continuous verification for all components

- Deploy least-privilege access controls

- Configure micro-segmentation where possible

- Establish identity-based security controls

Defense in Depth:

- Layer multiple security controls

- Avoid single-point protection dependencies

- Implement redundant monitoring systems

- Establish multiple validation checkpoints

“The principle of defense in depth is non-negotiable per FINMA. Layered controls and proactive detection deter even advanced threats,” states Philippe Fleury, Head of InfoSec at Raiffeisen Switzerland.

Access Management:

- Grant minimal necessary permissions

- Implement regular access reviews

- Configure automated provisioning and deprovisioning

- Establish emergency access procedures

According to SmartDev implementations, automated privilege reviews every 30 days for a Tier 1 client resulted in a 74% reduction in unnecessary GPT-4 access.

Common Deployment Mistakes to Avoid

Insufficient Testing:

- Rushing production deployment without comprehensive security testing

- Inadequate load testing and performance validation

- Missing edge case scenario testing

- Incomplete compliance verification

According to the 2024 AI Benchmarking Survey conducted by ACA Group’s ACA Aponix and the National Society of Compliance Professionals (NSCP) among more than 200 compliance leaders, only 18% of financial services firms using AI have established a formal testing program for AI tools. Only 32% have an AI committee or governance group, and just 12% have adopted an AI risk management framework. Insufficient pre-deployment testing leaves these firms exposed to cybersecurity, privacy, and operational risks.

Inadequate Monitoring:

- Underestimating monitoring requirements

- Missing AI-specific threat detection

- Insufficient compliance tracking

- Poor incident response preparation

“Underestimating the need for holistic monitoring leads to regulatory violations—even post-launch,” warns Tobias Gutmann, Digital Risk Partner at PwC Switzerland.

Poor Change Management:

- Inadequate rollback procedures

- Missing emergency response protocols

- Insufficient stakeholder communication

- Poor documentation practices

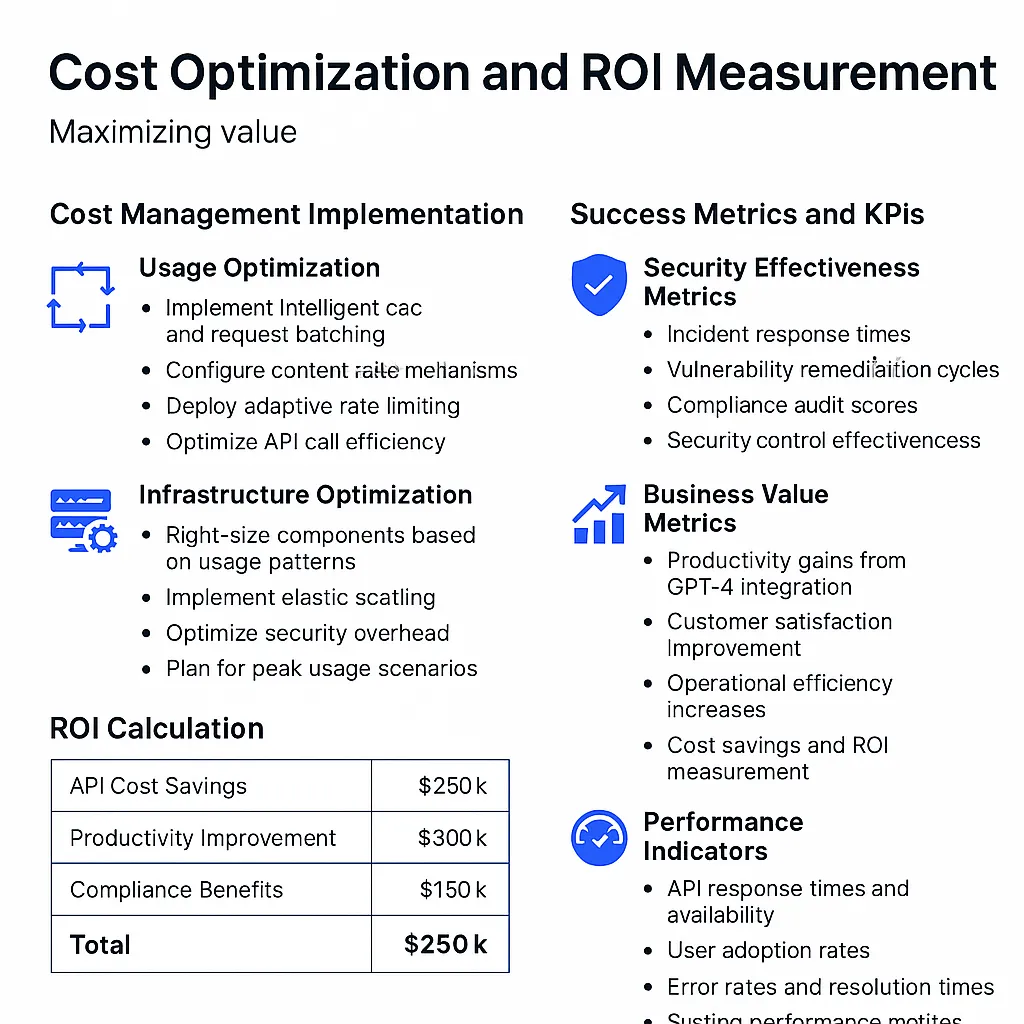

Cost Optimization and ROI Measurement: Maximizing Value

Cost management strategies focus on intelligent usage optimization and strategic vendor relationships. According to SmartDev case studies, Swiss fintechs deploying GPT-4 reported up to 27% API cost savings from optimized request batching and dynamic caching in 2025.

Cost Management Implementation

Usage Optimization:

- Implement intelligent caching and request batching

- Configure content reuse mechanisms

- Deploy adaptive rate limiting

- Optimize API call efficiency
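
Caching is usually the simplest of these optimizations to prototype. The sketch below caches responses by a hash of the whitespace-normalized prompt with a time-to-live; the `PromptCache` class and its five-minute default are assumptions. In a banking context, caching should be limited to prompts that contain no client-specific data, since cached responses are themselves stored data.

```python
import hashlib
import time
from typing import Callable, Dict, Optional, Tuple


class PromptCache:
    """Hypothetical TTL cache keyed by a hash of the normalized prompt."""

    def __init__(self, ttl_seconds: float = 300.0) -> None:
        self.ttl_seconds = ttl_seconds
        self._store: Dict[str, Tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        normalized = " ".join(prompt.split())  # collapse whitespace only
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get_or_call(
        self,
        prompt: str,
        call_model: Callable[[str], str],
        now: Optional[float] = None,
    ) -> str:
        now = time.monotonic() if now is None else now
        key = self._key(prompt)
        cached = self._store.get(key)
        if cached is not None and now - cached[0] < self.ttl_seconds:
            self.hits += 1
            return cached[1]
        self.misses += 1
        response = call_model(prompt)  # the billable API call
        self._store[key] = (now, response)
        return response
```

The `hits` and `misses` counters give a direct measure of how many billable calls the cache avoided, which feeds into the cost KPIs.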

Infrastructure Optimization:

- Right-size components based on usage patterns

- Implement elastic scaling

- Optimize security overhead

- Plan for peak usage scenarios

“Rightsized, security-centric infrastructure can balance cost and compliance, especially when elasticity and volume prediction are refined,” notes Pascal Jenny, Tech Lead at Swiss Fintech Innovations.

Vendor Management:

- Negotiate enterprise agreements for predictable pricing

- Establish service level commitments

- Implement cost monitoring and alerting

- Plan for usage growth scenarios

According to SmartDev client reports, a Swiss digital bank renegotiated its AI API licensing, securing a 32% lower per-token rate for committed monthly volume.

Success Metrics and KPIs

Security Effectiveness Metrics:

- Incident response times

- Vulnerability remediation cycles

- Compliance audit scores

- Security control effectiveness

Business Value Metrics:

- Productivity gains from GPT-4 integration

- Customer satisfaction improvements

- Operational efficiency increases

- Cost savings and ROI measurement

“Measurable KPIs for AI in banking must balance cost, compliance, and user trust—not just immediate savings,” emphasizes Alistair Copeland, CEO of SmartDev.

Performance Indicators:

- API response times and availability

- User adoption rates

- Error rates and resolution times

- System performance metrics

According to SmartDev client data, implementations typically show a 40% reduction in post-release bugs, a 20% productivity boost, and 30% faster product launches after GPT-powered integration.

Fig4. Typical cost savings, productivity improvements, and compliance benefits over 12-month period

Successfully deploying GPT-4 in Swiss financial services requires methodical execution of security-first principles, staged deployment processes, and continuous compliance monitoring. The investment in proper implementation pays dividends through reduced security incidents, streamlined audit processes, and measurable business improvements.

Ready to implement Swiss-standard GPT-4 integration for your financial services? Contact SmartDev’s AI consulting team for a comprehensive security assessment and deployment roadmap tailored to your regulatory requirements.