Artificial Intelligence is sparking a massive wave of infrastructure investment worldwide. Tech giants have earmarked hundreds of billions of dollars to build out AI-specific computing capacity, data centers, and networks.

In fact, global spending on AI data centers alone is projected to exceed $1.4 trillion by 2027 (Economist Impact, 2025). This boom is driven by the critical need for specialized AI infrastructure – the hardware, software, and facilities that power modern AI applications. Major cloud providers and semiconductor firms are racing to deliver the computing power needed for AI, while governments and investors are treating AI infrastructure as the next great asset class.

However, alongside the growth opportunities come significant challenges: high capital costs, regulatory hurdles, and geopolitical risks.

This article provides a comprehensive look at AI infrastructure investment – from its core components and key players to market trends, strategies, risks, and outlook.

1. Introduction

AI infrastructure refers to the combination of hardware and software designed specifically to support AI workloads (such as machine learning and deep learning). This includes specialized chips (like GPUs and TPUs), high-performance servers and data centers, fast storage for big data, and the networking that connects these elements.

Investing in AI infrastructure can involve building or funding these physical and digital resources – for example, constructing cutting-edge data centers or financing the development of advanced AI chips.

As you explore the foundations and future of AI infrastructure investment, it’s clear that robust infrastructure is only the starting point for real-world innovation. To see how organizations are translating these advancements into practical solutions, discover how AI-driven software development can turn cutting-edge infrastructure into measurable business value.

Why is AI infrastructure critical for AI advancement?

Modern AI models, especially deep learning and large language models, are incredibly data- and computation-hungry. Traditional IT systems cannot efficiently handle the “sheer amount of power needed to run AI workloads,” which is why AI projects require bespoke infrastructure. AI models’ capabilities scale with the compute and data available; as OpenAI CEO Sam Altman has observed, a model’s intelligence grows roughly with the logarithm of the resources used to train and run it, so each further gain demands exponentially greater resources.

AI infrastructure provides the high-speed processing and massive parallel computations that let companies train complex models in days instead of months. It also enables real-time AI services for consumers (from cloud AI assistants to intelligent analytics) by ensuring low latency and reliability.

How does AI infrastructure differ from traditional IT infrastructure?

The difference lies in specialization and scale. Unlike general-purpose IT systems designed for a mix of routine business applications, AI infrastructure is purpose-built for high-performance computing and handling huge datasets.

For example, AI infrastructures rely on graphics processing units (GPUs) or similar accelerators instead of standard CPUs, because GPUs can perform many calculations in parallel – a necessity for training AI models. Google’s Tensor Processing Units (TPUs) are another example: chips custom-designed for neural network operations.

Additionally, AI infrastructure leverages ultra-fast, distributed storage and networking to shuffle the enormous volumes of training data. A traditional IT setup might consist of on-premises enterprise servers and storage mainly for transactional processing, whereas an AI infrastructure might be a cluster of thousands of GPU servers in the cloud, running machine learning frameworks (TensorFlow, PyTorch) across a distributed computing platform.

In other words, AI infrastructure is optimized for heavy parallel computation, vast data handling, and specialized AI software, setting it apart from conventional IT environments.

Economic and technological impact of AI infrastructure investments

The surge in AI infrastructure spending isn’t just a tech trend – it’s poised to deliver major economic dividends. Industry leaders are betting on AI as the “next fundamental driver of economic revolution”, akin to past transformations from electricity or the internet. Some estimates suggest AI could contribute over $15 trillion to the global economy by 2030, which explains why funding is pouring in from companies, investors, and governments alike.

In 2025, four of the world’s largest tech firms (Alphabet, Amazon, Meta, and Microsoft) together planned roughly $315 billion in capital spending primarily on AI and cloud infrastructure – a clear sign of expectations for enormous future payoffs. Such investments drive technological progress by enabling more powerful AI models that can boost productivity across industries (from healthcare to finance).

For example, improved AI infrastructure is directly linked to breakthroughs like advanced language models (which require supercomputer-level resources to train).

On a macro scale, broader access to AI capabilities can spur new products, services, and even new industries. Crucially, these infrastructure investments also create positive feedback loops: cheaper and more abundant compute has been driving the cost of using a given level of AI capability down by roughly an order of magnitude each year, which in turn leads to more adoption and further economic value.
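The feedback loop above is easier to grasp with numbers. Here is a minimal sketch of how a roughly 10x annual cost decline compounds. The 10x factor comes from the claim above. The $1,000 starting cost and the function name `cost_after` are hypothetical.

```python
def cost_after(years: int, initial_cost: float, annual_factor: float = 10.0) -> float:
    """Cost of a fixed amount of AI work after `years`, assuming it falls by
    `annual_factor` every year (a hypothetical model of the trend described above)."""
    if annual_factor <= 0:
        raise ValueError("annual_factor must be positive")
    return initial_cost / annual_factor ** years


if __name__ == "__main__":
    # Hypothetical starting point: $1,000 for some fixed workload today.
    for year in range(4):
        print(f"year {year}: ${cost_after(year, 1_000.0):,.2f}")
```

Under this assumption, a workload that costs $1,000 today would cost about $1 three years later. That is the mechanism that makes new use cases economical and drives further adoption.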

2. Understanding AI Infrastructure: Core Components

Building AI capabilities requires a stack of specialized infrastructure. The core components of AI infrastructure include compute power, data centers/servers, networking connectivity, cloud platforms, and systems for model training & data storage. Each plays a distinct role in supporting AI at scale:

a) Compute Power (GPUs, TPUs, and custom AI chips)

Compute is the engine of AI. Training sophisticated AI models involves billions of matrix operations, which can overwhelm normal processors. This is why GPUs (Graphics Processing Units) have become the workhorses of AI workloads – their massively parallel architecture allows them to perform multiple calculations simultaneously, greatly accelerating model training.
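Parallel hardware helps because many operations in neural-network training are independent of each other. In a matrix multiplication, for instance, every output row can be computed without waiting for any other row. The sketch below uses plain Python and hypothetical function names. It hands each row to a separate worker to make that independence visible. Python threads do not actually speed up this arithmetic, whereas a GPU runs thousands of such independent computations at once.

```python
from concurrent.futures import ThreadPoolExecutor


def row_times_matrix(row, b):
    # One output row depends only on one row of A and all of B,
    # so rows can be computed independently (and therefore in parallel).
    cols = len(b[0])
    return [sum(row[k] * b[k][j] for k in range(len(row))) for j in range(cols)]


def matmul_parallel(a, b, workers=4):
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    # Each row of A is an independent task; map() returns results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: row_times_matrix(row, b), a))


if __name__ == "__main__":
    print(matmul_parallel([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```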

Nvidia’s GPU accelerators (like the A100 and H100) are widely used in AI servers due to their high throughput on neural network operations.

Similarly, companies have developed chips tailored for AI: Google’s TPU (Tensor Processing Unit) is built specifically for deep learning tasks, offering high efficiency for tensor computations. Other examples include FPGAs and ASICs designed for AI workloads, as well as emerging neuromorphic and quantum processors that may eventually be applied to AI. Having ample compute power, often measured in petaflops or now exaflops, is foundational. Leading AI firms invest in clusters with tens of thousands of GPUs or specialized chips to train large models (like GPT-4).
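A back-of-the-envelope calculation shows why clusters of thousands of accelerators are needed. A widely used rule of thumb estimates training compute for a dense transformer at about 6 × parameters × training tokens FLOPs. The sketch below turns that into wall-clock time. All of the following are illustrative assumptions, not figures for any specific model:

- the model size and token count;
- a per-chip throughput of 1 petaflop/s, on the order of the peak low-precision throughput of a current high-end data-center GPU;
- 40% utilization.

```python
SECONDS_PER_DAY = 86_400


def training_flops(params: float, tokens: float) -> float:
    # Rule-of-thumb estimate for dense transformer training: ~6 FLOPs per parameter per token.
    return 6.0 * params * tokens


def training_days(params, tokens, num_chips, peak_flops_per_chip, utilization):
    # Effective cluster throughput = chips x peak FLOP/s x fraction of peak actually achieved.
    effective = num_chips * peak_flops_per_chip * utilization
    return training_flops(params, tokens) / effective / SECONDS_PER_DAY


if __name__ == "__main__":
    # Illustrative assumptions: 7B parameters, 1T tokens, 1e15 FLOP/s per chip, 40% utilization.
    for chips in (100, 1_000, 10_000):
        days = training_days(7e9, 1e12, chips, 1e15, 0.4)
        print(f"{chips:>6} chips: {days:6.2f} days")
```

In this simple model, each tenfold increase in chips cuts the estimate tenfold. In practice, communication overhead usually makes real scaling somewhat less than linear.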

In essence, compute infrastructure is the “brain” of AI systems, and investment here focuses on acquiring cutting-edge chips and scaling up compute clusters.

b) Data Centers & AI Servers (Hyperscale vs. Edge Computing)

All that compute needs a home. AI data centers are the physical facilities housing racks of servers, storage, and networking gear dedicated to AI processing.

There are two broad categories: hyperscale data centers and edge data centers. Hyperscale data centers are massive, centralized facilities – often built in rural or remote locations – that can contain hundreds of thousands of processors in one place. They are designed for economies of scale, with enough power and cooling to run city-sized compute loads. For example, a hyperscale AI data center for a company like Google or Microsoft might consume over 1 GW of power (enough to power hundreds of thousands of homes).
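Simple arithmetic can check the “hundreds of thousands of homes” comparison. This sketch makes two assumptions. First, an average household uses about 10,500 kWh of electricity per year, roughly the U.S. average. Second, the facility draws its full rated power continuously. The function name is hypothetical.

```python
HOURS_PER_YEAR = 8_760


def homes_equivalent(facility_mw: float, annual_kwh_per_home: float = 10_500.0) -> float:
    """Number of average homes whose yearly consumption matches a facility
    drawing `facility_mw` megawatts around the clock (illustrative only;
    10,500 kWh/home/year is an assumed average)."""
    facility_kw = facility_mw * 1_000.0
    avg_home_kw = annual_kwh_per_home / HOURS_PER_YEAR  # ~1.2 kW average draw per home
    return facility_kw / avg_home_kw


if __name__ == "__main__":
    print(f"1 GW ~ {homes_equivalent(1_000):,.0f} homes")
```

Under these assumptions, a 1 GW campus matches the consumption of roughly 830,000 homes, consistent with the “hundreds of thousands” figure. Real facilities rarely run at full rated power around the clock, so treat this as an upper-bound comparison.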

In contrast, edge computing involves smaller data centers or server nodes distributed closer to end-users or data sources. Edge data centers might reside in urban areas, cell tower sites, or enterprise campuses – their proximity means lower latency and faster response for AI tasks that need real-time interaction. They typically have fewer servers than a hyperscale site but provide localized processing (important for IoT and latency-sensitive applications).
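Distance alone sets a floor on latency, which is why proximity matters for edge AI. Light in optical fiber travels at roughly 200,000 km/s, about two-thirds of its speed in a vacuum. Every 100 km of fiber therefore adds about 1 ms of round-trip delay before any processing, queuing or routing detours. The sketch below computes that propagation-only floor. The distances and labels are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # ~200,000 km/s in glass, expressed per millisecond


def round_trip_ms(distance_km: float) -> float:
    # Propagation delay there and back; ignores routing, queuing and compute time.
    return 2.0 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical distances: a nearby edge site vs. a remote hyperscale campus.
    for label, km in (("edge site", 20), ("regional hub", 300), ("remote campus", 1_500)):
        print(f"{label:>13}: >= {round_trip_ms(km):.1f} ms round trip")
```

Take an application with a latency budget of a few milliseconds. A site 1,500 km away is ruled out by physics alone, whereas a nearby edge node leaves almost the whole budget for inference.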

Both hyperscale and edge infrastructures are important: hyperscalers handle training of large AI models and heavy cloud services, while edge deployments enable inference and analytics to happen near the user (think of a factory floor AI system analyzing sensor data on-site).

Investors are seeing huge growth in this arena – the global hyperscale data center market is expected to grow from about $320 billion in 2023 to $1.44 trillion by 2029, and the edge data center market is growing ~10% annually as well.

This reflects how critical data center infrastructure has become for AI; whether it’s a giant AI supercomputing campus or a network of edge server hubs, capital is flowing into expanding these capabilities.

c) Networking & Connectivity (5G, Fiber Optics, Low-Latency Networks)

Fast networks are the unsung hero of AI infrastructure. AI systems rely on rapidly moving large datasets between storage and processors and often involve distributed computing across many machines. High-bandwidth, low-latency connectivity is therefore essential. This includes fiber-optic links inside and between data centers, specialized high-speed interconnects, and robust internet backbones. Examples of such interconnects are InfiniBand, largely supplied by NVIDIA, for server-to-server traffic, and NVIDIA’s NVLink for direct GPU-to-GPU communication, primarily within a server or rack.
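To see why bandwidth matters, consider how long it takes simply to move training data between storage and compute. The sketch assumes a single link with a given line rate and an efficiency factor for protocol overhead. The 100 TB dataset, the link speeds, the 80% efficiency and the function name are all hypothetical.

```python
def transfer_seconds(num_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Time to move `num_bytes` over a link of `link_gbps` gigabits per second,
    where `efficiency` in (0, 1] discounts protocol and congestion overhead."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = num_bytes * 8
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    dataset = 100e12  # hypothetical 100 TB training set
    for gbps in (10, 100, 400):
        hours = transfer_seconds(dataset, gbps, efficiency=0.8) / 3_600
        print(f"{gbps:>4} Gb/s: {hours:6.1f} h")
```

Moving from 10 Gb/s to 400 Gb/s turns a day-long copy into well under an hour. Inside a training cluster, the same arithmetic applies every time GPUs exchange data.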

The rollout of 5G wireless networks also plays a role, especially for edge AI – 5G’s ultra-low latency and high throughput enable AI applications like autonomous vehicles or augmented reality by linking devices to edge servers in near real-time. As IBM notes, networks like 5G “enable the swift and safe movement of massive amounts of data between storage and processing” for AI.

Moreover, network infrastructure includes content delivery networks and cloud networking setups that ensure AI services can reach users globally without lag. Investment in this area ranges from laying submarine fiber cables, to upgrading data center switches, to supporting emerging technologies like satellite internet and, in the future, 6G for global AI accessibility.

In summary, without fast and reliable networks, even the best AI hardware would sit idle – thus, a significant portion of AI infrastructure investment targets connectivity (for example, companies investing in private 5G networks or new fiber routes to link their data centers).

d) Cloud AI Infrastructure (AWS, Google Cloud, Microsoft Azure, Oracle)

In recent years, much of AI infrastructure has moved to the cloud. The major cloud providers – Amazon Web Services, Google Cloud, Microsoft Azure, Oracle Cloud, IBM Cloud – have built out extensive AI-focused infrastructure that clients can use on-demand.

Investing in cloud AI infrastructure means both the cloud vendors investing in their own platforms, and enterprises investing by adopting cloud solutions. Cloud providers offer specialized AI services and hardware instances (e.g., AWS’s P4d instances with 8 NVIDIA A100 GPUs, Google’s TPU pods, Azure’s ND-series GPU VMs) that allow companies to rent AI compute instead of building it all in-house. This has democratized access to AI infrastructure.

All the big providers are in an arms race here: AWS has developed custom AI chips like Inferentia (for inference) and Trainium (for training) to lower costs, Google has its TPU v5 pods and JAX ecosystem, Azure has partnered deeply with OpenAI and NVIDIA to host cutting-edge hardware, and Oracle Cloud is collaborating with NVIDIA to host OCI Superclusters of thousands of GPUs.

According to IBM, cloud platforms offer flexibility and scalability – enterprises can tap into massive AI horsepower when needed, without the upfront expense of building their own data center. At the same time, some organizations still invest in on-premises AI infrastructure for data control or performance reasons.
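The rent-versus-build choice is often framed as a break-even calculation. Owned hardware pays off once the savings on rental fees exceed its purchase price. The sketch below uses hypothetical prices, none of which are quotes from any provider:

- $200,000 for a GPU server;
- $30 per hour to rent an equivalent cloud instance;
- $5 per hour to power and operate owned hardware.

```python
import math


def breakeven_hours(purchase_cost: float, rent_per_hour: float, own_opex_per_hour: float) -> float:
    """Hours of use after which buying beats renting (hypothetical cost model).

    Each hour of use saves (rent - opex) relative to renting; buying pays off
    once those savings cover the purchase price."""
    savings_per_hour = rent_per_hour - own_opex_per_hour
    if savings_per_hour <= 0:
        return math.inf  # owning never saves money per hour, so buying never pays off
    return purchase_cost / savings_per_hour


if __name__ == "__main__":
    hours = breakeven_hours(200_000, 30.0, 5.0)
    print(f"break-even after {hours:,.0f} hours (~{hours / 8_760:.1f} years of 24/7 use)")
```

If the hardware would sit idle much of the time, the break-even point stretches out in calendar terms. That is the flexibility argument for the cloud. Steady, high-utilization workloads favor ownership.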

The bottom line is that cloud infrastructure has become a central component of AI investment strategies. Cloud companies are pouring billions into expanding data center regions and adding AI capacity around the globe, since businesses large and small will likely rely on cloud AI services for their machine learning needs.

e) AI Model Training & Storage (HPC, Distributed Computing, Data Lakes)

AI infrastructure is not only about hardware boxes and cables – it’s also about how you harness them for model training and handle the data. On the training side, modern AI often uses distributed computing: splitting training workloads across many GPUs or servers working in parallel. This requires sophisticated software and orchestration (like Kubernetes, distributed ML frameworks, etc.) and high-speed networks as mentioned. “Distributed training… involves splitting the workload of training a model across multiple devices or machines to accelerate the process and handle larger datasets”, which is crucial for training deep learning models at scale.
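Here is a minimal sketch of data-parallel training, the most common form of the distributed training described above. Each worker computes gradients on its own shard of the batch. The gradients are then averaged; in real systems this happens via an all-reduce collective over the network. Finally, every worker applies the same update. The toy model (a one-parameter linear regression), the learning rate and the function names are illustrative assumptions. Production frameworks, such as PyTorch’s DistributedDataParallel, do this across real GPUs.

```python
from statistics import fmean


def local_gradient(w, shard):
    # d/dw of the mean squared error (w*x - y)^2 over this worker's shard.
    return fmean(2.0 * (w * x - y) * x for x, y in shard)


def shard_data(data, num_workers):
    # Contiguous equal-size shards; equal sizes keep the averaged gradient exact.
    if len(data) % num_workers:
        raise ValueError("dataset size must be divisible by num_workers")
    size = len(data) // num_workers
    return [data[i * size:(i + 1) * size] for i in range(num_workers)]


def all_reduce_mean(values):
    # Stands in for the network collective (e.g., an NCCL all-reduce) that averages gradients.
    return fmean(values)


def train_data_parallel(data, num_workers=4, lr=0.01, steps=200):
    w = 0.0
    shards = shard_data(data, num_workers)
    for _ in range(steps):
        # In a real cluster each worker computes its gradient simultaneously on its own GPU.
        grads = [local_gradient(w, s) for s in shards]
        w -= lr * all_reduce_mean(grads)  # every worker applies the identical update
    return w


if __name__ == "__main__":
    data = [(float(x), 3.0 * x) for x in range(1, 9)]  # toy data from y = 3x
    print(f"learned w = {train_data_parallel(data):.4f}")
```

Because the shards are equal in size, the averaged gradient equals the full-batch gradient. Splitting the work therefore changes how fast each step runs, not what the model learns.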

In practice, companies invest in HPC (High-Performance Computing) clusters or supercomputers dedicated to AI. For example, Microsoft built an Azure supercomputer for OpenAI with thousands of GPUs linked by InfiniBand, enabling GPT models to be trained in a reasonable time. Such HPC investments, often running on specialized scheduling software and AI accelerators, are a key part of AI infrastructure.

On the storage side, “data is the new oil” for AI – enormous data lakes are needed to feed AI models. Thus, AI infrastructure includes scalable storage systems: from distributed file systems and databases to object storage that can hold petabytes of unstructured data. Object stores (like cloud S3, or on-premises solutions) are ideal for AI because they can handle vast amounts of unstructured data (images, videos, text) with durability and scalability. Many organizations invest in building centralized data lake repositories and the pipelines to continuously gather and prepare data for AI use.

Additionally, infrastructure for AI model lifecycle (MLOps) – like systems for model versioning, evaluation, and deployment (serving) – also comes into play, though those are more software processes riding atop the hardware. From an investment perspective, funding HPC and distributed computing capabilities (whether through cloud credits or buying supercomputer time) and ensuring robust data infrastructure (storage, ETL tools, etc.) are both crucial. They ensure that all the fancy hardware actually translates into faster model development and real insights.

In summary, AI infrastructure spans a wide array of components, all interlinked: powerful compute nodes, housed in advanced data centers, interconnected by high-speed networks, often delivered via cloud platforms, and supported by systems to handle big data and model training.

3. Key Players in AI Infrastructure Investment

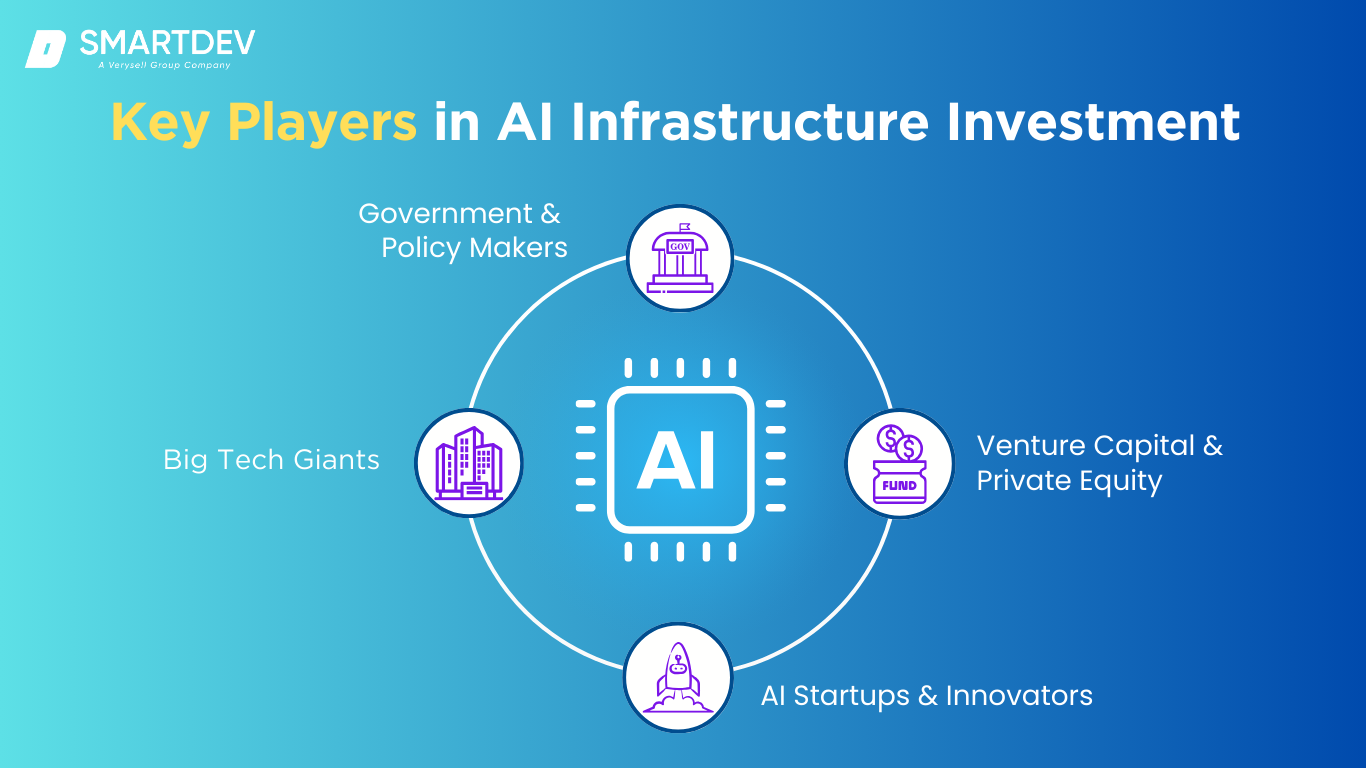

The race to build and control AI infrastructure involves a diverse set of players: technology giants, governments, investors, and startups. Each brings different strengths and motivations. Here we break down the key groups driving AI infrastructure investment:

a) Big Tech Giants

The large technology companies are arguably the biggest spenders and innovators in AI infrastructure. They not only need massive AI capacity for their own products and services, but they also offer that infrastructure to others via cloud and platforms. For instance:

- Meta (Facebook’s parent) is undergoing a huge AI infrastructure push – CEO Mark Zuckerberg declared 2025 a “defining year” for AI and announced plans to invest $60–65 billion in capital expenditures largely toward AI projects. Meta is building new data centers “large enough to cover a significant part of Manhattan” and aims to have over 1.3 million GPUs by end of 2025 to power its AI models and services.

- Microsoft has dramatically increased its data center construction to support AI – it plans to spend $80 billion on data centers in fiscal 2025 alone, in part to accommodate partnerships with OpenAI and offer Azure AI services.

- Google has been a pioneer with its TPU-based infrastructure and continues to invest heavily in AI research supercomputers and cloud offerings (Alphabet guided capital expenditures of roughly $75 billion for 2025, much of it AI-related).

- Amazon’s AWS, the biggest cloud provider, is expanding its fleet of AI accelerators and recently announced billions of dollars in new investment to build infrastructure in regions such as the U.S. state of Georgia to meet cloud AI demand.

- Nvidia, while not a cloud provider, is a central player as the supplier of GPUs powering most AI data centers – the company’s chips are so indispensable that Nvidia has achieved a commanding 80% market share in AI-specific chips.

Collectively, these big tech firms are shaping the AI infrastructure landscape: they’re building global networks of AI supercomputing centers, designing custom silicon, and open-sourcing AI development frameworks. Their deep pockets (hundreds of billions in cash flow) allow them to make bold bets – such as Alphabet (Google) establishing an AI-first research center, or Amazon designing its own AI chips – that smaller players cannot.

b) Government & Policy Makers: US, EU, and China’s AI Infrastructure Investments

National governments see AI as a strategic priority and are injecting public funds and crafting policies to boost AI infrastructure.

In the United States, the federal government has launched initiatives like the CHIPS and Science Act, which set aside roughly $50 billion to fund domestic semiconductor manufacturing and R&D – crucial for securing AI chip supply chains. The U.S. also funds AI research centers and supercomputers (e.g. the National AI Research Resource proposal) and maintains national labs that advance AI computing.

Recently, there was an announcement of up to $500 billion in private-sector led AI infrastructure investment in the U.S., under a project dubbed “Stargate,” backed by companies like SoftBank, Oracle, and OpenAI, aiming to build AI-focused data centers across the country. This indicates a close collaboration in the U.S. between government aims and industry capital for AI build-out.

Meanwhile, the European Union is taking a regulatory-forward and investment-supported approach. The EU AI Act, the first comprehensive AI regulation, entered into force in 2024 and is being phased in. It imposes requirements on “high-risk” AI systems and bans certain AI uses, and this regulatory environment incentivizes investments in trusted, compliant AI infrastructure. On funding, the EU has announced plans to mobilize €200 billion for AI investments by combining public and private funds. Specifically, the European Commission is contributing €50 billion of EU funds to bolster AI, including financing for four new AI “gigafactories” (large AI compute centers) across member states. Europe also invests in HPC (with initiatives like EuroHPC building world-class supercomputers for AI research) and in connectivity (e.g. pushing for widespread 5G to support AI and IoT).

China, for its part, has an explicit national goal to be the world leader in AI by 2030 and is making colossal investments in AI infrastructure. The Chinese government and state-affiliated funds are pouring money into building AI parks and computing centers – already over 40 industrial parks specializing in AI or robotics have been built, with more on the way. China’s tech giants (Baidu, Alibaba, Tencent, Huawei) work closely with the state on AI projects, such as developing AI supercomputing clouds and AI-driven city infrastructure.

Notably, China is launching a new 1 trillion-yuan (~$138 billion) government-backed fund to support emerging technologies including AI and semiconductors. This fund, structured as a public-private partnership, will invest in high-risk, long-term tech projects to strengthen China’s capabilities in areas like advanced AI chips and quantum computing.

Additionally, Chinese government policies provide generous subsidies, land, and electrical power allocations for new data center constructions within China, while also securing local supply chains (for instance, supporting domestic GPU startups to reduce reliance on foreign tech).

c) Venture Capital & Private Equity: AI-Focused Investment Funds

The surge in AI adoption has fueled record-breaking venture capital (VC) and private equity (PE) investments in AI infrastructure. In 2024, over 50% of all global VC funding went to AI startups, totaling $131.5 billion, marking a 52% year-over-year increase. Many investments target AI chips, cloud infrastructure, and data management tools, not just consumer applications.

Private equity firms are also making big moves, such as Blackstone’s roughly $16 billion acquisition of data center operator AirTrunk, a bet on rising demand for AI computing power. Similarly, the Global AI Infrastructure Investment Partnership (GAIIP) was launched by BlackRock, Global Infrastructure Partners, Microsoft, and MGX, with NVIDIA supporting the effort. It aims to mobilize up to $100 billion, including debt financing, for AI data centers and the energy infrastructure that powers them. These investments signal that AI infrastructure is now viewed as a distinct asset class, akin to real estate or utilities.

While this capital influx accelerates innovation and lowers costs, it also creates a crowded market, making it harder to identify future winners. Even so, corporate venture arms and sovereign wealth funds are actively seeking AI infrastructure opportunities, making this one of the hottest investment sectors today.

d) AI Startups & Innovators: Emerging AI Infrastructure Providers

Beyond tech giants, a growing ecosystem of startups is pioneering AI infrastructure solutions across hardware, software, and data management. Leading hardware startups include Graphcore (raised $700M, competing with Nvidia in AI chips), Cerebras Systems (developer of the world’s largest AI chip, funded with $720M), and SambaNova Systems (valued at $5B). These companies are pushing the boundaries of AI computing efficiency.

In networking, startups are designing low-latency interconnects and AI-specific network switches, while cloud-based innovators are offering decentralized AI computing platforms using blockchain. Emerging MLOps providers are streamlining AI deployment, and startups tackling data challenges are optimizing data lakes and synthetic data generation.

Big tech is actively acquiring AI infrastructure startups: Google, Microsoft, and Intel have all purchased AI chip and distributed computing companies to strengthen their infrastructure portfolios. Meanwhile, startups such as Tenstorrent (backed by Samsung) are gaining traction with alternative AI hardware, and open initiatives such as Hugging Face’s BigCode project are doing the same with open AI platforms.

For investors and enterprises, these startups present opportunities to diversify AI infrastructure investments and potentially disrupt dominant players. While some will be acquired, others may evolve into key players shaping the future of AI computing.

4. Investment Trends & Market Growth in AI Infrastructure

The momentum behind AI infrastructure is reflected in remarkable market growth and evolving investment trends. Analysts often refer to the current period as a paradigm shift where AI infrastructure is emerging as a new asset class of its own.

Let’s explore some key trends and market indicators:

a) Explosive Market Growth

The AI infrastructure market, spanning data centers, cloud services, and computing hardware, is expanding rapidly. AI workloads are fueling growth, with the hyperscale data center market projected to more than quadruple to about $1.4 trillion by 2029. Global AI data center spending alone is expected to exceed $1.4 trillion by 2027, significantly outpacing general IT spending.

By 2027, global AI-related IT investments are projected to reach $521 billion, up from $180 billion in 2023, reflecting businesses’ growing prioritization of AI infrastructure. North America and Asia (especially the U.S. and China) lead investments, but Europe, the Middle East, and other regions are increasing funding, often with government support. AI chip demand is also pushing semiconductor revenues to record highs, while data center real estate is booming, including REITs focused on server farms.
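The growth figures above can be compared on a common footing by converting them to compound annual growth rates (CAGR). The sketch applies the standard formula, (end / start)^(1 / years) - 1, to two projections quoted in this article:

- the hyperscale data center market, from $320 billion in 2023 to $1.44 trillion in 2029;
- AI-related IT investment, from $180 billion in 2023 to $521 billion in 2027.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by growing from start_value to end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    projections = {
        "Hyperscale data centers, 2023-2029": (320e9, 1_440e9, 6),
        "AI-related IT investment, 2023-2027": (180e9, 521e9, 4),
    }
    for name, (start, end, years) in projections.items():
        print(f"{name}: {cagr(start, end, years):.1%} per year")
```

Both projections imply annual growth of roughly 28% to 30%, sustained for four to six years.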

b) AI Infrastructure as a New Asset Class

AI infrastructure is now seen as a critical investment category, akin to power grids or transportation. Large institutional investors are backing AI infrastructure for long-term, stable returns as demand for AI computation skyrockets. The GAIIP (Global AI Infrastructure Investment Partnership), for example, aims to mobilize up to $100 billion, including debt financing, for AI-related projects.

Data center trusts and AI-focused investment funds are emerging, while venture capitalists increasingly adopt the “picks and shovels” strategy, investing in GPU farms and AI platforms rather than AI applications. AI infrastructure ETFs and indices are also gaining traction, attracting sovereign wealth funds and pension funds seeking exposure to this high-growth sector.

c) Private vs. Public Sector Investments

Private sector players, from tech giants to venture-backed firms, are driving most AI infrastructure funding. The Stargate initiative alone has private companies announcing up to $500 billion in U.S. AI infrastructure projects. However, governments are playing a key role in funding fundamental research and infrastructure in underserved areas.

The EU, for example, is mobilizing €200 billion in AI investments, leveraging €50 billion in public funds. China, meanwhile, provides state subsidies and strategic investments in AI data centers and domestic semiconductor manufacturing.

Public-private partnerships are key in areas like smart city infrastructure, national AI clouds, and AI-powered healthcare, offering steady returns for investors. Geopolitical tensions, such as U.S.-China competition in AI, are also shaping investment dynamics: export controls and subsidies are reshaping supply chains and funding strategies.

d) Mergers, Acquisitions & Strategic Partnerships

The M&A landscape in AI infrastructure is accelerating, with major acquisitions shaping the market:

- AMD acquired Xilinx for $35 billion to enhance AI and 5G computing.

- Intel bought Habana Labs for $2 billion to expand AI accelerator chip capabilities.

- NVIDIA’s $40 billion acquisition of ARM was blocked, highlighting the strategic importance of AI chip IP.

- Equinix and Digital Realty are acquiring data center firms in response to AI-driven cloud demand.

Beyond these deals, private equity firms are consolidating AI infrastructure companies, while cloud providers are striking AI-specific partnerships (e.g., Oracle and NVIDIA’s DGX Cloud on OCI). Major players are also integrating vertically: Microsoft not only invested in OpenAI but is also developing its own AI server hardware to control more of the AI stack.

✍️ Pro Tip: When planning your investment in AI infrastructure, it’s essential to understand the financial components involved. For more details on managing costs and budgeting for AI projects, check out our comprehensive guide on AI Development Costs.

5. AI Infrastructure Investment Strategies

Investing in AI infrastructure presents numerous opportunities across computing power, data centers, AI hardware, networking, and sustainability. Here are the key investment strategies:

a) Direct Investments in AI Compute Power

Companies and investors can directly purchase AI computing resources, such as GPUs and TPUs, to power AI workloads. Large enterprises, universities, and even investment firms are funding dedicated AI supercomputers or leasing AI hardware for cloud services. The challenge is ensuring high utilization and balancing capital expenditure with long-term ROI.
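The utilization challenge can be quantified as the effective cost of each hour a GPU actually does useful work. This sketch assumes straight-line amortization of the purchase price over a fixed useful life and ignores power, staffing and financing costs. The $30,000 price, the four-year life and the function name are hypothetical. A shorter useful life raises the cost in the same way lower utilization does, for example when newer chips make the hardware uncompetitive.

```python
HOURS_PER_YEAR = 8_760


def cost_per_useful_hour(capex: float, useful_life_years: float, utilization: float) -> float:
    """Amortized hardware cost per hour of productive use (illustrative model).

    `utilization` is the fraction of wall-clock hours the GPU spends on real work."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    productive_hours = useful_life_years * HOURS_PER_YEAR * utilization
    return capex / productive_hours


if __name__ == "__main__":
    # Hypothetical: a $30,000 accelerator amortized over 4 years.
    for util in (0.9, 0.6, 0.3):
        print(f"utilization {util:.0%}: ${cost_per_useful_hour(30_000, 4, util):.2f} per useful hour")
```

Halving utilization doubles the effective cost per useful hour, which is why operators work hard to keep expensive accelerators busy.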

b) Data Centers & Cloud Infrastructure

Investing in data center real estate (via REITs like Equinix) or in major cloud providers (Amazon, Microsoft, Google) is a way to capitalize on the growing demand for AI workloads. Investors can also target edge computing infrastructure, which supports real-time AI applications, especially in telecom and 5G networks.

c) AI Hardware Investments (GPUs, TPUs, AI Chips)

Semiconductors are at the heart of AI, making investments in Nvidia, AMD, Intel, and AI-focused chip startups attractive. AI memory manufacturers, contract fabs like TSMC, and suppliers of critical semiconductor components also offer strong investment potential. Quantum and neuromorphic computing present long-term opportunities but carry higher risks.

d) AI Networking & Connectivity Ventures

AI requires robust networking infrastructure, including fiber optics, satellite internet (Starlink, OneWeb), and 5G/6G providers. Companies optimizing low-latency AI networking hardware (e.g., Arista Networks) are critical in supporting AI data traffic.

e) AI Energy Efficiency & Sustainability

Given AI’s high energy demands, investments in sustainable AI infrastructure—such as data centers powered by renewables, liquid cooling, and energy-efficient chips—are becoming essential. Companies innovating in green computing will likely gain regulatory support and customer preference.

6. Risks & Challenges in AI Infrastructure Investments

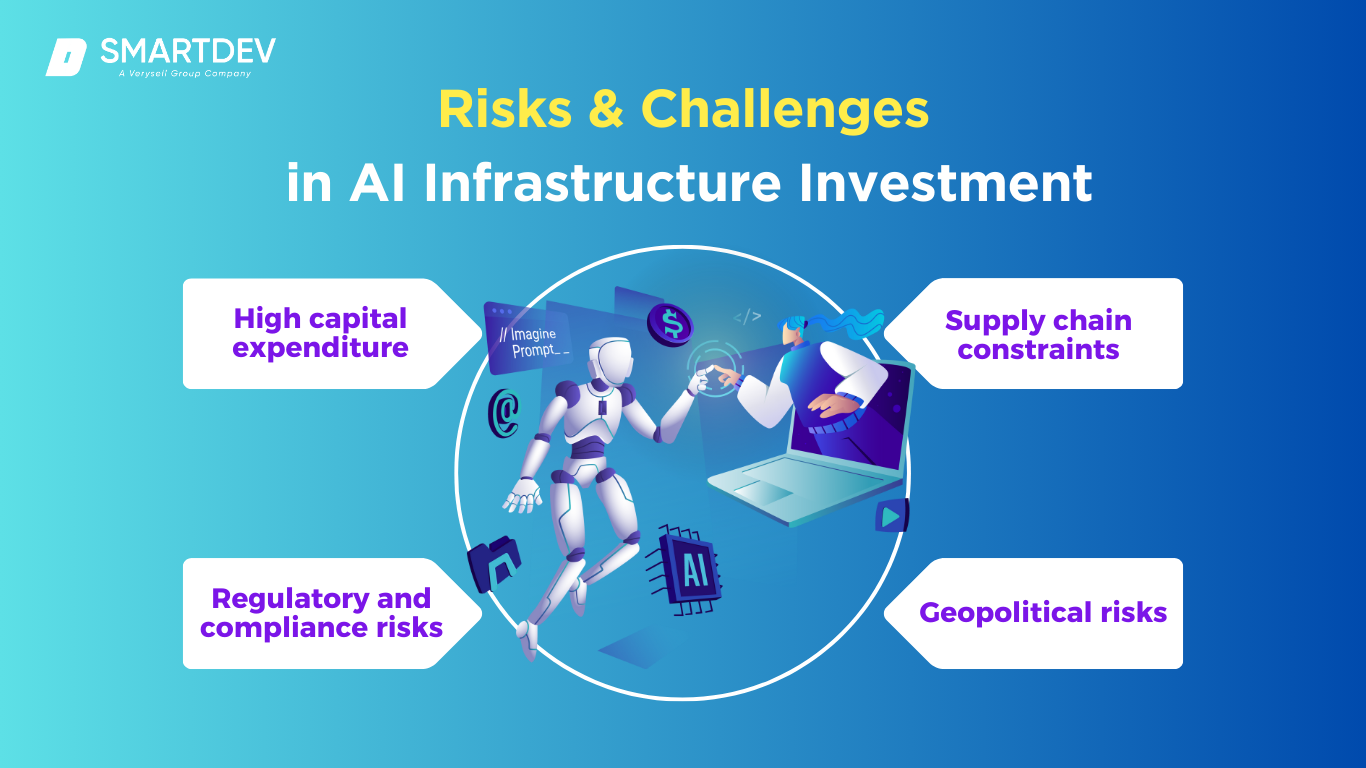

AI infrastructure investments come with significant risks. High capital expenditure is a primary challenge, requiring substantial funding before yielding returns. The rapid pace of technological advancements also creates uncertainty in ROI, as newer, more efficient AI hardware can quickly render existing investments obsolete.

Regulatory and compliance risks are another concern. AI ethics laws, data privacy regulations, and emerging AI governance policies across different jurisdictions require infrastructure providers to ensure compliance, adding legal and operational complexities.

Supply chain constraints, especially in semiconductor manufacturing, continue to impact AI infrastructure expansion. The global chip shortage and geopolitical restrictions on semiconductor exports can delay infrastructure projects and drive up costs.

Geopolitical risks also pose a major challenge. Trade restrictions, national security concerns, and cross-border data regulations can limit investment opportunities in certain regions. Companies investing in AI infrastructure must navigate these challenges while maintaining scalability and adaptability.

7. The Role of Governments & Public Policy in AI Infrastructure

Governments play a crucial role in shaping AI infrastructure through policies, funding, and regulations. The US is bolstering AI and semiconductor investments through initiatives like the CHIPS Act, which funds domestic chip production and research. The EU’s AI regulations and Digital Europe program focus on building ethical AI frameworks while supporting AI data centers and research facilities. China continues its aggressive push for AI and semiconductor self-sufficiency, with significant state-backed funding for AI parks, national AI research centers, and homegrown chip manufacturing.

For investors, navigating AI regulations is essential. Compliance with GDPR in the EU, AI governance policies in the US, and China’s cybersecurity laws can determine the viability of AI infrastructure investments in different markets.

8. AI Infrastructure Investment Case Studies

- Meta’s $65 Billion AI Infrastructure Plan

Meta has said it plans to spend up to $65 billion in capital expenditure in 2025, much of it on hyperscale data centers optimized for AI workloads, and expects to end that year with more than 1.3 million GPUs across its facilities. This compute powers AI-driven services such as content moderation, personalized recommendations, and virtual reality applications.

Meta’s investment in AI data centers supports the increasing demand for AI-powered applications across its social media platforms.

- Nvidia’s Dominance in AI Chips & Compute Investments

Nvidia has positioned itself as the dominant supplier of AI chips, with an estimated share of more than 80% of the market for AI accelerators used in data centers. The company's A100 and H100 GPUs are the backbone of AI model training and inference for enterprises and cloud providers.

Nvidia is also investing in next-generation AI chips and in hybrid quantum-classical computing (for example, its CUDA-Q platform) to maintain its leadership in the AI compute market.

- OpenAI & Microsoft: The AI Supercomputing Partnership

Microsoft has invested billions of dollars in OpenAI and provides the Azure-based supercomputing resources used to train models like GPT-4. The collaboration produced one of the most powerful AI supercomputers, purpose-built for large-scale AI training workloads.

Microsoft’s AI investment strategy aligns with its push to integrate AI across its cloud services, productivity tools, and enterprise solutions.

- SoftBank & Oracle’s AI Cloud Investment Strategy

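SoftBank and Oracle are strategic partners in expanding AI cloud infrastructure, most visibly as founding partners, alongside OpenAI and MGX, in the Stargate Project announced in January 2025. Oracle's cloud services, optimized for AI workloads, provide enterprises with scalable AI computing power.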

SoftBank’s investment focus is on leveraging AI for automation and robotics, with Oracle’s cloud infrastructure serving as a key enabler of these advancements.

9. The Future of AI Infrastructure Investment

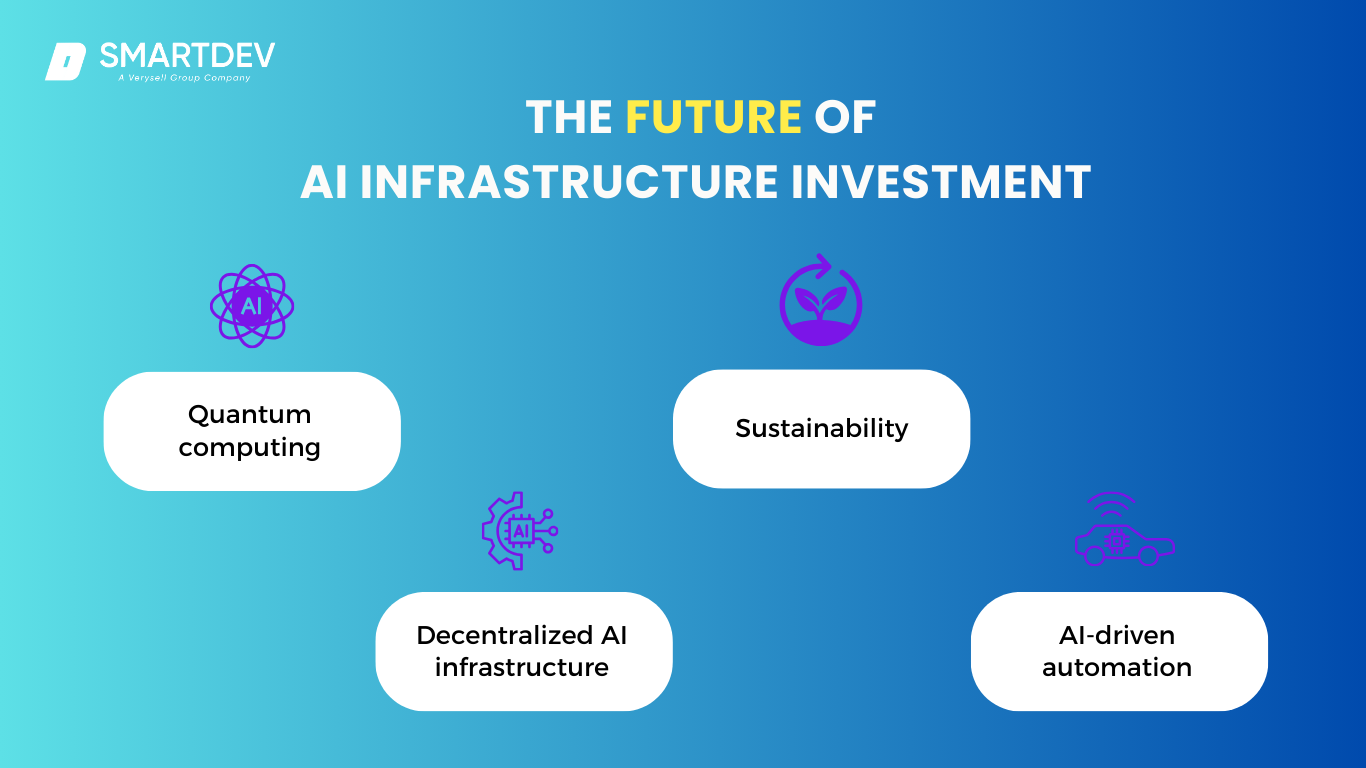

Quantum computing is emerging as a potentially disruptive force in AI infrastructure. It may eventually deliver large speedups for specific classes of problems, although practical advantages for mainstream AI workloads have yet to be demonstrated. Companies investing in quantum AI infrastructure today could gain a significant competitive edge if the technology matures.

Sustainability in AI infrastructure is becoming a priority. With AI data centers consuming massive amounts of energy, investments in green AI infrastructure—such as carbon-neutral data centers, AI-powered energy optimization, and liquid cooling solutions—are gaining traction.

Decentralized AI infrastructure, enabled by blockchain and edge computing, could challenge traditional centralized cloud models. Distributed AI networks could reduce latency and improve AI service reliability, driving interest in edge computing investments.

AI-driven automation is also improving how infrastructure is operated. AI-powered resource allocation, predictive maintenance, and automated scaling in data centers can reduce operational costs and improve efficiency. Businesses investing in AI infrastructure automation can gain a competitive advantage in managing large-scale AI operations.

10. Conclusion & Actionable Insights for Investors

As we’ve explored, AI infrastructure investment is a complex but immensely promising domain at the intersection of technology and finance. For businesses and investors looking to engage, here are some key takeaways and actionable insights:

- AI Infrastructure is the Backbone of the AI Revolution: Investing in the foundation – compute power, data centers, networks – enables all the value on top. As AI continues to transform industries and generate new applications, the demand for underlying infrastructure will only grow.

- Diversify Across the AI Infrastructure Stack: The ecosystem spans hardware (chips), physical infrastructure (data centers), cloud platforms, and emerging tech (edge, quantum). Each has its own risk/reward profile. For stability, one might invest in established data center REITs or major cloud providers. For high growth, consider leading chipmakers or promising startups in new hardware. For a forward bet, allocate a small portion to speculative areas like quantum computing or decentralized compute networks.

- Prioritize Sustainability and Efficiency: A recurring theme is that power and cooling are major challenges. Infrastructure that is energy-efficient and sustainable will have lower operating costs and higher acceptability in an increasingly regulated, eco-conscious world.

- Leverage Government Incentives and Stay Compliant: Governments worldwide are offering incentives – from grants to tax breaks – for building AI and semiconductor infrastructure domestically. They’re also rolling out new regulations. Savvy investors will align with these trends rather than fight them.

- Form Strategic Partnerships: The scale of AI infrastructure often requires collaboration. Whether it's a cloud provider teaming up with a chipmaker (like Oracle and Nvidia) or an investor consortium funding a big project (like the Global AI Infrastructure Investment Partnership, or GAIIP, formed by BlackRock, Global Infrastructure Partners, Microsoft, and MGX), partnerships can pool resources and expertise.

- Stay Agile and Scalable: The AI field moves quickly – new model architectures, suddenly viral applications, or breakthroughs (like transformer models in 2017) can change requirements for infrastructure almost overnight. Build in flexibility.

- Focus on Talent and Expertise: At the end of the day, having skilled people is key to making the most of AI infrastructure. The world has a shortage of experts in high-performance computing, AI model tuning, and data center design.

- Plan for Risks and Mitigate Them: We discussed risks like supply chain issues, geopolitical factors, and regulatory changes. Proactively mitigating these will differentiate successful investments from failures.

- Future-Proof Your Portfolio: Keep an eye on emerging trends, like those we outlined (quantum, edge, etc.). You don't need to chase every hype cycle, but stay aware and be ready to move if these technologies show tangible progress. For instance, if quantum computing starts solving useful problems, be prepared to invest in or partner with quantum providers to integrate that capability.

- Utilize AI to Invest in AI: As noted, use AI tools to aid your decisions. From predictive analytics for capacity planning to AI-driven market research, let the technology itself guide you. Many investors now use AI to analyze financial data or tech trends; apply that to the AI infrastructure domain specifically.

✍️ How to Get Started?

For investors new to this area, a sensible approach is to start with learning and small steps. Engage consultants or advisors who are experts in AI infrastructure to educate your team. Visit data centers or chip fabs if possible, to understand the physical reality of these investments. Begin with a modest investment or partnership to get hands-on experience – for instance, invest in a smaller data center project or a Series A round of an AI hardware startup, where you can observe and learn. Use that experience to scale up into bigger investments.

For businesses wanting to leverage AI infrastructure, start by identifying a high-impact AI project (like improving a product with AI or automating a process) and ensure you have the necessary infrastructure for that – maybe through a cloud trial or a small on-prem cluster – then build from there.

The world is betting big on AI – and that means betting big on the infrastructure that makes AI possible. By following the insights above, you can align your investment strategy with this megatrend and help shape the future of how intelligence is powered and delivered across the globe. The opportunity is vast: as AI transforms industries from healthcare to finance to entertainment, the demand for robust, efficient, and scalable infrastructure will skyrocket.

Now is the time to lay the groundwork (quite literally) for that future, and in doing so, secure a share of the tremendous value creation that AI promises. Future-proofing your portfolio with AI infrastructure investments today could be one of the best decisions to ensure relevance and growth in the tech-driven economy of tomorrow.

At SmartDev, we help businesses harness the power of AI infrastructure with cutting-edge solutions tailored to your needs. Whether you’re looking to integrate AI-driven automation, optimize cloud infrastructure, or develop scalable AI-powered applications, our expertise ensures seamless implementation and maximum ROI. Contact SmartDev today to explore how we can accelerate your AI strategy!

References:

- IDC: Worldwide AI Infrastructure Spending to Reach $154 Billion in 2027

- Meta’s $65 Billion AI Infrastructure Investment

- Nvidia’s Dominance in AI Chip Market

- Microsoft & OpenAI’s AI Supercomputer Partnership

- SoftBank and Oracle Cloud Partnership

- US CHIPS Act: Boosting Semiconductor and AI Infrastructure

- EU Digital Europe Programme

- China’s AI Development Plan and Semiconductor Strategy

- AWS Cloud Infrastructure for AI

- Quantum Computing’s Impact on AI Infrastructure

a) Big Tech Giants
