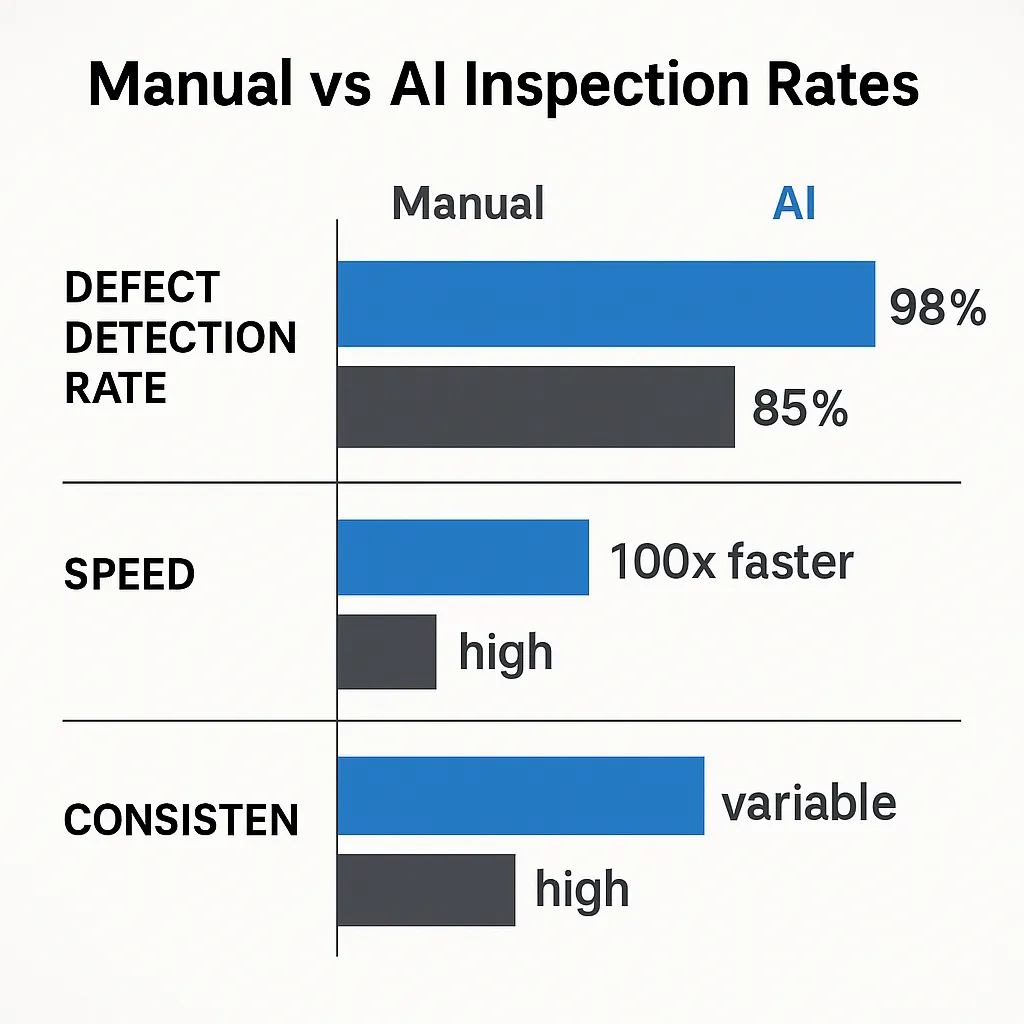

Computer vision is reshaping manufacturing quality control, with systems processing visual data 100 times faster than human inspectors while maintaining accuracy rates above 95% in mature, well-calibrated deployments. Traditional manual inspection methods catch only 80-85% of defects and require constant human oversight, creating bottlenecks that slow production and increase costs.

Smart manufacturers are implementing AI-powered visual inspection systems that slash manual QA effort by up to 50% while improving detection accuracy to 98-99% for trained defects. These automated systems operate 24/7 without fatigue, provide consistent results across shifts, and integrate seamlessly with existing production workflows.

This guide provides a practical roadmap for building effective computer vision quality control systems, from initial planning through deployment and optimization. Manufacturing companies report average savings of $200,000-500,000 annually per production line after successful implementation in optimized high-volume deployments.

Computer vision systems reduce manual manufacturing QA by up to 50% through automated defect detection at 98%+ accuracy rates in mature implementations.

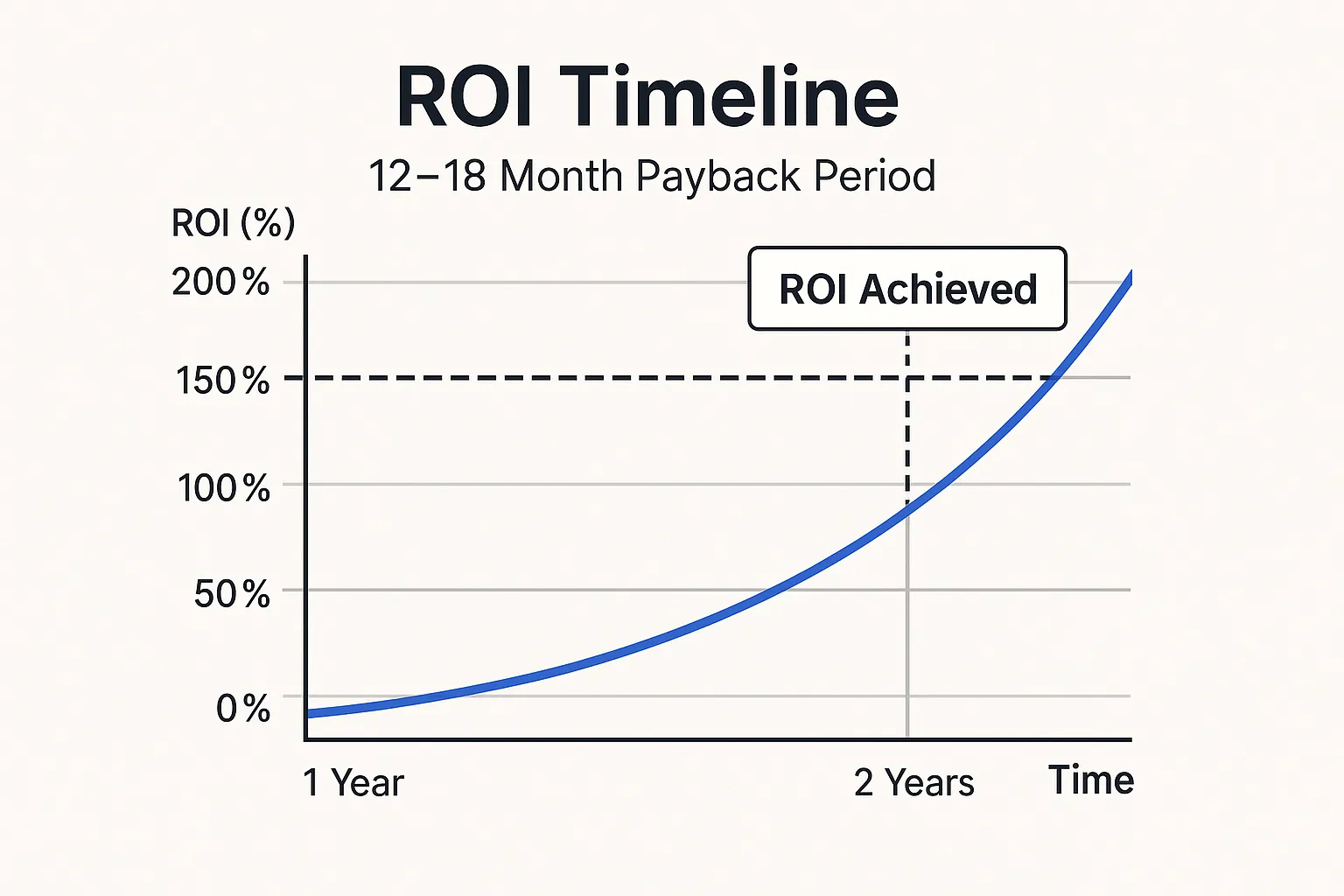

Most deployments achieve ROI within 12-18 months, with potential savings of $200,000-500,000 annually per production line while providing consistent 24/7 inspection capabilities.

Why Computer Vision Beats Traditional Manual QA

Computer vision fundamentally changes how quality control operates in manufacturing environments. These systems analyze thousands of products per hour with consistent precision that human inspectors simply can’t match over extended periods.

Automated visual inspection systems in electronics manufacturing reduced escapes and manual labor by 40-50%, with top-performing lines achieving over 98% defect detection rates. Unlike human inspectors who experience fatigue and inconsistency, AI-powered systems maintain peak performance throughout entire production shifts.

The speed advantage is equally impressive. Computer vision systems process visual data up to 100 times faster than human inspectors while maintaining accuracy rates above 95% in well-deployed systems. This processing speed allows real-time quality feedback that can immediately flag issues before defective products advance down the production line.

Fig.1 Manual vs AI Inspection Rates

“AI vision systems not only spot minute flaws with unprecedented consistency, but also uncover hidden trends in product quality no human audit could match,” explains Dr. Jörg Schreiber, Head of AI Engineering at Siemens Digital Industries.

Panasonic deployed a cognitive visual inspection service built on deep convolutional neural networks (DCNNs) for LCD manufacturing quality control, replacing manual inspection with AI-based computer vision on production lines at major facilities. The deployment shows how advanced AI vision systems can transform traditional quality assurance workflows in electronics manufacturing. The system operates continuously, without the breaks, shift changes, or performance degradation that affect human inspectors.

Key Advantages Over Traditional Manual Methods

The performance gap between manual and automated inspection is substantial. Manual inspection achieves 80-85% defect detection, while computer vision delivers 98-99% for trained defects and operates 24/7 without performance loss.

Human inspectors face inherent limitations including:

- Eye strain and attention fatigue after 2-3 hours

- Subjective interpretation of quality standards

- Inconsistent detection rates between shifts and operators

- Inability to maintain peak performance throughout full production cycles

Computer vision eliminates these variables by applying consistent criteria to every inspection. Manufacturing facilities using automated visual inspection report 50% reduction in quality control labor costs within the first year of implementation. This reduction comes from reassigning QA staff to higher-value tasks like process improvement and exception handling rather than repetitive visual inspection.

The data collection capabilities of computer vision systems also surpass manual methods. Every inspection generates detailed records that enable trend analysis, process optimization, and predictive quality insights that manual inspection logs can’t provide.

Realistic ROI Expectations and Timeline

Most manufacturing computer vision implementations achieve full ROI within 12-18 months through reduced labor costs and improved product quality. Results vary based on production line complexity, deployment maturity, and integration requirements.

Direct savings include reduced QA staffing requirements, decreased product waste from catching defects earlier, and lower customer return rates. Soft benefits including improved brand reputation, reduced warranty claims, and enhanced customer satisfaction contribute significant long-term value that traditional ROI calculations may underestimate.

Companies report average savings of $200,000-500,000 annually per production line after successful deployment in optimized high-volume environments. These savings compound over time as systems require minimal ongoing maintenance compared to human labor costs that increase annually.

Implementation costs typically include:

- Hardware: $50,000-150,000

- Software development: $100,000-300,000

- Training/integration: $25,000-75,000

Note: Costs vary depending on production line complexity, integration requirements, and vendor selection.

How to Define Your Quality Control Requirements

Start by cataloging your current defect types, inspection frequency, and quality standards to determine which processes offer the highest automation potential. Focus on repetitive, high-volume inspection tasks where human inspectors spend more than 4 hours daily on visual assessment.

Document your existing quality control workflow including:

- Inspection points and frequency

- Defect categories and classification criteria

- Current detection rates and false positive levels

- Time spent per inspection task

- Quality standards and tolerance ranges

This baseline data helps identify the most valuable automation opportunities and sets realistic performance targets for your computer vision system.

Over 70% of manufacturers plan to automate high-volume, repeatable visual inspections as labor costs and quality standards increase. The key is selecting processes where automation provides clear benefits without disrupting critical human judgment tasks.

Create a priority matrix ranking inspection tasks by:

- Daily inspection volume

- Current error/escape rates

- Standardization potential

- Impact on production flow

Focus initial efforts on high-volume, standardized processes where consistent criteria apply and human inspectors currently spend significant time on routine visual assessment.
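The priority matrix above can be sketched as a simple weighted score. This is a minimal illustration, not a prescribed method: the weights, the 0-1 rating scales, and the task names are all hypothetical and should be replaced with values agreed on by your own QA team.

```python
from dataclasses import dataclass

# Hypothetical weights for the four ranking criteria; they sum to 1.0.
WEIGHTS = {
    "volume": 0.35,
    "escape_rate": 0.30,
    "standardization": 0.20,
    "flow_impact": 0.15,
}


@dataclass
class InspectionTask:
    name: str
    daily_volume: int        # items inspected per day
    escape_rate: float       # fraction of defects currently missed, 0-1
    standardization: float   # 0-1, how rule-like the pass/fail criteria are
    flow_impact: float       # 0-1, how strongly the task gates production flow


def rank_tasks(tasks):
    """Return tasks sorted from highest to lowest automation priority."""
    # Normalize volume and escape rate by the largest value in the list
    # so that every criterion contributes on a 0-1 scale.
    max_volume = max((t.daily_volume for t in tasks), default=0) or 1
    max_escape = max((t.escape_rate for t in tasks), default=0) or 1

    def score(t):
        return (
            WEIGHTS["volume"] * t.daily_volume / max_volume
            + WEIGHTS["escape_rate"] * t.escape_rate / max_escape
            + WEIGHTS["standardization"] * t.standardization
            + WEIGHTS["flow_impact"] * t.flow_impact
        )

    return sorted(tasks, key=score, reverse=True)


candidates = [
    InspectionTask("label check", 500, 0.05, 0.3, 0.2),
    InspectionTask("surface scan", 10_000, 0.15, 0.9, 0.8),
]
for task in rank_tasks(candidates):
    print(task.name)
```

A linear score keeps the ranking easy to explain to line managers; if one criterion should act as a hard gate (for example, a minimum daily volume), filter on it before scoring rather than raising its weight.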

BMW Group developed the AIQX (Artificial Intelligence Quality Next) platform and SORDI (the world’s largest reference dataset for artificial intelligence in manufacturing since 2019) to digitize defect patterns and optimize inspection processes across production facilities, enabling AI systems to identify quality issues that human inspectors might miss. AI-powered vision systems deployed across 26 cameras throughout BMW’s factory floors achieved up to a 60% reduction in vehicle defects while delivering measurable financial returns, including $1 million in annual savings from AI-optimized processes. The program demonstrates how comprehensive defect digitization and machine learning datasets shift quality control from reactive to predictive.

Hardware Selection for Different Production Environments

Industrial-grade cameras with resolution between 2-12 megapixels handle most manufacturing inspection tasks, while specialized lighting systems ensure consistent image quality across production shifts. Camera selection depends on your specific defect types, required detection precision, and production line speed.

Essential Hardware Components:

- Cameras: 5-8 megapixel cameras provide optimal balance of detail and processing efficiency for most applications

- Lighting: LED ring lights, backlighting, and strobed illumination eliminate shadows

- Processing: Edge computing devices like NVIDIA Jetson or Intel NUC for real-time analysis

- Mounting: Industrial mounting systems with vibration dampening for harsh environments

Higher resolution cameras capture more detail but require more processing power and storage. For most manufacturing applications, 5-8 megapixel cameras provide the optimal balance of detail and processing efficiency. Specialized cameras like thermal or hyperspectral sensors may be needed for specific defect types.

Lighting represents the most critical component for consistent image quality. LED ring lights, backlighting, and strobed illumination eliminate shadows and provide uniform lighting conditions regardless of ambient factory lighting changes throughout shifts.

Edge computing devices provide real-time processing power without cloud dependency latencies. These systems process images locally, reducing network bandwidth requirements and enabling faster response times for real-time quality decisions.

Data Collection Strategy That Actually Works

Successful computer vision systems require 500-2000 labeled images per defect category for initial training, with ongoing collection of 50-100 new samples monthly for model refinement. Data quality directly impacts system accuracy, making careful collection planning essential.

Data Collection Best Practices:

- Diverse conditions: Collect images under various lighting conditions, product orientations, and defect severities

- Edge cases: Include borderline examples that human inspectors find challenging

- Representative sampling: Ensure data covers all product variants and seasonal variations

- Proper labeling: Use consistent annotation standards across all team members

“The foundation of any successful AI vision deployment is the time invested in collecting robust, representative image data—get this right and the rest falls into place,” explains Katie Hughes, Computer Vision Practice Lead at Capgemini.

High-quality training data with proper lighting and positioning reduces development time by 30-40% compared to rushed data collection approaches. Invest time upfront in standardized image capture protocols to accelerate model training and improve final system performance.

Store images with detailed metadata including defect classifications, severity levels, and production context. This structured approach enables efficient model training and supports future system improvements as production requirements evolve.
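One way to keep that metadata structured is a small record type written out as JSON lines, plus a check against the 500-image lower bound mentioned earlier. The field names and the 0-3 severity scale below are assumptions for illustration, not a standard schema.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass


@dataclass
class ImageRecord:
    image_path: str
    defect_class: str       # e.g. "scratch", "dent", or "ok"
    severity: int           # hypothetical scale: 0 (none) to 3 (critical)
    line_id: str            # production line the image came from
    shift: str              # e.g. "day" or "night"
    lighting_profile: str   # name of the lighting setup used at capture


def to_json_line(record):
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


def undercovered_classes(records, minimum=500):
    """Return {defect_class: count} for classes with fewer than `minimum` images."""
    counts = Counter(r.defect_class for r in records)
    return {cls: n for cls, n in counts.items() if n < minimum}


example = ImageRecord("img_0001.png", "scratch", 2, "line-1", "day", "ring-light")
print(to_json_line(example))
```

Running `undercovered_classes` on the full record list before each training run shows which defect categories still need more samples.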

Framework Selection Guide for Manufacturing CV

TensorFlow and PyTorch dominate manufacturing computer vision applications, with TensorFlow Lite optimized for edge deployment and real-time inference speeds under 100 milliseconds.

Framework selection impacts development time, deployment options, and long-term maintenance requirements.

Framework Comparison:

| Framework | Best for | Pros | Cons |
| --- | --- | --- | --- |
| TensorFlow | Production deployment | Mature tools, pre-trained models | Steeper learning curve |
| PyTorch | Research & development | Flexible debugging, easier experimentation | Fewer deployment tools |
| OpenCV | Image preprocessing | Essential preprocessing capabilities | Limited ML capabilities |

TensorFlow offers mature production deployment tools and extensive pre-trained models for transfer learning. PyTorch provides more flexible research and development capabilities with easier debugging and experimentation workflows. Most teams choose based on existing expertise and specific deployment requirements.

OpenCV provides essential image preprocessing capabilities, while specialized libraries like Detectron2 excel at object detection and segmentation tasks. Combine these frameworks to create comprehensive computer vision pipelines that handle image preprocessing, model inference, and result processing.

“OpenCV remains the backbone for preprocessing, while frameworks like Detectron2 and YOLOv5 set industry standards in speed and accuracy for industrial object detection,” notes Dr. Abhinav Valada, Assistant Professor at the University of Freiburg.

Model Training That Delivers Results

Transfer learning from pre-trained models like YOLO or ResNet reduces training time from weeks to days while achieving 90%+ accuracy with limited datasets. This approach uses existing model knowledge to accelerate development for manufacturing-specific applications.

Custom model training requires GPU clusters for 24-48 hours, but transfer learning approaches can complete training in 4-8 hours on single GPU systems. Start with pre-trained models and fine-tune for your specific defect types rather than training from scratch.

Foxconn automated smartphone assembly QA using PyTorch/TensorFlow, slashing defect rates by 55% and achieving real-time defect flagging across 50 production lines. Their success came from systematic model optimization and iterative training with production feedback.

Model Training Process:

- Data preparation: Clean and organize training images

- Transfer learning: Start with pre-trained models

- Fine-tuning: Adapt models to specific defect types

- Validation: Test with separate datasets

- Optimization: Improve accuracy through iterative refinement

Model validation requires separate test datasets that the training process never sees. Use cross-validation techniques and hold-out test sets to ensure your system performs well on new, unseen production data rather than just memorizing training examples.
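A hold-out split that keeps every defect class represented in the test set can be sketched with the standard library alone. This is an illustrative helper, assuming samples arrive as `(image_id, label)` pairs; the 20% test fraction and the fixed seed are arbitrary choices.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.2, seed=42):
    """Split (image_id, label) pairs into train and test lists, per label.

    Each label contributes roughly `test_fraction` of its images to the
    test set, and at least one image, so rare defects are still evaluated.
    A label with a single image therefore ends up only in the test set.
    """
    by_label = defaultdict(list)
    for image_id, label in samples:
        by_label[label].append(image_id)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label in sorted(by_label):
        ids = sorted(by_label[label])
        rng.shuffle(ids)
        n_test = max(1, round(len(ids) * test_fraction))
        test.extend((i, label) for i in ids[:n_test])
        train.extend((i, label) for i in ids[n_test:])
    return train, test


data = [(f"scratch_{i}", "scratch") for i in range(100)]
data += [(f"dent_{i}", "dent") for i in range(50)]
train_set, test_set = stratified_split(data)
print(len(train_set), len(test_set))
```

Create the split once and store it alongside the dataset. Re-splitting on every training run lets test images leak into training over time.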


Integration with Manufacturing Systems

Modern computer vision systems integrate with MES (Manufacturing Execution Systems) and PLCs through REST APIs and MQTT protocols for seamless workflow integration. Plan integration architecture early to avoid costly retrofitting during deployment.

Real-time alerts and quality metrics feed directly into existing dashboards without requiring separate monitoring systems. Use standard industrial communication protocols to ensure compatibility with existing automation infrastructure and minimize integration complexity.

Database integration enables quality tracking, trend analysis, and compliance reporting through existing business intelligence systems. Design data schemas that support both real-time decision making and historical analysis for continuous improvement.
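As a sketch of what an inspection result might look like on the wire, the helper below builds a topic string and JSON payload that could be published over MQTT or posted to a REST endpoint. The topic layout, field names, and verdict values are hypothetical; actual publishing is left to whichever client library your MES or broker setup already uses.

```python
import json
from datetime import datetime, timezone

ALLOWED_VERDICTS = {"pass", "fail", "review"}


def build_inspection_message(line_id, station_id, part_id, verdict,
                             confidence, defect_class=None, timestamp=None):
    """Return (topic, payload) for one inspection result.

    The topic scheme "factory/<line>/<station>/inspection" is an
    illustrative convention, not an industry standard.
    """
    if verdict not in ALLOWED_VERDICTS:
        raise ValueError(f"unknown verdict: {verdict!r}")
    ts = timestamp or datetime.now(timezone.utc)
    topic = f"factory/{line_id}/{station_id}/inspection"
    payload = json.dumps(
        {
            "part_id": part_id,
            "verdict": verdict,
            "confidence": round(confidence, 4),
            "defect_class": defect_class,
            "timestamp": ts.isoformat(),
        },
        sort_keys=True,
    )
    return topic, payload


topic, payload = build_inspection_message(
    "line-3", "cam-2", "SN-000123", "fail", 0.9712, defect_class="scratch"
)
print(topic)
print(payload)
```

Keeping the same payload for real-time alerts and for database inserts means the historical records match what operators saw on the dashboard.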

Ready to cut your manufacturing QA workload by 50%?

Build high-accuracy computer vision systems with SmartDev’s AI experts to automate defect detection, improve consistency, and streamline end-to-end quality control.

Boost throughput, reduce manual inspection hours, and confidently scale your production with enterprise-ready AI computer vision workflows.

Defect Types That Work Best with Computer Vision

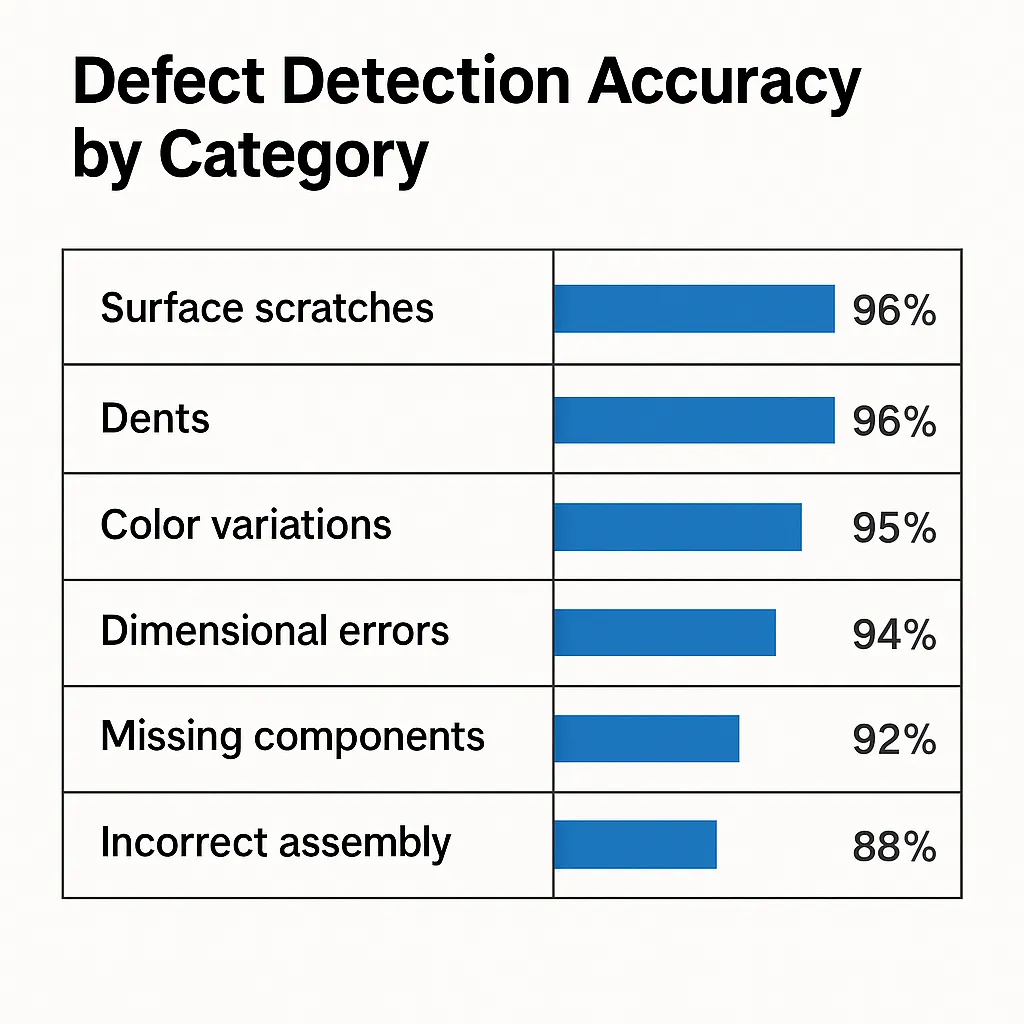

Surface scratches, dents, color variations, and dimensional inconsistencies represent 80% of manufacturing defects detectable through computer vision systems. These visual defects respond well to automated inspection because they create consistent patterns that machine learning models can reliably identify.

Highly Detectable Defects:

- Surface scratches and marks

- Dents and deformations

- Color variations and inconsistencies

- Dimensional errors and misalignment

- Missing components

- Incorrect assembly configurations

Object detection models excel at identifying missing components, misaligned parts, and incorrect assembly configurations. Image classification handles surface quality assessment including color matching, texture analysis, and finish quality evaluation with 95%+ accuracy rates in well-tuned systems.

Computer vision succeeds with defects that have clear visual characteristics and consistent appearance patterns. Complex defects requiring material property assessment or internal component inspection may require specialized sensors beyond standard cameras.

Dimensional measurement capabilities detect size variations, positioning errors, and geometric inconsistencies when combined with calibrated camera systems and appropriate lighting. These systems measure features to sub-millimeter precision when properly configured.

Fig.2 Defect Detection Accuracy Table by Category

Unilever’s Tultitlán Factory implemented deep learning vision on a soap production line, reducing quality escapes by 48% and maintaining real-time feedback at 120 units/minute after setting defect thresholds from 3,000+ samples.

Speed Requirements for Production Lines

Manufacturing production lines require inference speeds under 200 milliseconds to avoid bottlenecks, achievable through optimized models running on dedicated edge computing hardware. Processing speed directly impacts production throughput and determines system feasibility for high-speed lines.

Batch processing approaches can handle 10-50 items per second depending on image resolution and model complexity. Single-item processing enables immediate feedback but requires faster hardware and optimized software for high-volume applications.
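The relationship between line speed and inference time is simple arithmetic, sketched below. The function names are illustrative, and the model assumes that capture and I/O overhead can be lumped into a single per-batch figure, which is a simplification.

```python
def per_item_budget_ms(units_per_minute):
    """Time available per item, in milliseconds, at a given line speed."""
    return 60_000 / units_per_minute


def items_per_second(inference_ms, batch_size=1):
    """Sustained throughput if one batch takes `inference_ms` to process."""
    return batch_size * 1000 / inference_ms


def keeps_up(units_per_minute, inference_ms, overhead_ms=0.0, batch_size=1):
    """True if average processing time per item fits within the line's budget.

    This checks throughput only. With batching, an individual item may
    wait for its batch to fill, so feedback latency can be higher.
    """
    per_item_ms = (inference_ms + overhead_ms) / batch_size
    return per_item_ms <= per_item_budget_ms(units_per_minute)


# A line running 120 units/minute leaves 500 ms per item.
print(per_item_budget_ms(120))
# A 200 ms model with 50 ms of capture overhead fits that budget.
print(keeps_up(120, 200, overhead_ms=50))
# At 600 units/minute the same model needs batching or faster hardware.
print(keeps_up(600, 200), keeps_up(600, 200, batch_size=4))
```

At 200 ms per image, single-item processing tops out at 5 items per second, so reaching the 10-50 items per second range requires either batching or a faster model.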

TensorFlow Lite and PyTorch Mobile deliver real-time inferencing at <100 ms per image on edge hardware. These mobile-optimized frameworks reduce model size and computational requirements without sacrificing accuracy for production deployment.

Processing Optimization Techniques:

- GPU acceleration

- Model quantization

- Neural network pruning

- Edge computing deployment

- Batch processing strategies

Network latency becomes critical for cloud-based processing. Edge computing eliminates network delays but requires local hardware investment. Hybrid approaches process routine inspections locally while sending complex cases to cloud resources for detailed analysis.

Quality Threshold Calibration

Establish quality thresholds using statistical analysis of 1000+ good and defective samples to minimize false positives while maintaining defect detection rates above 98%. Threshold setting requires balancing sensitivity against production disruption from false alarms.

Start with conservative thresholds that catch obvious defects with minimal false positives. Gradually optimize based on production feedback and operator experience to improve detection sensitivity without increasing false alarm rates.

Major manufacturers recalibrate vision inspection thresholds every 30-60 days and retain a rolling database of 1,000+ reference samples to avoid drift. Regular calibration ensures system accuracy as lighting conditions and product variations evolve.

“Regular quality threshold calibration is vital. In dynamic environments, recalibration every 4–6 weeks maintains 98%+ reliability as conditions evolve,” advises Min Liu, Senior AI Solution Architect at Cognex Corp.

Statistical process control techniques help identify when threshold adjustments are needed. Monitor detection rates, false positive trends, and operator feedback to optimize threshold settings for maximum effectiveness.
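Threshold selection from labeled samples can be sketched as a search over candidate cut-offs. The example assumes the model emits a defect score where higher means more defect-like, and that you hold scored sets of known-good and known-defective parts; both are assumptions about your setup rather than properties of any particular framework.

```python
def pick_threshold(good_scores, defect_scores, min_detection_rate=0.98):
    """Pick the strictest threshold that still meets the detection target.

    A part is flagged when its score is >= the threshold. Candidates are
    tried from highest to lowest, so the first one that reaches the target
    detection rate is also the one with the fewest false positives.
    Returns (threshold, detection_rate, false_positive_rate).
    """
    if not good_scores or not defect_scores:
        raise ValueError("both sample sets must be non-empty")

    for threshold in sorted(set(defect_scores), reverse=True):
        detected = sum(s >= threshold for s in defect_scores) / len(defect_scores)
        if detected >= min_detection_rate:
            false_pos = sum(s >= threshold for s in good_scores) / len(good_scores)
            return threshold, detected, false_pos

    # Unreachable for targets <= 1.0: the lowest defect score flags every defect.
    raise ValueError("no threshold meets the detection target")


# Toy data: 98 clear defects, 2 faint ones, and a few high-scoring good parts.
defects = [0.9] * 98 + [0.3, 0.2]
good = [0.1] * 95 + [0.95] * 5
print(pick_threshold(good, defects))
```

Rerunning this on the rolling reference set at each recalibration shows whether the chosen threshold has drifted and what false positive rate it now costs.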

Automation Strategies That Reduce Manual QA

Implement graduated automation where computer vision handles primary screening and flags borderline cases for human review, reducing manual inspection workload by 70-80% in optimized deployments. This hybrid approach balances efficiency and accuracy while maintaining human oversight for complex decisions.

Automated sorting and rejection systems can process identified defects without human intervention for clearly defined quality failures. Reserve human judgment for edge cases and complex defect patterns that require contextual understanding beyond visual assessment.

Combining AI pre-screening with human escalation reduces manual inspection by 70–80% for large-scale manufacturers. This approach maintains quality standards while dramatically reducing routine inspection workload.

Automation Implementation Steps:

- Start conservative: Begin with high-confidence pass/fail decisions

- Gradual expansion: Increase automated decision scope as confidence builds

- Track performance: Monitor automation rates and false positive trends

- Optimize thresholds: Adjust based on production feedback

- Scale success: Apply proven approaches to additional production lines
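The graduated approach above amounts to a three-way routing rule on model confidence. The sketch below assumes the model outputs a defect probability between 0 and 1; the 0.05 and 0.95 cut-offs are placeholder values meant to be tightened or relaxed as confidence in the system builds.

```python
def route(defect_probability, pass_below=0.05, reject_above=0.95):
    """Route one item: automate clear cases, escalate borderline ones."""
    if defect_probability <= pass_below:
        return "auto_pass"
    if defect_probability >= reject_above:
        return "auto_reject"
    return "human_review"


def automation_rate(probabilities, pass_below=0.05, reject_above=0.95):
    """Fraction of items decided without human review."""
    if not probabilities:
        return 0.0
    decisions = [route(p, pass_below, reject_above) for p in probabilities]
    return 1 - decisions.count("human_review") / len(decisions)


scores = [0.01, 0.02, 0.50, 0.99]
print([route(p) for p in scores])
print(automation_rate(scores))
```

Starting conservative means a narrow automated band, such as 0.01 and 0.99. Widening the band later raises the automation rate at the cost of more automated mistakes, so track both numbers together.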

GE Aviation automated blade inspection by pairing computer vision with human-in-the-loop review, cutting manual inspection time by 55% and increasing detection consistency year over year. Their success came from clearly defining which decisions the system could handle independently and which required human review.

Human-AI Collaboration That Works

Effective systems reserve human expertise for complex judgment calls while automating routine pass/fail decisions, achieving optimal accuracy with minimal labor investment. Train QA staff to focus on system monitoring, exception handling, and continuous improvement rather than repetitive visual inspection tasks.

“AI-powered QA teams shift from labelers to orchestrators, elevating their focus to process improvement and system tuning rather than repetitive checks,” explains Julia Ratner, CTO of Instrumental AI.

Human operators become quality system managers rather than individual item inspectors. They monitor system performance, investigate flagged items, and provide feedback that improves model accuracy over time. This evolution increases job satisfaction while improving overall quality outcomes.

Automated rejection systems can independently resolve 90% of defects in pharmaceutical and electronics plants, drastically shrinking human intervention needs. Focus human attention on the 10% of cases that require complex judgment or process improvement decisions.

New QA Staff Responsibilities:

- System performance monitoring

- Exception investigation and resolution

- Model training data collection

- Process improvement analysis

- Quality trend identification

Cross-training programs help QA staff transition from manual inspection to system oversight roles. Provide training on computer vision principles, statistical analysis, and continuous improvement methodologies to maximize the value of your human resources.

Performance Monitoring for Sustained Results

Track key metrics including detection accuracy, false positive rates, and processing speed to identify optimization opportunities and maintain system effectiveness. Performance monitoring enables proactive system maintenance and continuous improvement.

Weekly performance reviews and monthly model updates ensure sustained 50%+ reduction in manual QA effort over time. Regular monitoring prevents performance degradation and identifies opportunities for further automation.

AI QA projects can see 5–10% monthly accuracy gains during early deployment when structured feedback loops and human-in-the-loop review drive threshold fine-tuning; gains taper as accuracy approaches its ceiling. Continuous improvement processes maximize long-term system value and ROI.

Key Performance Indicators:

- Defect detection rate (target: 98%+)

- False positive rate (target: <2%)

- Processing speed (target: <200ms per item)

- System uptime and availability

- Manual intervention frequency

Dashboard systems provide real-time visibility into system performance, quality trends, and operator productivity. Design metrics that support both operational decision-making and strategic quality improvement initiatives.

Performance Metrics That Matter

Monitor defect detection rate (target: 98%+), false positive rate (target: <2%), and processing speed (target: <200ms per item) as primary technical KPIs. These metrics directly impact both quality outcomes and production efficiency.
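These KPIs can be computed from four counts gathered by auditing a sample of inspected items. The sketch below uses the targets quoted above as defaults; the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class InspectionCounts:
    true_positives: int    # defective items the system flagged
    false_negatives: int   # defective items the system missed (escapes)
    false_positives: int   # good items the system flagged
    true_negatives: int    # good items the system passed


def detection_rate(c):
    """Share of defective items that were flagged (also called recall)."""
    defective = c.true_positives + c.false_negatives
    if defective == 0:
        raise ValueError("no defective items in the audited sample")
    return c.true_positives / defective


def false_positive_rate(c):
    """Share of good items that were wrongly flagged."""
    good = c.false_positives + c.true_negatives
    if good == 0:
        raise ValueError("no good items in the audited sample")
    return c.false_positives / good


def meets_targets(c, latency_ms, min_detection=0.98, max_fpr=0.02, max_latency_ms=200):
    """Check the three technical KPIs against their targets."""
    return (
        detection_rate(c) >= min_detection
        and false_positive_rate(c) < max_fpr
        and latency_ms < max_latency_ms
    )


week = InspectionCounts(true_positives=98, false_negatives=2,
                        false_positives=10, true_negatives=990)
print(detection_rate(week), false_positive_rate(week), meets_targets(week, 150))
```

Note that the false positive rate here is measured against good items only. Reporting it against all inspected items instead would make the same system look better, so fix one definition before comparing lines.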

Business metrics should track labor cost reduction, quality improvement, and overall equipment effectiveness (OEE) improvements. Financial KPIs demonstrate ROI and support ongoing investment in system improvements and expansion.

System availability metrics including uptime, maintenance requirements, and mean time between failures ensure reliable operation. Track these operational metrics alongside quality performance to optimize total system effectiveness.

“True digital transformation ROI comes not only from cost savings, but from improved quality metrics and higher equipment effectiveness,” explains Steve Lyman, Director of Manufacturing Analytics at Deloitte.

Quality trend analysis identifies patterns in defect types, production shifts, and equipment performance that enable proactive process improvements. Use statistical process control techniques to detect meaningful changes in quality performance.
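One common statistical process control tool for defect rates is the p-chart, which flags periods whose defect proportion falls outside three standard deviations of the long-run average. The sketch below is a minimal version that assumes each period reports a defect count and a sample size; it is offered as an example of the technique, not as the specific method any of the manufacturers mentioned here use.

```python
import math


def p_chart_limits(defect_counts, sample_sizes):
    """Return (p_bar, [(lower, upper), ...]) with 3-sigma limits per period."""
    p_bar = sum(defect_counts) / sum(sample_sizes)
    limits = []
    for n in sample_sizes:
        sigma = math.sqrt(p_bar * (1 - p_bar) / n)
        # Proportions cannot leave [0, 1], so clamp the limits.
        limits.append((max(0.0, p_bar - 3 * sigma), min(1.0, p_bar + 3 * sigma)))
    return p_bar, limits


def out_of_control(defect_counts, sample_sizes):
    """Indices of periods whose defect proportion is outside its limits."""
    _, limits = p_chart_limits(defect_counts, sample_sizes)
    flagged = []
    for i, (d, n) in enumerate(zip(defect_counts, sample_sizes)):
        lower, upper = limits[i]
        if not lower <= d / n <= upper:
            flagged.append(i)
    return flagged


# Five shifts of 500 inspected parts each; the last shift shows a spike.
defects_per_shift = [10, 12, 9, 11, 40]
parts_per_shift = [500] * 5
print(out_of_control(defects_per_shift, parts_per_shift))
```

In practice the center line is usually estimated from a stable baseline period rather than from data that already contains the spike, which makes the limits tighter and the chart more sensitive.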

ROI Calculation Framework

Calculate ROI using reduced QA labor costs, improved product quality, and decreased customer returns against system development and maintenance expenses. Comprehensive ROI analysis includes both direct savings and indirect quality benefits.

Most implementations show positive ROI within 12-18 months with ongoing annual savings of 40-60% compared to manual inspection costs in optimized deployments. Factor in productivity improvements, reduced waste, and improved customer satisfaction for complete business impact assessment.

Include total cost of ownership in your analysis including hardware, software, training, and ongoing maintenance costs. Compare these investments against current and projected manual inspection costs over a 3-5 year timeframe for accurate ROI calculation.
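The payback arithmetic can be sketched in a few lines. The input figures in the example are placeholders chosen from within the cost and savings ranges quoted earlier, and the annual maintenance figure is a pure assumption, since no maintenance cost is given here.

```python
def payback_months(upfront_cost, annual_savings, annual_maintenance=0.0):
    """Months until cumulative net savings cover the upfront investment.

    Returns None when maintenance consumes all of the savings, in which
    case the investment never pays back.
    """
    net_annual = annual_savings - annual_maintenance
    if net_annual <= 0:
        return None
    return 12 * upfront_cost / net_annual


def cumulative_net(upfront_cost, annual_savings, annual_maintenance, years):
    """Net position after `years`, ignoring discounting and inflation."""
    return years * (annual_savings - annual_maintenance) - upfront_cost


# Placeholder inputs: $300k upfront, $240k/year savings, $40k/year upkeep.
print(payback_months(300_000, 240_000, 40_000))
for year in (1, 3, 5):
    print(year, cumulative_net(300_000, 240_000, 40_000, year))
```

This deliberately omits discounting and the soft benefits discussed above. Treat it as a first-pass screen before building a full business case.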

Procter & Gamble scaled AI visual inspection from a pilot to 12 lines in 8 months, reporting 40% labor cost reduction and a 22% boost in OEE. Their systematic approach to ROI measurement and scaling provides a replicable model for other manufacturers.

Fig.3 12-18 month payback period


Scaling to Multiple Production Lines

Successful pilot implementations can scale to additional production lines within 3-6 months using established models and infrastructure templates. Scaling strategies should balance speed with quality to ensure consistent performance across multiple lines.

Cloud-based model management and edge deployment strategies support 5-20 production lines from centralized AI development resources. Standardized deployment approaches reduce per-line implementation costs and enable centralized maintenance and updates.

Template-based deployment accelerates scaling by standardizing hardware configurations, software installation, and integration procedures. Develop repeatable processes that minimize custom development for each new production line.

Scaling Best Practices:

- Standardize hardware configurations

- Create deployment templates

- Centralize model management

- Implement consistent training programs

- Plan phased rollouts with adequate support

Training programs ensure consistent operator capabilities across multiple lines. Standardized training materials and certification processes maintain quality standards as systems expand to additional production areas.

Change management becomes critical during scaling to ensure operator adoption and maintain production efficiency. Plan phased rollouts with adequate training and support to minimize disruption during system expansion.

Common Implementation Challenges

Inconsistent lighting accounts for 60% of computer vision implementation failures and is typically resolved through controlled LED lighting systems and automated exposure adjustment algorithms. It is the most common technical challenge in computer vision deployments.

Standardized image capture protocols ensure consistent data quality across shifts and seasonal lighting variations. Companies succeeding in CV QA deployments standardize imaging protocols across all shifts and update calibration protocols every 30-60 days to ensure data consistency regardless of plant conditions.

Most Common Implementation Challenges:

- Inconsistent lighting conditions (60% of failures)

- Model accuracy and false positive management

- Integration complexity with legacy systems

- Inadequate training data collection

- Unrealistic performance expectations

Model accuracy challenges require a systematic approach to training data collection, threshold setting, and performance monitoring. Start with conservative detection thresholds and gradually optimize based on production feedback to minimize disruption while maintaining quality standards.

Integration complexity with legacy systems represents another common challenge. Legacy manufacturing systems require API development and middleware solutions to enable computer vision integration without disrupting existing workflows.

Bosch retrofitted legacy assembly with CV APIs and middleware, delivering full MES integration in 6 months and zero unplanned downtime during rollout. Their systematic integration approach provides a template for other manufacturers facing similar challenges.

Managing False Positives and Model Accuracy

Start with conservative detection thresholds and gradually optimize based on production feedback to minimize disruption while maintaining quality standards. Implement feedback loops where human QA decisions retrain models, improving accuracy by 5-10% monthly during initial deployment phases.

False positive management requires balancing sensitivity against production disruption. Too many false alarms reduce operator confidence and can lead to system bypass, while insufficient sensitivity misses actual defects.

Statistical analysis of inspection results helps identify optimal threshold settings. Use A/B testing approaches to evaluate threshold changes and measure their impact on both detection rates and false positive frequency.
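When comparing two threshold settings in an A/B test, a two-proportion z-test is one standard way to check whether a difference in false positive rates is larger than chance. The sketch below assumes each arm reports how many good items were inspected and how many of them were wrongly flagged. The choice of this test is an illustration, not something prescribed by the approach described above.

```python
import math


def two_proportion_z(flagged_a, total_a, flagged_b, total_b):
    """Two-sided z-test for a difference between two proportions.

    Returns (z, p_value). Uses the pooled proportion for the standard
    error and a normal approximation, which is reasonable when each arm
    has at least a few dozen flagged and unflagged items.
    """
    p_a = flagged_a / total_a
    p_b = flagged_b / total_b
    pooled = (flagged_a + flagged_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        return 0.0, 1.0  # both arms are all-flagged or none-flagged
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


# Arm A: current threshold; arm B: candidate threshold (made-up counts).
z, p = two_proportion_z(60, 1000, 20, 1000)
print(round(z, 2), p < 0.05)
```

Run the same comparison on detection rates before adopting the candidate threshold, because a setting that lowers false positives can also increase escapes.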

Regular model retraining with updated production data maintains accuracy as products, processes, and conditions evolve. Plan for quarterly model updates and annual comprehensive model refresh to sustain long-term performance.

False Positive Reduction Strategies:

- Conservative initial thresholds

- Statistical threshold optimization

- Regular model retraining

- Operator feedback integration

- A/B testing for improvements

Legacy System Integration Solutions

Legacy manufacturing systems require API development and middleware solutions to enable computer vision integration without disrupting existing workflows. Plan integration architecture carefully to minimize development complexity and ensure reliable operation.

“Most legacy system challenges are surmountable with intermediary API layers—phased approaches mitigate risk while delivering quick wins,” notes Hafiz Rahman, Senior Solution Engineer at Siemens.

Phased integration approaches minimize production risk while enabling gradual transition to automated quality control processes. Start with parallel operation where computer vision runs alongside existing manual inspection before transitioning to full automation.
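During parallel operation, the main question is how often the vision system and the manual inspectors agree, and in which direction they differ. The sketch below summarizes paired verdicts; the `"pass"`/`"fail"` labels and the report keys are assumed conventions.

```python
def shadow_mode_report(pairs):
    """Summarize paired (cv_verdict, manual_verdict) results.

    Each verdict is "pass" or "fail". Disagreements are split by direction
    because they call for different follow-ups: items only the system
    rejects may be false alarms or defects the inspectors missed, while
    items only the inspectors reject may be escapes by the system.
    """
    total = agree = cv_only = manual_only = 0
    for cv, manual in pairs:
        total += 1
        if cv == manual:
            agree += 1
        elif cv == "fail":
            cv_only += 1
        else:
            manual_only += 1
    return {
        "total": total,
        "agreement_rate": agree / total if total else 0.0,
        "cv_only_rejects": cv_only,
        "manual_only_rejects": manual_only,
    }


results = [("pass", "pass")] * 8 + [("fail", "pass"), ("pass", "fail")]
print(shadow_mode_report(results))
```

Reviewing the disagreement cases by hand and feeding them back as labeled training data is a natural next step before switching any decisions to full automation.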

Database integration enables quality data to flow into existing MES and ERP systems without requiring separate data management. Design data schemas that support both real-time quality decisions and historical analysis for continuous improvement.

Network security considerations become important when integrating computer vision systems with existing IT infrastructure. Plan for secure data transmission, access control, and system monitoring to maintain cybersecurity standards.

Ready to implement computer vision quality control in your manufacturing operation? SmartDev’s AI development services combine deep manufacturing expertise with proven computer vision capabilities to deliver systems that achieve 50%+ reduction in manual QA effort.

Our manufacturing industry specialists have successfully deployed automated inspection systems across multiple production environments, helping clients achieve ROI within 12-18 months while maintaining Swiss-quality standards.

Contact our AI consulting team to discuss your specific quality control requirements and develop a customized implementation roadmap.