Introduction

Most AI initiatives fail to scale not because the models underperform, but because their business value remains unclear. Leaders fund pilots expecting evidence, yet many experiments end with technical results that cannot support investment decisions.

Early AI ROI measurement solves this problem. It helps organizations translate AI experiments into credible signals of value before committing to scale. Instead of waiting for full deployment, teams can assess whether an AI initiative deserves further investment based on measurable business impact.

Why AI ROI Must Be Measured Before Scale

AI ROI measurement at the experiment stage is about learning, not perfection. Unlike traditional projects, AI initiatives evolve rapidly. Models improve, data pipelines change, and user behavior adapts over time. Early ROI measurement must reflect this dynamic environment.

At this stage, AI project ROI calculation focuses on identifying signals of value rather than final returns. These signals indicate whether continued investment is justified. The emphasis is on direction: is the AI solution reducing friction, improving outcomes, or unlocking efficiencies that did not exist before?

Key elements of early AI ROI measurement include:

- Estimating potential value ranges instead of single figures

- Linking technical performance to business processes

- Tracking both benefits and emerging costs
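The list above recommends estimating value ranges instead of single figures. As a minimal Python sketch, low and high estimates of benefits and costs can be turned into a worst-case and best-case ROI. The sketch defines ROI as (benefit - cost) / cost. The `ValueRange` class, the `roi_range` function, and all figures are hypothetical illustrations, not part of any specific methodology.

```python
from dataclasses import dataclass


@dataclass
class ValueRange:
    """A low/high estimate rather than a single point figure (hypothetical helper)."""
    low: float
    high: float


def roi_range(benefits: ValueRange, costs: ValueRange) -> tuple[float, float]:
    """Return (worst-case, best-case) ROI as a ratio: (benefit - cost) / cost.

    Worst case pairs the lowest benefit with the highest cost;
    best case pairs the highest benefit with the lowest cost.
    """
    worst = (benefits.low - costs.high) / costs.high
    best = (benefits.high - costs.low) / costs.low
    return worst, best


# Hypothetical pilot: annualized benefit estimated at 120k-200k,
# costs (build, cloud, data work, change management) at 80k-100k.
benefits = ValueRange(low=120_000, high=200_000)
costs = ValueRange(low=80_000, high=100_000)
worst, best = roi_range(benefits, costs)
print(f"ROI range: {worst:.0%} to {best:.0%}")
```

Reporting the full range keeps emerging costs visible. A narrow range also signals that the estimates are well grounded.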

A common pitfall is treating AI proof of concept ROI as a purely technical validation. High accuracy or performance scores do not automatically translate into business value. If the AI output does not change decisions, workflows, or resource allocation, its ROI remains limited.

Early experiments also reveal constraints. Data quality issues, integration challenges, and adoption barriers often surface quickly. Capturing these insights is part of the business value of AI experiments. Even when pilots fall short, they provide evidence that informs smarter decisions.

Setting the Right Success Criteria for AI Proof of Concept ROI

Clear success criteria are the foundation of credible AI proof of concept ROI. Without them, pilot results are hard to interpret and easy to dispute. Defining success upfront ensures all stakeholders evaluate outcomes using the same standards.

Success criteria should focus on business outcomes, not model performance. Instead of asking whether the model performs well, teams should define what meaningful change the business expects to see and translate that into measurable targets.

Effective success criteria typically:

- Tie directly to a specific business problem

- Are observable within the pilot timeframe

- Balance ambition with feasibility

Well-defined criteria focus on tangible improvements rather than technical metrics. For example, an AI pilot may aim to reduce manual processing effort by a measurable percentage, lower error or exception rates in a key workflow, or shorten decision turnaround time for operational teams. In revenue-driven use cases, success may be defined by incremental uplift generated through AI-supported actions such as better targeting or pricing.

To improve clarity and alignment, teams should:

- Define minimum acceptable outcomes to justify continuation

- Specify expected outcomes based on current assumptions

- Outline upside scenarios if performance improves
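The three outcome levels can be written down as explicit thresholds before the pilot starts, and each observed result can then be placed against them. This Python sketch is hypothetical throughout: `SuccessCriterion`, `assess`, and the 15/25/40% targets are invented for the example. It also assumes a metric where higher is better.

```python
from dataclasses import dataclass


@dataclass
class SuccessCriterion:
    """Targets agreed before the pilot starts (all values are hypothetical)."""
    name: str
    minimum: float   # minimum acceptable outcome to justify continuation
    expected: float  # outcome expected under current assumptions
    upside: float    # outcome if performance improves further


def assess(criterion: SuccessCriterion, observed: float) -> str:
    """Place an observed result relative to the pre-agreed thresholds.

    Assumes higher is better (e.g. percentage reduction in manual effort).
    """
    if observed < criterion.minimum:
        return "below minimum"
    if observed < criterion.expected:
        return "minimum met"
    if observed < criterion.upside:
        return "expected met"
    return "upside met"


effort_reduction = SuccessCriterion(
    name="manual processing effort reduction (%)",
    minimum=15.0, expected=25.0, upside=40.0,
)
print(assess(effort_reduction, 28.0))  # expected met
```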

Avoid vanity metrics unless they clearly support AI business impact metrics. Measuring AI pilot success depends on whether the AI system delivers outcomes that matter to the organization, not just results that look strong in technical evaluations.

Measuring AI Pilot Success with Practical Metrics

Once success criteria are defined, teams must consistently track the right metrics throughout the pilot. Measuring AI pilot success requires a balanced approach. Relying on a single metric often creates a distorted view of impact, while combining multiple perspectives provides a more reliable assessment.

Operational metrics usually reveal impact first. They show whether AI is meaningfully changing how work is performed across processes and teams. Common indicators include:

- Reduction in cycle or processing time

- Increased automation or task coverage

- Declines in error rates, rework, or exception handling

- Improvements in throughput or overall capacity

These metrics help teams pinpoint where AI is delivering value and where friction or adoption challenges still exist.

Financial metrics translate operational improvements into economic outcomes that leadership can evaluate. Typical examples include estimated labor cost savings, reductions in operational expenses, avoided losses or downtime, and early signs of revenue uplift linked to AI-supported actions.

At the pilot stage, AI project ROI calculation depends on assumptions rather than final numbers. Clearly documenting these assumptions is essential. Transparency strengthens credibility and helps leaders realistically assess risk and upside.
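One way to keep assumptions transparent is to make each one a named input to the calculation. The minimal sketch below converts hours saved per case into an annual labor saving. Its field names and values are hypothetical. The `realization_rate` field is an assumed discount reflecting that not all freed time turns into actual savings.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class SavingsAssumptions:
    """Every input is an explicit, reviewable assumption (values hypothetical)."""
    hours_saved_per_case: float  # measured during the pilot
    cases_per_year: int          # from current volume data
    loaded_hourly_cost: float    # salary plus overhead, per hour
    realization_rate: float      # assumed share of freed time actually redeployed (0-1)


def annual_labor_savings(a: SavingsAssumptions) -> float:
    """Convert an operational improvement (hours saved) into a money estimate."""
    return a.hours_saved_per_case * a.cases_per_year * a.loaded_hourly_cost * a.realization_rate


assumptions = SavingsAssumptions(
    hours_saved_per_case=0.5,
    cases_per_year=10_000,
    loaded_hourly_cost=40.0,
    realization_rate=0.6,
)
# Report the assumptions alongside the result so leaders can challenge them.
for key, value in asdict(assumptions).items():
    print(f"{key}: {value}")
print(f"Estimated annual labor savings: {annual_labor_savings(assumptions):,.0f}")
```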

Supporting metrics add important context to the results. Measures such as time-to-value from pilot launch, user adoption and confidence levels, and the stability of AI outputs help explain why certain financial or operational outcomes were achieved. Together, operational, financial, and supporting metrics form a balanced view of AI proof of concept ROI and reduce overreliance on any single indicator.
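The supporting metrics above can be computed with simple formulas. This sketch assumes three definitions:

- Adoption is active users divided by eligible users.
- Time-to-value is the number of days from launch to the first date a target was met.
- Output stability is the coefficient of variation of a weekly metric.

All function names and data are hypothetical, and other definitions may fit a given pilot better.

```python
from datetime import date
from statistics import mean, pstdev


def adoption_rate(active_users: int, eligible_users: int) -> float:
    """Share of intended users who actually used the AI tool in the period."""
    return active_users / eligible_users


def time_to_value_days(launch: date, first_target_met: date) -> int:
    """Days between pilot launch and the first time a success target was met."""
    return (first_target_met - launch).days


def output_stability(weekly_values: list[float]) -> float:
    """Coefficient of variation of a weekly output metric (lower = more stable)."""
    return pstdev(weekly_values) / mean(weekly_values)


# Hypothetical pilot data.
print(f"Adoption: {adoption_rate(active_users=42, eligible_users=60):.0%}")
print(f"Time to value: {time_to_value_days(date(2024, 3, 4), date(2024, 4, 15))} days")
weekly_quality_score = [0.91, 0.93, 0.92, 0.90, 0.92]
print(f"Output stability (CV): {output_stability(weekly_quality_score):.3f}")
```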

From Pilot Metrics to Board-Level Evidence

Collecting metrics alone is not sufficient to justify continued AI investment. AI business impact metrics must be translated into clear, credible evidence that decision-makers can easily understand and trust. This requires shifting the conversation away from technical performance and toward measurable business outcomes.

Effective business evidence explicitly connects pilot results to strategic priorities. Decision-makers are more receptive when AI outcomes align with goals they already track and care about, such as:

- Improved cost efficiency and productivity across teams or processes

- Revenue growth or revenue protection enabled by better decisions

- Reduced operational, regulatory, or financial risk

- Enhanced customer experience through faster or more consistent outcomes

One of the most persuasive techniques is using clear before-and-after comparisons. Showing how a process performed prior to the AI experiment and how it performed afterward makes the impact tangible. These comparisons help stakeholders see exactly what changed, why it changed, and how much value was created as a result of the AI system.
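A before-and-after comparison boils down to the relative change in each metric against its baseline. In this minimal sketch the metric names and numbers are hypothetical. The comparison only holds if the baseline and pilot periods are comparable (similar volume and seasonality), which is worth stating explicitly when presenting the results.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from the baseline, in percent."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


# Hypothetical baseline (before pilot) and pilot-period measurements.
baseline = {"cycle_time_hours": 48.0, "error_rate_pct": 6.0, "cases_per_week": 300.0}
pilot = {"cycle_time_hours": 30.0, "error_rate_pct": 4.5, "cases_per_week": 360.0}

for metric, before in baseline.items():
    after = pilot[metric]
    print(f"{metric}: {before} -> {after} ({percent_change(before, after):+.1f}%)")
```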

Scenario-based presentations further strengthen credibility by acknowledging uncertainty rather than hiding it. Instead of presenting a single ROI number, teams should outline:

- Conservative scenarios based on minimum observed performance

- Expected scenarios that reflect actual pilot results

- Upside scenarios that assume reasonable improvements as the system matures
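The three scenarios can be computed side by side from the same formula. This sketch takes ROI as (benefit - cost) / cost. The `Scenario` class and all figures are hypothetical placeholders for a team's own documented estimates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    annual_benefit: float  # scaled-up value estimate for this scenario
    annual_cost: float     # run and maintenance cost at scale


def roi(s: Scenario) -> float:
    """ROI as a ratio: (benefit - cost) / cost."""
    return (s.annual_benefit - s.annual_cost) / s.annual_cost


# Hypothetical figures: conservative uses the minimum performance observed in
# the pilot, expected uses the actual pilot average, upside assumes the
# system improves as it matures.
scenarios = [
    Scenario("conservative", annual_benefit=150_000, annual_cost=120_000),
    Scenario("expected", annual_benefit=240_000, annual_cost=120_000),
    Scenario("upside", annual_benefit=330_000, annual_cost=130_000),
]
for s in scenarios:
    print(f"{s.name:>12}: ROI {roi(s):.0%}")
```

Showing all three side by side lets leaders see how much of the case rests on the pilot evidence versus optimistic assumptions.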

Concrete examples add clarity and relevance. Explaining how a specific team reduced manual work, avoided costly errors, or accelerated decision-making helps leaders visualize what scaling could look like in practice. Including non-financial benefits, such as faster response times, improved consistency, or higher confidence in decisions, rounds out the ROI narrative and presents a more realistic picture of the business value of AI experiments.

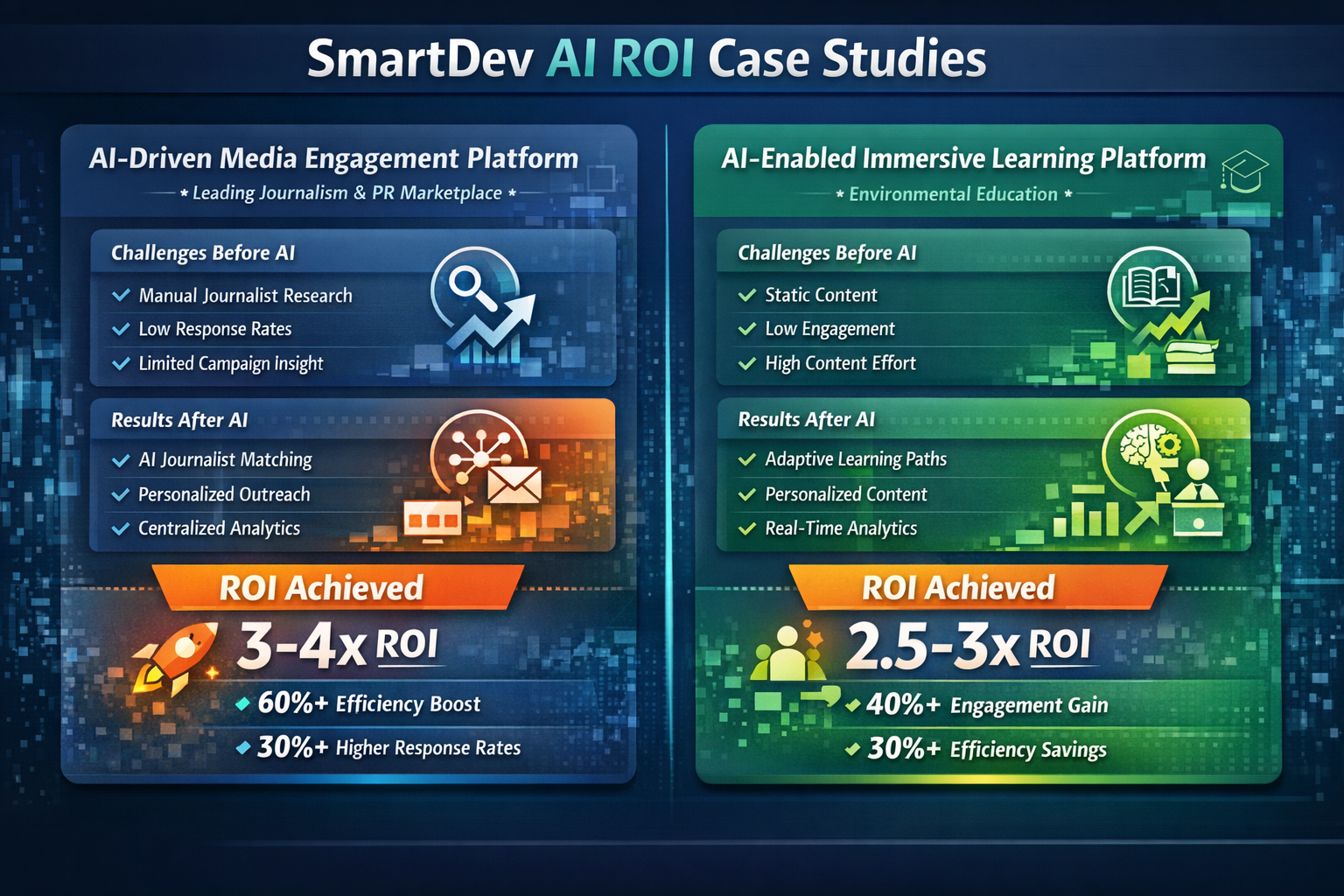

Case Studies: Turning AI Experiments into Measurable Business Impact

Including concrete examples with actual numbers helps make AI business impact metrics real and actionable. Below are two detailed case examples showing how AI pilots produced significant, quantifiable ROI and how those results were communicated to decision-makers.

Case Study 1: AI-Driven Media Engagement Platform

A leading journalism and PR marketplace partnered with SmartDev to build an AI-driven media engagement platform. The goal was to improve how PR teams identify relevant journalists, personalize outreach, and track engagement performance. Prior to the AI initiative, outreach workflows were largely manual, time-consuming, and difficult to measure in terms of business impact.

Baseline Challenges (Before AI Pilot)

- Journalist discovery and outreach relied heavily on manual research

- Low response rates due to generic messaging

- Limited visibility into engagement performance and campaign ROI

- High operational effort with inconsistent results

Pilot Results (After AI Implementation)

- AI-powered journalist matching and recommendation engine deployed

- Automated content relevance scoring and audience segmentation

- Personalized outreach workflows enabled at scale

- Centralized dashboard for engagement tracking and insights

Business Impact

- Outreach efficiency improved by approximately 60–70%

- Journalist response rates increased by an estimated 30–40%

- Campaign preparation time reduced significantly across PR teams

- Overall AI proof of concept ROI estimated at 3–4x compared to manual workflows

How the Results Were Framed

- Operational impact: PR teams reduced manual research and targeting effort by 60–70%, allowing faster campaign setup and higher throughput without additional headcount.

- Business impact: Improved journalist relevance and personalization increased engagement quality, driving 30–40% higher response rates across targeted outreach campaigns.

- Strategic impact: The AI platform established a scalable, data-driven engagement model, enabling consistent performance across campaigns and supporting future growth without proportional cost increases.

Case Study 2: AI-Enabled Immersive Learning Platform

An environmental education organization partnered with SmartDev to deliver a cross-platform, AI-enhanced learning experience. The objective was to increase learner engagement, personalize educational journeys, and improve content effectiveness across web and interactive environments.

Baseline Challenges (Before AI Pilot)

- Static learning content with limited personalization

- Inconsistent learner engagement across platforms

- Difficulty measuring learning effectiveness and content impact

- High effort required to update and adapt educational materials

Pilot and Expansion Results

- AI-driven content personalization based on learner behavior

- Adaptive learning paths integrated across web platforms

- Real-time analytics to track engagement and learning progress

- Seamless cross-platform experience for learners and educators

Business and Learning Impact

- Learner engagement levels increased by approximately 40–50%

- Content relevance and completion rates improved significantly

- Educator content management effort reduced by 30%+

- Early AI project ROI calculation showed ~2.5–3x return driven by efficiency and engagement gains

How This Translated to Business Evidence

- Before-and-after comparisons highlighted clear improvements in engagement and completion

- Measurable gains in efficiency supported ROI justification for further investment

- Alignment with organizational goals around scalability and impact enabled broader rollout

This case demonstrated how measuring AI pilot success through engagement and efficiency metrics provided decision-makers with confidence to scale the platform beyond the initial pilot.

Key Takeaway from SmartDev Case Studies

These SmartDev-led initiatives show how early AI ROI measurement turns pilots into decision-ready evidence. By focusing on AI business impact metrics from the start and framing results in operational, strategic, and ROI terms, organizations can confidently move from experimentation to scale.

Explore how SmartDev partners with teams through a focused AI discovery sprint to validate business problems, align stakeholders, and define a clear path forward before development begins.

SmartDev helps organizations clarify AI use cases and feasibility through a structured discovery process, enabling confident decisions and reduced risk before committing to build.

Learn how companies accelerate AI initiatives with SmartDev’s discovery sprint.

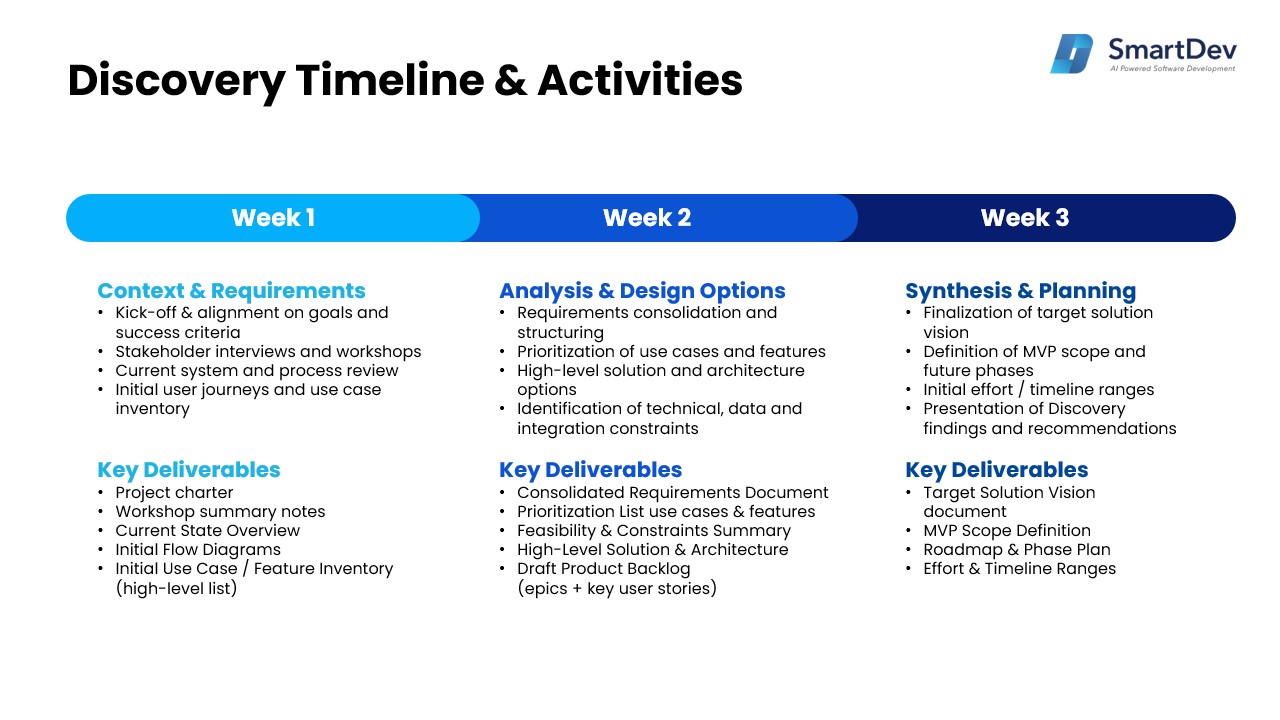

How SmartDev’s 3-Week AI Discovery Turns AI Ideas into Decisions

SmartDev’s 3-week AI Discovery is designed to turn an AI idea into a validated, decision-ready plan. During this phase, SmartDev works closely with business and technical stakeholders to clearly define the problem, identify the highest-value AI use case, and establish success criteria tied to AI ROI measurement. The goal is not exploration for its own sake, but early validation of business value.

Within three weeks, SmartDev delivers a tangible foundation. This includes a lightweight AI prototype or proof of concept, a high-level system architecture, and a prioritized roadmap. Just as importantly, the discovery defines AI business impact metrics and an initial AI project ROI calculation, so the organization understands what value success should create and how it will be measured.

By the end of the 3-week discovery, organizations gain clarity and confidence. They know whether the AI initiative should move forward, how it should be built, and what evidence will justify scaling. This reduces risk, prevents wasted development effort, and ensures that any next step (build, pivot, or stop) is grounded in real business insight rather than assumptions.

How SmartDev’s 10-Week AI Product Factory Leverages Early AI ROI Measurement

Many organizations run AI pilots, but struggle to convert experimental results into credible business evidence. Models may perform well, yet leaders still lack clarity on AI proof of concept ROI and whether further investment is justified.

This is the exact gap SmartDev’s AI Product Factory is designed to address. Instead of treating ROI as a post-deployment exercise, the factory embeds AI ROI measurement from the earliest stage, ensuring that every AI experiment is built to answer a clear business question: does this initiative deliver measurable value, and should it be scaled, adjusted, or stopped?

The 10-Week AI Product Factory: Designed for Early ROI Evidence

SmartDev’s AI Product Factory helps organizations rapidly validate AI use cases, reduce product risk, and accelerate time-to-market compared to traditional development cycles. More importantly, it operationalizes early AI project ROI calculation by structuring experimentation around business outcomes, not just technical feasibility.

Each phase of the factory is intentionally aligned with best practices for:

- Measuring AI pilot success using operational and financial metrics

- Defining AI business impact metrics before development begins

- Translating the business value of AI experiments into decision-ready evidence

This approach ensures that AI initiatives generate insight quickly, long before major scale investment is required.

Phase 1: Define & Discover (Weeks 1–2). Framing AI Proof of Concept ROI

Phase 1 directly supports early AI ROI measurement by transforming AI ideas into testable business hypotheses. Instead of starting with models or data, SmartDev works with stakeholders to define what success must look like from a business perspective.

During this phase, teams:

- Align on business objectives and success criteria

- Define AI business impact metrics tied to cost reduction, efficiency, or revenue

- Establish an initial AI proof of concept ROI hypothesis

- Identify assumptions and risks that affect ROI

By the end of Phase 1, AI experiments are clearly scoped around measurable outcomes, creating a strong foundation for credible ROI evaluation.

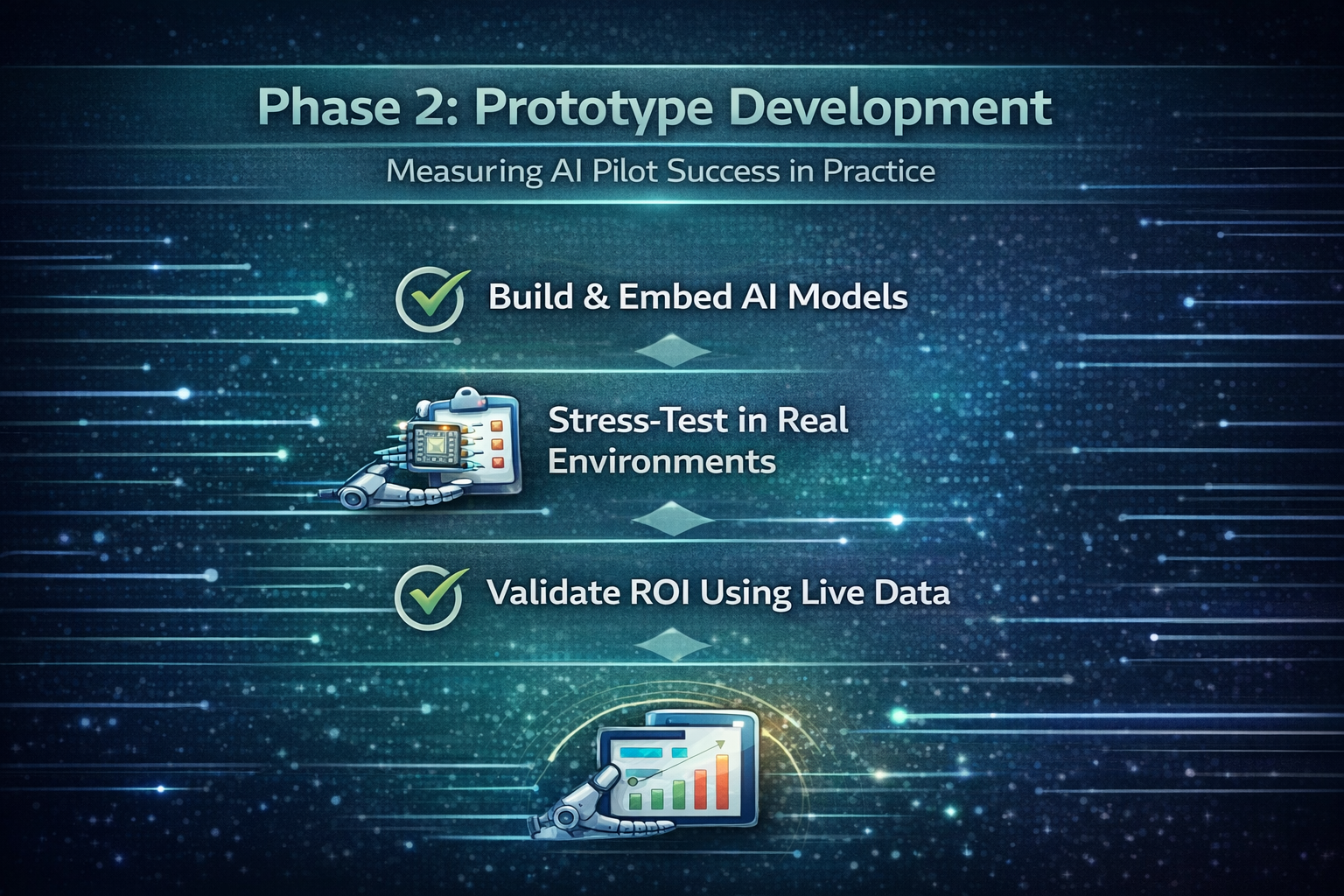

Phase 2: Prototype Development (Weeks 3–8). Measuring AI Pilot Success in Practice

Phase 2 is where AI experiments begin generating real business signals, not just technical outputs. This phase reflects the principle that measuring AI pilot success must happen during execution, not after delivery.

SmartDev focuses development on:

- High-impact workflows that directly move AI business impact metrics, ensuring every feature contributes to measurable cost, revenue, or productivity outcomes.

- AI models fully embedded and stress-tested in real operational environments, exposing performance, adoption, and integration risks early.

- Ongoing validation of AI project ROI calculation using live pilot data, turning assumptions into evidence throughout delivery.
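Ongoing validation can be as simple as the following loop:

- Recompute an annualized run-rate from each week of live pilot data.
- Compare it with the figure assumed in the business case.

The sketch below is hypothetical: the function names and figures are illustrative only. It uses naive linear extrapolation, so early weeks that are not yet at steady state can understate or overstate the annual value.

```python
def annualized_run_rate(weekly_observed: list[float], weeks_per_year: int = 52) -> float:
    """Project an annual figure from the average of the weeks observed so far."""
    return sum(weekly_observed) / len(weekly_observed) * weeks_per_year


def assumption_gap(assumed_annual: float, weekly_observed: list[float]) -> float:
    """Relative gap between live evidence and the planning assumption.

    Negative means the pilot is tracking below the assumption.
    """
    return (annualized_run_rate(weekly_observed) - assumed_annual) / assumed_annual


# Hypothetical: the business case assumed 100,000 in annual savings;
# these are savings measured in each pilot week so far.
assumed = 100_000.0
observed = [1_500.0, 1_700.0, 1_800.0, 2_000.0]
for week in range(1, len(observed) + 1):
    so_far = observed[:week]
    print(
        f"after week {week}: run-rate {annualized_run_rate(so_far):,.0f} "
        f"({assumption_gap(assumed, so_far):+.0%} vs assumption)"
    )
```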

Through iterative demos and feedback loops, stakeholders can already observe where value is being created, where adoption friction exists, and whether expected ROI remains achievable. This turns experimentation into measurable business value rather than speculative promise.

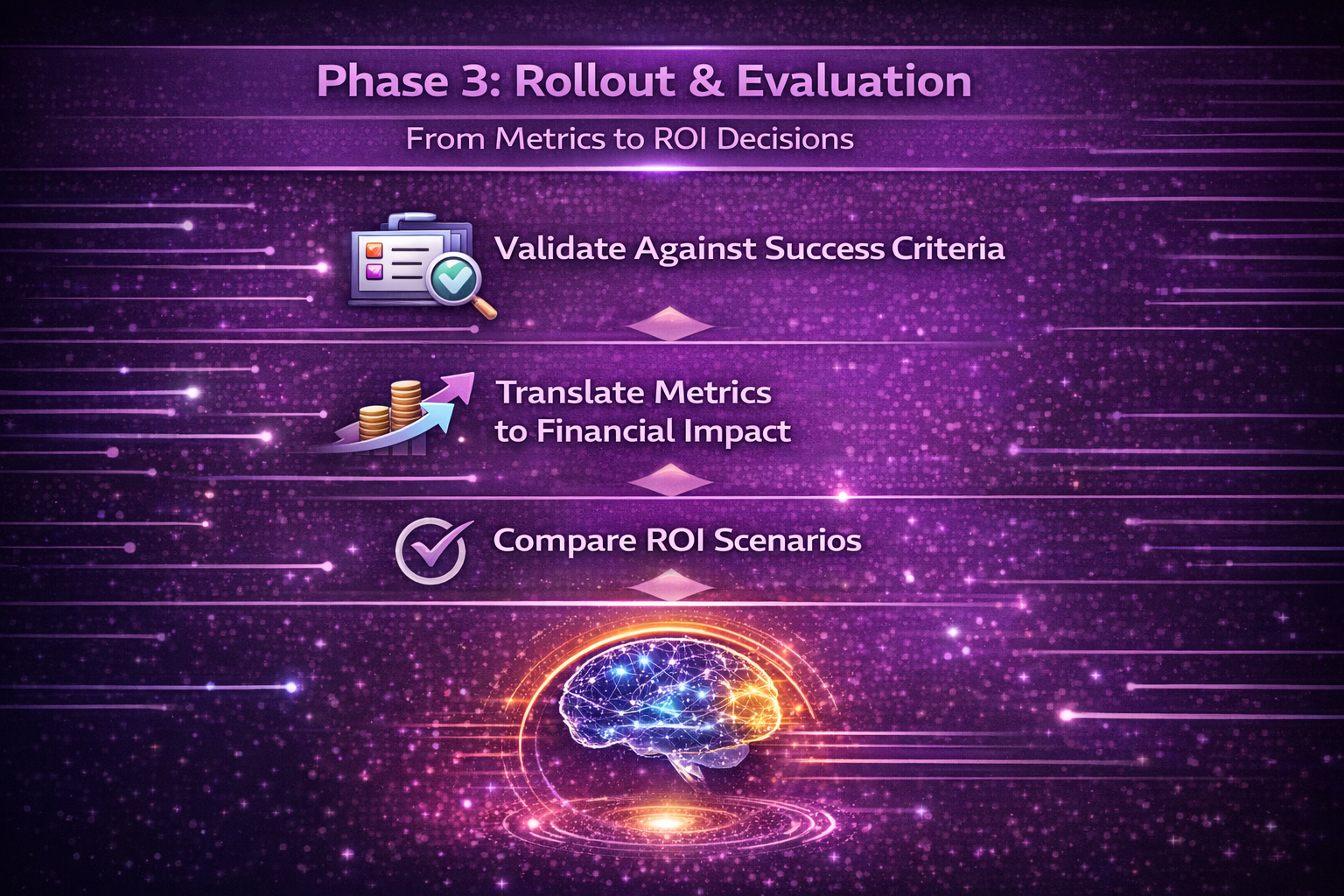

Phase 3: Rollout & Evaluation (Weeks 9–10). From Metrics to ROI Decisions

The final phase transforms collected metrics into clear ROI insights. This is where early AI ROI measurement enables confident decision-making.

SmartDev helps organizations:

- Validate performance against predefined success criteria

- Translate operational metrics into financial impact

- Compare conservative, expected, and upside ROI scenarios

The outcome is a clear, evidence-based view of AI proof of concept ROI, allowing leaders to decide whether to scale, pivot, or stop the initiative. This ensures AI investments are guided by data, not optimism.
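The scale, pivot, or stop decision can be made explicit by mapping per-criterion results to a recommendation. The rule in this sketch is a hypothetical simplification:

- All minimum thresholds met: scale.
- Some met: pivot.
- None met: stop.

In practice, leaders would also weigh the scenario ranges and strategic fit. The criteria names are invented for illustration.

```python
def recommend(criteria_met: dict[str, bool]) -> str:
    """Map per-criterion results (minimum threshold met or not) to a next step.

    Hypothetical rule: all met -> scale; some met -> pivot; none met -> stop.
    """
    if not criteria_met:
        raise ValueError("no success criteria defined")
    met = sum(criteria_met.values())  # True counts as 1
    if met == len(criteria_met):
        return "scale"
    if met > 0:
        return "pivot"
    return "stop"


results = {
    "processing effort reduced >= 15%": True,
    "error rate reduced >= 10%": True,
    "user adoption >= 60%": False,
}
print(recommend(results))  # pivot
```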

From Measuring AI ROI Early to Acting on It

SmartDev’s AI Product Factory delivers the execution model that makes early ROI validation possible through a structured Proof of Concept. By embedding ROI thinking into discovery, development, and evaluation, the PoC is designed from day one to generate business evidence, not just a working model. This gives organizations clarity fast and significantly reduces investment risk.

Every PoC becomes a controlled experiment with clear success criteria. Every pilot produces data that strengthens AI project ROI calculation, replacing assumptions with measurable results. And every scale, pivot, or stop decision is backed by concrete AI business impact metrics, not intuition.

If your organization wants to move beyond AI experimentation and start proving value early, investing in the right PoC is essential. SmartDev’s AI Product Factory provides the structure, speed, and discipline needed to turn PoCs into confident business decisions.

Conclusion

Measuring AI ROI early shifts AI initiatives from experimentation to execution. By grounding pilots in clear success criteria and practical metrics, organizations can move beyond technical results and demonstrate real business impact. Early ROI measurement builds confidence, reduces uncertainty, and ensures AI investments are evaluated with the same rigor as any strategic initiative.

More importantly, early AI ROI enables better decisions. It provides the evidence needed to scale promising initiatives, pivot intelligently when partial value emerges, or stop projects that do not justify further investment. This disciplined approach protects resources while accelerating adoption of AI solutions that truly matter to the business.

If your organization is running AI pilots but struggling to prove value, now is the time to act. Start embedding AI ROI measurement into your experimentation process and turn every pilot into decision-ready evidence. The sooner ROI is measured, the faster AI can deliver meaningful, defensible business results.