Introduction

In the high-stakes world of software development, the most expensive mistake isn’t a bug in the code; it’s building a product that cannot survive in the real world. Industry data shows that nearly 35% of IT projects fail because of poor upfront planning and undefined requirements. At SmartDev, we see this not as a failure of engineering, but as a failure of technical feasibility assessment.

For CTOs and product managers, the excitement of a new vision often overshadows the gritty reality of execution. You have a concept, a budget, and a deadline. But do you have the architectural runway to support 100,000 concurrent users? Is your legacy database compatible with modern cloud infrastructure? Are there hidden compliance landmines in your proposed data flow?

These are the questions a Technical Feasibility Study answers. It is the bridge between a “good idea” and a “shippable product.” At SmartDev, we don’t just estimate hours; we validate reality. By embedding a rigorous technical assessment into our Project Discovery Phase, we de-risk your investment before a single line of code is written. This guide outlines exactly what we evaluate during this critical phase, ensuring your project is not just possible, but profitable and scalable.

What is a Technical Feasibility Study?

A Technical Feasibility Study is an evidence-based assessment conducted to determine if a proposed software solution can be built effectively with the available technologies, budget, and timeframe.

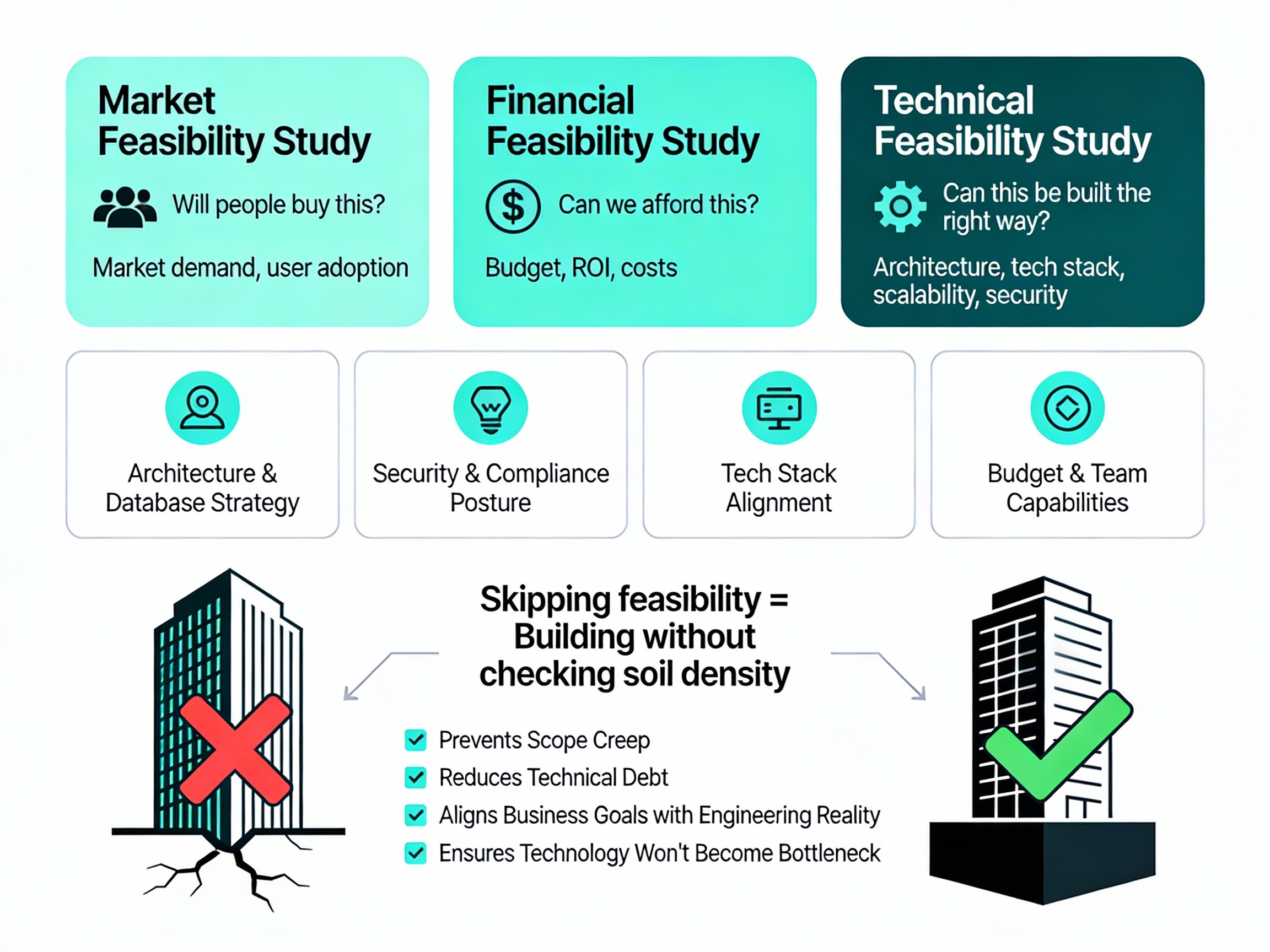

Unlike a market feasibility study (which asks, “Will people buy this?”) or a financial feasibility study (“Can we afford this?”), the technical study asks: “Can this be built with the right technology choices, and should it be built this way?”

In the context of modern software development, this study is not a passive report. It is an active stress test of your project’s assumptions. It evaluates whether your chosen architecture, database strategy, security posture, and tech stack align with your business objectives, budget constraints, and team capabilities.

Why It Is the Foundation of Success

Skipping this step is akin to building a skyscraper without checking the soil density. You might get the first few floors up, but eventually, the structure will crack.

A robust Software Feasibility Study aligns your business goals with engineering reality. It helps prevent the dreaded scope creep and technical debt that plague rushed projects, ensuring that the technology you choose today won’t become a bottleneck tomorrow. The difference between a well-planned project and a chaotic one often comes down to whether feasibility was properly assessed upfront.

The Role of Technical Feasibility in the Project Discovery Phase

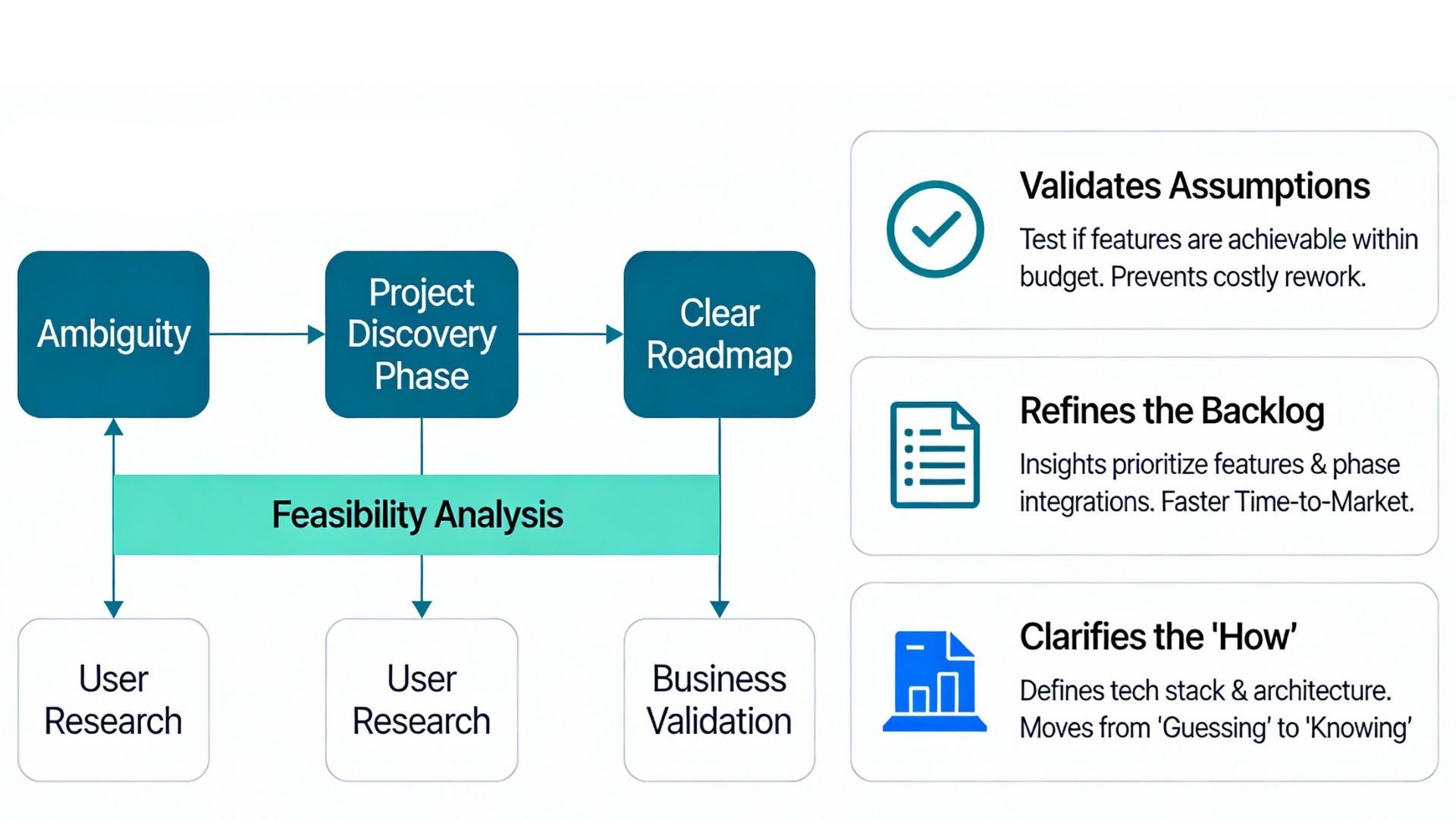

The Project Discovery Phase is the initial stage of the software development lifecycle (SDLC) where ambiguity is transformed into a clear roadmap.

While discovery involves user research and UI/UX prototyping, the technical backbone is the Feasibility Analysis. At SmartDev, we treat the discovery phase as a structured, time-boxed engagement, typically 2 to 4 weeks, in which our solution architects and lead engineers dig deep into your requirements, validating every critical assumption.

How Feasibility Supports Discovery

Validates Assumptions: We test if your desired features and performance targets are achievable within your budget constraints. This prevents costly rework later in development.

Refines the Backlog: Technical insights from our Technical Risk Assessment help prioritize features. We might advise moving a complex integration to Phase 2 to ensure a faster Time-to-Market (TTM) for your MVP (Minimum Viable Product).

Clarifies the “How”: While the product owner defines what to build, the Feasibility Analysis defines how to build it, selecting the right tech stack and architecture. For legacy system projects, this includes evaluating Legacy System Modernization strategies.

By conducting a thorough Technical Risk Assessment early, we move from “guessing” to “knowing,” providing you with a reliable foundation for the heavy lifting of development that follows.

System Architecture Viability

Your system architecture is the skeleton of your application. If it’s weak, the body collapses under real-world pressure.

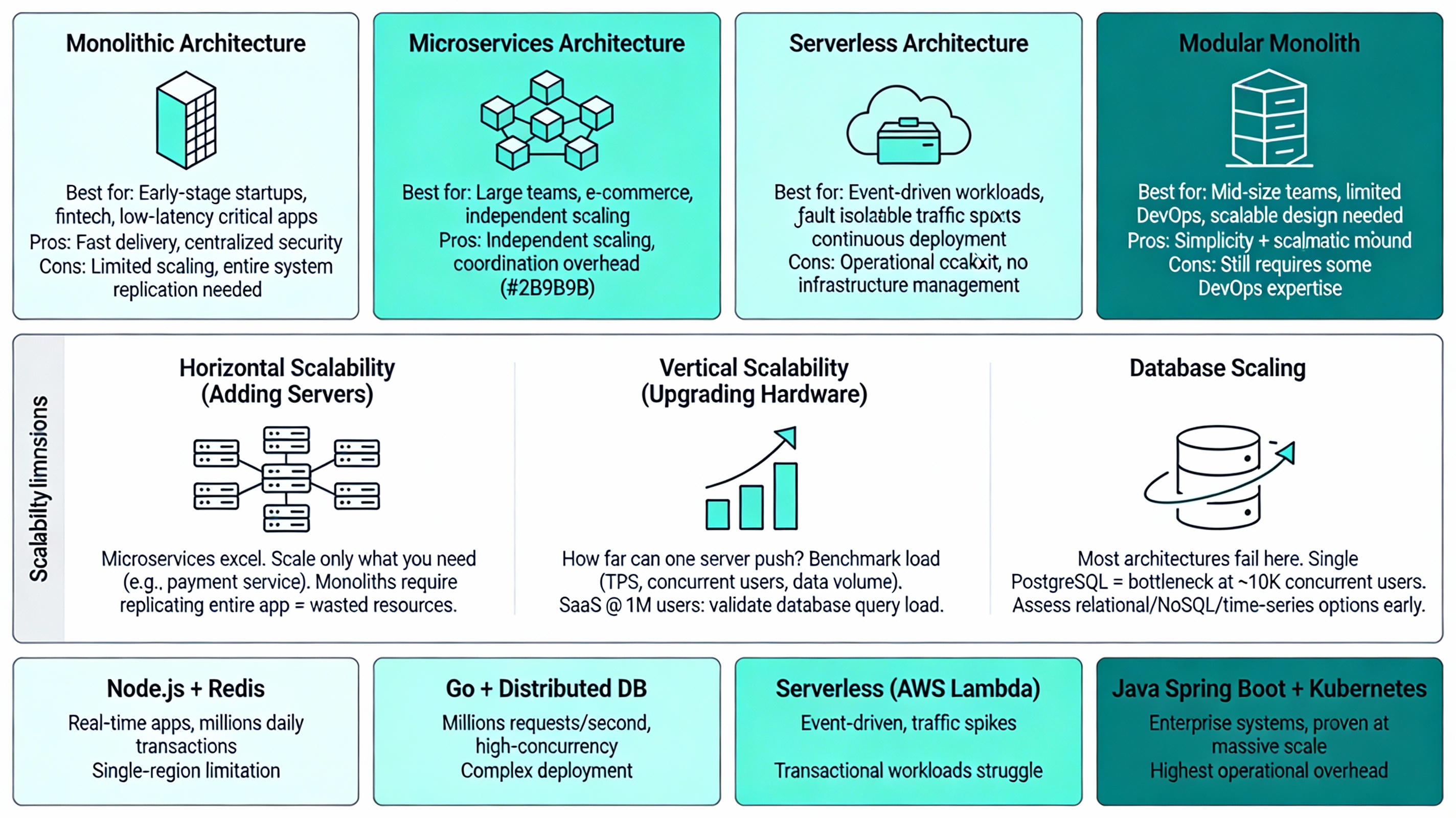

We evaluate whether your proposed architecture (Monolithic, Microservices, Serverless, or Modular Monolith) aligns with your long-term business goals and technical constraints. This is not a theoretical exercise; it is where we determine whether your system can actually survive.

Why Architecture Choice Matters for Technology Fit

Monolithic Architecture: A single unified codebase deployed as one unit. Best for:

- Early-stage startups with small teams (faster initial delivery)

- Financial systems requiring centralized compliance and security controls

- Applications where internal latency is critical (bank transfers, payment processing)

Example: A fintech startup needs rapid time-to-market but strict security and regulatory compliance. A monolithic architecture allows a small team (3-5 developers) to coordinate easily while maintaining centralized security policies and PCI-DSS compliance from day one.

Microservices Architecture: Independent services communicating via APIs. Best for:

- Large, distributed teams working on different domains (payment, fraud detection, analytics)

- E-commerce platforms requiring independent scaling of checkout vs. inventory services

- Platforms needing continuous deployment of individual services without full system restarts

Example: Square Payroll transitioned from monolithic to serverless microservices, reducing system complexity, improving scalability, enhancing fault tolerance, and enabling independent team velocity. If one service fails, others remain operational.

Modular Monolith: Single deployment unit with clear module boundaries and internal APIs. Best for:

- Mid-size teams wanting microservice benefits without operational complexity

- Organizations with limited DevOps maturity but requiring scalable design

- Teams looking for a pragmatic middle ground (an increasingly common choice as of 2025)

Scalability Analysis During Discovery

We evaluate three critical scalability dimensions:

Horizontal Scalability (Adding More Servers): Can your architecture distribute load across multiple instances? Microservices excel here: you can scale only the payment service during peak hours, not the entire system. Scaling a monolith means replicating the entire application, which wastes resources on components that are not under load.

Vertical Scalability (Upgrading Hardware): How far can you push a single server? We benchmark your expected load (transactions per second, concurrent users, data volume) against infrastructure limits. For a SaaS platform expecting 1M users, we validate that your database can handle the query load, not just your application layer.

Database Scaling: This is where many architectures fail. We assess whether your chosen database (relational, NoSQL, time-series) can scale horizontally when data volumes explode. A monolithic system backed by a single PostgreSQL instance can become a bottleneck at around 10,000 concurrent users, depending on query patterns, connection pooling, and hardware. We identify this in discovery, not at launch.
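A first pass at these checks can be done on paper before any benchmarking. The sketch below is illustrative only and assumes hypothetical workload numbers: request rate per user, queries per request, average query time, and per-instance throughput. In practice these figures come from load tests. It applies Little's law (in-flight work = arrival rate × time in system) to estimate concurrent database queries. It then uses a ceiling division to estimate how many replicas a single hot service needs at peak.

```python
import math

def in_flight_queries(concurrent_users: int,
                      requests_per_user_per_sec: float,
                      queries_per_request: float,
                      avg_query_seconds: float) -> float:
    """Little's law: items in the system = arrival rate x average time in system."""
    queries_per_sec = concurrent_users * requests_per_user_per_sec * queries_per_request
    return queries_per_sec * avg_query_seconds

def replicas_needed(peak_requests_per_sec: float, requests_per_sec_per_instance: float) -> int:
    """Horizontal scaling: instances of one service needed to absorb its own peak load."""
    return math.ceil(peak_requests_per_sec / requests_per_sec_per_instance)

# Hypothetical workload: 10,000 users, one request every 5 s each,
# 4 queries per request, 25 ms average query time.
concurrent = in_flight_queries(10_000, 0.2, 4, 0.025)

# Hypothetical budget: 100 usable connections on one database node,
# assuming each in-flight query holds one connection.
CONNECTION_BUDGET = 100
print(f"~{concurrent:.0f} queries in flight vs. budget of {CONNECTION_BUDGET}")
if concurrent > CONNECTION_BUDGET:
    print("Single node at risk: consider pooling, read replicas, or sharding")

# Scale only the hot service: checkout at a hypothetical 1,200 req/s peak,
# with each instance benchmarked at 300 req/s.
print("checkout replicas:", replicas_needed(1_200, 300))
```

With these illustrative inputs, 10,000 users generate about 8,000 queries per second. That works out to roughly 200 queries in flight at any moment, twice the assumed single-node budget. A gap like this is what moves the conversation toward pooling, read replicas, or sharding during discovery.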

Technology Stack Alignment for Scalability

Each technology choice has hard limits on scalability. We don’t recommend random stacks; we match technology to your growth trajectory:

- Node.js with Redis caching: Excellent for real-time applications with moderate data volumes (up to millions of daily transactions). Multi-region deployments require additional design work for cache replication and consistency.

- Go with distributed databases: Ideal for high-concurrency systems (millions of requests per second), but requires complex deployment and specialized team expertise.

- Serverless (AWS Lambda): Perfect for event-driven workloads with unpredictable traffic spikes. Struggles with consistent, high-throughput transactional workloads.

- Java with Spring Boot on Kubernetes: Battle-tested for enterprise systems. Highest operational overhead but proven scalability at massive scale.

We validate that your chosen stack can grow with you, not become the limiting factor.

Data Availability and Quality Assessment

Data is the lifeblood of digital transformation. A beautiful interface is useless if the underlying data is incorrect, incomplete, or inaccessible. During discovery, we conduct a rigorous data quality assessment to understand what data you have, where it lives, how reliable it is, and whether it supports your business logic.

Data Profiling and Inventory

Before we design a new system, we must understand your current data landscape:

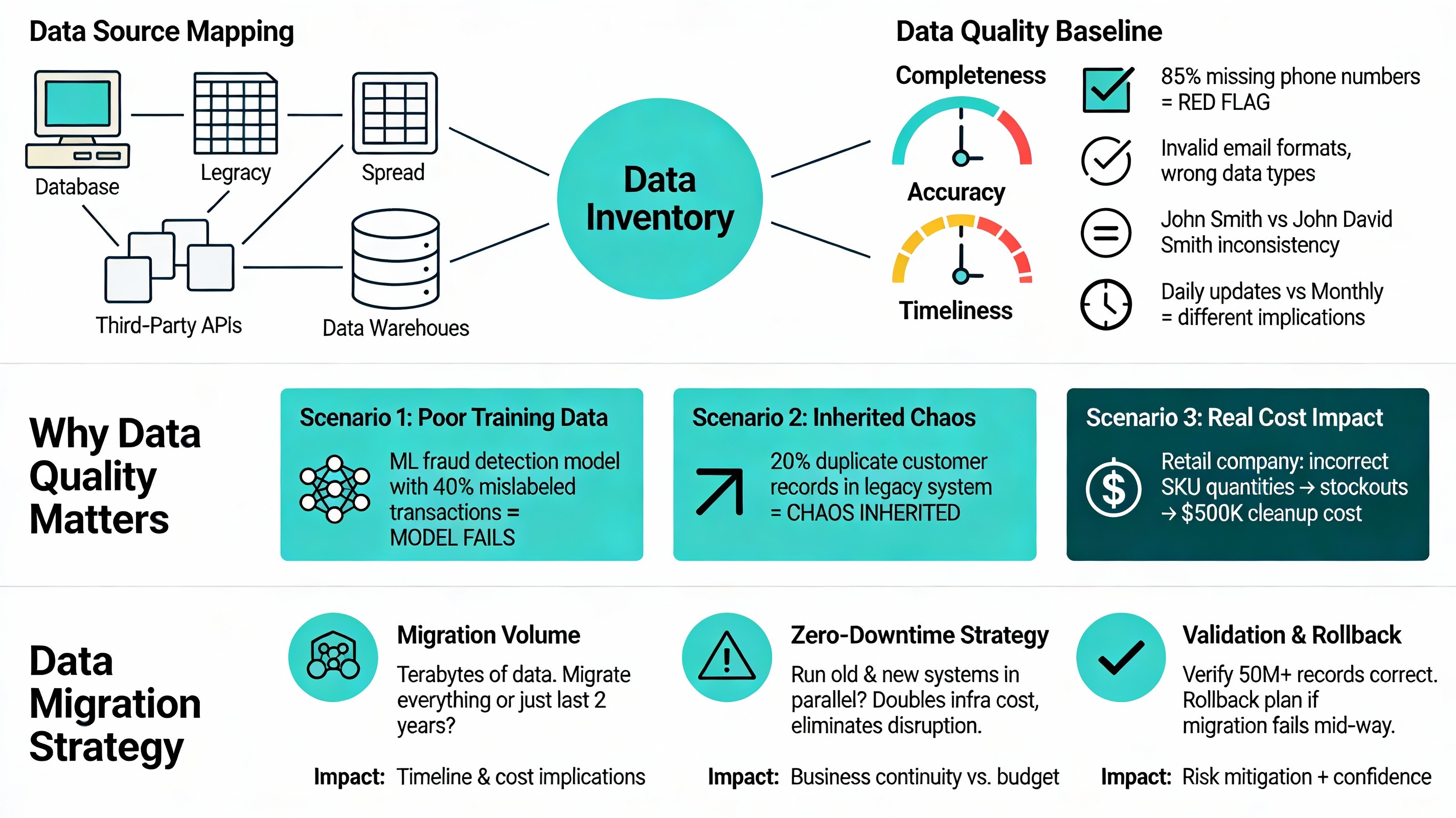

Data Source Mapping: Where does your data live? Legacy databases, spreadsheets, third-party APIs, data warehouses? We create an inventory of all data sources and their connectivity.

Data Quality Baseline: Using data quality assessment frameworks, we measure:

- Completeness: Are required fields populated? (e.g., 85% of customer records missing phone numbers is a red flag)

- Accuracy: How many records contain invalid values? (e.g., email addresses in the wrong format)

- Consistency: Is the same customer data consistent across systems? (e.g., John Smith vs. John David Smith)

- Timeliness: How fresh is the data? (data updated daily vs. monthly has huge implications for real-time features)
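The sketch below shows how these four dimensions can be measured. It is minimal and makes several assumptions. Records are assumed to be already extracted into Python dictionaries with hypothetical fields (`name`, `email`, `phone`, `updated_at`). The email pattern is deliberately loose. The 7-day freshness window is an illustrative choice, not a standard.

```python
import re
from datetime import datetime, timedelta, timezone

# Deliberately loose format check: something@something.tld, no spaces
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def profile(records, required_fields, max_age, now):
    """Completeness, email accuracy, and timeliness as fractions of all records."""
    total = len(records)
    if total == 0:
        return {"completeness": 0.0, "email_accuracy": 0.0, "timeliness": 0.0}
    complete = sum(all(r.get(f) for f in required_fields) for r in records)
    valid_email = sum(bool(EMAIL_RE.match(r.get("email") or "")) for r in records)
    fresh = sum(now - r["updated_at"] <= max_age for r in records)
    return {
        "completeness": complete / total,
        "email_accuracy": valid_email / total,
        "timeliness": fresh / total,
    }

def normalize(name):
    """Case- and whitespace-insensitive comparison key."""
    return " ".join(name.lower().split())

def consistency(system_a, system_b, field):
    """Share of customers present in both systems whose field values agree."""
    shared = system_a.keys() & system_b.keys()
    if not shared:
        return 1.0
    same = sum(normalize(system_a[k][field]) == normalize(system_b[k][field]) for k in shared)
    return same / len(shared)

now = datetime(2025, 1, 31, tzinfo=timezone.utc)
records = [
    {"name": "John Smith", "email": "john@example.com", "phone": "555-0100", "updated_at": now - timedelta(hours=2)},
    {"name": "Ana Lee", "email": "ana(at)example.com", "phone": "", "updated_at": now - timedelta(days=40)},
    {"name": "Bo Chen", "email": "bo@example.org", "phone": "555-0101", "updated_at": now - timedelta(days=3)},
    {"name": "Cy Diaz", "email": None, "phone": "555-0102", "updated_at": now - timedelta(days=1)},
]
print(profile(records, ("name", "email", "phone"), timedelta(days=7), now))

crm = {1: {"name": "John Smith"}, 2: {"name": "Ana Lee"}}
billing = {1: {"name": "John David Smith"}, 2: {"name": "ana  lee"}}
print("name consistency:", consistency(crm, billing, "name"))
```

In this toy data half the records are incomplete, and "John Smith" vs. "John David Smith" is flagged as a cross-system inconsistency. The same checks run against a full extract produce the baseline numbers used to size cleanup work.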

Why Data Quality Determines Project Success

Poor data quality costs projects dearly. If you’re building a machine learning model for fraud detection, but the training data contains 40% mislabeled transactions, your model will fail. If you’re migrating from legacy systems but 20% of customer records have duplicated entries, your new system will inherit this chaos.

Real example: A retail company implemented a new inventory management system without assessing data quality. Half of their SKUs had incorrect quantities in the legacy system. The new system inherited this garbage, leading to stockouts and lost revenue. The fix cost $500K in data cleanup.

During discovery, we identify these data quality issues and propose fixes before they become catastrophic problems. This might mean:

- Allocating 1-2 weeks of development to data cleansing

- Implementing validation rules at API boundaries to prevent bad data

- Using automated data quality tools in your CI/CD pipeline to catch issues early
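Validating at the API boundary can be as simple as rejecting a request before anything is written. The sketch below is minimal and relies on several hypothetical pieces. It uses a hypothetical `create_customer` handler, an in-memory list standing in for the database, and illustrative rules for name, email, and phone. In a real service these checks would typically live in your web framework's request-validation layer.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
PHONE_RE = re.compile(r"\+?[0-9 ()-]{7,20}")  # illustrative, not strict E.164

def validate_customer(payload: dict) -> list:
    """Return human-readable errors; an empty list means the payload is acceptable."""
    errors = []
    if not (payload.get("name") or "").strip():
        errors.append("name is required")
    if not EMAIL_RE.match((payload.get("email") or "").strip()):
        errors.append("email is missing or malformed")
    phone = payload.get("phone")
    if phone is not None and not PHONE_RE.fullmatch(phone):
        errors.append("phone has unexpected characters")
    return errors

def create_customer(payload: dict, store: list) -> tuple:
    """Hypothetical handler: bad data gets a 422 and never reaches storage."""
    errors = validate_customer(payload)
    if errors:
        return 422, {"errors": errors}
    record = {
        "name": payload["name"].strip(),
        "email": payload["email"].strip().lower(),
        "phone": payload.get("phone"),
    }
    store.append(record)
    return 201, record

db = []
print(create_customer({"name": "Ana Lee", "email": "Ana@Example.com"}, db))
print(create_customer({"name": "", "email": "not-an-email"}, db))
```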

Data Migration Strategy

For legacy modernization projects, assessing data migration is often the hardest part of a Technical Feasibility Study.

We assess:

- Migration volume: Terabytes of historical data require careful planning. Do you migrate everything, or just the last 2 years?

- Zero-downtime migration: Can you run old and new systems in parallel? This can roughly double infrastructure costs during the transition, but it minimizes business disruption.

- Validation strategy: How do you verify that 50 million migrated records are correct? We design automated validation checks.

- Rollback plan: If migration fails halfway through, can you revert to the old system? (spoiler: if you haven’t planned this, you’re at massive risk)
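For the validation strategy, one common approach is to compare record counts and per-record fingerprints between source and target. The sketch below assumes both sides have already been mapped to the same field names and that `id` is a unique key. For tens of millions of rows you would stream or batch the comparison, or push it into the databases themselves, rather than hold everything in memory.

```python
import hashlib
import json

def fingerprint(record: dict) -> str:
    """Stable hash of a record; keys are sorted so field order does not matter."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"), default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def compare_migration(source, target, key="id"):
    """Compare source and target rows by key; assumes keys are unique on each side."""
    src = {r[key]: fingerprint(r) for r in source}
    dst = {r[key]: fingerprint(r) for r in target}
    return {
        "source_count": len(src),
        "target_count": len(dst),
        "missing": sorted(src.keys() - dst.keys()),     # never arrived in the target
        "unexpected": sorted(dst.keys() - src.keys()),  # exists only in the target
        "changed": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }

def migration_passes(report) -> bool:
    return not (report["missing"] or report["unexpected"] or report["changed"])

legacy = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": "b@example.com"},
          {"id": 3, "email": "c@example.com"}]
migrated = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": "B@example.com"},
            {"id": 4, "email": "d@example.com"}]
report = compare_migration(legacy, migrated)
print(report, "PASS" if migration_passes(report) else "FAIL")
```

A failing report like this one is also a natural trigger for the rollback plan. The check is cheap to rerun after every migration batch.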

Ready to eliminate hidden technical risks before they cost you millions?

SmartDev's proven discovery process replaces assumptions with validated insights, ensuring your tech stack scales with growth, security is built in from day one, and timelines reflect real complexity, not wishful thinking.

De-risk your investment, align stakeholders around a clear technical strategy, and make confident go/no-go decisions backed by comprehensive feasibility analysis.

Schedule Your Technical Feasibility Assessment Today

Security, Compliance, and Risk Constraints

In an era of GDPR, CCPA, and HIPAA, security cannot be an afterthought. As an ISO/IEC 27001 certified company, SmartDev integrates security into the feasibility layer from day one.

How Technology Architecture Enables Security

Different architectural choices have different security implications. We don’t just say “use SSL”; we design security into the architecture itself:

Monolithic Systems – Centralized Security:

- Single authentication/authorization point (easier to manage, but single point of failure)

- Centralized encryption keys (simpler key rotation, but higher impact if breached)

- Best for: Financial institutions, healthcare where audit trails must be crystal clear

- Risk: If the central auth service goes down, no part of the system can authenticate users; if it is breached, the entire system is exposed

Microservices – Distributed Security:

- Each service has its own authentication and authorization (resilient, but complex)

- Distributed encryption keys (harder to manage, but limits blast radius of key compromise)

- Requires service-to-service authentication (mutual TLS) and API gateway security

- Best for: SaaS platforms, e-commerce where services need independent operation

- Risk: Inconsistent security implementation across services if not carefully governed
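With mutual TLS, each service both presents a certificate and verifies the other side's certificate. The sketch below is minimal and uses Python's standard `ssl` module. The certificate and key paths in the comment are hypothetical placeholders for files issued by an internal CA. Both contexts deliberately trust only the CA you load, not the operating system's public CAs, so a publicly issued certificate cannot call internal services. In practice a service mesh such as Istio can handle this instead of application code.

```python
import ssl
from typing import Optional

def build_mtls_server_context(ca_file: Optional[str] = None,
                              cert_file: Optional[str] = None,
                              key_file: Optional[str] = None) -> ssl.SSLContext:
    """Context for a service that only accepts callers with a valid client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)  # loads no CAs by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # the "mutual" part: no client cert, no connection
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # internal CA that signs service certs
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # this service's identity
    return ctx

def build_mtls_client_context(ca_file: Optional[str] = None,
                              cert_file: Optional[str] = None,
                              key_file: Optional[str] = None) -> ssl.SSLContext:
    """Context for a calling service: verifies the server and presents its own cert."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)  # CERT_REQUIRED + hostname check by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx

# Real deployments pass files from the internal CA, e.g. (hypothetical paths):
# build_mtls_server_context("internal-ca.pem", "orders-svc.crt", "orders-svc.key")
server_ctx = build_mtls_server_context()
client_ctx = build_mtls_client_context()
print(server_ctx.verify_mode, client_ctx.verify_mode, client_ctx.check_hostname)
```

Without a CA file, these contexts trust nothing, so connections fail closed. For distributed security that is the safer default.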

Compliance Mapping to Technology Choices

We map regulatory requirements to specific technology decisions:

GDPR Compliance (EU user data):

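- Requirement: Data residency in the EU (often the simplest way to satisfy GDPR’s restrictions on transferring personal data outside the EU)

- Technology choice: AWS EU regions (Ireland, Frankfurt) or on-premises infrastructure in the EU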

- Architecture impact: Limits horizontal scaling across global regions, increases latency for non-EU users
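A residency rule like this can be checked mechanically against an inventory of data stores, for example as a policy-as-code step during discovery or in CI. The sketch below is minimal and assumes a hypothetical inventory format. `eu-west-1` (Ireland) and `eu-central-1` (Frankfurt) are the AWS region codes for the regions named above. The allowed set is an example policy, not a requirement written into GDPR itself.

```python
from dataclasses import dataclass

# Example policy: EU personal data may only live in these AWS regions
EU_ALLOWED_REGIONS = frozenset({"eu-west-1", "eu-central-1"})  # Ireland, Frankfurt

@dataclass(frozen=True)
class DataStore:
    name: str
    region: str
    holds_eu_personal_data: bool

def residency_violations(stores, allowed_regions=EU_ALLOWED_REGIONS):
    """Names of stores that hold EU personal data outside the allowed regions."""
    return [s.name for s in stores
            if s.holds_eu_personal_data and s.region not in allowed_regions]

# Hypothetical inventory gathered during discovery
inventory = [
    DataStore("users-db", "eu-west-1", True),
    DataStore("analytics-warehouse", "us-east-1", True),  # would violate the policy
    DataStore("static-assets", "us-east-1", False),
]
print(residency_violations(inventory))
```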

HIPAA Compliance (healthcare data):

- Requirement: Encryption at rest and in transit, audit logging, access controls

- Technology choice: Often a monolithic architecture, which simplifies centralized logging and access controls

- Specific tools: CloudHSM for encryption keys, CloudTrail for audit logs, identity management systems

- Cost impact: Infrastructure costs can run 30-40% higher due to compliance overhead

PCI-DSS Compliance (payment data):

- Requirement: Network segmentation, no storage of sensitive authentication data, and tokenization to keep card numbers out of most systems

- Technology choice: Separate payment service (microservice) with restricted access

- Architecture: Payment service isolated on its own infrastructure, accessed only through secure APIs
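Tokenization lets most of the system avoid handling raw card numbers (PANs). The isolated payment service swaps the PAN for a random token, and every other service stores only the token and the last four digits. The sketch below is an in-memory illustration built around a hypothetical `TokenVault` class. A real vault would be a separately hosted, access-controlled service with encrypted storage, or a tokenization service provided by your payment processor.

```python
import secrets

class TokenVault:
    """Hypothetical in-memory stand-in for an isolated tokenization service."""

    def __init__(self):
        self._pan_by_token = {}

    def tokenize(self, pan: str) -> str:
        digits = pan.replace(" ", "")
        if not digits.isdigit() or len(digits) < 12:  # basic sanity check only
            raise ValueError("not a card number")
        token = "tok_" + secrets.token_urlsafe(16)  # random: reveals nothing about the PAN
        self._pan_by_token[token] = digits
        return token

    def detokenize(self, token: str) -> str:
        """Only the payment service itself should ever be able to call this."""
        return self._pan_by_token[token]

def last_four_masked(pan: str) -> str:
    digits = pan.replace(" ", "")
    return "*" * (len(digits) - 4) + digits[-4:]

vault = TokenVault()
card = "4111 1111 1111 1111"  # well-known test card number
order = {"order_id": "A-1001", "card_token": vault.tokenize(card),
         "card_display": last_four_masked(card)}
print(order)  # the order service never stores the full PAN
```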

DevSecOps Integration into Development Timelines

Security is not a phase; it’s built into development from day one. When we design your 3-week discovery, we include security validation at each stage:

Week 1 – Security Planning:

- Identify compliance requirements (GDPR, HIPAA, PCI-DSS)

- Map threat landscape (what could attackers exploit?)

- Define security architecture (how are secrets stored? How is authentication handled?)

- Design risk controls (firewalls, WAF, rate limiting, DDoS protection)

Week 2 – Threat Modeling & Risk Assessment:

- Using frameworks like STRIDE (Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, Elevation of Privilege), we identify attack vectors

- Assess likelihood and impact of each risk

- Propose mitigations: encryption, network segmentation, secrets management
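Once threats are identified, a simple likelihood × impact score is a common way to rank them. STRIDE itself only supplies the six categories. The 1-5 scales, the scoring formula, the cut-off of 12, and the example threats below are all illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum

class Stride(Enum):
    SPOOFING = "Spoofing"
    TAMPERING = "Tampering"
    REPUDIATION = "Repudiation"
    INFORMATION_DISCLOSURE = "Information Disclosure"
    DENIAL_OF_SERVICE = "Denial of Service"
    ELEVATION_OF_PRIVILEGE = "Elevation of Privilege"

@dataclass
class Threat:
    description: str
    category: Stride
    likelihood: int  # 1 = rare ... 5 = almost certain
    impact: int      # 1 = negligible ... 5 = severe
    mitigation: str

    def __post_init__(self):
        for name in ("likelihood", "impact"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

def prioritize(threats, threshold=12):
    """Threats at or above the threshold, highest score first."""
    return sorted((t for t in threats if t.score >= threshold),
                  key=lambda t: t.score, reverse=True)

threats = [
    Threat("Stolen API key used to call the payment service", Stride.SPOOFING, 4, 5,
           "Short-lived tokens, mutual TLS"),
    Threat("Admin deletes audit log entries", Stride.REPUDIATION, 2, 4,
           "Append-only, centrally shipped logs"),
    Threat("Login endpoint flooded with requests", Stride.DENIAL_OF_SERVICE, 3, 4,
           "Rate limiting, WAF"),
]
for t in prioritize(threats):
    print(f"{t.score:>2}  {t.category.value}: {t.description} -> {t.mitigation}")
```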

Week 3 – Security in Development Process:

- Design DevSecOps practices: automated security testing in CI/CD, infrastructure-as-code scanning, dependency vulnerability checking

- Establish SAST/DAST (Static/Dynamic Application Security Testing) in your build pipeline

- Plan security training for development teams
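In the pipeline, the output of SAST, dependency, and infrastructure-as-code scanners typically feeds a gate that fails the build on serious findings. The sketch below rests on two assumptions. First, scanner results have already been normalized into a hypothetical common format (`id`, `tool`, `severity`, `title`). Second, a security lead maintains a list of explicitly accepted findings. The finding IDs are made up, and a CI step would exit with the returned code.

```python
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}

def blocking_findings(findings, fail_at="high", accepted_ids=frozenset()):
    """Findings at or above the threshold that nobody has explicitly accepted."""
    threshold = SEVERITY_RANK[fail_at]
    return [f for f in findings
            if SEVERITY_RANK[f["severity"].lower()] >= threshold
            and f["id"] not in accepted_ids]

def gate(findings, fail_at="high", accepted_ids=frozenset()) -> int:
    """Return a process exit code: 0 lets the build continue, 1 fails it."""
    blocking = blocking_findings(findings, fail_at, accepted_ids)
    for f in blocking:
        print(f"BLOCKING {f['severity'].upper()} {f['id']} ({f['tool']}): {f['title']}")
    return 1 if blocking else 0

# Hypothetical, already-normalized scanner output
findings = [
    {"id": "DEP-001", "tool": "dependency-scan", "severity": "critical",
     "title": "Library with a known CVE"},
    {"id": "SAST-014", "tool": "sast", "severity": "medium",
     "title": "Weak hash used for a non-security cache key"},
    {"id": "IAC-003", "tool": "iac-scan", "severity": "high",
     "title": "Storage bucket allows public read"},
]
exit_code = gate(findings)  # a CI step would exit with this code
```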

Why Security Maturity Matters for Implementation

We assess your organization’s Cloud Security Maturity level and recommend technologies accordingly:

Level 1 – Ad Hoc Security: Small teams, no formal processes

- Recommendation: Monolithic architecture with managed security services (e.g., Amazon Cognito for authentication, AWS WAF)

- Why: Fewer moving parts = fewer security configuration mistakes

Level 2 – Basic Controls: Security policies exist, basic monitoring

- Recommendation: Microservices with centralized identity management and API gateway

- Why: Can handle distributed architecture without losing control

Level 3+ – Mature Security: Continuous monitoring, threat hunting, incident response

- Recommendation: Advanced microservices with service mesh security (Istio), zero-trust architecture

- Why: Infrastructure can handle the complexity of distributed security

Real timeline impact: A company at Level 1 security maturity attempting a microservices architecture will spend an extra 4-6 weeks on security implementation during development. A company at Level 3+ can execute the same architecture in 2-3 weeks because their processes and tooling are already optimized.

Development Effort and Delivery Risk Estimation

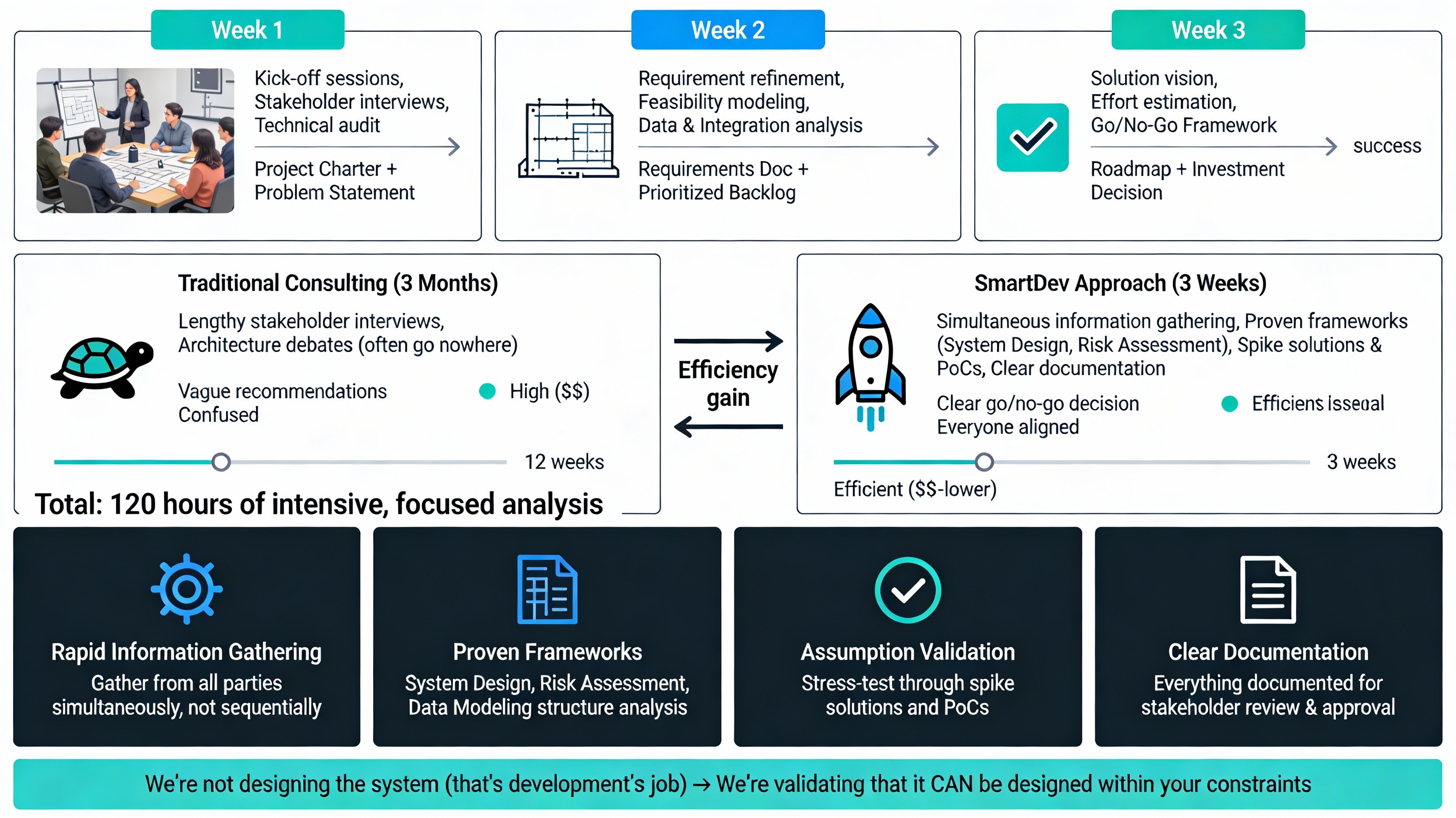

Why can SmartDev deliver results in 3 weeks of discovery instead of 3 months of guessing? Because we’ve mapped the entire technical landscape.

Our process is detailed in “How SmartDev’s 3-Week AI Discovery Program Reduces Product Risk,” which demonstrates how we align business goals, data realities, and technology feasibility before development begins.

The 3-Week Discovery Timeline Explained

Week 1: Business Goals, User Pain Points, and Problem Validation (40 hours)

The foundation of technical feasibility is business clarity. As emphasized in our discovery guide, we start by validating the problem:

- Kick-off sessions: Align on business goals and success criteria.

- Stakeholder interviews: Identify core user journeys and constraints.

- Technical audit: Review existing systems, data ownership, and tech stack constraints.

- Deliverable: A clear project charter and shared problem statement that anchors technical decisions.

Week 2: Translating Business Problems Into AI-Ready Use Cases (40 hours)

Once the problem is validated, we shift to solution architecture:

- Requirement refinement: Translating business needs into prioritized technical features.

- Feasibility modeling: Evaluating high-level solution options (e.g., build vs. buy, cloud vs. on-prem).

- Data & Integration Deep Dive: Identifying data constraints and integration risks early.

- Deliverable: A consolidated requirements document and prioritized backlog that balances value with technical reality.

Week 3: Decision-Making Framework and Risk Prioritization (40 hours)

The final week focuses on the roadmap and investment decision:

- Solution vision: Finalizing the target architecture and MVP scope.

- Effort estimation: Providing high-level timeline ranges and resource needs.

- Go/No-Go Framework: A clear recommendation based on technical feasibility and business value.

- Deliverable: A comprehensive roadmap and phased delivery plan that enables confident decision-making.

Why 3 Weeks Isn’t Fast & Loose: It’s Intensive & Focused

Many companies say, “3 weeks seems too short to plan a $2M project.” But here’s the secret: we don’t guess. We stress-test every assumption.

Instead of spending 3 months interviewing stakeholders and debating architectures (which often go nowhere), we:

- Gather information rapidly from all parties simultaneously

- Use proven frameworks (System Design, Risk Assessment, Data Modeling) to structure analysis

- Validate assumptions through spike solutions and PoCs

- Document everything clearly for stakeholders to review and approve

Comparison to traditional consulting:

- Traditional approach: 3 months discovery, vague recommendations, stakeholders confused

- SmartDev approach: 3 weeks intense discovery, clear go/no-go decision, everyone aligned

The speed comes from focus: we’re not designing the system (that’s development’s job), we’re validating that it can be designed and executed within your constraints.

Effort Estimation Confidence Through Technology Analysis

Here’s how we convert technical analysis into confidence in delivery timelines:

High Confidence (±10% estimate accuracy):

- Using proven, mature tech stack (Java Spring Boot, React, PostgreSQL)

- Clear data structures and API requirements

- No major legacy system integration

- Team skills match technology choices

- Typical timeline: 12-20 weeks

Medium Confidence (±25% estimate accuracy):

- Some newer technologies (Rust, Golang, GraphQL)

- Moderate third-party integrations (Stripe, Salesforce APIs)

- Some legacy system migration

- Minor skill gaps, training budget allocated

- Timeline range: 16-32 weeks, with contingency

Low Confidence (±50% estimate accuracy):

- Unproven or bleeding-edge tech (custom blockchain, novel ML architectures)

- Major legacy system rewrite

- Uncertain compliance requirements

- Significant skill gaps or team building needed

- Recommendation: break into phases, don’t commit to final budget

By the end of Week 3, we’ll tell you which category you fall into, and why. This honest assessment prevents the project from starting on false assumptions.
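The accuracy bands translate directly into planning ranges. The sketch below is minimal and assumes the ± percentages above are applied symmetrically to a single point estimate in weeks. Real estimates tend to skew toward overruns, so the upper bound deserves extra attention.

```python
# Accuracy bands from the confidence tiers above
CONFIDENCE_BANDS = {"high": 0.10, "medium": 0.25, "low": 0.50}

def estimate_range(point_estimate_weeks: float, confidence: str) -> tuple:
    """Return (optimistic, pessimistic) weeks for a point estimate and confidence tier."""
    band = CONFIDENCE_BANDS[confidence]
    return point_estimate_weeks * (1 - band), point_estimate_weeks * (1 + band)

for tier in ("high", "medium", "low"):
    low_weeks, high_weeks = estimate_range(20, tier)
    print(f"{tier:>6}: {low_weeks:.0f}-{high_weeks:.0f} weeks")
```

A 20-week point estimate becomes 18-22 weeks at high confidence, 15-25 at medium, and 10-30 at low. That width is why low-confidence projects are better split into phases than committed to a single budget.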

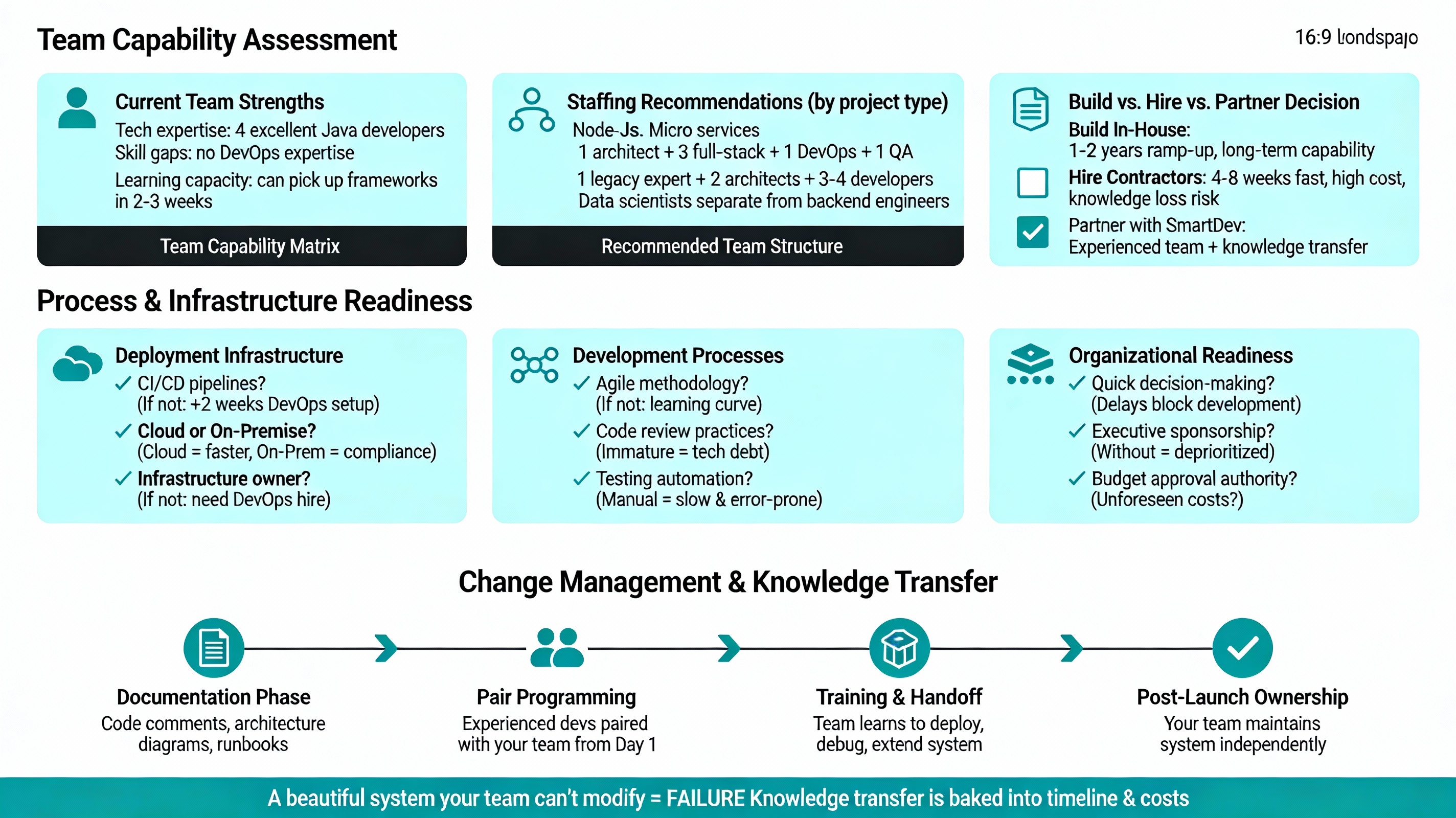

Your Business Readiness with SmartDev

Technical feasibility is only half the equation. The other half is organizational readiness: Do you have the team, processes, and commitment to succeed?

Team Capability Assessment

During discovery, we evaluate:

Current Team Strengths:

- What technologies does your team know well? (e.g., “we have 4 excellent Java developers”)

- What gaps exist? (e.g., “we need DevOps expertise but have none”)

- What’s the learning capacity? (e.g., “team can pick up new frameworks in 2-3 weeks”)

Staffing Recommendations:

- For a Node.js microservices project, we recommend 1 senior architect, 3 mid-level full-stack developers, 1 DevOps engineer, 1 QA engineer

- For a legacy migration, we recommend 1 legacy system expert (to understand the old system), 2 new system architects, 3-4 developers

- For AI/ML projects, we recommend data scientists separate from backend engineers (these are distinct skill sets)

Build vs. Hire vs. Partner Decision:

- Build in-house? Only if your team has 1-2 years to ramp up on new technologies. Good for long-term capability.

- Hire contractors? Fast ramp-up (4-8 weeks) but higher cost, and knowledge leaves when they do.

- Partner with a dedicated team (like SmartDev)? The Dedicated Team model gives you experienced developers plus knowledge transfer.

Process & Infrastructure Readiness

Deployment Infrastructure:

- Do you have CI/CD pipelines? (If not, first 2 weeks of development will be DevOps setup)

- Cloud infrastructure or on-premises? (Cloud = faster, on-premises = compliance advantages)

- Who manages infrastructure? (If nobody, you need a DevOps hire)

Development Processes:

- Do you use Agile? (If not, there’s a learning curve)

- Code review practices? (Immature code review leads to technical debt)

- Testing automation? (Manual testing is slow and error-prone)

Organizational Readiness:

- Can stakeholders make decisions quickly? (Delayed decisions block development)

- Is there executive sponsorship? (Projects without C-level support often get deprioritized)

- Budget approval authority? (Do you have sign-off for unforeseen costs?)

Change Management & Knowledge Transfer

We don’t just build systems; we ensure your team can maintain them. During discovery, we recommend:

Knowledge Transfer Plan:

- Will your team take over the system post-launch? (If yes, they need to understand architecture early)

- Pair programming: experienced developers paired with your team from day 1

- Documentation: code comments, architecture diagrams, runbooks for operations

- Training: how to deploy, debug, and extend the system

Why this matters: A project that delivers a beautiful system but leaves your team unable to modify it is a failure. We factor knowledge transfer into timelines and costs.

Conclusion

A Technical Feasibility Study is not a luxury. It protects your investment by validating architecture choices, assessing data readiness, embedding security from the ground up, and ensuring realistic timelines. Our intensive 3-week discovery process compresses months of traditional consulting into focused analysis backed by technology decisions, risk assessments, and go/no-go recommendations.

At SmartDev, we don’t believe in “move fast and break things” when breaking things costs millions. We believe in “move fast and validate everything.”

Ready to validate your next big idea with technical rigor?

Don’t gamble on assumptions. Contact SmartDev today to schedule your Project Discovery Consultation and start your development journey with confidence. Our experience across multiple service offerings (Mobile App Development, System Integration, QA & Testing, Dedicated Teams, and AI Proof of Concept) ensures you get pragmatic, proven guidance grounded in real technology constraints.

We’ll give you the clarity to move forward confidently.