Introduction

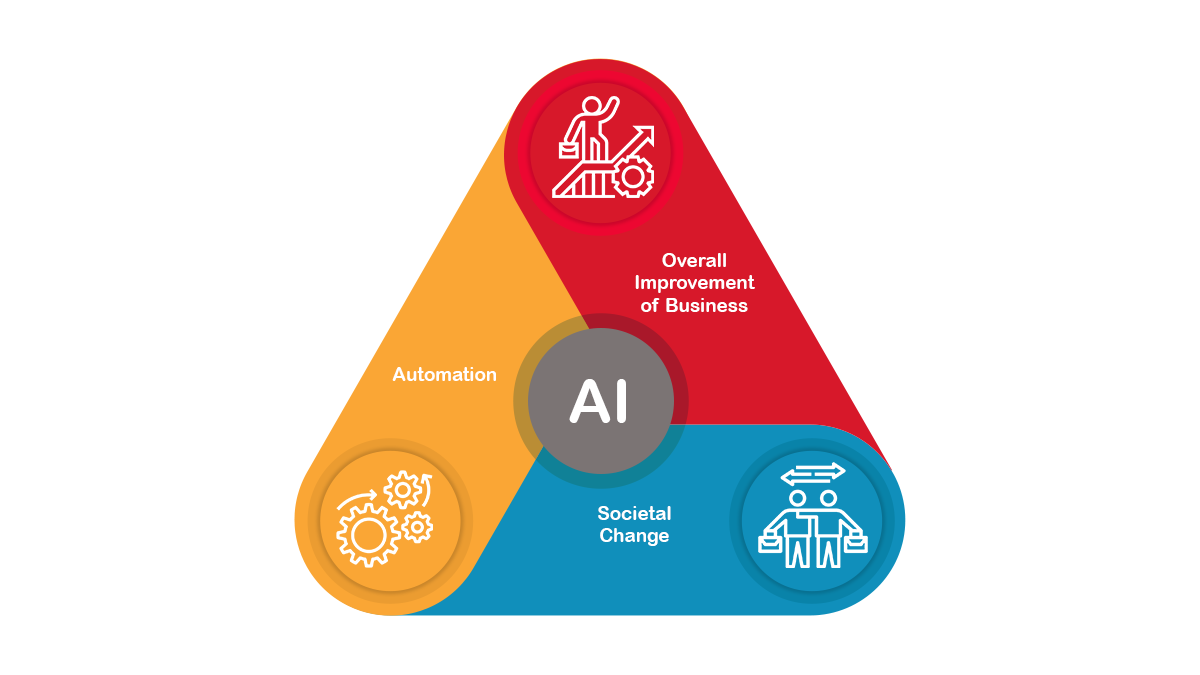

Artificial intelligence is no longer just a buzzword—it’s the backbone of innovation across industries, powering everything from personalized recommendations to autonomous systems. But behind every groundbreaking AI solution is a well-structured AI tech stack—a carefully selected combination of tools, frameworks, and infrastructure that determines the efficiency, scalability, and success of AI projects.

A strong AI tech stack isn’t just about choosing the latest technologies; it’s about creating a seamless workflow from data collection and model training to deployment and continuous optimization. The right stack can mean the difference between an AI solution that scales effortlessly and one that struggles with performance, interoperability, or cost inefficiencies.

In this guide, we’ll break down the key components of an AI tech stack, explore different types of AI architectures, and provide a step-by-step approach to building and managing an efficient AI stack. Whether you’re a startup looking for a lean AI setup or an enterprise aiming for large-scale AI integration, this post will help you make informed decisions and stay ahead of the curve.

Let’s dive in!

1.1. What is an AI Tech Stack?

Imagine building a house without a blueprint or the right tools. It’d be chaotic, wouldn’t it? In the same way, creating and implementing artificial intelligence (AI) solutions requires a structured foundation, and this is where the AI technology stack comes into play.

An AI tech stack is a combination of tools, frameworks, libraries, and infrastructure needed to develop, deploy, and manage AI-powered applications. Think of it as the backbone of AI development, encompassing everything from data collection and preprocessing to model deployment and monitoring. Whether you’re working with natural language processing, computer vision, or predictive analytics, your AI tech stack is the silent enabler making it all possible.

1.2. Importance of a Well-Defined AI Tech Stack

Why does your choice of tech stack matter? The short answer: efficiency, scalability, and success. A well-defined AI stack acts as a north star for teams, ensuring they use the best-fit tools for their specific needs while avoiding redundancies or inefficiencies.

- Boosts Productivity: With the right tools in place, developers spend less time navigating technical hurdles and more time innovating.

- Ensures Scalability: As businesses grow, their AI systems need to adapt. A robust stack supports seamless scaling without major overhauls.

- Enhances Collaboration: Defined tools and processes streamline workflows across cross-functional teams, reducing friction.

- Cost Efficiency: A well-optimized stack minimizes wasted resources by focusing on purpose-fit technologies.

In short, investing in a thoughtful AI tech stack isn’t just about staying competitive; it’s about future-proofing your AI endeavors.

1.3. Evolution of AI Tech Stacks: From Traditional to Modern Approaches

The journey of AI tech stacks reflects the broader evolution of AI itself. In the early days, traditional approaches dominated, with systems that were often rule-based. These systems relied heavily on predefined algorithms and manual programming. Hardware limitations and the absence of advanced frameworks made development labor-intensive, requiring significant time and resources.

The mid-2010s marked a transformative period with the rise of frameworks like TensorFlow and PyTorch. These tools revolutionized AI development by democratizing access to powerful capabilities, offering pre-built functions, and fostering extensive community support. This era enabled developers to achieve more with less effort, setting the stage for rapid progress.

Today, modern approaches to AI tech stacks emphasize automation, cloud integration, and modularity. Advanced solutions like AutoML, MLOps platforms, and serverless architectures empower teams to build smarter, more efficient systems. These innovations have made AI development faster and more accessible, even for organizations with limited specialized resources. The evolution of AI tech stacks highlights how the field has grown from niche applications into a cornerstone of transformative solutions.

1.4. Who Should Care About AI Tech Stacks?

AI tech stacks aren’t just for developers in a room full of code. They have a broader audience:

- Developers: For software engineers and data scientists, understanding the stack means mastering the tools to build, train, and deploy models effectively. It’s the technical roadmap for every project.

- Enterprises: Businesses leveraging AI need to choose or build stacks that align with their goals, ensuring systems integrate seamlessly with existing operations and future needs.

- Startups: For fledgling companies, the right tech stack can mean the difference between rapid innovation and being bogged down by inefficiencies.

- IT Operations Teams: With the growing emphasis on MLOps and system reliability, IT teams play a vital role in maintaining and optimizing the tech stack.

At its core, anyone involved in the journey of developing or leveraging AI technologies should care about AI tech stacks. They are the linchpin connecting innovation with real-world impact.

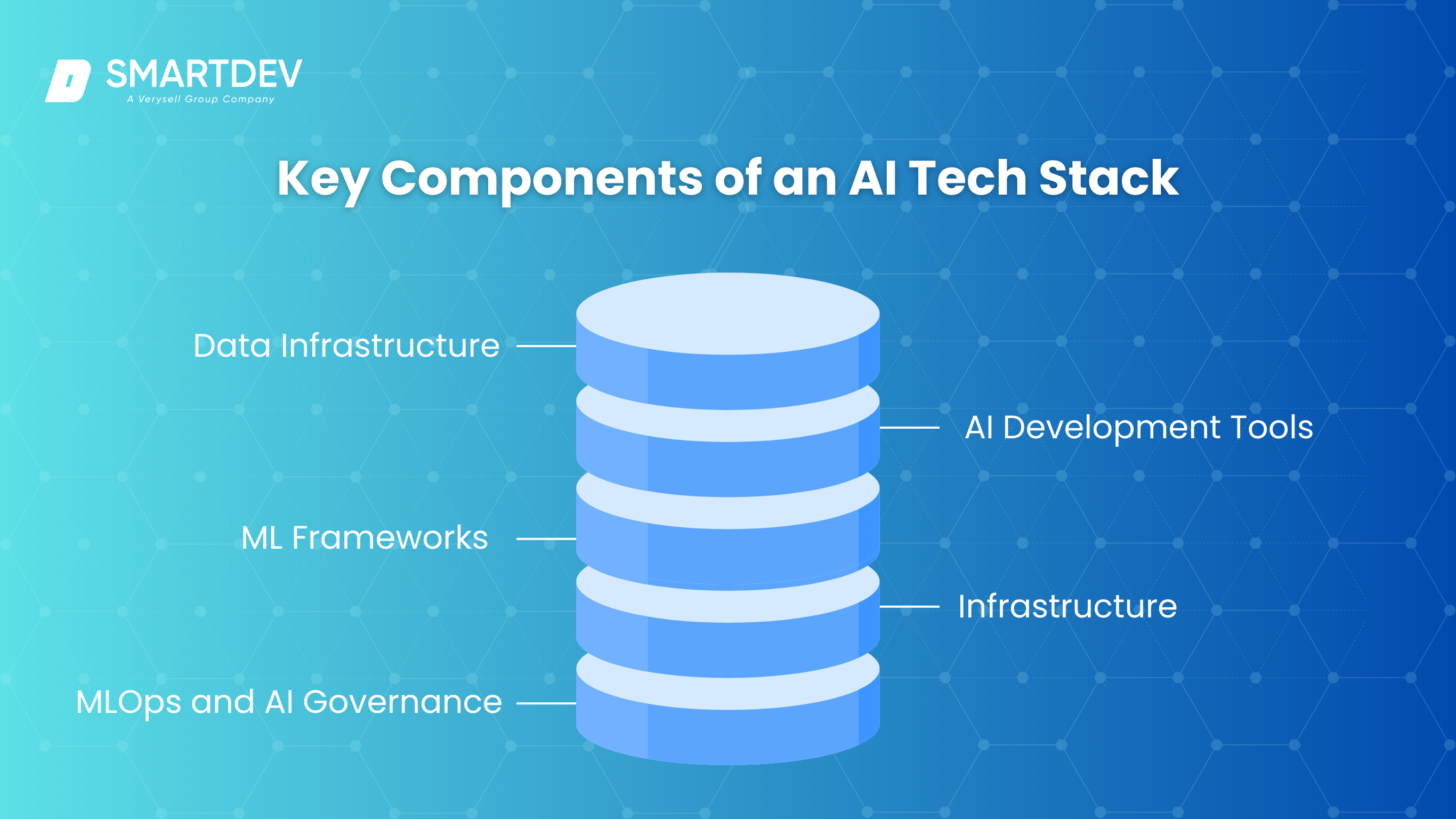

2. Key Components of an AI Tech Stack

As mentioned above, building an AI solution is like crafting a complex machine – it requires a series of carefully selected components working seamlessly together. Let’s delve into the key components of an AI tech stack and their critical roles.

2.1. Data Infrastructure

Data forms the backbone of any AI application. To extract meaningful insights, an AI tech stack requires robust infrastructure for managing data effectively:

Data Sources – AI systems rely on diverse data inputs, such as:

- Internal Data: Proprietary data generated within an organization, like customer transaction records or operational logs.

- External Data: Third-party data sources like market trends or competitor analysis.

- Open Data: Freely available datasets, often used for benchmarking or initial model training.

Data Storage Solutions

- Databases: Structured storage solutions ideal for transactional or relational data.

- Data Lakes: Scalable repositories for raw, unstructured data, offering flexibility for big data processing.

- Data Warehouses: Optimized for analytics, enabling faster queries on structured data.

Data Processing

- ETL (Extract, Transform, Load): Processes that prepare data for analysis by extracting it from sources, transforming it into usable formats, and loading it into storage systems.

- Streaming: Real-time data processing for applications that require immediate insights.

- Batch Processing: Processing large volumes of data at regular intervals, suitable for periodic analysis.
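The three processing styles above can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions, not a production pipeline: the CSV content, table name, and column names are hypothetical, and an in-memory SQLite database stands in for whatever database or warehouse you actually use.

```python
import csv
import io
import sqlite3
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical raw export from an internal data source.
RAW_CSV = """customer_id,amount,currency
c1,12.50,usd
c2,,usd
c1,7.25,USD
c3,40.00,usd
"""

Record = Tuple[str, float, str]


def extract(text: str) -> Iterator[Dict[str, str]]:
    """Extract: read raw rows from a CSV source."""
    yield from csv.DictReader(io.StringIO(text))


def transform(rows: Iterable[Dict[str, str]]) -> Iterator[Record]:
    """Transform: drop rows without an amount and normalize types and casing."""
    for row in rows:
        if not row["amount"]:
            continue  # incomplete record; a real pipeline might quarantine it instead
        yield row["customer_id"], float(row["amount"]), row["currency"].upper()


def load(records: Iterable[Record], conn: sqlite3.Connection) -> None:
    """Load: write cleaned records into a storage table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS transactions "
        "(customer_id TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO transactions VALUES (?, ?, ?)", records)
    conn.commit()


def batch_totals(conn: sqlite3.Connection) -> Dict[str, float]:
    """Batch processing: aggregate everything stored so far in one query."""
    rows = conn.execute(
        "SELECT customer_id, SUM(amount) FROM transactions GROUP BY customer_id"
    )
    return dict(rows.fetchall())


def streaming_totals(records: Iterable[Record]) -> Iterator[Tuple[str, float]]:
    """Streaming: update a running total as each record arrives."""
    totals: Dict[str, float] = {}
    for customer_id, amount, _currency in records:
        totals[customer_id] = totals.get(customer_id, 0.0) + amount
        yield customer_id, totals[customer_id]


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(batch_totals(conn))
for update in streaming_totals(transform(extract(RAW_CSV))):
    print(update)
```

The batch function answers a question about all stored data at once, while the streaming generator emits an updated result after every record, which is the trade-off between the last two bullets.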

2.2. Machine Learning Frameworks

Machine learning frameworks are the engines that drive AI development. They simplify complex processes, enabling developers to focus on solving problems rather than reinventing the wheel. These frameworks are integral to AI tech stacks because they offer pre-built tools, extensive libraries, and scalability that empower teams to create, train, and deploy models efficiently.

The three frameworks discussed here—TensorFlow, PyTorch, and Scikit-learn—are noteworthy because they cater to different needs. TensorFlow excels in scalability and production readiness, PyTorch is highly favored for its research-friendly features, and Scikit-learn provides simplicity for traditional machine learning tasks. This diversity ensures that teams of all expertise levels and project types can find a framework that suits their specific requirements.

| Framework | Pros | Cons | Best Use Cases |
|---|---|---|---|
| TensorFlow | Flexible, scalable, extensive community support | Steep learning curve | Large-scale deep learning and production models |
| PyTorch | Intuitive syntax, dynamic computation graph, excellent for research | Historically less mature tooling for large-scale production | Research, prototyping, and dynamic models |
| Scikit-learn | Simple, great for classical ML, excellent documentation | Limited support for deep learning; little GPU or distributed training support | Small to medium ML projects, traditional ML tasks |

Criteria for Selection

- Project Size and Complexity: Select frameworks that align with the scale and requirements of your project.

- Community Support and Documentation: Strong community backing ensures better resources and troubleshooting options.

- Team Expertise: Choose a framework that matches the proficiency of your development team.
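One common way to turn these criteria into a comparison is a weighted decision matrix. In the sketch below, both the weights and the 1-5 scores are hypothetical values for one imagined project, not a general recommendation; replace them with your own assessment.

```python
from typing import Dict, List, Tuple

# Hypothetical weights: how much each selection criterion matters (sums to 1).
WEIGHTS: Dict[str, float] = {
    "project_fit": 0.5,     # size and complexity of the project
    "community": 0.2,       # community support and documentation
    "team_expertise": 0.3,  # how well the team already knows the framework
}

# Hypothetical 1-5 scores for one imagined project.
SCORES: Dict[str, Dict[str, int]] = {
    "TensorFlow": {"project_fit": 5, "community": 5, "team_expertise": 2},
    "PyTorch": {"project_fit": 4, "community": 5, "team_expertise": 4},
    "Scikit-learn": {"project_fit": 2, "community": 4, "team_expertise": 5},
}


def weighted_score(scores: Dict[str, int], weights: Dict[str, float]) -> float:
    """Sum of score * weight over every criterion."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def rank_frameworks(
    candidates: Dict[str, Dict[str, int]], weights: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Return (framework, score) pairs from best to worst."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights should sum to 1")
    scored = [(name, weighted_score(s, weights)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


for name, score in rank_frameworks(SCORES, WEIGHTS):
    print(f"{name}: {score:.2f}")
```

Making the weights explicit is the main benefit: the team has to agree on what matters before arguing about which framework wins.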

2.3. AI Development Tools

AI development tools are indispensable for building, testing, and collaborating on AI projects. These tools streamline workflows and enhance productivity:

- Integrated Development Environments (IDEs): Popular IDEs like Jupyter Notebook and PyCharm simplify code writing and debugging for AI projects.

- No-Code and Low-Code Platforms: Tools like DataRobot and H2O.ai enable non-technical users to build AI models, democratizing access to AI capabilities.

- Experiment Tracking Platforms: Solutions like MLflow and Weights & Biases help teams manage and track experiments, ensuring reproducibility and efficient collaboration.
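To make experiment tracking concrete, the sketch below records each run's parameters and metrics so runs can be compared and reproduced later. It is a hypothetical, standard-library stand-in, not the MLflow or Weights & Biases API; MLflow, for example, offers functions such as `mlflow.log_param` and `mlflow.log_metric` for this purpose, along with a UI and artifact storage.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path
from typing import Any, Dict, List, Optional


class ExperimentTracker:
    """Hypothetical minimal tracker that stores one JSON record per run."""

    def __init__(self, root: str) -> None:
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def log_run(self, params: Dict[str, Any], metrics: Dict[str, float]) -> str:
        """Persist the inputs (params) and outcomes (metrics) of one run."""
        run_id = uuid.uuid4().hex
        record = {
            "run_id": run_id,
            "timestamp": time.time(),
            "params": params,
            "metrics": metrics,
        }
        (self.root / f"{run_id}.json").write_text(json.dumps(record, sort_keys=True))
        return run_id

    def runs(self) -> List[Dict[str, Any]]:
        """Load every logged run so results can be compared or reproduced."""
        return [json.loads(p.read_text()) for p in sorted(self.root.glob("*.json"))]

    def best_run(self, metric: str, higher_is_better: bool = True) -> Optional[Dict[str, Any]]:
        """Return the run with the best value for `metric`, or None if no run logged it."""
        candidates = [r for r in self.runs() if metric in r["metrics"]]
        if not candidates:
            return None
        choose = max if higher_is_better else min
        return choose(candidates, key=lambda r: r["metrics"][metric])


tracker = ExperimentTracker(tempfile.mkdtemp())
tracker.log_run({"learning_rate": 0.1}, {"val_accuracy": 0.81})
tracker.log_run({"learning_rate": 0.01}, {"val_accuracy": 0.86})
best = tracker.best_run("val_accuracy")
print(best["params"])  # {'learning_rate': 0.01}
```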

AI development tools accelerate innovation by reducing the technical barriers associated with creating AI systems.

2.4. Deployment and Runtime Infrastructure

The deployment and runtime infrastructure enables AI models to operate effectively in production environments. Key components include:

- Cloud Providers: Services like AWS, Azure, and GCP offer scalable compute and storage solutions tailored for AI.

- Containerization: Tools like Docker and Kubernetes enable consistent deployment across environments, ensuring seamless scalability.

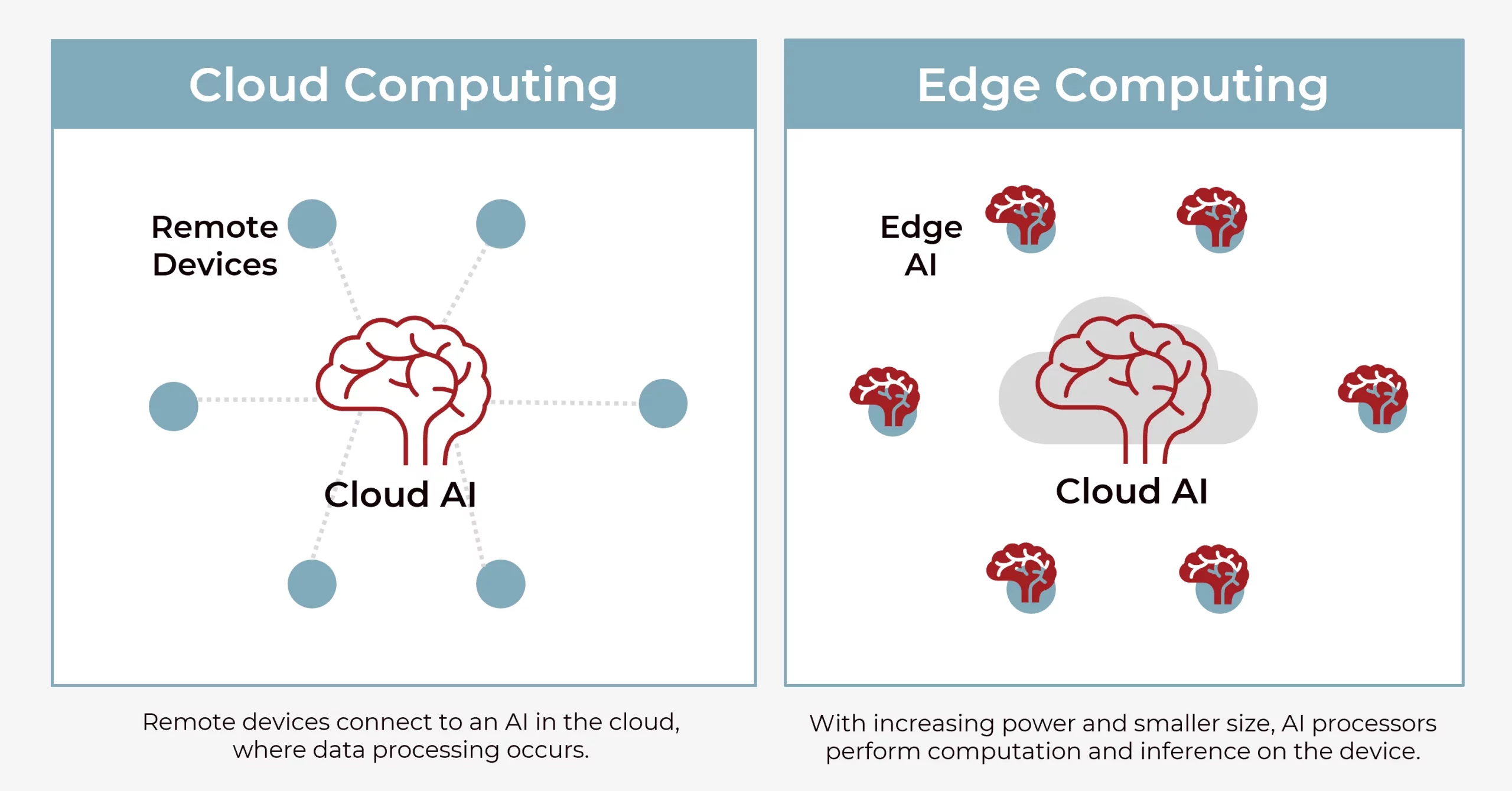

- Edge AI Deployment: Deploying models on edge devices reduces latency and enhances real-time decision-making capabilities.

Effective deployment infrastructure ensures AI models deliver reliable performance, whether in the cloud, on-premises, or at the edge.
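As a sketch of what typically runs inside such a container, the hypothetical service below wraps a model behind a small HTTP endpoint using Python's standard-library WSGI interface. The linear "model", the `/predict` route, and the JSON shape are assumptions for illustration; a Docker image would package this file with its dependencies, and Kubernetes could then run and scale replicas of it.

```python
import io
import json
from typing import Callable, Dict, List, Tuple


def predict(features: List[float]) -> float:
    """Hypothetical stand-in for a trained model: a fixed linear scorer."""
    weights = [0.4, -0.2, 0.1]
    bias = 0.05
    if len(features) != len(weights):
        raise ValueError(f"expected {len(weights)} features")
    return bias + sum(w * x for w, x in zip(weights, features))


def _respond(start_response: Callable, status: str, payload: Dict) -> List[bytes]:
    """Serialize `payload` as JSON and send it with the given HTTP status."""
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def app(environ: Dict, start_response: Callable) -> List[bytes]:
    """WSGI app exposing POST /predict with a JSON body {"features": [...]}."""
    if environ.get("REQUEST_METHOD") != "POST" or environ.get("PATH_INFO") != "/predict":
        return _respond(start_response, "404 Not Found", {"error": "not found"})
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length))
        score = predict([float(x) for x in payload["features"]])
    except (ValueError, KeyError, TypeError) as exc:  # bad JSON, missing key, wrong shape
        return _respond(start_response, "400 Bad Request", {"error": str(exc)})
    return _respond(start_response, "200 OK", {"score": score})


def call_in_process(method: str, path: str, body: bytes = b"") -> Tuple[str, Dict]:
    """Invoke the app without opening a network socket (useful for tests)."""
    statuses: List[str] = []
    environ = {
        "REQUEST_METHOD": method,
        "PATH_INFO": path,
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.input": io.BytesIO(body),
    }
    chunks = app(environ, lambda status, headers: statuses.append(status))
    return statuses[0], json.loads(b"".join(chunks))


print(call_in_process("POST", "/predict", b'{"features": [1, 2, 3]}'))
```

For a quick local HTTP test, `wsgiref.simple_server.make_server("0.0.0.0", 8000, app).serve_forever()` would serve the same app; production deployments usually run a dedicated WSGI server instead.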

2.5. MLOps and AI Governance

MLOps and AI governance are critical for managing the lifecycle of AI models and ensuring ethical, compliant use:

- Model Training Pipelines: Automating model training pipelines improves efficiency and reduces errors during updates.

- Version Control for Models: Tools like DVC (Data Version Control) ensure reproducibility and track changes to models over time.

- Bias Detection, Auditing, and Compliance: Frameworks like IBM AI Fairness 360 help identify and mitigate biases, ensuring ethical AI practices.
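IBM AI Fairness 360 reports group-fairness metrics such as statistical parity difference and disparate impact. The sketch below computes those two definitions directly in plain Python on hypothetical approval decisions; it does not use AIF360's API, and a real audit would also examine error-rate metrics and how the groups were defined.

```python
from typing import Hashable, Sequence


def selection_rate(preds: Sequence[int], groups: Sequence[Hashable], group: Hashable) -> float:
    """Fraction of members of `group` that received the favorable outcome (1)."""
    outcomes = [p for p, g in zip(preds, groups) if g == group]
    if not outcomes:
        raise ValueError(f"no members of group {group!r}")
    return sum(outcomes) / len(outcomes)


def statistical_parity_difference(preds, groups, unprivileged, privileged) -> float:
    """P(favorable | unprivileged) - P(favorable | privileged); 0 means parity."""
    return selection_rate(preds, groups, unprivileged) - selection_rate(preds, groups, privileged)


def disparate_impact(preds, groups, unprivileged, privileged) -> float:
    """P(favorable | unprivileged) / P(favorable | privileged); 1 means parity."""
    privileged_rate = selection_rate(preds, groups, privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no favorable outcomes")
    return selection_rate(preds, groups, unprivileged) / privileged_rate


# Hypothetical model decisions (1 = approved) for applicants in groups "a" and "b".
preds = [1, 0, 1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

spd = statistical_parity_difference(preds, groups, unprivileged="b", privileged="a")
di = disparate_impact(preds, groups, unprivileged="b", privileged="a")
print(f"SPD={spd:.2f}, DI={di:.2f}")  # SPD=-0.20, DI=0.67
if di < 0.8:  # the "four-fifths" rule of thumb from US employment guidance
    print("Potential adverse impact: review the model and data")
```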

MLOps and governance frameworks provide the backbone for operationalizing AI at scale while maintaining transparency and compliance.

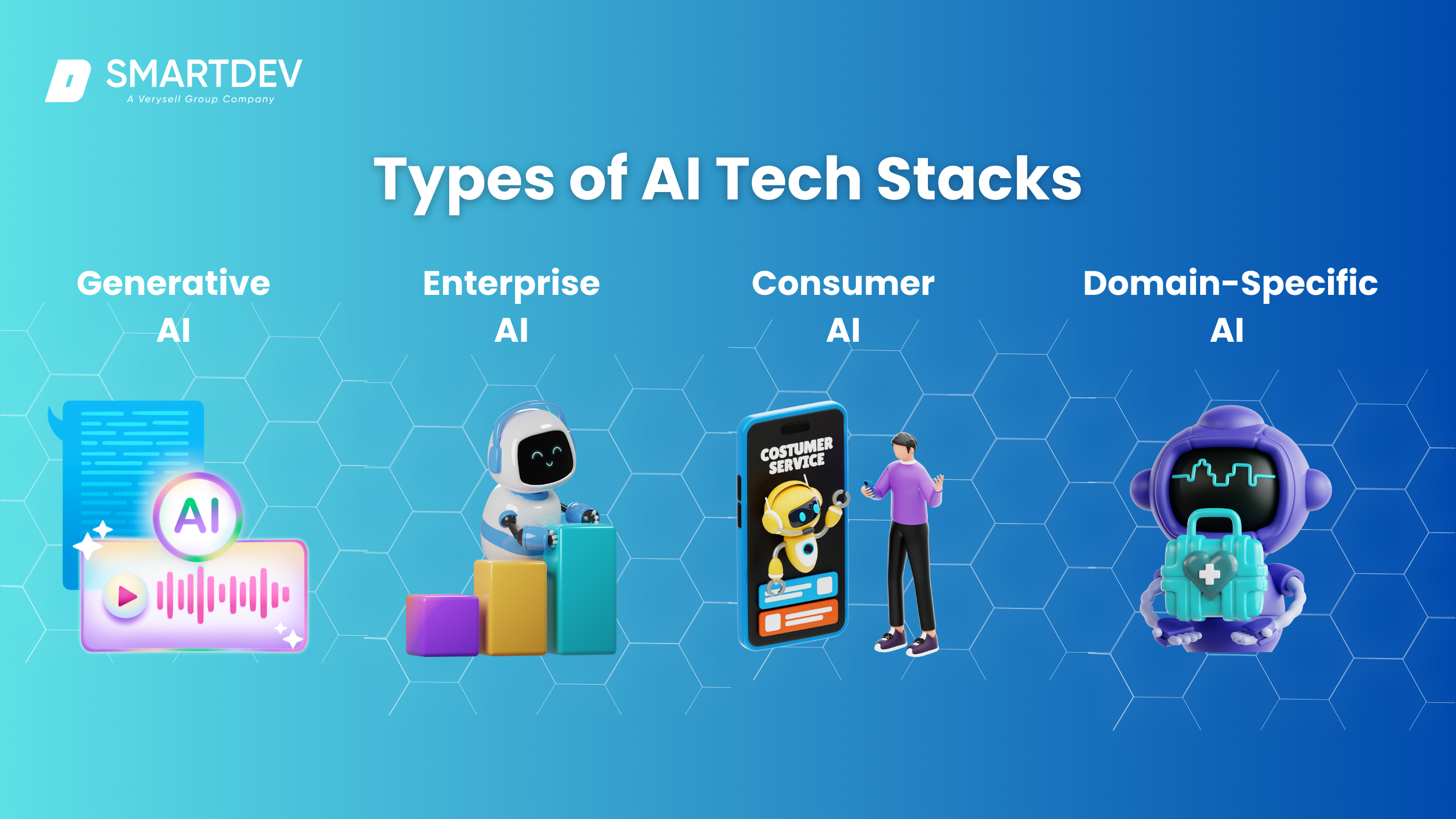

3. Types of AI Tech Stacks

Not all AI tech stacks are created equal. Different use cases and objectives require tailored approaches. Let’s explore the main types of AI tech stacks and what makes them unique.

3.1. Generative AI Tech Stack

Generative AI tech stacks focus on creating models capable of producing new content, such as text, images, or music. These stacks are pivotal for enabling innovation in areas like creative industries, automation, and content generation.

One of the foundational components is Transformer Models. Decoder-style models such as GPT (Generative Pre-trained Transformer) generate human-like text, while encoder models such as BERT (Bidirectional Encoder Representations from Transformers) focus on understanding it, for example in classification or search. Together, these architectures drive advancements in natural language processing and generative tasks.
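The core operation inside transformer models such as GPT and BERT is scaled dot-product attention, `softmax(Q·K^T / sqrt(d_k))·V`, introduced in the 2017 paper "Attention Is All You Need". The pure-Python sketch below shows a single head without masking or learned projections, purely for readability; real implementations use batched tensor operations in frameworks like PyTorch or TensorFlow.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Compute softmax(Q K^T / sqrt(d_k)) V for one attention head, without masking."""
    d_k = len(k[0])
    outputs, weights = [], []
    for query in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(query, key)) / math.sqrt(d_k) for key in k]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(wj * value[i] for wj, value in zip(w, v)) for i in range(len(v[0]))])
    return outputs, weights


# Toy example: 2 query tokens attending over 3 key/value tokens (d_k = 2).
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
out, attn = scaled_dot_product_attention(Q, K, V)
for row in attn:
    print([round(x, 3) for x in row])
```

Each row of `attn` sums to 1, so every output token is a weighted mix of the value vectors, with more weight on keys that resemble the query.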

Another critical element is Datasets. Generative AI depends heavily on diverse, high-quality datasets. Properly curated datasets (plus labeled data for fine-tuning) provide the context and depth needed to train sophisticated models. For example, OpenAI’s GPT-3 was trained using a massive and varied dataset, enabling its versatility across tasks.

Development Tools further simplify the process of building and deploying generative AI solutions. Platforms like Hugging Face Transformers and OpenAI API provide pre-trained models and APIs, allowing developers to fine-tune or integrate generative capabilities efficiently. These tools lower barriers to entry and foster rapid prototyping.

3.2. Enterprise AI Tech Stack

Enterprise AI tech stacks are designed to meet the unique needs of large organizations. These stacks prioritize scalability, customization, and integration with existing systems. Key characteristics include:

- Tailored Solutions: Enterprises often require bespoke AI solutions that align with their specific workflows and objectives. Custom model development and integration are common.

- Balancing Scalability and Customization: Enterprise stacks must handle large-scale operations while remaining adaptable to evolving business needs.

- MLOps Integration: Ensuring robust model deployment, monitoring, and retraining capabilities is essential for long-term success.

This type of stack is well-suited for industries like finance, manufacturing, and telecommunications.

3.3. Consumer AI Tech Stack

Consumer AI tech stacks are centered on delivering intuitive and impactful AI-driven experiences directly to end-users. These stacks are designed to prioritize usability, responsiveness, and performance, making them essential for applications that interface directly with consumers.

A major component of consumer AI is the focus on Applications such as chatbots, virtual assistants, and recommendation engines. These tools enhance user engagement and streamline experiences across various industries, from e-commerce to entertainment. Virtual assistants like Siri or Alexa and recommendation engines like those on Netflix or Amazon exemplify how consumer AI integrates seamlessly into daily life.

Lightweight Models are another crucial feature, tailored for speed and efficiency. Unlike enterprise-grade systems, consumer AI models must deliver real-time performance with minimal latency, ensuring users have a smooth and interactive experience. This requirement makes optimization and model compression vital in consumer AI tech stacks.
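One common compression technique behind such lightweight models is post-training quantization. The sketch below shows symmetric, per-tensor int8 quantization of a hypothetical weight list in plain Python; toolkits such as TensorFlow Lite provide their own, more sophisticated quantization workflows, and this code is not their API.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate reconstruction of the original float weights."""
    return [value * scale for value in q]


weights = [0.52, -1.27, 0.003, 0.9]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [52, -127, 0, 90]
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"scale={scale:.4f}, max error={max_error:.4f}")
# Storage: 4 bytes per float32 weight vs 1 byte per int8 weight (about 4x smaller).
```

The rounding error per weight is at most half the scale, which is why quantization usually costs little accuracy while cutting memory and often speeding up inference on supported hardware.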

Lastly, Cloud and Edge Integration play a significant role in ensuring reliability and scalability. By leveraging cloud resources, consumer AI applications can process complex tasks, while edge devices enable immediate, localized responses. This hybrid approach balances power and speed, catering to global and local needs simultaneously.

3.4. Domain-Specific AI Tech Stacks

Some industries require highly specialized AI stacks tailored to their unique challenges. Examples include:

- Healthcare: AI stacks in healthcare emphasize data privacy and regulatory compliance while enabling applications like diagnostic tools and patient management systems.

- Finance: Fraud detection and algorithmic trading rely on AI stacks that prioritize real-time processing and robust security.

- Retail: Personalization engines and inventory optimization solutions are powered by AI stacks designed for scalability and customer insights.
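Real-time fraud detection needs scores computed as each transaction arrives, without reprocessing history. As a deliberately simple sketch of that streaming pattern (not a production fraud model), the code below keeps a running mean and standard deviation with Welford's online algorithm and flags transactions whose z-score exceeds a hypothetical threshold of 3.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class RunningStats:
    """Welford's online algorithm: mean and variance in O(1) memory."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the running mean

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        """Sample standard deviation (0.0 until there are two observations)."""
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


def flag_transactions(amounts: Iterable[float], threshold: float = 3.0,
                      warmup: int = 5) -> List[bool]:
    """Flag amounts more than `threshold` standard deviations from the history so far."""
    stats = RunningStats()
    flags = []
    for amount in amounts:
        # Score against history *before* updating, so an outlier cannot mask itself.
        std = stats.std
        flags.append(stats.count >= warmup and std > 0
                     and abs(amount - stats.mean) / std > threshold)
        stats.update(amount)
    return flags


# Hypothetical card transactions arriving one at a time.
print(flag_transactions([20, 22, 19, 21, 20, 23, 500, 21]))
# [False, False, False, False, False, False, True, False]
```

A real system would keep statistics per customer or merchant and combine many signals in a trained model, but the constant-memory update is what makes per-event scoring feasible.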

Domain-specific stacks showcase the versatility of AI, enabling transformative solutions across diverse fields.

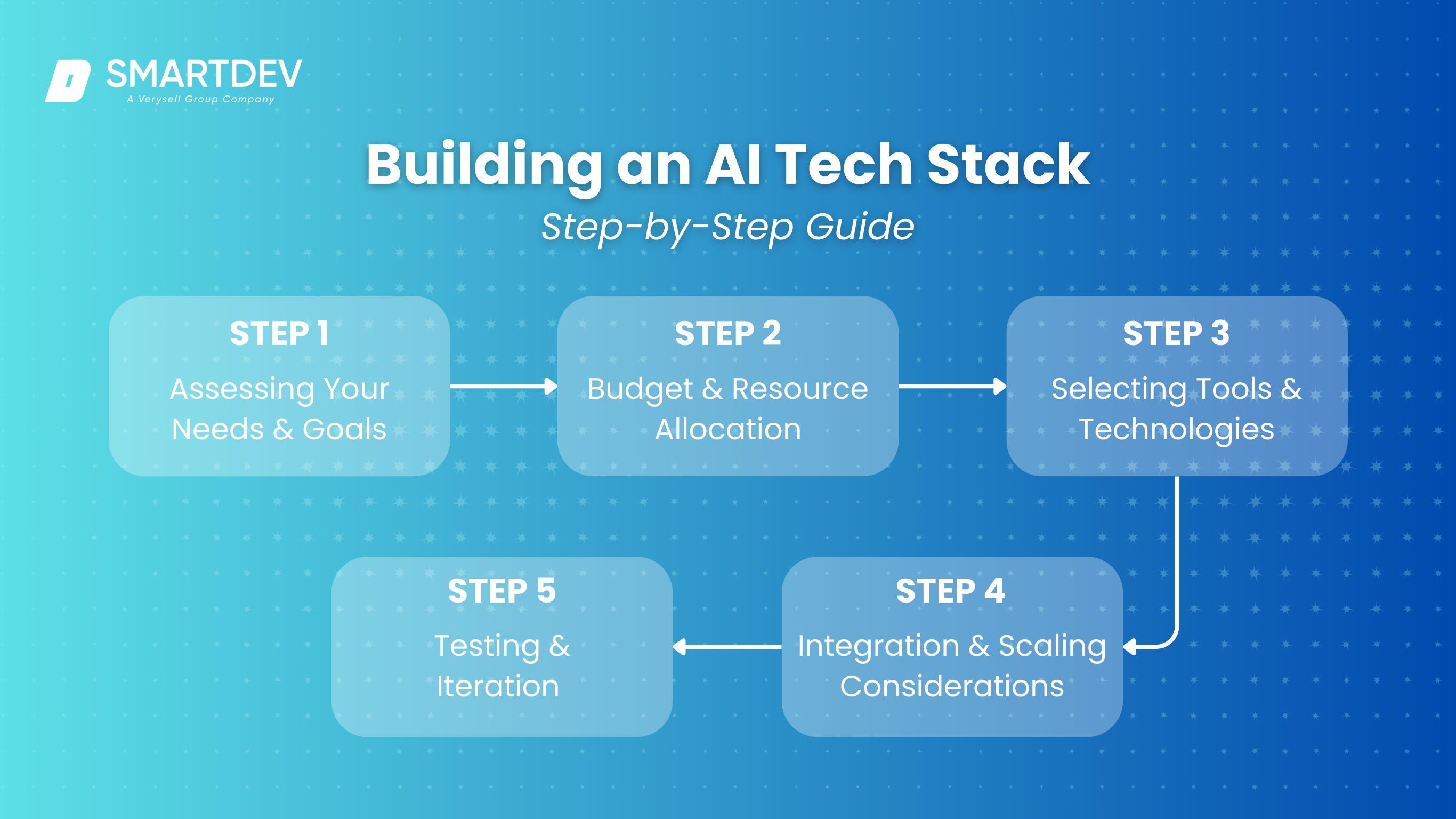

4. Building an AI Tech Stack: Step-by-Step Guide

Creating a successful AI tech stack requires careful planning and execution. Whether you’re starting from scratch or optimizing an existing stack, following a step-by-step approach ensures your stack aligns with your goals and scales effectively over time.

Step 1: Assessing Your Needs and Goals

Begin by defining what you want to achieve with AI. Are you developing a customer-facing chatbot, optimizing internal processes, or creating predictive analytics models? Clear goals will guide your decisions at every stage of the stack-building process.

Evaluate the data you have and the data you’ll need. Identify the challenges you want to solve and the value AI can bring to your organization. This initial assessment lays the foundation for selecting the right tools and strategies.

Step 2: Budget and Resource Allocation

Your budget will heavily influence the components you can include in your AI tech stack. Prioritize essential tools and allocate resources for potential growth. Consider costs for:

- Hardware: Servers, GPUs, or cloud infrastructure.

- Software: Licenses for frameworks, libraries, or development tools.

- Talent: Hiring skilled personnel or upskilling existing teams.

Proper resource allocation ensures that your stack is both cost-effective and capable of scaling as your needs evolve.


Step 3: Selecting Tools and Technologies

Choose tools and technologies that align with your goals, expertise, and budget. Focus on components that complement each other and are widely supported in the AI community. Evaluate:

- Frameworks: TensorFlow, PyTorch, or others based on your use case.

- Development Tools: IDEs, no-code platforms, and experiment tracking solutions.

- Deployment Infrastructure: Cloud providers, containerization tools, or edge solutions.

Research each option’s strengths and limitations to ensure a cohesive and effective stack.

Step 4: Integration and Scalability Considerations

Think long-term when building your stack. Will it integrate smoothly with existing systems? Can it scale to handle increased data volumes or user demands? Prioritize technologies with robust APIs and modular designs that enable seamless integration and adaptability.

Ensure compatibility between different components of your stack to avoid bottlenecks or inefficiencies during deployment and scaling.
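One way to get this kind of modular design is to have components depend on small interfaces rather than on each other's concrete classes. The Python sketch below uses `typing.Protocol` for that; every class name is hypothetical, and in a real stack the implementations might wrap a feature store, a model server, or a cloud API.

```python
from typing import Dict, Protocol, Sequence


class FeatureStore(Protocol):
    """Any component that can return features for an entity."""
    def get_features(self, entity_id: str) -> Sequence[float]: ...


class Model(Protocol):
    """Any component that can turn features into a score."""
    def predict(self, features: Sequence[float]) -> float: ...


class InMemoryFeatureStore:
    """Hypothetical store backed by a dict; could be swapped for a database client."""
    def __init__(self, table: Dict[str, Sequence[float]]) -> None:
        self.table = table

    def get_features(self, entity_id: str) -> Sequence[float]:
        return self.table[entity_id]


class ThresholdModel:
    """Hypothetical rule-based model: 1.0 if the feature sum exceeds a threshold."""
    def __init__(self, threshold: float) -> None:
        self.threshold = threshold

    def predict(self, features: Sequence[float]) -> float:
        return 1.0 if sum(features) > self.threshold else 0.0


class AverageModel:
    """Hypothetical alternative model with the same interface."""
    def predict(self, features: Sequence[float]) -> float:
        return sum(features) / len(features)


class ScoringService:
    """Depends only on the interfaces, so either component can be replaced."""
    def __init__(self, store: FeatureStore, model: Model) -> None:
        self.store = store
        self.model = model

    def score(self, entity_id: str) -> float:
        return self.model.predict(self.store.get_features(entity_id))


store = InMemoryFeatureStore({"user-1": [0.2, 0.9]})
print(ScoringService(store, ThresholdModel(threshold=1.0)).score("user-1"))  # 1.0
print(ScoringService(store, AverageModel()).score("user-1"))
```

Swapping `ThresholdModel` for `AverageModel` requires no change to `ScoringService`, which is the property that keeps integration work contained as the stack evolves.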

Step 5: Testing and Iteration

Building an AI tech stack is an iterative process. Regularly test your stack’s components to identify and resolve issues early. Conduct:

- Performance Testing: Evaluate speed, accuracy, and reliability.

- Scalability Testing: Ensure the stack can handle increased workloads without degradation.

- User Feedback: Incorporate input from end-users or stakeholders to refine functionalities.
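Performance and scalability tests usually report latency percentiles rather than averages, because tail latency is what users notice under load. The sketch below times a hypothetical `toy_model` function and reports nearest-rank p50/p95 latency; the budget value is an assumption, and a real test would call your deployed model under realistic load.

```python
import math
import time
from typing import Callable, Dict, Iterable, List


def measure_latency_ms(fn: Callable, inputs: Iterable, repeats: int = 3) -> List[float]:
    """Time each call to `fn` with a high-resolution clock, in milliseconds."""
    samples = []
    for x in inputs:
        for _ in range(repeats):
            start = time.perf_counter()
            fn(x)
            samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


def latency_report(samples: List[float]) -> Dict[str, float]:
    return {"p50": percentile(samples, 50), "p95": percentile(samples, 95), "max": max(samples)}


def toy_model(x: int) -> int:
    """Hypothetical stand-in for model inference."""
    return sum(i * x for i in range(10_000))


report = latency_report(measure_latency_ms(toy_model, range(20)))
print({k: round(v, 3) for k, v in report.items()})

# Hypothetical latency budget; in CI this check could fail the build when exceeded.
P95_BUDGET_MS = 50.0
print("within budget" if report["p95"] <= P95_BUDGET_MS else "over budget")
```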

Continuous iteration ensures that your stack remains effective, resilient, and aligned with your evolving goals.

5. Challenges in Managing an AI Tech Stack

Building and maintaining an AI tech stack is not without its challenges. Organizations must navigate various hurdles to ensure their stack performs effectively and sustainably. Below are some of the key challenges faced when managing an AI tech stack.

5.1. Complexity and Interoperability

AI tech stacks often consist of multiple layers, including data infrastructure, machine learning frameworks, development tools, and deployment platforms. Ensuring that these components work together seamlessly can be a significant challenge. Integration issues, compatibility constraints, and conflicting updates between tools can create inefficiencies and delays.

To address this, organizations need a well-thought-out architecture and regular updates to ensure interoperability. Using modular tools with robust APIs can also help mitigate complexity.

5.2. Managing Large-Scale Data

The volume and variety of data needed for AI applications can be overwhelming. Storing, processing, and analyzing large-scale datasets requires robust infrastructure and specialized tools. Additionally, ensuring data quality and consistency across diverse sources can pose significant challenges.

Organizations must invest in scalable data solutions such as cloud-based data lakes and warehouses. Implementing rigorous data governance practices and using automated tools for data cleaning and preprocessing can also ease the burden of managing large-scale data.
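Automated data-quality checks are one of the cheapest of those tools to start with. The sketch below validates a batch of records against a hypothetical schema, checking types, null rates, and duplicate keys before the data reaches training; dedicated open-source validation libraries offer much richer checks, but the idea is the same.

```python
from collections import Counter
from typing import Dict, List, Optional


def validate(
    rows: List[Dict[str, object]],
    schema: Dict[str, type],
    key: Optional[str] = None,
    max_null_fraction: float = 0.05,
) -> List[str]:
    """Return human-readable data-quality issues; an empty list means the batch passed."""
    issues: List[str] = []
    null_counts = {column: 0 for column in schema}

    for i, row in enumerate(rows):
        for column, expected in schema.items():
            value = row.get(column)
            if value is None:
                null_counts[column] += 1  # missing or explicit null
            elif type(value) is not expected:  # strict: e.g. bool is not accepted as int
                issues.append(f"row {i}: {column}={value!r} is not {expected.__name__}")

    for column, n in null_counts.items():
        if rows and n / len(rows) > max_null_fraction:
            issues.append(f"column {column}: {n}/{len(rows)} values are null")

    if key is not None:
        counts = Counter(row.get(key) for row in rows if row.get(key) is not None)
        duplicates = sorted((k for k, c in counts.items() if c > 1), key=str)
        if duplicates:
            issues.append(f"duplicate {key} values: {duplicates}")
    return issues


# Hypothetical schema and batch of customer records.
SCHEMA = {"customer_id": str, "age": int, "country": str}
batch = [
    {"customer_id": "c1", "age": 34, "country": "DE"},
    {"customer_id": "c2", "age": "41", "country": "FR"},
    {"customer_id": "c1", "age": 29, "country": None},
]
for issue in validate(batch, SCHEMA, key="customer_id"):
    print(issue)
```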

5.3. Ethical and Regulatory Concerns

AI systems often interact with sensitive data, raising ethical and regulatory concerns. Ensuring compliance with data protection laws such as GDPR or CCPA is critical, as is addressing issues related to bias, transparency, and accountability in AI models.

To navigate these challenges, organizations must implement AI governance frameworks, conduct regular audits for fairness and compliance, and adopt ethical AI practices. Tools like IBM AI Fairness 360 can help detect and mitigate biases.
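On the data-protection side, one widely used technical measure is pseudonymization: replacing direct identifiers with consistent tokens before data enters training pipelines. The sketch below uses HMAC-SHA256 from Python's standard library; the field names and key are hypothetical. Note that GDPR still treats pseudonymized data as personal data, so this reduces risk rather than removing compliance obligations.

```python
import hashlib
import hmac
from typing import Dict, Iterable, Optional


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): the same input and key always give the same token,
    and without the key a guessed identifier cannot be checked against tokens."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: Dict[str, Optional[str]], pii_fields: Iterable[str],
                        secret_key: bytes) -> Dict[str, Optional[str]]:
    """Replace the listed PII fields with tokens; leave other fields untouched."""
    fields = set(pii_fields)
    return {
        k: pseudonymize(v, secret_key) if k in fields and v is not None else v
        for k, v in record.items()
    }


# Hypothetical key: in practice, load it from a secrets manager, never from source code.
KEY = b"example-key-loaded-from-a-vault"
record = {"email": "jane@example.com", "plan": "premium", "country": "FR"}
safe = pseudonymize_record(record, ["email"], KEY)
print(safe["plan"], safe["email"][:12], "...")
```

Because tokens are consistent, pseudonymized datasets can still be joined on the tokenized field, while the key is stored and access-controlled separately from the data.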

5.4. Talent Gap in AI Development

Building and managing an AI tech stack requires a team with diverse skills, including expertise in data science, machine learning, software engineering, and system integration. However, finding professionals who possess a deep understanding of these areas remains a challenge. The talent gap in AI development is exacerbated by the rapid pace of technological advancement, which outstrips the availability of adequately trained individuals. Organizations must invest in upskilling their existing workforce, partner with educational institutions, or leverage external consultants to bridge this gap. By fostering a culture of continuous learning, businesses can address this challenge and build resilient teams capable of managing complex AI tech stacks.

6. Modern Trends in AI Tech Stacks

The field of AI is dynamic, and its technology stacks are constantly evolving to meet new challenges and opportunities. Here are some of the key trends shaping the landscape of AI tech stacks.

Trend No.1: Rise of Generative AI in Tech Stacks

Generative AI is rapidly gaining prominence as a transformative force in technology stacks. Tools like OpenAI’s GPT models and DALL·E are clear examples, driving advancements in content creation, personalized marketing, and even software development. For instance, GitHub Copilot, originally powered by OpenAI Codex, demonstrates how generative AI is reshaping coding practices by assisting developers in writing and debugging code.

These technologies require specialized components, such as high-performance GPUs and extensive training datasets, underscoring the need for advanced infrastructure in modern AI stacks. This trend highlights the growing importance of integrating generative capabilities into mainstream tech stacks.

Trend No.2: Democratization of AI Development

The rise of no-code platforms and AutoML tools has made AI accessible to a broader audience. Google AutoML, for example, allows users to build sophisticated machine-learning models without requiring coding expertise. Similarly, platforms like DataRobot empower smaller businesses to integrate AI solutions into their workflows.
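Under the hood, a core job AutoML automates is searching over candidate models and hyperparameters. The simplest version of that search is an exhaustive grid search, sketched below with a hypothetical scoring function standing in for real training. Managed AutoML products typically use smarter search strategies and also explore model types and preprocessing, so treat this as an illustration of the idea rather than how any particular product works.

```python
import itertools
import math
from typing import Any, Callable, Dict, List, Tuple


def grid_search(
    train_and_score: Callable[..., float],
    search_space: Dict[str, List[Any]],
) -> Tuple[Dict[str, Any], float]:
    """Try every combination of hyperparameters and keep the best-scoring one."""
    names = list(search_space)
    best_params: Dict[str, Any] = {}
    best_score = -math.inf
    for values in itertools.product(*(search_space[n] for n in names)):
        params = dict(zip(names, values))
        score = train_and_score(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def fake_validation_score(learning_rate: float, max_depth: int) -> float:
    """Hypothetical stand-in for 'train a model and return its validation score'.
    It peaks at learning_rate=0.01, max_depth=4."""
    return -(math.log10(learning_rate) + 2) ** 2 - (max_depth - 4) ** 2 / 10


space = {"learning_rate": [0.1, 0.01, 0.001], "max_depth": [2, 4, 8]}
best, score = grid_search(fake_validation_score, space)
print(best)  # {'learning_rate': 0.01, 'max_depth': 4}
```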

This trend is evident in industries such as retail and healthcare, where non-technical teams leverage AI to personalize customer experiences or improve patient care. By reducing technical barriers, these tools are significantly expanding the adoption of AI.

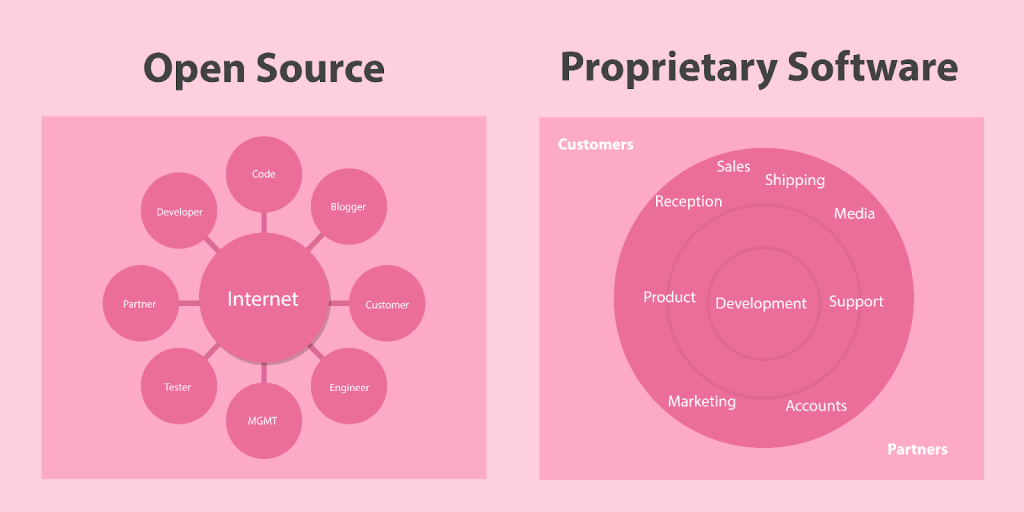

Trend No.3: Open-Source Dominance vs. Proprietary Tools

The debate between open-source and proprietary tools continues to shape AI tech stacks. For instance, PyTorch’s dynamic computation graph has made it a favorite among researchers for experimentation and innovation. On the other hand, proprietary tools like AWS SageMaker and Microsoft Azure Machine Learning offer integrated solutions with dedicated support, appealing to enterprises that prioritize streamlined deployment.

A case in point is Netflix, which uses a mix of open-source tools for innovation and proprietary systems for scalability. The choice between the two often depends on an organization’s budget, expertise, and specific requirements, and many organizations, like Netflix, adopt a hybrid approach to balance cost and functionality.

Trend No.4: Edge AI and Real-Time AI Processing

The increasing demand for real-time decision-making is driving the adoption of edge AI. By processing data directly on edge devices, such as smartphones and IoT sensors, organizations can reduce latency and enhance privacy. Technologies like NVIDIA Jetson and TensorFlow Lite are at the forefront of this trend, enabling efficient on-device AI. Modern AI tech stacks are evolving to incorporate edge computing solutions alongside cloud capabilities, ensuring a balance between performance and scalability.

A prominent example is autonomous vehicles, which rely on edge AI to process sensor data and make split-second decisions. Similarly, smart home devices like Amazon Echo handle tasks such as wake-word detection on the device itself, which speeds up responses while minimizing the data sent to the cloud.

7. AI Tech Stack for Startups vs. Enterprises

AI tech stack needs vary significantly between startups and enterprises due to differences in resources, goals, and scale. Understanding these differences is key to selecting or building the right stack.

7.1. Lean AI Tech Stacks for Startups

Startups often operate under tight budgets and need to focus on rapid development. Lean AI tech stacks prioritize simplicity and cost-effectiveness. For example:

- Tools: Free or open-source frameworks like PyTorch or Scikit-learn.

- Infrastructure: Cloud platforms offering pay-as-you-go pricing, such as AWS or Google Cloud.

- Focus Areas: Pre-trained models or AutoML tools to minimize development time.

A practical use case is a startup building a recommendation engine using open-source libraries to keep costs low while achieving functional results quickly.
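To make that use case concrete, the sketch below implements item-based collaborative filtering with cosine similarity in plain Python. The ratings are hypothetical toy data; a startup would more likely use an open-source library and sparse matrices, but the scoring logic follows the same idea.

```python
import math
from collections import defaultdict
from typing import Dict, List

# Hypothetical ratings: user -> {item: rating on a 1-5 scale}.
RATINGS: Dict[str, Dict[str, float]] = {
    "ana": {"matrix": 5, "inception": 4, "notebook": 1},
    "ben": {"matrix": 4, "inception": 5, "interstellar": 4},
    "cara": {"notebook": 5, "titanic": 4, "matrix": 1},
    "dev": {"inception": 4, "interstellar": 5},
}


def item_vectors(ratings: Dict[str, Dict[str, float]]) -> Dict[str, Dict[str, float]]:
    """Invert user->item ratings into item->user rating vectors."""
    vectors: Dict[str, Dict[str, float]] = defaultdict(dict)
    for user, items in ratings.items():
        for item, rating in items.items():
            vectors[item][user] = rating
    return vectors


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(a[u] * b[u] for u in a.keys() & b.keys())
    norm_a = math.sqrt(sum(x * x for x in a.values()))
    norm_b = math.sqrt(sum(x * x for x in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user: str, ratings: Dict[str, Dict[str, float]], k: int = 2) -> List[str]:
    """Score each unseen item by its similarity to items the user rated,
    weighted by those ratings, and return the top k."""
    vectors = item_vectors(ratings)
    seen = ratings[user]
    scores = {
        candidate: sum(cosine(vectors[candidate], vectors[item]) * r for item, r in seen.items())
        for candidate in vectors
        if candidate not in seen
    }
    return sorted(scores, key=lambda item: (-scores[item], item))[:k]


print(recommend("ana", RATINGS))  # ['interstellar', 'titanic']
```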

7.2. Comprehensive AI Architectures for Enterprises

Enterprises require comprehensive AI architectures capable of handling large-scale operations and integrating seamlessly with existing systems. Key characteristics include:

- Enterprise Tools: Frameworks like TensorFlow paired with MLOps tools such as Kubeflow.

- Infrastructure: Hybrid solutions combining on-premises and cloud resources for scalability and control.

- Customization: Bespoke model development tailored to industry-specific needs.

A global retailer using AI for inventory optimization might leverage advanced analytics platforms integrated with real-time supply chain data.

7.3. Cost vs. Performance Considerations

| Aspect | Startups | Enterprises |
|---|---|---|
| Cost Priorities | Focus on low-cost solutions for agility and rapid iteration. | Emphasize long-term ROI with robust, scalable systems. |
| Infrastructure | Cloud-based, pay-as-you-go models for affordability. | Hybrid solutions combining cloud and on-premises systems. |
| Tools | Leverage free/open-source tools like PyTorch or Scikit-learn. | Pair frameworks like TensorFlow with MLOps platforms such as Kubeflow. |
| Decision Metrics | Prioritize tools that minimize time-to-market. | Focus on tools that ensure operational efficiency and scalability. |

Both startups and enterprises must balance cost and performance. Startups prioritize low-cost solutions to stay agile, while enterprises often focus on achieving long-term ROI through robust, scalable systems. Decisions should be guided by clear ROI metrics and future scalability needs.

8. Case Studies: Successful AI Tech Stack Implementations

Learning from real-world examples not only inspires but also demonstrates how tailored AI tech stacks can achieve remarkable outcomes. Get to know some popular cases that showcase the potential of well-crafted stacks.

8.1. AI in Streaming and Entertainment: Netflix and Personalization Engines

When you open Netflix and see a list of binge-worthy recommendations tailored just for you, that’s AI in action. Netflix’s personalization engine, powered by an AI tech stack including TensorFlow and AWS, sifts through millions of data points—your watch history, preferences, and even trending shows—to provide real-time suggestions.

By employing deep learning models and cloud-based computing, Netflix improved user engagement dramatically, leading to a 20% boost in streaming hours, as highlighted in their internal analytics reports. Their success showcases the power of combining scalable frameworks with robust cloud services, as detailed in published talks and case studies by Netflix’s engineering team.

8.2. AI in Healthcare: Google DeepMind and Predictive Analytics

Google’s DeepMind is revolutionizing healthcare through predictive analytics. Its AI research uses deep learning models and proprietary data pipelines to analyze patient data and predict potential health risks, such as acute kidney injury, up to 48 hours before it occurs.

According to a 2019 study published in Nature, DeepMind’s model predicted many acute kidney injury episodes up to 48 hours in advance. Separately, its Streams app has assisted clinicians with real-time data insights. This advanced tech stack includes massive datasets, cutting-edge machine learning frameworks, and partnerships with leading hospitals like the Royal Free London NHS Foundation Trust to ensure accurate and actionable insights.

By deploying these AI solutions, DeepMind has not only enhanced patient outcomes but also significantly reduced costs for healthcare providers, as highlighted in various healthcare case studies and peer-reviewed research.

8.3. AI in Autonomous Vehicles: Tesla and Edge Computing

Tesla’s autonomous driving systems are a testament to the power of AI at the edge. Their vehicles use a tech stack featuring NVIDIA GPUs for training deep learning models and proprietary software for on-device computation.

According to Tesla’s AI Day presentations and publicly available technical deep dives, this stack enables Tesla cars to process camera and other sensor data in real time, ensuring split-second decision-making critical for autonomous navigation. By leveraging both edge and cloud technologies, Tesla has become a leading example of self-driving development that balances safety, efficiency, and adaptability.

For example, their use of Tesla Vision, an end-to-end computer vision system, demonstrates their approach of eliminating radar dependency while aiming to maintain vehicle performance in diverse environments.

9. Tools and Platforms for AI Tech Stacks

Choosing the right tools and platforms is crucial for building an effective AI tech stack.

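| Category | Tool/Platform | Key Features |
|---|---|---|
| Frameworks | TensorFlow vs. PyTorch | TensorFlow: scalable and production-ready; PyTorch: intuitive syntax and a dynamic computation graph suited to research |
| Cloud Services | AWS AI vs. Google AI | Managed AI platforms (for example, AWS SageMaker and Google Vertex AI) with scalable compute, storage, and deployment |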

9.1. Open Source vs. Proprietary Platforms

The choice between open-source and proprietary platforms plays a critical role in shaping an organization’s AI tech stack. While open-source solutions offer unmatched flexibility and cost savings, proprietary platforms provide streamlined workflows and dedicated support.

| Aspect | Open Source | Proprietary Platforms |
|---|---|---|
| Cost | Free to use with no licensing fees. Examples: TensorFlow, PyTorch. | Subscription-based or licensing fees. Examples: AWS SageMaker, Google Vertex AI. |
| Customizability | Highly customizable, allowing developers to tailor solutions to specific needs. | Limited to built-in features but often comes with advanced pre-configured workflows. |
| Support | Relies on community forums and user contributions for troubleshooting and updates. | Offers enterprise-grade support and regular updates with dedicated teams. |
| Ease of Use | Requires expertise to set up and configure effectively. | Simplifies integration and deployment with user-friendly interfaces and automation. |
| Adoption Examples | Widely adopted by companies like Netflix (TensorFlow for personalization engines). | Used by enterprises like Uber (AWS SageMaker for scalable AI deployments). |
| Best Use Cases | Ideal for startups, researchers, and developers needing flexibility and cost savings. | Best suited for large-scale enterprises needing robust, integrated solutions. |

9.2. Free vs. Paid Solutions

Free tools are ideal for experimentation and startups, while paid solutions often provide advanced features and dedicated support. For instance, Scikit-learn is widely used in open-source and budget-conscious projects, whereas enterprises might prefer Azure Machine Learning for scalability.

10. Metrics to Evaluate Your AI Tech Stack

Establishing metrics ensures that your tech stack delivers measurable value.

Model Accuracy and Performance: Evaluate how well models perform using metrics like precision, recall, and F1 scores. Regular testing ensures accuracy over time.

Scalability and Reliability: Test the stack’s ability to handle increased workloads and maintain uptime. Scalability ensures long-term adaptability.

Cost Efficiency: Track ROI by comparing operational costs against business outcomes, and favor budget-friendly tools where they meet your requirements.

Time-to-Market: Measure how quickly your team can develop and deploy AI solutions. A streamlined tech stack accelerates innovation.
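To make the accuracy and cost metrics above concrete, here is a minimal sketch in Python (standard library only) that computes precision, recall, and F1 from a binary classifier’s confusion-matrix counts, plus a simple ROI ratio. The names `ConfusionCounts`, `f1_score`, and `roi` are illustrative, and the ROI definition used here (net benefit divided by cost) is one common convention, assumed for this example. The counts and cost figures are hypothetical. In practice you would more likely call a library routine such as scikit-learn’s `precision_recall_fscore_support`.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts from a binary classifier's confusion matrix."""
    tp: int  # true positives: predicted positive, actually positive
    fp: int  # false positives: predicted positive, actually negative
    fn: int  # false negatives: predicted negative, actually positive


def precision(c: ConfusionCounts) -> float:
    """Fraction of predicted positives that are truly positive."""
    predicted_pos = c.tp + c.fp
    return c.tp / predicted_pos if predicted_pos else 0.0


def recall(c: ConfusionCounts) -> float:
    """Fraction of actual positives the model found."""
    actual_pos = c.tp + c.fn
    return c.tp / actual_pos if actual_pos else 0.0


def f1_score(c: ConfusionCounts) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0


def roi(benefit: float, cost: float) -> float:
    """Simple ROI: net benefit divided by cost (an assumed convention)."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


if __name__ == "__main__":
    # Hypothetical evaluation counts and cost figures, for illustration only.
    counts = ConfusionCounts(tp=80, fp=20, fn=40)
    print(f"precision={precision(counts):.2f}")  # precision=0.80
    print(f"recall={recall(counts):.2f}")        # recall=0.67
    print(f"f1={f1_score(counts):.2f}")          # f1=0.73
    print(f"roi={roi(benefit=150_000, cost=100_000):.2f}")  # roi=0.50
```

Because F1 is a harmonic mean, it stays low when either precision or recall is weak, which is why it is often tracked alongside the two individual metrics rather than instead of them.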

11. The Future of AI Tech Stacks

As we approach 2025, AI technology stacks are set to evolve in groundbreaking ways, driven by advancements in computational power, ethical AI practices, and global challenges. Here’s a glimpse into the emerging trends shaping the future of AI stacks:

Hyperautomation and Autonomous AI Systems

By 2025, hyperautomation will extend beyond traditional industries, transforming sectors like agriculture and public services. AI stacks will integrate robotic process automation (RPA), natural language processing (NLP), and IoT seamlessly, enabling fully autonomous decision-making systems. For example, AI-driven smart farms could use integrated stacks to automate irrigation, pest control, and harvesting.

AI Tech Stacks in a Post-Quantum Computing Era

Quantum computing is moving from a distant concept toward an emerging reality. In 2025, AI tech stacks may begin adopting quantum-resistant (post-quantum) cryptography to protect data and models, and experimenting with early-stage quantum processors for complex problem-solving tasks. Companies like IBM and Google are already leading the charge, and their quantum systems are expected to impact industries such as logistics, cryptography, and materials science.

Evolving Role of AI in Sustainability and Green Computing

Sustainability will be at the forefront of AI development. By 2025, AI stacks will increasingly prioritize energy-efficient architectures, including low-power models and optimized hardware. For instance, the use of AI to manage renewable energy grids and reduce carbon footprints in supply chains will gain momentum. Advances in green AI, supported by policies and global initiatives, will drive widespread adoption of eco-friendly AI solutions.

Ethical and Explainable AI

The demand for transparency and accountability in AI systems will redefine tech stack requirements. By 2025, explainable AI (XAI) frameworks will become a standard feature, helping ensure that models are interpretable and aligned with ethical guidelines. Organizations will adopt tools to audit and govern AI systems, particularly in high-stakes fields like healthcare and finance.

The AI tech stacks of 2025 will reflect a fusion of cutting-edge technology, ethical considerations, and sustainability goals, paving the way for a smarter, greener, and more responsible future.

Conclusion

A well-defined AI tech stack is essential for driving innovation and achieving scalability, whether you’re a startup focusing on agility or an enterprise aiming for long-term ROI. As AI technologies evolve, organizations must continuously adapt their stacks to stay competitive and meet future challenges.

Take the first step by assessing your needs and experimenting with accessible tools. Stay informed about emerging trends like edge AI and generative models to build a future-ready AI stack. Start small, think big, and let SmartDev be your partner in leveraging AI to transform your business and achieve unparalleled success.

Frequently Asked Questions (FAQs)

1. What is the best AI tech stack for small businesses?

Start with open-source tools like Scikit-learn or TensorFlow for affordability and versatility. Combine these with cloud services like AWS or Google Cloud, which offer flexible, pay-as-you-go pricing models. Platforms like Hugging Face can also provide pre-trained models to reduce development time and costs.

2. How does MLOps improve AI stack efficiency?

MLOps platforms such as Kubeflow and MLflow streamline the lifecycle of AI projects. They help automate deployment pipelines, monitor model performance in real time, and enable version control for datasets and models, ensuring consistent and reliable updates.
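The version-control and monitoring ideas above can be sketched without any particular platform. The Python below (standard library only) is a hypothetical, minimal illustration and not the MLflow or Kubeflow API: it identifies a dataset version by hashing its bytes, logs each training run’s parameters and metrics against that version, and flags a model for retraining when a live metric falls more than an assumed tolerance (0.05 by default) below the best logged run. `RunRegistry`, `content_version`, and `needs_retraining` are illustrative names.

```python
import hashlib
from dataclasses import dataclass, field


def content_version(artifact: bytes) -> str:
    """Short SHA-256 digest: identical bytes always map to the same version ID."""
    return hashlib.sha256(artifact).hexdigest()[:12]


@dataclass
class Run:
    run_id: int
    dataset_version: str  # ties the run to the exact data it was trained on
    params: dict
    metrics: dict


@dataclass
class RunRegistry:
    """Hypothetical in-memory registry; real MLOps tools persist this data."""
    runs: list = field(default_factory=list)

    def log_run(self, dataset: bytes, params: dict, metrics: dict) -> Run:
        run = Run(
            run_id=len(self.runs) + 1,
            dataset_version=content_version(dataset),
            params=dict(params),
            metrics=dict(metrics),
        )
        self.runs.append(run)
        return run

    def best_run(self, metric: str) -> Run:
        """Return the logged run with the highest value of `metric`."""
        return max(self.runs, key=lambda r: r.metrics[metric])


def needs_retraining(live_score: float, baseline_score: float,
                     tolerance: float = 0.05) -> bool:
    """Flag a model whose live metric dropped more than `tolerance` below baseline."""
    return baseline_score - live_score > tolerance


if __name__ == "__main__":
    registry = RunRegistry()
    data_v1 = b"id,label\n1,0\n2,1\n"  # toy dataset bytes, for illustration
    registry.log_run(data_v1, {"learning_rate": 0.01}, {"f1": 0.71})
    registry.log_run(data_v1, {"learning_rate": 0.001}, {"f1": 0.74})

    best = registry.best_run("f1")
    print(best.run_id, best.params, best.dataset_version)
    print(needs_retraining(live_score=0.66, baseline_score=best.metrics["f1"]))  # True
```

Hashing content means two runs trained on byte-identical data share a version ID, so a metric change between them can be attributed to parameters rather than data. Production platforms layer persistence and artifact storage on top of this basic idea.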

3. Can I build an AI stack without coding skills?

Largely, yes. No-code platforms like DataRobot and Google Cloud AutoML (now part of Vertex AI) make AI accessible to non-technical users. These tools provide drag-and-drop interfaces and pre-configured models, enabling users to train and deploy AI solutions without writing code, although complex or highly customized use cases may still require developers.

—

References

- Top 10 AI Trends Shaping 2025: What You Need to Prepare For Now | All About AI

- 10 Artificial Intelligence Trends in 2025 To Stay Ahead | Northwest Education

- AI in Action: 6 Business Case Studies on How AI-Based Development is Driving Innovation Across Industries | Appinventiv

- 100+ AI Use Cases & Applications: In-Depth Guide [’25] | AI Multiple Research

- Gartner Top 10 Strategic Technology Trends for 2025 | Gartner

- Tesla’s Elon Musk Was Right About Self-Driving Cars. Just Ask Nvidia’s Jensen Huang | Barron’s

- How Netflix & Amazon Use AI to do Better Customer Segmentation | Tegfocus

- Using AI to give doctors a 48-hour head start on life-threatening illness | Google DeepMind