1. What is Ethical AI Development?

In the digital age, Artificial Intelligence (AI) has transformed nearly every aspect of society, from healthcare and finance to entertainment and manufacturing. As AI technology continues to evolve, there is an increasing need for its development to be guided by ethical principles.

Ethical AI development refers to the creation and deployment of AI systems that adhere to moral guidelines and prioritize fairness, transparency, accountability, and the well-being of society.

This article explores the core aspects of ethical AI development, the challenges it faces, and the future trends that will shape its evolution.

Keep reading!

1.1. Definition of Ethical AI

Ethical AI is the practice of developing AI systems that operate within the bounds of human-centric values. These values typically include fairness, accountability, transparency, privacy, and respect for human rights. The goal is to ensure that AI systems are designed and implemented in ways that benefit society without causing harm.

As AI’s capabilities grow, its ethical implications become more significant, requiring developers and stakeholders to consider the broader impact of AI systems.

1.2. Why AI Ethics Matters: The Impact on Society & Business

AI has the potential to revolutionize industries, but its unchecked development can have serious consequences. For instance, biased AI algorithms can lead to discrimination in hiring processes, healthcare diagnostics, and criminal justice decisions.

Businesses that fail to implement ethical AI risk not only reputational damage but also legal repercussions, as regulatory bodies and governments begin to introduce laws around AI ethics. AI systems that operate without ethical considerations can perpetuate social inequalities, making it imperative for developers to build AI with ethics in mind.

1.3. The Difference Between Responsible AI, Fair AI, and Ethical AI

While the terms responsible AI, fair AI, and ethical AI are often used interchangeably, they have subtle differences.

- Responsible AI usually refers to the practical governance of AI: the policies, processes, and accountability structures that keep an AI system's development and use within the bounds of laws and societal norms.

- Fair AI focuses specifically on identifying and reducing bias, so that AI systems do not produce unjustified differences in outcomes for people based on race, gender, socioeconomic background, or similar attributes.

- Ethical AI encompasses both of these principles, along with additional aspects such as transparency, accountability, and human oversight.

1.4. Real-World Ethical AI Failures & Their Consequences

The lack of ethical AI development has led to several real-world failures. One prominent example is the use of biased algorithms in criminal justice systems, such as predictive policing tools that disproportionately target minority communities.

Another example is the controversy surrounding facial recognition technology, which has been found to have higher error rates for people of color, leading to wrongful arrests and surveillance concerns. These failures highlight the need for rigorous ethical guidelines in AI development to prevent harm and ensure the technology benefits everyone equally.

2. The Core Principles of Ethical AI Development

The development of artificial intelligence (AI) must be guided by ethical principles to ensure that these technologies serve humanity responsibly and equitably. As AI systems become increasingly integrated into various sectors of society, there is an urgent need to establish a framework that prioritizes transparency, fairness, accountability, and alignment with human values. These core principles form the foundation for ethical AI development and help mitigate potential risks and harmful impacts.

2.1. Transparency & Explainability (Black Box AI vs. White Box AI)

One of the most significant challenges facing AI development today is the opacity of its decision-making processes. Many AI systems, particularly those built on machine learning algorithms, are often referred to as “black box” models. This means that, while the systems can produce highly accurate predictions and decisions, it is difficult or even impossible for developers and end-users to fully understand how these conclusions are drawn. The lack of transparency can raise concerns about fairness, accountability, and trust in AI technologies.

Ethical AI development emphasizes the need for explainability—ensuring that AI systems are transparent and that their decision-making processes can be understood and justified. This is critical for fostering trust and confidence in AI systems, as users and stakeholders need to be able to comprehend the reasoning behind AI-generated outcomes.

White box AI models, such as linear models and shallow decision trees, promote this transparency because their internal logic can be inspected directly, allowing developers and users to trace how the system reaches each decision. This not only enhances the credibility of AI but also allows for more effective troubleshooting, adjustments, and improvements.

The trade-off between black box and white box AI lies in complexity and performance. While white box models tend to be more transparent, they may sometimes perform less effectively than their black box counterparts, particularly in complex tasks. Nonetheless, the ethical emphasis is on balancing the desire for high performance with the need for explainability and accountability, ultimately ensuring that AI technologies are both effective and aligned with societal values.
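To make the white box idea concrete, here is a minimal sketch of a linear scoring model, one of the simplest interpretable model families. Its score is a weighted sum, so each input's contribution to a decision can be read off directly. The feature names, weights, bias, and threshold are hypothetical values chosen only for illustration, not a real or validated model.

```python
from typing import Dict

# Hypothetical weights for an illustrative screening score; not a real or validated model.
WEIGHTS: Dict[str, float] = {"income_k": 0.04, "debt_ratio": -2.5, "years_employed": 0.3}
BIAS = -1.0
THRESHOLD = 0.0  # assumed rule: approve when the score is at or above this value


def explain(applicant: Dict[str, float]) -> Dict[str, float]:
    """Each feature's additive contribution (weight * value) to the score."""
    return {name: weight * applicant[name] for name, weight in WEIGHTS.items()}


def decide(applicant: Dict[str, float]) -> bool:
    """The score is the bias plus the sum of contributions, so every step can be traced."""
    score = BIAS + sum(explain(applicant).values())
    return score >= THRESHOLD


applicant = {"income_k": 50.0, "debt_ratio": 0.4, "years_employed": 2.0}
# Sort contributions by magnitude to show which inputs mattered most for this decision.
for feature, contribution in sorted(explain(applicant).items(), key=lambda kv: -abs(kv[1])):
    print(f"{feature:>15}: {contribution:+.2f}")
print("approved:", decide(applicant))
```

Deep neural networks offer no comparably direct reading of their internal logic, which is why post-hoc explanation methods such as feature-attribution techniques are typically applied to them instead.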

2.2. Fairness & Bias Mitigation (Addressing AI Discrimination)

Fairness is a cornerstone of ethical AI development, and its importance cannot be overstated. AI systems must be designed to avoid outcomes that discriminate based on race, gender, age, socioeconomic status, or other characteristics that could lead to unjust treatment. These biases often stem from the data used to train AI models. If the datasets reflect historical inequalities or prejudices, the AI system may perpetuate or even amplify these biases, leading to discriminatory outcomes.

To ensure fairness, developers must focus on identifying and mitigating biases throughout the AI development process. This involves using diverse and representative datasets, ensuring that AI models are tested across a variety of conditions and demographic groups, and implementing mechanisms for continuous monitoring. Ethical AI development also necessitates the use of bias detection tools and audits, which can help flag potential discriminatory behavior and allow for corrective actions.
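As an example of what a simple bias detection check can look like, the sketch below computes the share of positive decisions per group and the ratio of the lowest rate to the highest (often called the disparate impact ratio). The 0.8 cut-off follows the "four-fifths rule" from US employment-selection guidance; treating it as a hard pass/fail threshold here is a simplifying assumption, and the audit data is made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

FOUR_FIFTHS = 0.8  # rule-of-thumb threshold, used here as a simplifying assumption


def selection_rates(records: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive decisions (1) per group, from (group, decision) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest


# Made-up audit data: group A is selected 6 of 10 times, group B 3 of 10 times.
decisions = [("A", 1)] * 6 + [("A", 0)] * 4 + [("B", 1)] * 3 + [("B", 0)] * 7
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
if ratio < FOUR_FIFTHS:
    print(f"Flag for review: ratio {ratio:.2f} is below {FOUR_FIFTHS}")
```

A low ratio is a signal to investigate, not proof of discrimination on its own; the audit step is where humans examine why the rates differ.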

Ultimately, ensuring fairness in AI requires an ongoing commitment to evaluating and adjusting algorithms, acknowledging that biased outcomes can have real-world consequences, particularly in areas such as hiring, lending, law enforcement, and healthcare. Ethical AI developers must adopt a proactive approach to avoid perpetuating existing societal biases and strive to create systems that contribute to equality and inclusivity.

2.3. Accountability & Responsibility (Who is Responsible for AI’s Actions?)

As AI systems become more autonomous and capable of making complex decisions, the question of accountability becomes increasingly important. When an AI system makes a harmful or unethical decision, who should bear responsibility? This issue is particularly pressing in contexts such as healthcare, criminal justice, and autonomous vehicles, where AI decisions can have life-altering consequences.

Ethical AI development requires that clear frameworks for accountability be established. This involves creating systems where responsibility is explicitly assigned to developers, organizations, and regulators, ensuring that AI systems are not used in ways that could harm individuals or society. Accountability also includes addressing the question of liability in cases where an AI system causes damage or violates rights. It is crucial to define who is responsible for the actions of AI, whether it is the developers who created the system, the companies that deploy it, or the regulators who oversee its compliance.

The ethical principle of accountability ensures that AI systems are subject to human oversight and control. Moreover, it reinforces the idea that AI technologies should be used in a manner that is ethically sound and legally compliant, ensuring that any harm caused by AI is addressed in a transparent and fair manner.

2.4. Privacy & Data Protection (GDPR, CCPA, and AI Regulations)

In an era where data is considered one of the most valuable assets, privacy protection is a critical concern in AI development. AI systems often require access to vast amounts of data, including personal and sensitive information. This data is used to train models, refine predictions, and enhance decision-making capabilities. However, the collection and use of such data can raise serious privacy concerns, particularly if it is done without adequate safeguards or user consent.

Ethical AI development must prioritize privacy and data protection, ensuring that AI systems comply with established regulations such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States. These regulations focus on protecting individuals’ personal data and ensuring that it is used in a way that respects privacy rights. Furthermore, they emphasize transparency, consent, and control, ensuring that individuals have a clear understanding of how their data is being collected and used, and that they have the ability to opt out when necessary.

Adhering to privacy laws not only helps prevent unauthorized data access but also builds trust with users, reassuring them that their personal information is protected. Ethical AI developers must implement robust data protection practices, such as encryption, anonymization, and access control, to ensure that AI systems operate in compliance with privacy regulations and uphold users’ rights.
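Full anonymization is harder than it looks, so a common first step is pseudonymization: replacing direct identifiers with tokens that cannot be linked back without a separately held secret. The sketch below uses a keyed hash (HMAC-SHA-256 from Python's standard library). Under the GDPR, pseudonymized data is still personal data, because whoever holds the key can re-link it. The field names and inline key generation are illustrative assumptions; a real system would load the key from a managed secret store.

```python
import hashlib
import hmac
import secrets
from typing import Dict

# Assumption: generated inline for the demo; in practice this comes from a secrets manager.
PSEUDONYM_KEY = secrets.token_bytes(32)

DIRECT_IDENTIFIERS = {"name", "email"}  # hypothetical field names


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Deterministic keyed hash: same input and same key give the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: Dict[str, str], key: bytes = PSEUDONYM_KEY) -> Dict[str, str]:
    """Replace direct identifiers with tokens and leave other fields untouched."""
    return {
        field: pseudonymize(value, key) if field in DIRECT_IDENTIFIERS else value
        for field, value in record.items()
    }


record = {"name": "Ada Lovelace", "email": "ada@example.com", "age_band": "30-39"}
safe_record = prepare_record(record)
```

A keyed hash is used rather than a plain SHA-256 because an unkeyed hash of an email address can be reversed simply by hashing a list of candidate addresses and comparing. Because tokens are deterministic, records for the same person can still be joined for model training without exposing who that person is.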

2.5. Human Oversight & Autonomy (Avoiding Over-Reliance on AI)

While AI has the potential to enhance decision-making and optimize processes, ethical AI development emphasizes the importance of maintaining human oversight. Human judgment remains essential in critical areas, such as healthcare, criminal justice, and other domains where life-or-death decisions are made. Over-reliance on AI systems can lead to unforeseen consequences, as AI is not infallible and can make mistakes or act in ways that are inconsistent with human values.

Ethical AI prioritizes human autonomy, ensuring that AI systems act as tools to assist and complement human decision-making, rather than replace it entirely. In healthcare, for example, AI may provide recommendations or diagnoses, but it is ultimately the responsibility of healthcare professionals to make final decisions in the best interests of the patient. This ensures that human empathy, ethical reasoning, and accountability remain central in decision-making processes, particularly in sensitive or high-stakes situations.

AI should be viewed as an augmentation to human expertise, not a substitute for it. Ethical AI frameworks must include mechanisms for human oversight, enabling users to intervene and override AI decisions when necessary. This balance ensures that AI remains a useful tool without undermining human autonomy.
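One common way to build in the ability to intervene is to route low-confidence predictions to a human reviewer instead of acting on them automatically, and to let a reviewer's decision always take precedence. The sketch below assumes the model exposes a confidence score between 0 and 1; the 0.9 threshold and the `Decision` structure are hypothetical choices.

```python
from dataclasses import dataclass
from typing import Optional

REVIEW_THRESHOLD = 0.9  # hypothetical; would be tuned per domain and risk level


@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool
    final_label: Optional[str] = None  # stays None until the decision is final


def route(label: str, confidence: float, threshold: float = REVIEW_THRESHOLD) -> Decision:
    """Auto-apply confident predictions and queue the rest for a human."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence >= threshold:
        return Decision(label, confidence, needs_human_review=False, final_label=label)
    return Decision(label, confidence, needs_human_review=True)


def human_override(decision: Decision, reviewer_label: str) -> Decision:
    """A reviewer's call replaces the model's suggestion and closes the review."""
    decision.final_label = reviewer_label
    decision.needs_human_review = False
    return decision


queued = route("deny", confidence=0.62)
if queued.needs_human_review:
    queued = human_override(queued, reviewer_label="approve")
```

In the highest-stakes settings described above, a policy might go further and require human sign-off on every case, using the model's output only as a recommendation.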

2.6. Sustainability & Environmental Impact of AI

The rapid growth of AI has raised concerns about the environmental impact of these technologies, particularly when it comes to energy consumption. Large-scale machine learning models require significant computational resources, which often translate into high energy consumption and an increased carbon footprint. As AI systems become more widespread, there is a growing need to consider the environmental consequences of their development and deployment.

Ethical AI development includes efforts to optimize algorithms for energy efficiency, reduce the environmental impact of AI infrastructure, and align AI technologies with sustainability goals. For instance, developers can design AI systems to minimize the computational power needed to perform tasks or adopt greener energy sources for data centers. This approach helps ensure that AI technologies contribute positively to society while mitigating their ecological impact.
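To see why both levers matter, a rough back-of-the-envelope estimate multiplies hardware power draw by run time, scales it by the data center's power usage effectiveness (PUE) to account for cooling and other overhead, and converts energy to emissions using the grid's carbon intensity. Every number in the example is a hypothetical placeholder, not a measurement of any real model or facility.

```python
from typing import Tuple


def compute_footprint(
    num_gpus: int,
    watts_per_gpu: float,
    hours: float,
    pue: float,
    grid_kg_co2e_per_kwh: float,
) -> Tuple[float, float]:
    """Return (facility energy in kWh, emissions in kg CO2e) for one compute job."""
    it_energy_kwh = num_gpus * watts_per_gpu * hours / 1000.0  # W * h -> kWh
    facility_energy_kwh = it_energy_kwh * pue  # PUE >= 1 adds cooling and other overhead
    return facility_energy_kwh, facility_energy_kwh * grid_kg_co2e_per_kwh


# Hypothetical job: 8 GPUs at 400 W for 100 hours, PUE 1.2, run on two different grids.
for grid_name, intensity in [("fossil-heavy grid", 0.4), ("low-carbon grid", 0.05)]:
    energy, emissions = compute_footprint(8, 400.0, 100.0, 1.2, intensity)
    print(f"{grid_name}: {energy:.0f} kWh, {emissions:.1f} kg CO2e")
```

Under these assumptions the job uses about 384 kWh on either grid; only the emissions change. Reducing computation lowers the energy term, while cleaner energy sources lower the carbon-intensity term.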

Additionally, as the global focus on climate change intensifies, integrating sustainability into AI development is not just an ethical obligation but a societal imperative. Ethical AI must consider both the short-term and long-term effects of AI technologies on the environment, ensuring that AI’s benefits do not come at the cost of the planet’s health.

3. Ethical AI Challenges & Risks

Despite the core principles of ethical AI development, a variety of challenges and risks persist. These challenges must be proactively addressed to ensure that AI technologies benefit society without causing unintended harm.

The risks associated with ethical AI span multiple sectors, ranging from algorithmic biases to privacy violations, misinformation, workforce disruption, and even military applications. A comprehensive examination of these risks is essential to the responsible development and deployment of AI technologies.

3.1. Bias in AI Algorithms & Datasets

One of the most prominent ethical challenges in AI development is the inherent bias that can exist in both algorithms and the datasets used to train AI systems.

AI algorithms learn patterns from historical data, which, if biased, can perpetuate and even magnify existing societal inequalities. This becomes particularly problematic in high-stakes fields such as criminal justice, hiring, lending, and healthcare, where biased AI systems can lead to discriminatory outcomes.

For example, predictive policing algorithms trained on biased historical crime data may unfairly target minority communities. Similarly, hiring algorithms may disadvantage women or underrepresented groups by learning from historical hiring decisions that favored certain demographics.

To address this issue, developers must actively work to identify and mitigate biases in both the datasets and the algorithms themselves. Techniques such as fairness-aware modeling, diverse data sourcing, and bias auditing are essential to reducing the risk of perpetuating discrimination. Additionally, involving diverse teams in AI development and using transparency measures such as explainability can help surface hidden biases and make AI systems fairer.
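One well-known pre-processing technique for mitigating dataset bias is reweighing (Kamiran and Calders): each training example receives a weight so that group membership and the label become statistically independent in the weighted data. The weight for an example in group g with label y is P(g) · P(y) / P(g, y). The sketch below computes these weights in plain Python; the group and label values are hypothetical, and the weights would then be passed to any learner that accepts per-sample weights.

```python
from collections import Counter
from typing import List, Sequence


def reweighing_weights(groups: Sequence[str], labels: Sequence[int]) -> List[float]:
    """Weight = P(group) * P(label) / P(group, label), computed from counts."""
    if len(groups) != len(labels):
        raise ValueError("groups and labels must have the same length")
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # Algebraically equal to (g/n) * (y/n) / (gy/n), written with fewer divisions.
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical history: group A has 3 of 4 positive labels, group B only 1 of 4.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
```

Here the over-represented combinations (A with label 1, B with label 0) get weight 2/3 and the under-represented ones get weight 2, so both groups have a 50% positive rate in the weighted data. Reweighing only changes the training data's statistics; it cannot fix labels that were themselves recorded unfairly, so auditing is still needed.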

3.2. AI & Misinformation (Deepfakes, Fake News, AI-Generated Content)

AI technologies, especially those used in content generation, present significant ethical concerns related to misinformation. The proliferation of deepfakes—highly realistic, AI-generated videos and audio that manipulate reality—raises serious issues regarding the authenticity of media. These technologies can be used to create fake news, misleading information, and even fabricated events, which can have severe consequences for public opinion, democracy, and societal trust.

For instance, deepfakes can be weaponized to spread political disinformation, damage reputations, or incite violence.

AI-generated content is also becoming more prevalent in journalism, where algorithms are used to draft articles and reports. This creates the risk that false or misleading content could be produced at scale, compromising the integrity of information. Moreover, the ease of creating convincing fake media could erode trust in all forms of digital content.

To counter these risks, ethical AI development must prioritize strategies for detecting and combating misinformation. Approaches such as AI-driven detection tools for deepfakes, increased media literacy education, and transparency regarding AI-generated content are critical in mitigating the negative impact of misinformation on society.
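Transparency about AI-generated content can be supported technically by attaching a machine-readable disclosure to each generated item and making it tamper-evident. The sketch below signs a small label together with the content using an HMAC, so a platform holding the key can detect edits to either one. This is a simplified, hypothetical scheme for illustration; industry efforts such as the C2PA content-credentials specification use certificate-based signatures and a much richer manifest format. The key and the generator name are placeholders.

```python
import hashlib
import hmac
import json
from typing import Any, Dict

SIGNING_KEY = b"replace-with-a-managed-secret"  # hypothetical placeholder key


def _signature(content: str, label: Dict[str, Any], key: bytes) -> str:
    """Sign a canonical JSON encoding of the content and its label together."""
    payload = json.dumps({"content": content, "label": label}, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def label_content(content: str, generator: str, key: bytes = SIGNING_KEY) -> Dict[str, Any]:
    """Wrap generated content with an 'ai_generated' disclosure and a signature."""
    label = {"ai_generated": True, "generator": generator}
    return {"content": content, "label": label, "signature": _signature(content, label, key)}


def verify(item: Dict[str, Any], key: bytes = SIGNING_KEY) -> bool:
    """True only if neither the content nor the label was altered after signing."""
    expected = _signature(item["content"], item["label"], key)
    return hmac.compare_digest(expected, item["signature"])


item = label_content("Generated summary text.", generator="example-model")
```

A label like this can still be stripped off entirely, so provenance metadata complements detection tools and media literacy rather than replacing them.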

3.3. Surveillance & Privacy Violations

The deployment of AI technologies in surveillance systems presents a particularly troubling ethical challenge, especially regarding the potential violations of privacy. AI-powered surveillance tools can track individuals’ movements, behaviors, and even predict their actions, leading to concerns about mass surveillance and the erosion of civil liberties. Facial recognition technology, for example, can be used to identify individuals in public spaces without their knowledge or consent, creating a chilling effect on freedom of expression and assembly.

Moreover, AI systems are often combined with big data analytics, enabling authorities or organizations to gather and process large amounts of personal information about individuals. Collecting and using this data without proper safeguards can lead to abuses such as unjust profiling, discrimination, or the exploitation of sensitive information. Because privacy is widely recognized as a fundamental human right, the ethical use of AI in surveillance requires strict regulations, transparency, and accountability.

Ethical AI in surveillance must prioritize the protection of individual rights and privacy. This includes ensuring that AI technologies are used transparently, with clear guidelines and oversight, and that data is handled in a secure and ethical manner. Moreover, AI systems should be designed with the capability for human intervention, ensuring that automated surveillance does not infringe on personal freedoms.
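Handling data in a secure and ethical manner usually includes data minimization: keeping only the fields a stated purpose needs and deleting records once a retention period expires. The sketch below applies both rules to surveillance-style event records. The 30-day window, the field names, and the record format are hypothetical, and timestamps are assumed to be timezone-aware.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, Iterable, List, Optional

RETENTION = timedelta(days=30)  # hypothetical retention period
# Hypothetical allow-list: identity data such as face embeddings is never kept.
ALLOWED_FIELDS = {"camera_id", "timestamp", "event_type"}


def minimize(
    records: Iterable[Dict[str, Any]],
    now: Optional[datetime] = None,
    retention: timedelta = RETENTION,
) -> List[Dict[str, Any]]:
    """Delete expired records and strip every field not on the allow-list."""
    if now is None:
        now = datetime.now(timezone.utc)
    kept: List[Dict[str, Any]] = []
    for record in records:
        if now - record["timestamp"] > retention:
            continue  # past the retention window: drop it instead of archiving it
        kept.append({key: value for key, value in record.items() if key in ALLOWED_FIELDS})
    return kept
```

Running a job like this on a schedule turns a written retention policy into something that is actually enforced, and it gives auditors a single place to check what is kept and for how long.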

3.4. AI & Job Displacement: Ethical Considerations in Workforce Automation

As AI technologies increasingly automate tasks previously performed by humans, concerns about job displacement and the socioeconomic impact on workers intensify. While AI has the potential to increase efficiency and reduce operational costs, it also poses significant risks to employment across various industries.

For instance, sectors such as manufacturing, retail, and logistics are already seeing job losses due to automation, and as AI continues to advance, more complex and higher-skilled jobs may also be at risk.

The ethical challenge here lies in ensuring that the benefits of AI-driven automation are distributed fairly and do not disproportionately affect vulnerable workers or exacerbate existing inequalities. Developers and policymakers must consider the societal implications of job displacement, including how to provide retraining and upskilling opportunities for workers whose jobs are automated. Additionally, the deployment of AI should aim to augment human labor rather than replace it entirely, creating new opportunities for workers to engage in higher-value tasks.

Addressing the ethical considerations of AI-driven workforce changes involves fostering collaborations between AI developers, businesses, and governments to ensure the implementation of policies that promote equitable access to retraining and support for affected workers.

3.5. Weaponization of AI & Military AI Ethics

AI’s potential use in military applications raises profound ethical concerns, particularly regarding accountability, escalation, and the potential for misuse. Autonomous weapons—systems that can operate without human intervention—have become a focal point of debate in military AI ethics. These weapons, which can make life-or-death decisions without direct human involvement, present serious risks in terms of accountability and control. For example, if an autonomous weapon system mistakenly targets civilians, it may be unclear who is responsible for the resulting harm.

Furthermore, the use of AI in warfare could lead to an arms race, with nations racing to develop increasingly sophisticated autonomous weaponry, potentially escalating conflicts and increasing the risk of unintended consequences. The prospect of AI-powered warfare also raises concerns about the rules of engagement and the adherence to international humanitarian laws, which aim to protect civilians during conflict.

Ethical AI development in the military context must focus on ensuring that AI systems are used in a manner consistent with international humanitarian law, with robust mechanisms for accountability and oversight. Additionally, military AI should be designed to minimize harm to civilians and prevent escalation, ensuring that human oversight remains integral in critical decision-making processes.

3.6. AI in Healthcare & Ethical Dilemmas in Life-or-Death Decisions

AI’s integration into healthcare presents a unique set of ethical dilemmas, particularly in life-or-death situations where the stakes are incredibly high. AI systems are increasingly being used to assist in diagnosing diseases, recommending treatments, and even performing surgeries. While these technologies have the potential to enhance the accuracy and efficiency of medical care, they also introduce challenges related to the delegation of critical decisions to machines.

One significant concern is the potential for over-reliance on AI in healthcare decision-making, which may undermine the role of human medical professionals. For example, a misdiagnosis made by an AI system could have devastating consequences for a patient. Moreover, AI’s ability to make decisions based on algorithms raises questions about whether it can fully account for the complexity of individual cases, including factors such as patient preferences, emotions, and values.

Ethical AI development in healthcare must prioritize patient well-being and autonomy, ensuring that AI systems are used as supportive tools rather than replacing human judgment. Additionally, robust human oversight and transparency in AI decision-making processes are necessary to ensure that patients receive the best possible care.

In conclusion, while the potential benefits of AI are immense, these ethical challenges highlight the need for careful, responsible development and deployment of AI technologies. Addressing these challenges requires a multi-disciplinary approach involving developers, policymakers, and stakeholders from various sectors to create frameworks and guidelines that promote fairness, accountability, and transparency in AI systems.


4. ISO & IEEE AI Ethics Standards

The International Organization for Standardization (ISO) and the Institute of Electrical and Electronics Engineers (IEEE) have both taken significant steps in setting ethical standards for AI. These standards are essential in guiding the design, development, and deployment of AI systems in a manner that respects human values.

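- ISO/IEC 23894:2023, for example, provides guidance on managing the risks specific to AI systems, while the technical report ISO/IEC TR 24368 gives an overview of ethical and societal concerns such as fairness, privacy, and respect for human dignity.

- The IEEE’s Global Initiative on Ethics of Autonomous and Intelligent Systems has produced Ethically Aligned Design, a framework that emphasizes transparency, accountability, and inclusivity in AI, and its work informed the IEEE 7000 series of standards on topics such as ethical system design and transparency.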

Their standards help ensure that AI technologies are developed in a way that maximizes societal benefit while minimizing the risks of harm. Both ISO and IEEE are recognized as critical players in setting ethical guidelines for AI, but their focus differs slightly in scope and application—ISO’s standards are often broader and industry-agnostic, while IEEE tends to focus on technological standards and implementation.

4.1. UNESCO’s AI Ethics Recommendations

In 2021, the United Nations Educational, Scientific and Cultural Organization (UNESCO) adopted a comprehensive set of recommendations on the ethics of artificial intelligence. These recommendations are intended to guide AI development globally, emphasizing the need for AI to be human-centric and respect human rights.

UNESCO’s approach to AI ethics is grounded in inclusivity and seeks to ensure equitable access to the benefits of AI. The recommendations stress the importance of ensuring that AI development is driven by shared ethical values, such as fairness, non-discrimination, and the protection of privacy.

UNESCO also advocates for the creation of regulatory frameworks at the national and international levels to safeguard against the potential misuse of AI, such as its use for mass surveillance or discriminatory practices. While UNESCO’s recommendations are not legally binding, they serve as a moral and ethical guideline for nations and organizations seeking to implement AI technologies in a responsible and inclusive manner.

4.2. The EU’s AI Act: Ethical AI Compliance in Europe

The European Union (EU) has taken a proactive, regulatory approach to AI ethics through the AI Act (Regulation (EU) 2024/1689), adopted in 2024 and one of the most comprehensive legislative frameworks for regulating AI in the world.

The AI Act aims to ensure that AI systems developed and deployed in the EU are safe, ethical, and trustworthy. Its central feature is a risk-based approach that classifies AI systems according to their potential risks. It bans outright a small set of practices deemed to pose unacceptable risk, designates high-risk AI systems (such as those used in healthcare, transportation, and law enforcement), and imposes stricter requirements on them, including transparency obligations and the need for human oversight.
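The risk-based structure can be pictured as a triage step in an internal compliance review. The sketch below maps a few example use cases to the Act's broad tiers (prohibited, high-risk, limited risk with transparency obligations, minimal risk) and lists some associated obligations. The use-case keys and the mapping are a simplified, hypothetical illustration; real classification depends on the Act's text and annexes and on legal analysis, not a lookup table. Unknown use cases deliberately default to escalation rather than to "minimal risk".

```python
from enum import Enum
from typing import Dict, List, Tuple


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited risk (transparency obligations)"
    MINIMAL = "minimal risk"
    UNCLASSIFIED = "needs legal review"


# Hypothetical, simplified examples; not an authoritative reading of the Act.
EXAMPLE_TIERS: Dict[str, RiskTier] = {
    "social_scoring": RiskTier.PROHIBITED,
    "cv_screening": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

OBLIGATIONS: Dict[RiskTier, List[str]] = {
    RiskTier.PROHIBITED: ["do not deploy"],
    RiskTier.HIGH: ["risk management", "data governance", "technical documentation",
                    "record-keeping", "human oversight"],
    RiskTier.LIMITED: ["disclose AI interaction or AI-generated content"],
    RiskTier.MINIMAL: [],
    RiskTier.UNCLASSIFIED: ["escalate to compliance team"],
}


def triage(use_case: str) -> Tuple[RiskTier, List[str]]:
    """Look up a tier; anything not yet classified is escalated, not assumed safe."""
    tier = EXAMPLE_TIERS.get(use_case, RiskTier.UNCLASSIFIED)
    return tier, OBLIGATIONS[tier]
```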

The AI Act also includes provisions for ensuring that AI systems do not promote discrimination or bias, while providing mechanisms for redress in cases of harm caused by AI systems. The EU’s AI Act is seen as a potential model for AI regulation globally, with its focus on accountability, transparency, and human-centric development being key aspects of its ethical framework.

4.3. US AI Regulations & White House AI Executive Orders

In the United States, AI regulation has largely been driven by executive actions, such as the White House’s Executive Orders on Artificial Intelligence. These orders focus on creating a national AI strategy that ensures the development of AI technologies that are transparent, fair, and accountable while also driving innovation.

The U.S. approach places a strong emphasis on promoting technological advancement while addressing the ethical concerns that arise with AI, such as its potential to exacerbate inequalities or be used for surveillance purposes. The National Institute of Standards and Technology (NIST) plays a significant role in providing guidance on the development of trustworthy AI, notably through its voluntary AI Risk Management Framework (AI RMF), and several other agencies are focused on ensuring that AI systems are deployed in ways that promote the common good.

However, the U.S. has faced criticism for lacking a cohesive and comprehensive regulatory framework akin to the EU’s AI Act. U.S. AI regulation instead remains somewhat fragmented, and because executive orders can be revoked or replaced by later administrations, there are ongoing debates about whether comprehensive national legislation is necessary.

4.4. China’s AI Governance & Ethical Considerations

China’s approach to AI ethics and governance has been influenced by the country’s broader political and economic goals. China’s government has set clear priorities in AI development, focusing heavily on economic growth and national security.

In recent years, the Chinese government has introduced a variety of ethical guidelines and policies aimed at shaping the development of AI technologies in a manner that aligns with national interests. These include the Ethical Guidelines for AI issued by the Chinese Ministry of Science and Technology, which stress the need for AI to promote social stability and economic prosperity.

However, there are significant concerns about the balance between these ethical guidelines and the potential for AI technologies to be used for mass surveillance, censorship, and social control. Critics argue that China’s approach to AI governance may prioritize state interests over individual rights, leading to ethical dilemmas regarding privacy and human autonomy.

4.5. Industry-Specific AI Ethics Frameworks (Healthcare, Finance, Tech, etc.)

As AI continues to permeate various industries, it has become increasingly clear that sector-specific ethical frameworks are necessary to address the unique challenges and risks associated with AI applications.

For instance, in the healthcare sector, there is a strong focus on protecting patient privacy and ensuring that AI systems used in diagnostics, treatment planning, and drug development adhere to the highest ethical standards. The use of AI in finance is similarly subject to ethical considerations, with a particular emphasis on transparency in algorithmic decision-making to prevent discrimination and ensure fairness in lending, investment, and insurance processes.

In the technology sector, AI ethics frameworks tend to emphasize accountability, data protection, and human oversight of autonomous systems.

Each industry has its own set of ethical challenges, and as AI continues to evolve, it is critical that these sector-specific frameworks remain flexible and adaptive to new developments in technology and regulatory landscapes. Industry-specific frameworks provide tailored guidance, ensuring that AI development is responsible and aligned with the core values of each sector.

In conclusion, the global landscape of AI ethics regulations is diverse and evolving. As AI technology continues to progress, it is crucial that these regulations continue to adapt and balance the competing priorities of innovation, accountability, fairness, and human rights. Each country and industry faces its own unique challenges, but the shared goal remains clear: the responsible development and use of AI that benefits society as a whole.

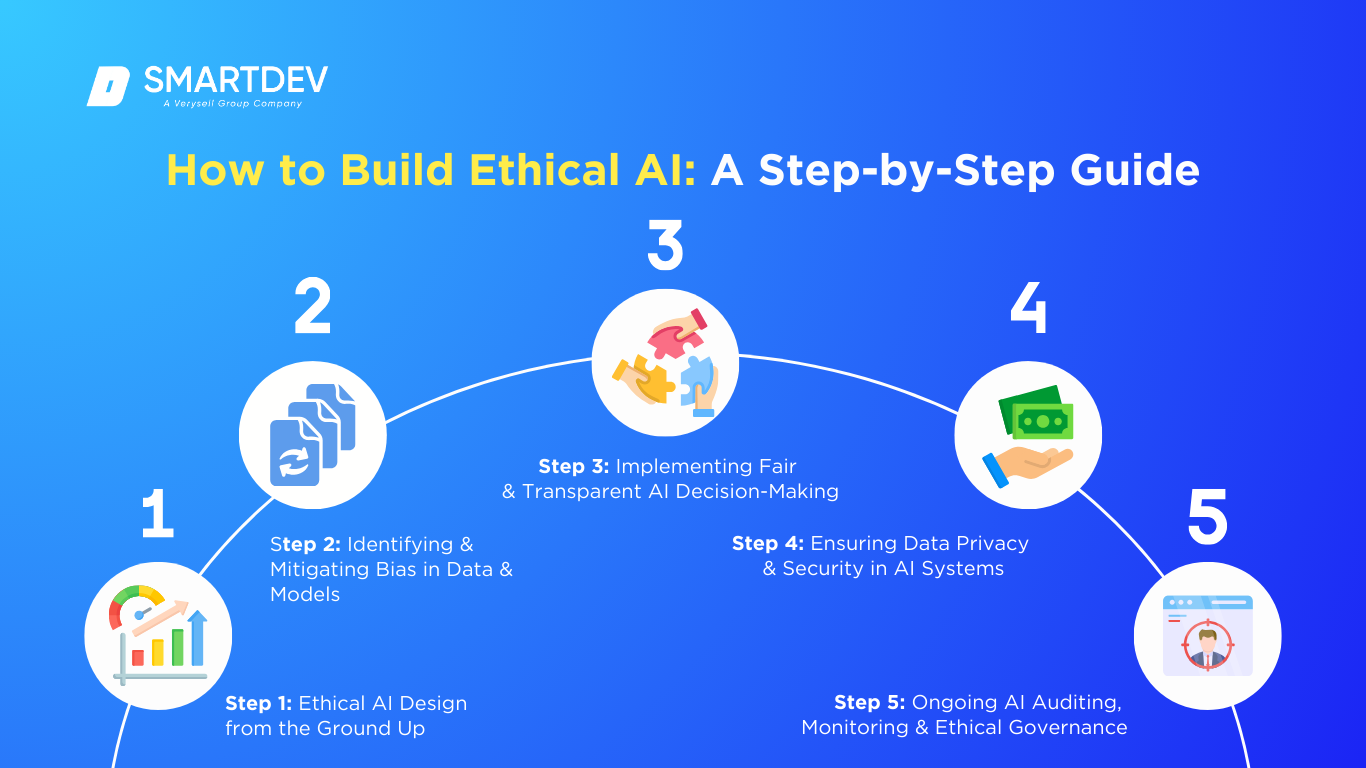

5. How to Build Ethical AI: A Step-by-Step Guide

Building ethical AI is not a one-time process but an ongoing commitment to creating AI systems that prioritize fairness, transparency, and accountability.

Here is a step-by-step guide to help developers and organizations build ethical AI systems.

Step 1: Ethical AI Design from the Ground Up

The first step in creating ethical AI is ensuring that ethical considerations are embedded from the very beginning of the design process. Developers must define clear ethical objectives for the AI system, such as promoting fairness, ensuring privacy, and avoiding bias. This involves:

- Setting ethical principles and values that align with both the intended use and broader societal good.

- Considering the potential societal impact of the AI system during its conceptualization phase.

- Engaging with stakeholders (including underrepresented groups) to understand their needs and concerns.

A well-designed ethical framework guides the AI throughout its lifecycle, ensuring that ethical principles are incorporated into every decision, from design to deployment.

Step 2: Identifying & Mitigating Bias in Data & Models

Bias in AI systems is a major ethical concern. AI models are trained on data that can contain biases—whether racial, gender, socioeconomic, or otherwise—that can be reflected in the outcomes produced by these systems. Identifying and mitigating bias involves:

- Data Auditing: Reviewing datasets to identify any imbalances or biases that could skew results.

- Data Diversification: Ensuring that datasets are diverse and representative of different groups to avoid perpetuating biases.

- Bias Detection Tools: Implementing tools and algorithms that detect and correct biases in the models before deployment.

By prioritizing unbiased data collection and model design, developers can mitigate the risks of discriminatory outcomes.
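
The data-auditing step can be sketched in a few lines. The example below is a minimal illustration, assuming the dataset is a list of Python dicts with a hypothetical group field and a binary label. It reports how well each group is represented and how often each group carries the favorable label, two imbalances an audit typically checks first. The function name, field names, and the 20% minimum-share cutoff are illustrative assumptions, not a standard.

```python
from collections import Counter


def audit_dataset(records, group_key, label_key, min_share=0.2):
    """Summarize representation and label base rates per group.

    `records` is a list of dicts; `group_key` and `label_key` are hypothetical
    field names, and labels are assumed to be 0 (unfavorable) or 1 (favorable).
    `min_share` is an illustrative cutoff for flagging under-represented groups.
    """
    total = len(records)
    counts = Counter(r[group_key] for r in records)
    positives = Counter(r[group_key] for r in records if r[label_key] == 1)

    report = {}
    for group, n in counts.items():
        share = n / total
        report[group] = {
            "share": share,                          # fraction of all records
            "positive_rate": positives[group] / n,   # how often this group has label 1
            "under_represented": share < min_share,  # simple representation flag
        }
    return report


# Usage with a tiny made-up hiring dataset:
sample = [
    {"gender": "F", "hired": 1}, {"gender": "F", "hired": 0},
    {"gender": "M", "hired": 1}, {"gender": "M", "hired": 1},
    {"gender": "M", "hired": 0}, {"gender": "M", "hired": 1},
]
for group, stats in audit_dataset(sample, "gender", "hired").items():
    print(group, stats)
```

A gap in positive rates (here 0.5 versus 0.75) is a prompt for investigation rather than proof of bias: it may reflect historical bias in past decisions or other factors that need domain review.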

Step 3: Implementing Fair & Transparent AI Decision-Making

AI systems must be fair and transparent in their decision-making processes to ensure that users can trust their results. This involves:

- Explainability: Ensuring that AI decisions are explainable and can be understood by users, regulators, and stakeholders. This reduces the “black-box” nature of AI models, fostering trust and accountability.

- Fairness Audits: Regularly assessing whether the AI system produces outcomes that are equitable and non-discriminatory for all user groups.

Transparent decision-making makes bias easier to detect and correct, and it is fundamental to maintaining the ethical integrity of AI systems.
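
One common quantitative check in a fairness audit compares how often each group receives a favorable prediction. The sketch below computes per-group selection rates and the ratio of the lowest to the highest rate, often called the disparate impact ratio. The 0.8 default echoes the "four-fifths rule" used as a rule of thumb in US employment guidance; applying it as a pass/fail threshold for any AI system is an assumption of this example, and no single metric can establish that a system is fair.

```python
def selection_rates(predictions, groups):
    """Fraction of favorable (1) predictions for each group."""
    totals, favorable = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + int(pred == 1)
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact(predictions, groups, threshold=0.8):
    """Return (lowest/highest selection-rate ratio, passes threshold, per-group rates).

    The 0.8 default mirrors the four-fifths rule of thumb; it is a screening
    signal, not a legal or ethical verdict.
    """
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return ratio, ratio >= threshold, rates


preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
ratio, passes, rates = disparate_impact(preds, groups)
print(rates, round(ratio, 2), passes)  # {'A': 0.75, 'B': 0.25} 0.33 False
```

A failing ratio should trigger a closer look at the data, features, and decision threshold rather than an automatic fix.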

Step 4: Ensuring Data Privacy & Security in AI Systems

Data privacy and security are central to ethical AI. Since AI systems often rely on vast amounts of personal and sensitive data, safeguarding this data is crucial. Developers should:

- Implement Robust Privacy Protocols: Adhere to regulations such as the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA) to protect users’ personal data.

- Data Encryption & Secure Storage: Ensure that data is encrypted and stored securely to prevent unauthorized access or data breaches.

- User Consent: AI systems should always operate under the premise of informed consent, ensuring that individuals understand how their data will be used and have control over it.

Incorporating privacy protections into AI systems prevents misuse of personal information and upholds individuals’ rights.
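
As a minimal sketch of two of these practices, the code below excludes records from users who have not consented and replaces direct identifiers with keyed-hash pseudonyms (HMAC-SHA256 from Python's standard library). The field names are hypothetical. Pseudonymization is not encryption: under the GDPR, pseudonymized data still counts as personal data, the secret key must be stored separately from the data, and encryption at rest would require an additional, dedicated library.

```python
import hashlib
import hmac

DIRECT_IDENTIFIERS = ("user_id", "email")  # hypothetical field names


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256) of an identifier.

    The same input and key always give the same token, so records can still be
    linked for analysis, but the token cannot be reversed or recomputed without
    the key.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_training_rows(rows, secret_key: bytes):
    """Keep only consenting users' rows and swap direct identifiers for a token."""
    prepared = []
    for row in rows:
        if not row.get("consented_to_training", False):
            continue  # no informed consent: exclude the row entirely
        clean = {k: v for k, v in row.items() if k not in DIRECT_IDENTIFIERS}
        clean["subject_token"] = pseudonymize(row["user_id"], secret_key)
        prepared.append(clean)
    return prepared


rows = [
    {"user_id": "u-001", "email": "a@example.com", "age": 34, "consented_to_training": True},
    {"user_id": "u-002", "email": "b@example.com", "age": 51, "consented_to_training": False},
]
key = b"load-this-from-a-secrets-manager"  # placeholder; never hard-code real keys
print(prepare_training_rows(rows, key))
```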

Step 5: Ongoing AI Auditing, Monitoring & Ethical Governance

AI systems must be regularly audited and monitored to ensure they continue to operate ethically after deployment. This includes:

- Regular Audits: Conducting routine audits of AI systems to identify and address potential ethical issues, such as bias, transparency problems, or privacy violations.

- Continuous Monitoring: Implementing mechanisms to continuously monitor AI’s performance and adjust it as necessary to align with ethical principles.

- Ethical Governance Frameworks: Establishing clear governance structures, such as AI ethics boards or committees, to oversee the ongoing ethical implications of AI systems.

Ethical governance ensures that AI systems are continuously aligned with moral guidelines and societal needs.
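
Continuous monitoring often starts with detecting drift, meaning production inputs that no longer resemble the data the model was validated on. The sketch below uses the Population Stability Index (PSI), one widely used drift metric, for a single numeric feature. The bin edges, the small epsilon for empty bins, and the 0.25 alert threshold (a common rule of thumb, not a standard) are assumptions. In practice the same comparison can be run separately for each demographic group, so that drift affecting one group is not averaged away.

```python
import bisect
import math


def population_stability_index(baseline, production, edges, eps=1e-6):
    """PSI = sum over bins of (p_prod - p_base) * ln(p_prod / p_base).

    `edges` is a sorted list of bin boundaries; values below the first edge or
    above the last are counted in the first or last bin. `eps` replaces zero
    proportions so the logarithm stays defined.
    """
    n_bins = len(edges) - 1

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            i = bisect.bisect_right(edges, v) - 1
            counts[min(max(i, 0), n_bins - 1)] += 1  # clamp into a valid bin
        return [max(c / len(values), eps) for c in counts]

    base, prod = proportions(baseline), proportions(production)
    return sum((p - b) * math.log(p / b) for b, p in zip(base, prod))


def drift_alert(baseline, production, edges, threshold=0.25):
    """Return the PSI and whether it crosses the (assumed) alert threshold."""
    psi = population_stability_index(baseline, production, edges)
    return psi, psi >= threshold


# Usage: baseline feature values vs. a clearly shifted production sample
psi, alert = drift_alert([1, 1, 3, 3], [5, 5, 5, 5], edges=[0, 2, 4, 6])
print(round(psi, 2), alert)
```

An alert here is a trigger for the audits and governance review described above, not an automatic retraining signal.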

6. The Role of Organizations & Companies in Ethical AI

As artificial intelligence (AI) becomes increasingly integrated into various facets of society, the responsibility for ensuring that it is developed and deployed ethically lies not only with governments and regulators but also with the organizations and companies at the forefront of AI innovation.

The ethical implications of AI extend far beyond technical and operational considerations, influencing societal norms, privacy, equality, and human rights. In this context, organizations, especially those in the tech industry, play a pivotal role in fostering the development of AI technologies that are aligned with ethical standards.

Below is a detailed exploration of the role of organizations and companies in promoting ethical AI.

6.1. Big Tech’s Ethical AI Policies (Google, Microsoft, IBM, Meta, OpenAI)

Major technology companies, often leading the charge in AI research and development, have an outsized influence on the way AI is implemented across industries and sectors. Due to their scale and impact, these companies are tasked with ensuring that their AI systems operate in a manner that aligns with societal values and human rights.

Many of the world’s most influential technology companies have developed comprehensive ethical AI policies that reflect their commitment to responsible AI development. These policies are not only influential in guiding their internal projects but also serve as important benchmarks for other organizations striving to create AI systems that are trustworthy and fair.

- Google: Google was one of the first tech giants to create a set of AI ethics principles, which were introduced in 2018. The company’s principles emphasize key values such as fairness, privacy, and transparency. Google has committed to ensuring that AI will be used for socially beneficial purposes that uphold human rights and do not cause harm. The company also prioritizes accountability in AI decision-making, ensuring that its AI systems can be traced and explained when needed. In line with these principles, Google has made efforts to minimize bias in its AI models and has focused on making its AI technologies more explainable.

- Microsoft: Microsoft’s Responsible AI principles are fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. The company has also expressed a strong commitment to mitigating bias in AI models and improving the explainability of AI decision-making processes. Microsoft further empowers users by giving them greater control over their data, making data privacy a critical aspect of its AI initiatives. Through bodies like the Aether (AI, Ethics, and Effects in Engineering and Research) Committee, Microsoft actively promotes ethical considerations in its AI development, fostering a culture of responsible AI.

- IBM: IBM has long been at the forefront of advocating for ethical AI and has developed a robust set of policies to ensure that its AI systems adhere to ethical principles. IBM’s AI ethics policies emphasize fairness, transparency, and explainability, ensuring that its AI models are transparent and understandable to users. Notably, IBM has created the AI Fairness 360 toolkit, which helps developers assess and mitigate bias in machine learning models. By offering such tools and frameworks, IBM helps other organizations address ethical challenges in AI development.

- Meta (formerly Facebook): Meta, a company that has faced considerable scrutiny for its use of AI in content moderation and advertising, has also developed a set of ethical guidelines to improve the safety, fairness, and privacy of its AI systems. Meta’s AI ethics focus includes addressing algorithmic bias, ensuring privacy protections, and creating safer online environments. The company has implemented tools to identify and mitigate bias in its AI models and is also transparent about its efforts to ensure that its AI technologies respect user privacy.

- OpenAI: OpenAI, as a leading AI research organization, has made ethical considerations a cornerstone of its mission. The company’s charter emphasizes the commitment to developing AI that benefits humanity, ensuring that its technologies are developed in a way that is safe, fair, and aligned with human values. OpenAI places significant emphasis on safety in AI, particularly in the development of its large language models, and is dedicated to ensuring that its technologies do not cause harm to individuals or society.

These companies have recognized the significance of integrating ethical principles into their AI practices and have set standards for other organizations to follow. Their AI ethics policies reflect their recognition of the vast power and influence AI holds, and they are taking steps to ensure that this power is wielded responsibly.

6.2. AI Ethics Committees & Governance Boards

In response to the growing complexity and impact of AI technologies, many organizations have established AI ethics committees or governance boards to oversee AI projects and ensure that they adhere to ethical guidelines.

These committees typically consist of diverse stakeholders, including AI developers, legal experts, ethicists, and representatives from marginalized communities. By bringing together a range of perspectives, these committees ensure that AI systems are developed with consideration for various ethical, legal, and societal concerns.

The role of AI ethics committees and governance boards is multi-faceted and critical to ensuring that AI systems are developed in a responsible manner. Some of their key responsibilities include:

- Reviewing AI Projects for Ethical Risks: Ethics committees assess AI projects from a risk management perspective, identifying potential risks associated with algorithmic biases, privacy violations, and unintended consequences. This proactive review ensures that potential ethical concerns are identified early in the development process.

- Ensuring Transparency and Accountability: These bodies work to guarantee that AI systems are transparent in their operations and that developers are held accountable for the decisions made by their AI systems. This can involve ensuring that AI systems can be explained and understood by users and regulators.

- Providing Guidance on Ethical Dilemmas: AI ethics committees often serve as advisory bodies, offering guidance on how to address complex ethical dilemmas such as algorithmic bias, data privacy, and discrimination. They ensure that AI systems are built in a way that aligns with ethical standards, such as non-discrimination and equity.

In this way, AI ethics committees and governance boards play a crucial role in ensuring that AI systems do not inadvertently cause harm and that they uphold the values of fairness, transparency, and accountability.

6.3. How Startups & Enterprises Can Adopt Ethical AI Best Practices

While large technology companies often have the resources to implement comprehensive ethical AI practices, startups and enterprises—which may have fewer resources—can also adopt ethical AI principles and best practices to ensure that their AI technologies are developed responsibly.

These companies benefit from implementing ethical practices early in the development process, as it helps them avoid costly mistakes, mitigate risks, and build trust with their users.

Key steps for startups and enterprises to adopt ethical AI practices include:

- Integrating Ethics into AI Design: It is essential for startups and enterprises to consider ethical principles such as fairness, transparency, and accountability from the very beginning of the AI design process. By embedding these principles into the design phase, companies can proactively address potential ethical issues.

- Training and Education: Providing regular training and educational opportunities on AI ethics is crucial for raising awareness and ensuring that all stakeholders, including AI developers and business leaders, understand the ethical implications of their work. This could involve offering workshops, seminars, and other forms of training on ethical AI development.

- Collaborating with Experts: Even startups can benefit from collaborating with AI ethics consultants or partnering with academic institutions to ensure that their AI systems adhere to established ethical guidelines. Such partnerships provide access to expert knowledge and resources that may not be available in-house.

By following these steps, startups and enterprises can not only create responsible AI systems but also avoid reputational risks and potential regulatory challenges.

6.4. Ethical AI as a Competitive Advantage in Business

Ethical AI practices can serve as a significant competitive advantage for businesses. As AI technologies become more integrated into everyday life, customers are increasingly concerned about the privacy and fairness of AI systems. Companies that prioritize ethical AI development can leverage this concern to differentiate themselves in the marketplace.

The advantages of ethical AI as a competitive differentiator include:

- Trust and Responsibility: Companies that prioritize ethical AI can foster trust with their customers by demonstrating their commitment to responsible AI practices. Trust becomes a key differentiator in industries such as healthcare, finance, and technology, where the ethical implications of AI systems are particularly high.

- Customer Loyalty: Customers are more likely to support companies that align with their values, especially when it comes to issues such as data privacy and fairness. Ethical AI can help companies attract and retain customers who are increasingly concerned about how their data is used and the potential for algorithmic bias.

- Regulatory Compliance: By adopting ethical AI practices, companies can better position themselves to comply with existing and upcoming AI regulations. This helps mitigate the risk of regulatory penalties or reputational damage, which could arise from non-compliance.

In conclusion, integrating ethical principles into AI development is not just a moral imperative—it is also a strategic business decision. Companies that prioritize responsible AI can enhance their reputation, attract customers, and minimize regulatory risks, ultimately positioning themselves as leaders in the responsible use of AI technology.

7. Case Studies of Ethical & Unethical AI in Action

7.1. IBM’s AI Ethics Framework & Responsible AI Initiatives

IBM has been a pioneer in ethical AI development, emphasizing fairness and transparency in its AI systems. Through its open-source AI Fairness 360 toolkit and its commitment to developing explainable AI, IBM has helped set an industry benchmark for responsible AI initiatives. IBM’s ethics framework aims to detect and reduce bias in its AI systems and to direct them toward uses that promote social good.

7.2. Big Tech’s Ethical AI Failures (AI Bias in Hiring & Image Recognition)

Even companies with strong published AI principles have shipped biased systems. Google faced sharp criticism in 2015 when the Google Photos app automatically labeled photos of Black people as “gorillas,” a stark example of image-recognition technology misidentifying people of color.

Hiring offers a similar lesson. Amazon abandoned an experimental AI recruitment tool, as reported in 2018, after discovering that it penalized résumés associated with women; the model had learned from a decade of résumés submitted mostly by men. These issues demonstrate the importance of ongoing monitoring and refinement of AI systems to ensure they adhere to ethical standards.

7.3. OpenAI’s ChatGPT & Ethical AI Moderation Challenges

OpenAI’s ChatGPT has raised ethical concerns regarding content moderation and bias. Although OpenAI has made efforts to ensure the model generates safe and unbiased responses, challenges remain. Ensuring that AI models like ChatGPT do not perpetuate harmful stereotypes or misinformation is a crucial aspect of ethical AI development.

7.4. The Role of AI in Social Media Manipulation & Political Influence

AI has increasingly been used on social media platforms to manipulate public opinion and influence political outcomes. Recommendation algorithms that optimize for engagement often prioritize sensational content, which can accelerate the spread of misinformation and the manipulation of public sentiment.

Ethical AI development in the social media space must address these concerns by ensuring that algorithms are transparent, accountable, and designed to prioritize accuracy over engagement.

7.5. AI in Criminal Justice: Predictive Policing & Racial Bias Concerns

AI has been deployed in criminal justice systems for predictive policing and risk assessment. However, these systems have been criticized for perpetuating racial bias, as they are often trained on historical data that reflects biased policing practices. Ethical AI in the criminal justice sector must focus on eliminating racial bias and ensuring that AI systems do not disproportionately target marginalized communities.
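
The mechanism behind this concern is a feedback loop: if a system sends patrols where past records are highest, and incidents are mostly recorded where patrols go, the records end up reflecting earlier deployment decisions rather than true incident rates. The deliberately simplified, deterministic simulation below illustrates the effect with hypothetical numbers: two districts with identical true incident rates, one of which starts with a single extra recorded incident. It is a toy model, not a description of any deployed system.

```python
def simulate_patrols(initial_records, true_daily_incidents, days):
    """Toy predictive-policing loop over a fixed number of days.

    Each day the single patrol goes to the district with the most recorded
    incidents (the 'predicted hotspot'), and only incidents in the patrolled
    district get recorded. All inputs are hypothetical.
    """
    records = dict(initial_records)
    for _ in range(days):
        hotspot = max(records, key=records.get)
        records[hotspot] += true_daily_incidents[hotspot]
    return records


# Both districts have the same true rate, but A starts one record ahead.
result = simulate_patrols({"A": 11, "B": 10}, {"A": 5, "B": 5}, days=30)
print(result)  # {'A': 161, 'B': 10}
```

District B’s records never change, so a model retrained on this data would conclude that A is far more dangerous, even though the true rates are equal.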

8. The Future of Ethical AI: Trends & Innovations

As AI continues to evolve, new technologies and advancements introduce fresh ethical challenges and opportunities. The future of ethical AI is shaped by innovations in generative AI, quantum computing, blockchain, and the evolving relationship between humans and AI systems.

Below, we explore the emerging trends and innovations that will shape the ethical landscape of AI in the years to come.

8.1. Ethical AI in the Age of Generative AI & LLMs

Generative AI and Large Language Models (LLMs) represent some of the most powerful tools in the AI landscape today. These models have the ability to generate highly realistic content, including text, images, and even deepfake videos, raising significant ethical concerns. The development and use of these technologies introduce a variety of potential risks, including the misuse of AI for misinformation and the spread of harmful content. The ability of these models to generate deepfakes—realistic images or videos of individuals doing or saying things they never did—poses a serious threat to public trust, privacy, and democracy.

The ethical implications of generative AI and LLMs are multifaceted. One major concern is authenticity—as these systems can create content indistinguishable from real-world data, they challenge the very notion of what constitutes truth in the digital age. To ensure responsible use, ethical AI development must focus on transparency, such as disclosing when content has been generated by AI, and developing safeguards to prevent the malicious use of these models. Additionally, there must be mechanisms to ensure accountability for harmful or illegal content generated by AI, particularly when such content can be easily disseminated at scale across platforms.
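
Disclosure is more robust when the "AI-generated" label is bound to the exact content it describes. The sketch below, assuming a secret signing key held by the generating service, attaches a label containing a SHA-256 hash of the content plus an HMAC signature, so editing either the content or the label is detectable. It is a simplified illustration: real provenance schemes such as the C2PA Content Credentials standard use public-key signatures so that anyone can verify a label, and no labeling scheme stops someone from stripping the label off entirely. The generator name is hypothetical.

```python
import hashlib
import hmac
import json


def _sign(record: dict, key: bytes) -> str:
    """HMAC-SHA256 over a canonical (sorted-key) JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def make_disclosure(content: bytes, generator: str, key: bytes) -> dict:
    """Build a signed 'AI-generated' label tied to the exact content bytes."""
    record = {
        "ai_generated": True,
        "generator": generator,  # hypothetical model identifier
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    record["signature"] = _sign(record, key)
    return record


def verify_disclosure(content: bytes, record: dict, key: bytes) -> bool:
    """True only if the label is unmodified and matches this content."""
    unsigned = {k: v for k, v in record.items() if k != "signature"}
    signature_ok = hmac.compare_digest(_sign(unsigned, key), record.get("signature", ""))
    content_ok = unsigned.get("content_sha256") == hashlib.sha256(content).hexdigest()
    return signature_ok and content_ok


key = b"demo-signing-key"  # placeholder secret
text = "A short AI-written product description.".encode("utf-8")
label = make_disclosure(text, "example-model-v1", key)
print(verify_disclosure(text, label, key))                 # True
print(verify_disclosure(text + b" (edited)", label, key))  # False
```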

Another significant concern with generative AI is its potential to perpetuate biases. AI models, including those used for generating text and images, can often inherit and amplify biases present in the data they are trained on. If not carefully monitored, these biases could contribute to social injustice or discrimination. Ethical frameworks will need to focus on ensuring fairness in generative AI by incorporating diverse data sets, applying bias detection tools, and establishing clear guidelines for responsible content creation.

8.2. Quantum AI & New Ethical Challenges

Quantum computing has the potential to transform several fields, possibly including artificial intelligence, because quantum computers can solve certain classes of problems far faster than classical computers. However, the arrival of quantum AI introduces new ethical challenges that must be carefully considered. These speedups apply to specific problems rather than to computing in general, but some of them could have profound implications for privacy, data security, and surveillance.

One of the most pressing ethical concerns related to quantum AI is its potential to break existing cryptographic protocols. Much of today’s digital security relies on public-key algorithms such as RSA and elliptic-curve cryptography, which a sufficiently large, fault-tolerant quantum computer running Shor’s algorithm could break. This raises significant issues around the confidentiality and integrity of personal and corporate data. As quantum computing develops, the ethical responsibility of businesses and governments to protect personal privacy will become even more critical, and quantum-safe cryptographic solutions, such as the post-quantum algorithms NIST standardized in 2024, must be explored and implemented.
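
To see why factoring matters, consider textbook RSA: the private key can be computed directly from the two prime factors of the public modulus n. The toy example below uses deliberately tiny primes and recovers the private key by classical trial division. For real key sizes (2048 bits and up), trial division and the best known classical algorithms are infeasible; the concern is that Shor’s algorithm on a large, fault-tolerant quantum computer would make this factoring step efficient. This is unpadded, insecure textbook RSA used only for illustration.

```python
def toy_rsa_keys(p, q, e=65537):
    """Textbook RSA key generation (insecure; illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse of e mod phi (Python 3.8+)
    return (n, e), d


def factor(n):
    """Classical trial division: fine for tiny n, hopeless for real RSA moduli."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n has no nontrivial factors")


def recover_private_key(n, e):
    """Anyone who can factor n can recompute the private exponent d."""
    p, q = factor(n)
    return pow(e, -1, (p - 1) * (q - 1))


(n, e), d = toy_rsa_keys(61, 53)
ciphertext = pow(65, e, n)  # encrypt the message 65 with the public key
d_attacker = recover_private_key(n, e)
print(d_attacker == d, pow(ciphertext, d_attacker, n))  # True 65
```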

In addition to concerns about data security, quantum computing’s ability to analyze large-scale datasets could lead to the erosion of personal privacy. The power of quantum AI to sift through enormous amounts of information could enable the extraction of sensitive data without individuals’ knowledge or consent. As quantum technologies evolve, ethical guidelines will need to be established to restrict invasive surveillance and protect individual rights in the age of quantum computing.

8.3. The Role of Blockchain in Ethical AI Transparency

Blockchain technology, which is often associated with cryptocurrencies like Bitcoin, could help enhance the transparency and accountability of AI systems. By leveraging decentralized ledgers and append-only records, blockchain can provide an auditable trail of decisions made by AI systems. This can be especially valuable in sectors like finance, healthcare, and government, where transparency is essential to building trust in AI-driven decisions.

Blockchain can be used to record the decision-making process of AI systems in a tamper-evident manner, so that any later alteration of a recorded step can be detected. This enables greater accountability, as stakeholders can trace a particular AI decision back to the data and model version that produced it. For example, in healthcare, if an AI system recommends a specific treatment plan, a blockchain-enabled record could let doctors and patients verify which model, inputs, and explanation were logged at the time, and check that the recommendation aligns with medical ethics and best practices. The ledger preserves the record; explaining the AI’s reasoning still depends on the model’s own explainability tools.
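
The core data structure behind this kind of tamper-evident trail is a hash chain, in which each record stores the hash of the one before it. The sketch below shows a single-node hash chain only; a real blockchain adds replication across parties and a consensus protocol, which are out of scope here. The record fields and model name are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_decision(log: list, decision: dict) -> None:
    """Append an AI decision record chained to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"decision": dict(decision), "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify_log(log: list) -> bool:
    """Recompute every hash; editing, removing, or reordering a non-final entry breaks the chain.

    Truncating the newest entries is only detectable if the latest hash is also
    published or stored somewhere else.
    """
    prev_hash = GENESIS
    for entry in log:
        body = {"decision": entry["decision"], "prev_hash": entry["prev_hash"]}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_decision(audit_log, {"model": "triage-v1", "case": "c-001", "output": "refer"})
append_decision(audit_log, {"model": "triage-v1", "case": "c-002", "output": "discharge"})
print(verify_log(audit_log))  # True

audit_log[0]["decision"]["output"] = "discharge"  # tamper with an earlier record
print(verify_log(audit_log))  # False
```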

Blockchain may also support efforts to reduce bias. By making AI systems’ decision records transparent and accessible to stakeholders, it can help auditors identify and correct instances where algorithms inadvertently favor one group over another. Blockchain can also store verifiable fingerprints of the datasets used to train AI systems, making it harder to alter or manipulate training data undetected, although it cannot by itself guarantee that the data were unbiased to begin with.

As blockchain technology becomes more widespread, it can serve as a tool to enforce ethical accountability in AI, ensuring that organizations adhere to ethical standards and remain transparent in their AI operations.

8.4. AI & Human Collaboration: The Future of AI Governance

Looking ahead, the ethical development of AI is not just about creating autonomous machines; it is about fostering collaboration between AI systems and human beings. As AI continues to evolve, it is becoming increasingly clear that AI should be used to augment human capabilities rather than replace them. This shift from automation to collaboration presents new challenges and opportunities in AI governance.

The future of ethical AI will involve ensuring that AI systems are designed to work alongside humans in a way that empowers individuals and enhances human decision-making. Rather than being an autonomous force that operates in isolation, AI should be viewed as a tool that supports human judgment and expertise. The role of AI governance frameworks will be to ensure that humans retain ultimate control over AI systems and that AI is used as a means of empowerment rather than a tool for displacement.

AI governance frameworks will need to focus on the ethical principles of human oversight, inclusivity, and collaborative decision-making. For example, in healthcare, AI systems might assist doctors in diagnosing diseases, but human doctors should always retain the final say in treatment decisions. This human-AI collaboration should be designed to maximize efficiency and outcomes, while ensuring that human dignity, autonomy, and accountability are preserved.
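
One way to encode "human doctors retain the final say" in software is to treat the AI’s output as a suggestion that always reaches a clinician, with low-confidence cases flagged for full review. The sketch below is hypothetical throughout: the class and field names, the assumption that model confidences are calibrated probabilities, and the 0.9 review threshold are illustrative choices, not clinical guidance.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    case_id: str
    label: str
    confidence: float  # assumed to be a calibrated probability in [0, 1]


@dataclass
class Decision:
    case_id: str
    final_label: str
    decided_by: str               # always a human clinician in this design
    ai_suggestion: Optional[str]  # kept so disagreements can be audited


def route(s: Suggestion, review_threshold: float = 0.9) -> str:
    """Every case reaches a clinician; confidence only sets the level of scrutiny."""
    if s.confidence < review_threshold:
        return "full_review"         # clinician assesses the case in depth
    return "confirm_or_override"     # suggestion shown, clinician signs off


def finalize(s: Suggestion, clinician_id: str, clinician_label: str) -> Decision:
    """The clinician's label is final, whether or not it matches the model's."""
    return Decision(s.case_id, clinician_label, clinician_id, s.label)


case = Suggestion("case-17", "pneumonia", confidence=0.62)
print(route(case))  # full_review
decision = finalize(case, "dr_lee", "bronchitis")
print(decision.final_label, decision.ai_suggestion)  # bronchitis pneumonia
```

Storing the AI suggestion next to the human decision also means that systematic disagreement between clinicians and the model can surface during audits.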

In this context, AI governance frameworks must prioritize the humanization of AI, ensuring that AI systems remain tools for human benefit and that they are aligned with human values.

9. Conclusion & Actionable Takeaways for Ethical AI Development

As AI technologies evolve and new innovations emerge, the landscape of ethical AI will continue to shift. To navigate this complex and rapidly changing terrain, organizations, policymakers, and developers must remain vigilant and proactive in addressing the ethical challenges that arise with these technologies.

Below, we outline actionable takeaways for ensuring that AI development continues to prioritize ethical considerations.

9.1. Key Lessons from AI Ethics Failures & Successes

AI ethics failures and successes provide critical insights into how AI systems should be designed and deployed. Key lessons learned include the importance of transparency and accountability. AI systems must be fair, unbiased, and explainable, with built-in safeguards to prevent misuse.

The failures of past AI systems, such as biased facial recognition algorithms or discriminatory hiring practices, underscore the need for ongoing monitoring and human oversight. At the same time, successes in areas like AI-powered healthcare diagnostics demonstrate the enormous potential of AI when it is developed responsibly.

9.2. How Businesses Can Future-Proof Their AI Ethics Policies

Businesses can future-proof their AI ethics policies by staying abreast of emerging regulations, investing in continuous AI auditing, and fostering an organizational culture that prioritizes ethical AI development. Regular updates to AI ethics policies and practices are essential in ensuring that businesses remain compliant with new regulations and are prepared for future advancements in AI. Developing cross-disciplinary teams that include ethicists, technologists, and policymakers will be crucial to staying ahead of potential ethical challenges.

9.3. Next Steps for Developers, Policymakers & Businesses

To build a future where AI serves humanity’s best interests, developers, policymakers, and businesses must work together. Collaborative efforts should focus on developing comprehensive AI ethics frameworks, sharing best practices, and ensuring that AI systems are built with fairness, transparency, and accountability at the core. This includes addressing issues such as bias, privacy, and data security while promoting human-centric AI that enhances human decision-making. The future of ethical AI depends on a collective commitment to responsible development and governance, with a focus on the well-being of individuals and society as a whole.

In summary, while the future of AI holds immense promise, the ethical challenges it presents are complex and require the collaborative efforts of all stakeholders—businesses, governments, and civil society. Only through continued attention to ethical principles and proactive governance can we ensure that AI technologies are developed and deployed in ways that benefit humanity and uphold our collective values.

—

References:

- UNESCO Recommendation on the Ethics of Artificial Intelligence

- OECD Principles on Artificial Intelligence

- AI Now Institute – Bias in AI

- World Economic Forum – Responsible AI

- European Commission – General Data Protection Regulation (GDPR)

- Future of Life Institute – AI Alignment

- MIT Technology Review – The Carbon Footprint of AI

- IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

- Brookings Institution – Deepfakes and Disinformation

- World Health Organization – Ethics & Governance of AI for Health