Introduction

Artificial Intelligence (AI) has revolutionized industries, from healthcare and finance to retail and education. Its ability to analyze vast amounts of data, predict trends, and automate complex processes has positioned AI as a transformative force.

However, alongside this rapid innovation, the issue of data privacy has emerged as a central concern in the digital era. As organizations increasingly rely on AI systems, safeguarding personal information becomes both a challenge and a responsibility.

Understanding the Intersection of AI and Data Privacy

In the rapidly evolving digital landscape, the convergence of Artificial Intelligence (AI) and data privacy has become a focal point for both innovation and concern. As AI systems become increasingly integrated into our daily lives, understanding their implications for data privacy is paramount.

Defining AI and data privacy

AI refers to the development of systems capable of performing tasks that typically require human intelligence, such as decision-making and pattern recognition. Data privacy, on the other hand, focuses on protecting personal information from unauthorized access and misuse.

How AI systems collect, process, and utilize personal data

AI systems often rely on large datasets to function effectively. For example, recommendation algorithms in e-commerce platforms analyze browsing and purchasing histories to deliver personalized shopping experiences. However, this reliance on personal data raises concerns about transparency, consent, and data misuse.

The symbiotic relationship between AI advancements and data protection

AI can be both a threat and a solution for data privacy. On one hand, it poses risks by potentially exposing sensitive information to breaches or misuse. On the other hand, AI-driven tools can strengthen privacy protection through automated threat detection, anomaly analysis, and secure data processing techniques.
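
As a minimal sketch of the "anomaly analysis" idea, the snippet below flags accounts whose daily record-access count is far above their peers’, a common warning sign of data exfiltration. It uses a simple statistical rule (the modified z-score, based on the median and median absolute deviation) rather than a trained model, and the account names, counts, and 3.5 threshold are hypothetical.

```python
import statistics


def flag_unusual_access(daily_counts: dict[str, int], threshold: float = 3.5) -> list[str]:
    """Return accounts whose record-access count is an unusually high outlier.

    Uses the modified z-score (median and MAD), a simple statistical stand-in
    for the ML-based anomaly detectors used in production security tools.
    """
    values = list(daily_counts.values())
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread at all, so nothing stands out
    # One-sided test: only unusually HIGH access volumes are suspicious here.
    return sorted(
        user for user, count in daily_counts.items()
        if 0.6745 * (count - med) / mad > threshold
    )


# Hypothetical daily counts of customer records opened per employee account.
access_log = {"alice": 40, "bob": 35, "carol": 42, "dave": 38, "eve": 900, "frank": 41}
print(flag_unusual_access(access_log))  # ['eve']
```

Median-based statistics are used instead of the mean and standard deviation because a single extreme account would otherwise inflate the baseline and partly mask itself.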

The integration of AI into various domains underscores the need for robust data privacy frameworks. Regulations such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the United States highlight the global push for privacy-conscious innovation. Companies must adopt responsible AI practices, ensuring compliance with these laws while maintaining user trust.

As AI continues to permeate various aspects of society, addressing data privacy concerns remains imperative. By implementing privacy-preserving technologies and adhering to regulatory standards, it is possible to harness the benefits of AI while safeguarding individual privacy.

AI’s Impact on Data Privacy

The integration of Artificial Intelligence (AI) into our daily lives brings numerous benefits while also raising concerns about data privacy. Understanding this dual impact is crucial for fostering a secure and ethical digital environment.

Benefits of AI in data analysis and decision-making

AI systems enhance data analysis and decision-making processes, enabling organizations to identify trends, predict behaviors, and automate complex workflows. For example, AI-powered healthcare systems can analyze patient data to predict diseases early, improving outcomes and saving lives. Similarly, in the financial sector, AI models help detect fraudulent transactions by flagging activity that deviates from a customer’s usual patterns.

Potential risks and challenges AI poses to personal data security

Despite its advantages, AI introduces significant risks to data privacy. AI models often require vast amounts of personal data for training, and every large store of such data is an attractive target for breaches and misuse. Incidents like the Cambridge Analytica scandal, in which data harvested from millions of Facebook profiles was used for political targeting, illustrate how personal data can be exploited for political or financial gain (Clayton, 2021).

Case studies illustrating both positive and negative impacts

The application of AI in various domains has led to both commendable advancements and notable challenges concerning data privacy.

Positive Impact: AI-Powered Floor Plan Design

A notable example is the development of an AI-driven platform that enables users to create detailed floor plans and 3D home designs efficiently. This technology streamlines the design process, making it more accessible to professionals and homeowners alike. By leveraging AI, the platform adapts to various environmental factors and user inputs, ensuring precision and user-friendliness (SmartDev, 2024).

Negative Impact: Clearview AI’s Data Privacy Controversy

Conversely, the case of Clearview AI highlights significant data privacy concerns. Clearview AI developed a facial recognition system by scraping billions of images from the internet without individuals’ consent. This practice led to legal challenges and fines in multiple countries, including a €30.5 million penalty imposed by the Dutch Data Protection Authority for violating privacy laws (Autoriteit Persoonsgegevens, 2024).

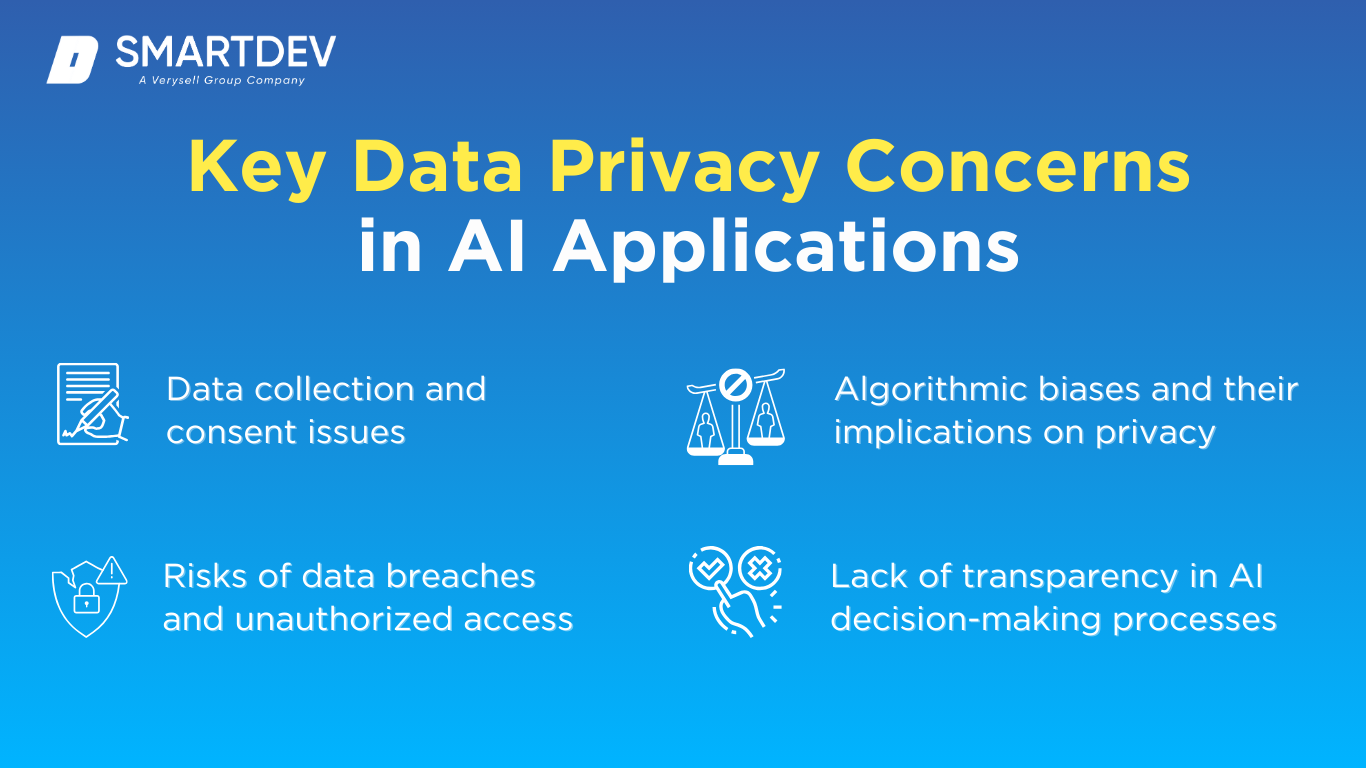

Key Data Privacy Concerns in AI Applications

As AI systems grow more prevalent in various industries, several critical concerns related to data privacy have emerged. These issues highlight the need for ethical AI development and transparent practices to protect individuals’ rights and data security.

Data collection and consent issues

AI systems rely heavily on data to function effectively. However, the collection of such data often raises concerns about consent and transparency. Many AI-powered applications gather personal information without providing users with adequate information about how their data will be used.

For example, apps such as TikTok have drawn global criticism over concerns that they collect user data without sufficient disclosure and that this data could be shared with third-party organizations without users’ consent (Wakefield, 2021).

Risks of data breaches and unauthorized access

The centralization of personal data in AI systems presents a significant risk of data breaches and unauthorized access. Hackers targeting AI databases can gain access to sensitive information, leading to identity theft, financial fraud, and reputational damage for the affected organizations.

The 2017 Equifax breach, for instance, exposed the personal data of 147 million Americans, showing how much damage inadequate data protection can cause. It led to increased scrutiny and a settlement of up to $700 million with the Federal Trade Commission, the Consumer Financial Protection Bureau, and U.S. states (Federal Trade Commission, 2024).

Algorithmic biases and their implications on privacy

Algorithmic biases occur when AI models inadvertently learn and replicate biases present in their training data. This can result in discriminatory outcomes that disproportionately affect certain groups.

For instance, Reuters reported that Amazon scrapped an experimental AI recruiting tool after finding it was biased against women: it had been trained on résumés submitted to the company over a ten-year period, most of which came from men (Dastin, 2018). Such biases not only violate ethical standards but also risk infringing on individuals’ rights to privacy and equality.
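
One common way to audit a screening model for this kind of bias is to compare selection rates across groups. The sketch below computes an adverse impact ratio and checks it against the four-fifths (80%) rule of thumb used in U.S. employment guidance. The applicant data is hypothetical, and a low ratio is a signal to investigate, not proof of discrimination.

```python
from collections import Counter


def selection_rates(outcomes):
    """outcomes: iterable of (group, was_selected) pairs produced by a screening model."""
    applied, selected = Counter(), Counter()
    for group, was_selected in outcomes:
        applied[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / applied[group] for group in applied}


def adverse_impact_ratio(outcomes, group, reference_group):
    """Selection rate of `group` divided by that of `reference_group`."""
    rates = selection_rates(outcomes)
    return rates[group] / rates[reference_group]


# Hypothetical results: 40 of 100 men and 20 of 100 women advanced to interview.
outcomes = [("men", i < 40) for i in range(100)] + [("women", i < 20) for i in range(100)]
ratio = adverse_impact_ratio(outcomes, "women", "men")
print(f"adverse impact ratio: {ratio:.2f}")  # adverse impact ratio: 0.50
print("below four-fifths threshold" if ratio < 0.8 else "within threshold")
```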

Lack of transparency in AI decision-making processes

AI systems often function as “black boxes”, making decisions without clear explanations of how those decisions were reached. This lack of transparency poses significant challenges in areas like finance, healthcare, and law enforcement.

For example, AI-driven credit scoring and lending systems can reject loan applications without giving applicants comprehensible reasons. This has led to calls for explainable AI (XAI) initiatives, which aim to make AI decision-making processes more transparent.
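
For simple models, explanations can be exact. The sketch below assumes a hypothetical linear scoring model and returns "reason codes": the features that lowered an applicant’s score the most relative to a baseline applicant. The weights, baseline values, and feature names are invented for illustration; non-linear models would need approximation methods such as SHAP or LIME instead.

```python
# Hypothetical linear credit model; weights and baseline values are illustrative only.
WEIGHTS = {
    "payment_history": 2.0,     # share of on-time payments (0 to 1)
    "utilization": -3.0,        # share of available credit in use (0 to 1)
    "account_age_years": 0.5,
    "recent_inquiries": -1.0,
}
BASELINE = {"payment_history": 0.95, "utilization": 0.30, "account_age_years": 8.0, "recent_inquiries": 1.0}


def reason_codes(applicant: dict[str, float], top_k: int = 2) -> list[str]:
    """Return the features that pulled this applicant's score down the most."""
    # For a linear model, weight * (value - baseline) is each feature's exact
    # contribution to the score difference from the baseline applicant.
    contributions = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    negatives = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [f for _, f in negatives[:top_k]]


applicant = {"payment_history": 0.90, "utilization": 0.85, "account_age_years": 2.0, "recent_inquiries": 5}
print(reason_codes(applicant))  # ['recent_inquiries', 'account_age_years']
```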

Regulatory Landscape Governing AI and Data Privacy

Governments and organizations worldwide are increasingly recognizing the importance of regulating AI technologies to protect data privacy. A comprehensive regulatory framework is essential to ensure that AI development aligns with ethical and legal standards.

Overview of global data protection regulations (e.g., GDPR, CCPA)

The General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States are two landmark regulations that emphasize data privacy.

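- GDPR enforces stringent rules, such as the right to data portability, the right to erasure (the “right to be forgotten”), and mandatory notification of personal data breaches to the supervisory authority within 72 hours of becoming aware of them, where feasible (GDPR Portal).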

- Similarly, CCPA provides California residents with the right to know what personal data is collected, request its deletion, and opt out of its sale (California Department of Justice, 2024).
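
To make these rights concrete, here is a minimal sketch of how a service might route access, deletion, and opt-out-of-sale requests. The `UserStore` class and the request names are hypothetical. A real system would also verify the requester’s identity, propagate deletions to backups, logs, and training datasets, and track the statutory response deadlines.

```python
import json
from dataclasses import dataclass, field


@dataclass
class UserStore:
    """Hypothetical in-memory stand-in for a production user database."""
    records: dict = field(default_factory=dict)      # user_id -> personal data
    sale_opt_outs: set = field(default_factory=set)  # users who opted out of data sale

    def handle_request(self, user_id: str, kind: str) -> str:
        if kind == "access":
            # Right of access / data portability: export in a machine-readable format.
            return json.dumps(self.records.get(user_id, {}), sort_keys=True)
        if kind == "delete":
            # Right to erasure (GDPR) / right to delete (CCPA).
            self.records.pop(user_id, None)
            return "deleted"
        if kind == "opt_out_of_sale":
            # CCPA right to opt out of the sale of personal information.
            self.sale_opt_outs.add(user_id)
            return "opted out"
        raise ValueError(f"unsupported request type: {kind}")


store = UserStore(records={"u1": {"email": "ana@example.com", "plan": "pro"}})
print(store.handle_request("u1", "access"))  # {"email": "ana@example.com", "plan": "pro"}
store.handle_request("u1", "opt_out_of_sale")
store.handle_request("u1", "delete")
print(store.records)  # {}
```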

Emerging AI-specific legislation and guidelines

Beyond general data protection laws, governments are beginning to develop AI-specific regulations. For instance, the European Union’s Artificial Intelligence Act, which entered into force on 1 August 2024, classifies AI systems by risk level and imposes stricter obligations on high-risk applications such as biometric identification (European Commission, 2024).

In the U.S., the Algorithmic Accountability Act, a bill introduced in Congress, would require companies to assess the impacts of automated decision systems, including potential risks related to privacy and bias (Congress.gov, 2022).

Compliance requirements for organizations deploying AI technologies

Organizations implementing AI technologies must adhere to several compliance requirements:

- Conducting Data Protection Impact Assessments (DPIAs) to identify and mitigate risks.

- Appointing Data Protection Officers (DPOs), where required, to oversee ongoing compliance.

- Implementing privacy by design practices during AI system development.

These measures not only help organizations avoid penalties but also build trust with their stakeholders.

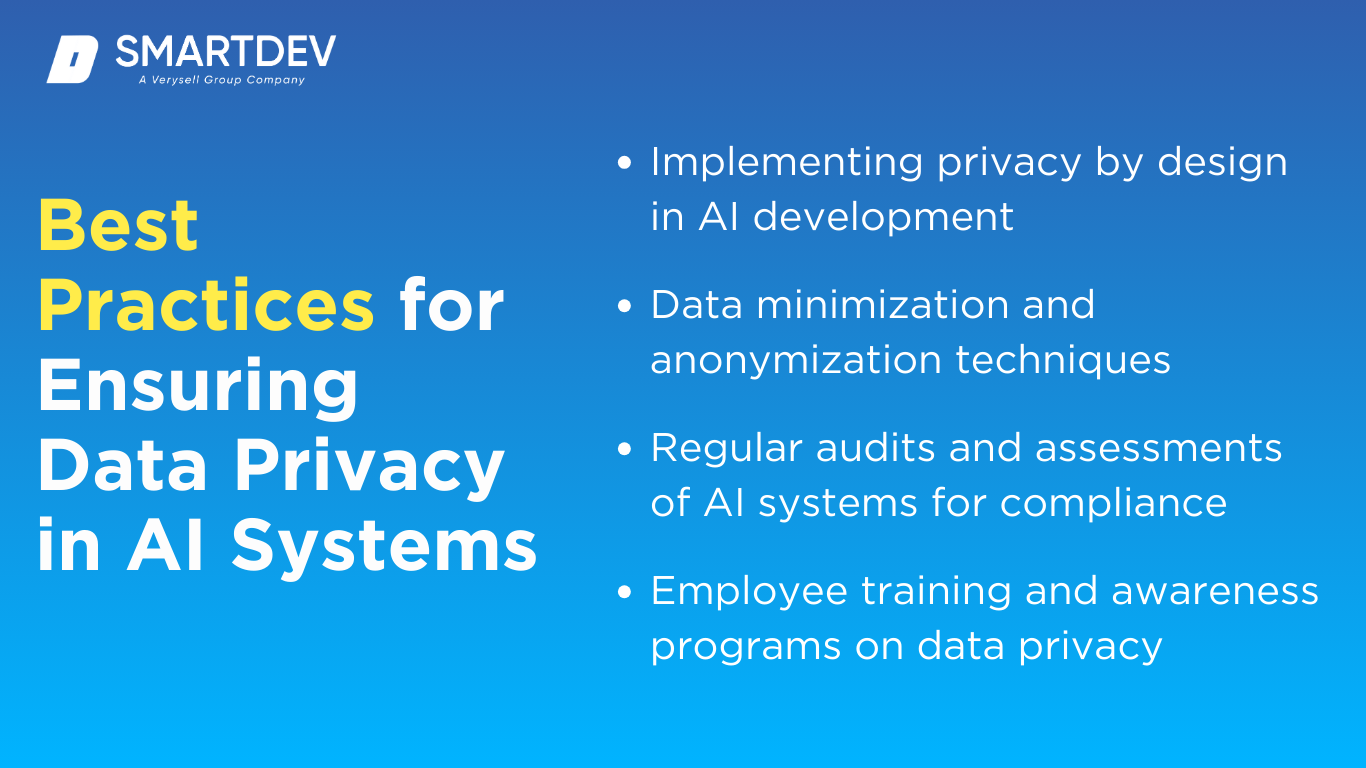

Best Practices for Ensuring Data Privacy in AI Systems

To protect sensitive information and maintain user trust, organizations deploying AI systems must adopt robust practices that integrate privacy protection into every stage of the AI lifecycle.

Data minimization and anonymization techniques

Data minimization ensures that only the data necessary for a specific purpose is collected and processed. By reducing the scope of data collection, organizations limit their exposure to breaches and misuse. Anonymization and pseudonymization techniques, such as removing personally identifiable information (PII) or replacing direct identifiers with pseudonyms, further reduce the risk that records can be traced back to individuals. Pseudonymized data can still be re-identified with additional information, however, so under the GDPR it remains personal data; only properly anonymized data falls outside its scope. A related approach is Google’s use of differential privacy in products like Google Maps, where aggregate statistics are computed in a way that protects individual users while still enabling functionality.
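
A minimal sketch of both ideas, assuming a hypothetical purchase record and a hypothetical purpose-bound schema: fields the model does not need are dropped, and the direct identifier is replaced with a keyed hash (HMAC-SHA256). Because anyone holding the key can re-link the pseudonyms, this is pseudonymization rather than anonymization, and the key must be stored separately from the data.

```python
import hashlib
import hmac

# Hypothetical purpose-bound schema: the only fields a purchase-recommendation model needs.
REQUIRED_FIELDS = {"customer_id", "purchase_category", "amount"}


def minimize_and_pseudonymize(record: dict, key: bytes) -> dict:
    """Drop fields outside REQUIRED_FIELDS and replace the customer ID with a keyed hash."""
    kept = {k: v for k, v in record.items() if k in REQUIRED_FIELDS}
    # HMAC-SHA256 with a secret key: the same customer always maps to the same
    # pseudonym, but the mapping cannot be recomputed without the key.
    token = hmac.new(key, str(kept["customer_id"]).encode(), hashlib.sha256).hexdigest()
    kept["customer_id"] = token[:16]
    return kept


raw = {"customer_id": 1042, "name": "Ana Silva", "email": "ana@example.com",
       "purchase_category": "books", "amount": 23.5}
secret_key = b"store-this-key-separately"  # hypothetical; keep it in a key vault, not with the data
print(minimize_and_pseudonymize(raw, secret_key))
```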

Regular audits and assessments of AI systems for compliance

Organizations should conduct regular audits of their AI systems to ensure compliance with relevant data privacy regulations. This includes evaluating data storage practices, ensuring transparency in data processing, and identifying potential vulnerabilities. For example, internal audits can help detect whether an AI system inadvertently collects more data than intended, violating data minimization principles.
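
Part of such an audit can be automated. The sketch below assumes the fields approved for a system (for example, during its DPIA) are recorded in an allowlist; the field names and sample records are hypothetical. It reports any extra fields found in a sample of collected records, which would indicate the system is gathering more than intended.

```python
from collections import Counter

# Hypothetical allowlist, e.g. the fields approved in the system's DPIA.
APPROVED_FIELDS = {"user_id", "age_band", "region"}


def audit_collected_fields(records: list[dict], approved: set[str]) -> dict[str, int]:
    """Count how often each unapproved field appears in a sample of collected records."""
    findings = Counter()
    for record in records:
        findings.update(record.keys() - approved)
    return dict(findings)


sample = [
    {"user_id": 1, "age_band": "30-39", "region": "EU"},
    {"user_id": 2, "age_band": "40-49", "region": "EU", "gps_trace": [52.37, 4.90]},
    {"user_id": 3, "region": "US", "gps_trace": [40.71, -74.01], "email": "x@example.com"},
]
print(audit_collected_fields(sample, APPROVED_FIELDS))  # {'gps_trace': 2, 'email': 1}
```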

Employee training and awareness programs on data privacy

Human error remains one of the most common causes of data breaches. Regular training and awareness programs ensure that employees understand the importance of data privacy and adhere to best practices. For instance, equipping teams with knowledge about phishing attacks, secure data handling, and AI ethics fosters a culture of accountability. Organizations can also implement mandatory certification programs for teams working with sensitive data.

Technological Solutions to Enhance AI Data Privacy

Emerging technological advancements offer powerful tools to safeguard data privacy in AI systems. By integrating these technologies, organizations can further enhance security and build user trust.

Adoption of advanced encryption methods

Encryption techniques like homomorphic encryption allow data to be processed without decrypting it, significantly enhancing data privacy. For instance, AI models can perform computations on encrypted datasets without ever exposing the underlying data. This is particularly beneficial in sectors like healthcare, where sensitive patient information must remain confidential. IBM and Microsoft have been exploring homomorphic encryption to ensure secure data processing in their AI projects.
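
The principle can be illustrated with the Paillier cryptosystem, which is only partially (additively) homomorphic: it supports adding encrypted numbers, whereas fully homomorphic libraries such as Microsoft SEAL support richer computation. The sketch below is a toy with tiny hard-coded primes, and the clinic counts are hypothetical; it shows an aggregator summing two encrypted values without ever decrypting either one.

```python
import math
import secrets


def keygen(p: int, q: int) -> tuple[int, int, int]:
    """Return (n, lam, mu) for the Paillier scheme with generator g = n + 1."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid shortcut because g = n + 1
    return n, lam, mu


def encrypt(n: int, m: int) -> int:
    """c = g^m * r^n mod n^2 with a fresh random r coprime to n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n)
        if r > 0 and math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n: int, lam: int, mu: int, c: int) -> int:
    """m = L(c^lam mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return (c1 * c2) % (n * n)


# INSECURE toy primes for illustration; real keys use primes of 1024 bits or more.
n, lam, mu = keygen(7919, 7907)
c_a = encrypt(n, 120)  # e.g. patient count from clinic A
c_b = encrypt(n, 95)   # e.g. patient count from clinic B
c_total = add_encrypted(n, c_a, c_b)  # computed without decrypting either input
print(decrypt(n, lam, mu, c_total))   # 215
```

Encryption is randomized, so encrypting the same value twice yields different ciphertexts, which prevents an observer from spotting repeated values.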

Utilization of federated learning to keep data decentralized

Federated learning enables AI models to train on decentralized data across multiple devices without transferring it to a central server. This approach reduces the risk of data breaches and supports privacy by design. Google has implemented federated learning in Gboard, its mobile keyboard app, to train predictive text models locally on users’ devices without centralizing sensitive data (Google Research, 2024).
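
The core idea can be sketched with federated averaging (FedAvg) on a toy linear model. This is a simplified illustration, not Google’s Gboard pipeline: each hypothetical client runs a few gradient-descent steps on its own data, and the server only ever sees the resulting parameters, which it averages weighted by each client’s sample count.

```python
# Each client's private data: (x, y) pairs that never leave the device.
Dataset = list[tuple[float, float]]


def local_update(weights: tuple[float, float], data: Dataset,
                 lr: float = 0.05, epochs: int = 20) -> tuple[float, float]:
    """Run full-batch gradient descent on one client's data for y = w*x + b."""
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x
            grad_b += 2 * err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return w, b


def federated_averaging(clients: list[Dataset], rounds: int = 50) -> tuple[float, float]:
    """Server loop: broadcast weights, collect local updates, average them."""
    global_w, global_b = 0.0, 0.0
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        # Only model parameters come back; raw (x, y) pairs stay on each client.
        updates = [(local_update((global_w, global_b), d), len(d)) for d in clients]
        global_w = sum(w * n for (w, _), n in updates) / total
        global_b = sum(b * n for (_, b), n in updates) / total
    return global_w, global_b


# Hypothetical devices whose local data all follow y = 3x + 1.
clients = [
    [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0)],
    [(3.0, 10.0), (4.0, 13.0)],
    [(0.5, 2.5), (1.5, 5.5), (2.5, 8.5)],
]
w, b = federated_averaging(clients)
print(f"w={w:.3f}, b={b:.3f}")  # w=3.000, b=1.000
```

Model updates can still leak information about the data they were trained on, which is why production systems typically combine federated learning with secure aggregation or differential privacy.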

Differential privacy techniques to protect individual data points

Differential privacy involves adding carefully calibrated statistical noise to data or query results, so that the output reveals very little about whether any single individual’s data was included. Apple, for example, applies it on users’ devices to learn aggregate usage patterns for improving its services without collecting identifiable individual data (Apple).
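
The Laplace mechanism is the textbook way to add such noise to a single counting query. The sketch below assumes a hypothetical list of ages and a trusted curator who adds noise to the answer (the central model); Apple’s deployment instead adds noise on each device before data is collected (the local model).

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism."""
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # adding or removing one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


ages = [23, 67, 45, 71, 34, 80, 52, 66, 29, 90]  # hypothetical data; the true count of 65+ is 5
rng = random.Random(0)
print(round(private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng), 1))
```

A smaller `epsilon` means more noise and stronger privacy. Every release spends privacy budget: answering the same query many times and averaging the results would wash the noise out, so real systems track the cumulative epsilon across queries.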

AI governance frameworks to monitor and control AI behaviors

AI governance frameworks establish guidelines for monitoring and controlling the behavior of AI systems. These frameworks ensure accountability and transparency by defining rules for data usage, bias mitigation, and decision-making processes. For example, Microsoft’s Responsible AI principles set out commitments for building AI systems responsibly, including fairness, privacy and security, and inclusiveness (Microsoft).

The Future of AI and Data Privacy

As AI technology continues to advance, its implications for data privacy will grow more significant. The future of AI and data privacy will be shaped by innovations, ethical considerations, and the need for regulatory alignment to balance technological growth with user protection.

Predictions on AI advancements and their potential impact on privacy

Emerging AI technologies like autonomous systems, generative AI, and enhanced natural language processing will require increasingly complex datasets for training and operation. While these advancements will offer tremendous benefits, they will also introduce new risks to privacy. For example:

- Autonomous vehicles: These collect massive amounts of real-time location and behavioral data, raising concerns about surveillance and unauthorized tracking.

- Generative AI models: Models such as ChatGPT or DALL-E may memorize and inadvertently reproduce sensitive training data if not carefully managed.

A report by McKinsey highlights that by 2030, AI-powered systems will handle over 70% of customer interactions, requiring organizations to innovate responsibly while protecting user privacy (McKinsey Digital, 2023).

The evolving role of data privacy in AI ethics

AI ethics will increasingly emphasize data privacy as a cornerstone. Concepts like transparency, accountability, and fairness are intertwined with privacy protection. The ethics of consent in AI data collection will likely evolve, requiring systems to adopt more dynamic, user-centric models where individuals have more control over their data.

Organizations will also need to address issues of algorithmic bias and discrimination, as the ethical handling of data directly impacts equitable AI development. Initiatives like the EU’s Artificial Intelligence Act aim to incorporate privacy into ethical AI practices (European Commission, 2024).

Strategies for balancing innovation with stringent data protection

To foster innovation while ensuring data protection, organizations must:

- Invest in Privacy-Enhancing Technologies (PETs): Techniques like federated learning, differential privacy, and secure multiparty computation will play a pivotal role.

- Develop Dynamic Regulatory Frameworks: Governments and organizations must create adaptive policies that evolve with technological advancements.

- Promote Collaborative Governance Models: Partnerships between private and public sectors can standardize ethical AI and privacy protocols globally.
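
Secure multiparty computation is the least familiar of these techniques. Its simplest building block, additive secret sharing, can be sketched as follows. The hospitals and counts are hypothetical, the parties are assumed to follow the protocol honestly (the semi-honest model), and the simulation runs all parties in one process.

```python
import secrets

MODULUS = 2**61 - 1  # public modulus; every share is an integer in [0, MODULUS)


def share(secret: int, n_parties: int) -> list[int]:
    """Split a secret into n additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_values: list[int]) -> int:
    """Simulate n parties computing the sum of their inputs without revealing them."""
    n = len(private_values)
    # Party i splits its value and sends share j to party j.
    all_shares = [share(v, n) for v in private_values]
    # Party j adds the shares it received; each partial sum on its own looks random.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Publishing the partial sums reveals only the total.
    return sum(partial_sums) % MODULUS


# Hypothetical: three hospitals compute their total case count without sharing their own counts.
print(secure_sum([120, 95, 310]))  # 525
```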

SmartDev’s Commitment to Data Privacy in AI

SmartDev has established itself as a leader in secure AI development, demonstrating its commitment to integrating data privacy into its solutions. The company’s approach focuses on building trust with clients while advancing responsible AI practices.

SmartDev’s approach to integrating data privacy in AI solutions

SmartDev adheres to the principles of privacy by design, ensuring that data privacy considerations are embedded in every stage of AI development. By leveraging advanced encryption methods, robust anonymization techniques, and secure cloud platforms, SmartDev ensures compliance with global regulations such as GDPR and CCPA.

Case studies showcasing SmartDev’s successful implementations

- AI-Driven Media Engagement Platform

SmartDev developed a media engagement platform for a journalism and PR marketplace that processes large amounts of user data. To ensure privacy, SmartDev implemented role-based access controls, ensuring only authorized personnel could access sensitive information. The solution significantly improved user trust and compliance.

- AI-Powered Floor Plan and 3D Design Tool

In another case, SmartDev built an AI platform for creating detailed floor plans and 3D house designs. The system anonymized user data and incorporated differential privacy techniques to safeguard sensitive information while delivering highly personalized results.

Future initiatives and goals in promoting secure AI development

SmartDev is committed to advancing secure AI development by addressing emerging data privacy challenges and ensuring ethical practices in its solutions. The company’s future initiatives include:

- Expanding Federated Learning

SmartDev plans to integrate federated learning into its solutions, enabling AI models to train on decentralized data without compromising user privacy. This approach will be particularly impactful in sensitive industries like healthcare and finance.

- Strengthening Explainable AI (XAI)

To foster transparency and trust, SmartDev aims to implement Explainable AI techniques, ensuring stakeholders can understand the rationale behind AI-driven decisions. This will include developing user-friendly dashboards to visualize decision-making processes.

- Advancing Differential Privacy

SmartDev will enhance its use of differential privacy techniques, which add statistical noise to datasets to protect individual user identities. This will be applied across customer-focused platforms to ensure privacy without sacrificing functionality.

- Collaborating on Regulatory Development

SmartDev will actively engage with policymakers and industry leaders to help shape global AI privacy standards, including contributions to frameworks like the EU Artificial Intelligence Act. These efforts will align innovation with compliance.

- Investing in Privacy-Enhancing R&D

The company plans to focus its R&D on cutting-edge encryption technologies, such as homomorphic encryption, to ensure secure data handling. SmartDev will also explore privacy-preserving methods tailored for high-risk domains like healthcare.

Through these initiatives, SmartDev demonstrates its dedication to ethical AI development while balancing innovation with stringent data protection.

Conclusion

The intersection of AI and data privacy is a critical area where innovation must align with ethical responsibility. By embedding data privacy into every stage of AI development, organizations can foster trust, comply with regulations, and build sustainable solutions. SmartDev’s proactive approach to secure and transparent AI demonstrates its commitment to protecting user privacy while delivering cutting-edge technologies.

As AI continues to advance, partnering with experts who prioritize data privacy is crucial. If you’re looking for tailored AI solutions that prioritize security and compliance, contact SmartDev today to explore how we can help you achieve your goals.

—-

REFERENCES

- Clayton, J. (2021, May 5). Trump launches new “communications” platform. BBC News. https://www.bbc.com/news/technology-56989500

- Dutch DPA imposes a fine on Clearview because of illegal data collection for facial recognition. (2024). Autoriteit Persoonsgegevens. https://www.autoriteitpersoonsgegevens.nl/en/current/dutch-dpa-imposes-a-fine-on-clearview-because-of-illegal-data-collection-for-facial-recognition

- Wakefield, J. (2021, May 25). AI emotion-detection software tested on Uyghurs. BBC News. https://www.bbc.com/news/technology-57101248

- Equifax Data breach settlement. (2024, November 4). Federal Trade Commission. https://www.ftc.gov/enforcement/refunds/equifax-data-breach-settlement

- Dastin, J. (2018, October 10). Insight – Amazon scraps secret AI recruiting tool that showed bias against women. Reuters. https://www.reuters.com/article/world/insight-amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK0AG/

- General Data Protection Regulation (GDPR) – legal text. (2024, April 22). General Data Protection Regulation (GDPR). https://gdpr-info.eu/

- California Consumer Privacy Act (CCPA). (2024, March 13). State of California – Department of Justice – Office of the Attorney General. https://oag.ca.gov/privacy/ccpa

- European Artificial Intelligence Act comes into force. (2024, August 1). European Commission. https://ec.europa.eu/commission/presscorner/detail/en/ip_24_4123

- Improving Gboard language models via private federated analytics. (n.d.). Google Research. https://research.google/blog/improving-gboard-language-models-via-private-federated-analytics/

- Apple. (n.d.). Privacy – Approach to privacy. Apple (India). https://www.apple.com/in/privacy/approach-to-privacy/index.html

- Responsible AI Principles and Approach | Microsoft AI. (n.d.). https://www.microsoft.com/en-us/ai/principles-and-approach

- Das, A. C., Phalin, G., Patidar, I. L., Gomes, M., & Thomas, R. (2023, March 27). The next frontier of customer engagement: AI-enabled customer service. McKinsey & Company. https://www.mckinsey.com/capabilities/operations/our-insights/the-next-frontier-of-customer-engagement-ai-enabled-customer-service