Introduction

Automation testing has long been critical for delivering high-quality software, yet legacy tools often struggle with flaky tests, rising maintenance costs, and coverage gaps. Today, Artificial Intelligence (AI) is emerging as a game-changer, helping teams optimize test suites, generate intelligent test cases, and improve QA efficiency. This guide explores real-world AI applications in automation testing, highlighting tangible benefits, adoption trends, and implementation challenges.

What is AI and Why Does It Matter in Automation Testing?

Definition of AI and Its Core Technologies

Artificial Intelligence (AI) refers to computer systems designed to perform tasks that typically require human intelligence. These tasks include learning from data, understanding language, and interpreting images, enabled by technologies such as machine learning, natural language processing (NLP), and computer vision. AI systems identify patterns, draw insights, and make decisions that streamline complex processes.

In automation testing, AI enhances every stage from test creation to maintenance. Machine learning helps identify high-risk areas in code, while NLP converts user stories into automated test cases. Computer vision allows AI to detect changes in the user interface, reducing test failures and accelerating release cycles.

The Growing Role of AI in Transforming Automation Testing

AI is advancing the field of software testing by streamlining manual processes and enabling more strategic quality assurance. Modern testing platforms now leverage AI to automatically generate test cases from system requirements or user interactions. This not only broadens test coverage but also allows QA teams to allocate their time toward more complex testing scenarios.

Self-healing capabilities have become a key feature, allowing test scripts to adjust automatically when user interface elements are modified. AI also enables predictive analytics that highlights unstable or low-value tests, guiding teams toward the most impactful areas for validation. These functions significantly reduce test maintenance and improve overall test suite reliability.

In addition, AI enhances the breadth and depth of automated testing. It supports the generation of realistic and varied test data, automates API testing routines, and conducts precise visual inspections of user interfaces. Together, these applications allow organizations to increase test accuracy, shorten release cycles, and maintain higher software quality standards.

Key Statistics and Trends Highlighting AI Adoption in Automation Testing

AI adoption in software testing is growing rapidly. According to Leapwork, the share of teams using AI in testing rose from 7% in 2023 to 16% in 2025. TestGuild further reports that over 72% of QA teams are actively exploring or implementing AI-driven testing practices.

Stanford’s 2025 AI Index reports that 78% of organizations used AI in at least one business function in 2024, up from 55% the year before. Yet Boston Consulting Group finds that only 26% of firms have realized measurable value, highlighting a gap between experimentation and effective deployment. Most organizations are still in early adoption phases despite increasing investment.

Market forecasts point to sustained momentum. According to Future Market Insights, the AI in test automation market is projected to grow from $0.6 billion in 2023 to $3.4 billion by 2033 at a 19% CAGR. Meanwhile, Capgemini’s World Quality Report 2024 reveals that 68% of enterprises now use AI in some form of quality engineering.
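As a quick sanity check on the forecast above, the growth rate can be recomputed from the start and end values. This is only an arithmetic sketch; the function name is illustrative and not taken from any report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.19 means 19%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $0.6B in 2023 to $3.4B in 2033, i.e. 10 years.
rate = cagr(0.6, 3.4, 10)
print(f"{rate:.1%}")  # lands close to the quoted 19%
```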

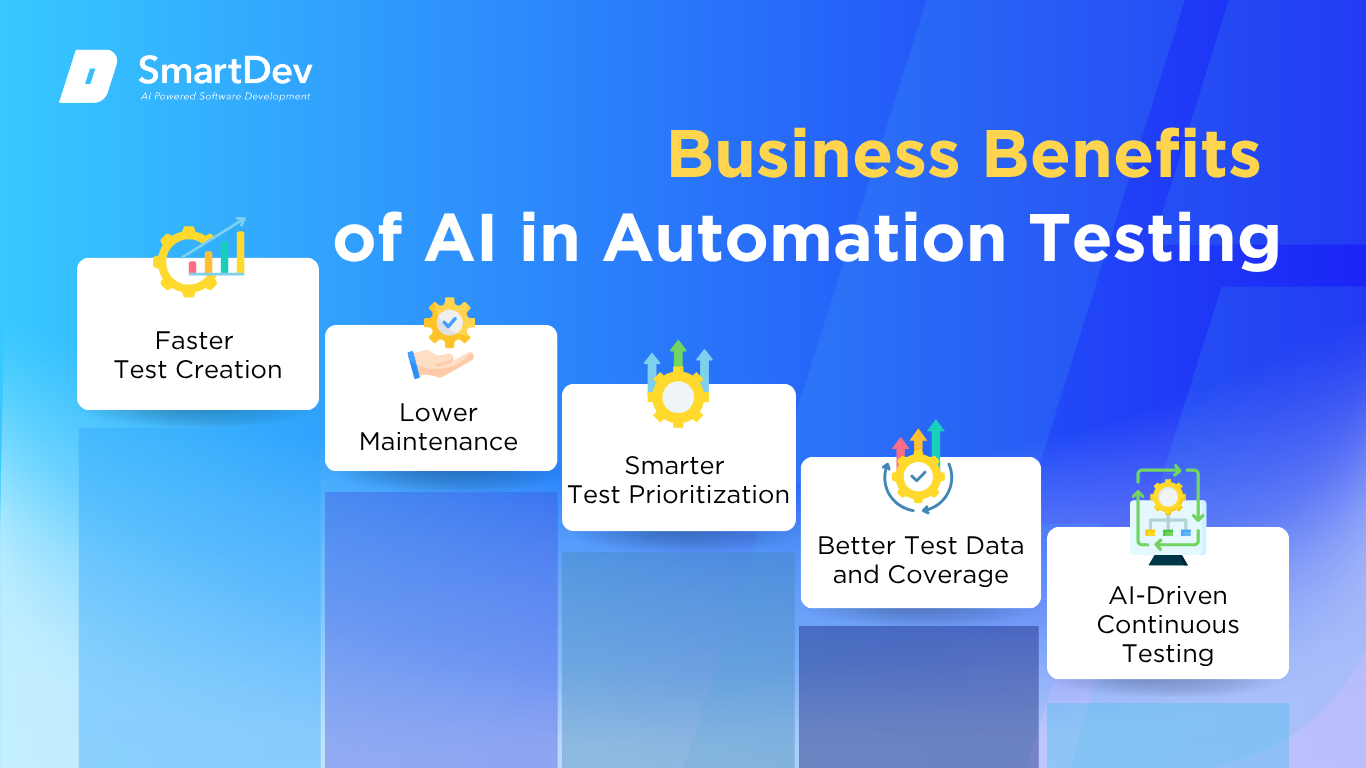

Business Benefits of AI in Automation Testing

AI is delivering measurable value across the testing lifecycle by addressing long-standing inefficiencies and gaps. From accelerating test creation to enabling smarter analytics, it empowers QA teams to achieve faster, more reliable software delivery.

1. Faster Test Creation

AI accelerates the creation of test cases by analyzing requirements or monitoring user interactions to auto-generate scripts. This dramatically reduces manual effort and expands test coverage across key workflows. As a result, QA teams can adapt quickly to changes and new feature releases.

In agile and DevOps environments, speed is critical. AI shortens the feedback loop, enabling testing to keep pace with rapid development cycles. Faster test creation ultimately helps maintain release velocity without compromising quality.

2. Lower Maintenance with Self-Healing

AI-powered platforms can automatically detect and update test scripts when UI elements change, avoiding unnecessary test failures. These self-healing capabilities keep test suites stable, even as the application evolves. Teams save time otherwise spent fixing broken scripts after every update.

This benefit is especially valuable in fast-moving projects with frequent deployments. Instead of scrambling to repair test cases, teams rely on AI to maintain continuity. The result is a more resilient testing process and fewer interruptions.

3. Smarter Test Prioritization

By analyzing historical test runs and defect patterns, AI identifies which tests are most likely to catch new issues. This helps teams focus on high-value cases and reduce time spent on redundant or low-impact tests. More targeted testing leads to earlier bug detection and faster resolution.

When full regression suites are impractical, prioritization becomes essential. AI ensures the most important tests run first, even under tight timelines. This leads to more efficient use of resources and improved release confidence.

4. Better Test Data and Coverage

AI generates realistic and diverse test data that mirrors real-world usage scenarios, including edge cases often missed manually. This enhances test coverage and improves the system’s ability to handle complex input conditions. It also supports thorough validation across both functional and compliance-related tests.

For industries with strict regulations, such as finance or healthcare, AI-generated data helps meet testing requirements without breaching data privacy. By covering a broader range of inputs, teams can catch more bugs before release. This results in higher software reliability and lower risk.
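To make the idea of synthetic, privacy-safe test data concrete, here is a minimal rule-based sketch. It is not a learned model: it combines classic boundary values with seeded random values to show the kind of output a data generator might produce. The function names and the amount range are assumptions made for illustration.

```python
import random


def boundary_values(minimum: int, maximum: int) -> list:
    """Boundary-value picks around an inclusive integer range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def synthetic_amounts(minimum: int, maximum: int, count: int, seed: int = 0) -> list:
    """Boundary cases first, then seeded random in-range values.

    No production records are read, so no real customer data is exposed.
    """
    rng = random.Random(seed)  # seeded so the data set is reproducible
    randoms = [rng.randint(minimum, maximum) for _ in range(count)]
    return boundary_values(minimum, maximum) + randoms


# Hypothetical rule: a transfer amount must be between 1 and 100 inclusive.
data = synthetic_amounts(1, 100, count=5)
print(data[:6])  # the six boundary cases, including two invalid ones
```

Keeping the invalid values (0 and 101 here) in the set is deliberate: they exercise the rejection path, which is where edge-case bugs tend to hide.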

Find out how to align innovation with compliance in our guide to AI and responsible data privacy.

5. AI-Driven Continuous Testing

Integrated with CI/CD pipelines, AI enables continuous testing by selecting and executing relevant tests automatically after each code change. This provides instant feedback to developers and minimizes the time between defect detection and resolution. Faster feedback loops improve code quality across the board.

Continuous testing ensures that quality checks are embedded into every stage of the development cycle. With AI, testing becomes proactive rather than reactive. This leads to shorter release cycles and more consistent product performance.

See how intelligent automation enhances agile workflows in our guide on transforming software delivery.

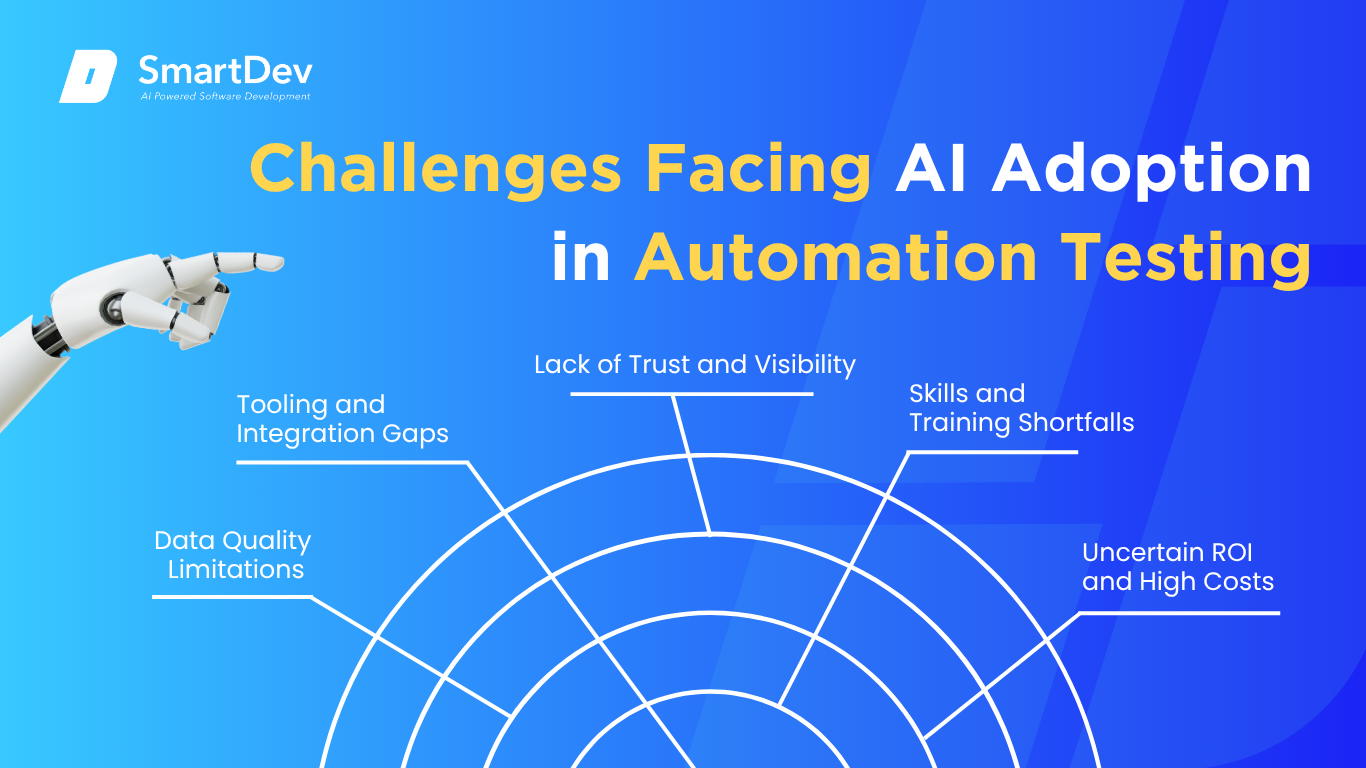

Challenges Facing AI Adoption in Automation Testing

Despite its potential, AI adoption in testing comes with real-world hurdles that can limit impact if not addressed early. Success depends on solving data, tooling, and skills challenges while building trust and demonstrating clear value.

1. Data Quality Limitations

AI in testing depends on clean, structured data to generate accurate insights and predictions. Many teams face fragmented test data, inconsistent logging, or missing historical records, which weakens AI performance. Poor-quality input leads to unreliable test automation and flawed prioritization.

Establishing a centralized, high-integrity dataset is critical for effective AI adoption. Without it, even advanced tools cannot function optimally. Strong data practices must be in place before automation can deliver meaningful results.

2. Tooling and Integration Gaps

Legacy or heavily customized test frameworks often lack support for AI capabilities. Integrating AI typically requires migrating to modern platforms or rebuilding pipelines, which can be resource-intensive. This slows implementation and creates resistance to change.

Smooth AI adoption depends on compatibility with existing infrastructure. Without flexible tools and API support, organizations risk delays and inefficiencies. Choosing platforms that integrate easily into current workflows is essential for success.

3. Lack of Trust and Visibility

AI tools may adjust or create tests autonomously, raising concerns about accuracy and oversight. Teams are often reluctant to rely on black-box systems, especially when quality assurance is on the line. Without explainable outcomes, trust in automation can erode.

Building transparency into AI behavior is essential. Human review and validation should remain part of the process during early adoption. Over time, consistent results will help establish confidence in the technology.

4. Skills and Training Shortfalls

AI tools introduce concepts like model training, data analysis, and predictive logic, which may be unfamiliar to QA professionals. Without the right expertise, teams struggle to use these tools effectively. This knowledge gap can stall adoption and limit value.

Upskilling is necessary to bridge the divide. Investing in training empowers teams to engage with AI confidently and productively. Long-term success depends on both the technology and the people using it.

5. Uncertain ROI and High Costs

Adopting AI requires upfront investment in tools, infrastructure, and onboarding. Early results can be difficult to quantify, making ROI unclear. Leaders may hesitate to scale without tangible, short-term benefits.

To address this, organizations should start with focused pilot projects and define clear performance metrics. Tracking outcomes like defect detection rates and test efficiency helps build the business case. A phased approach ensures sustainable value over time.

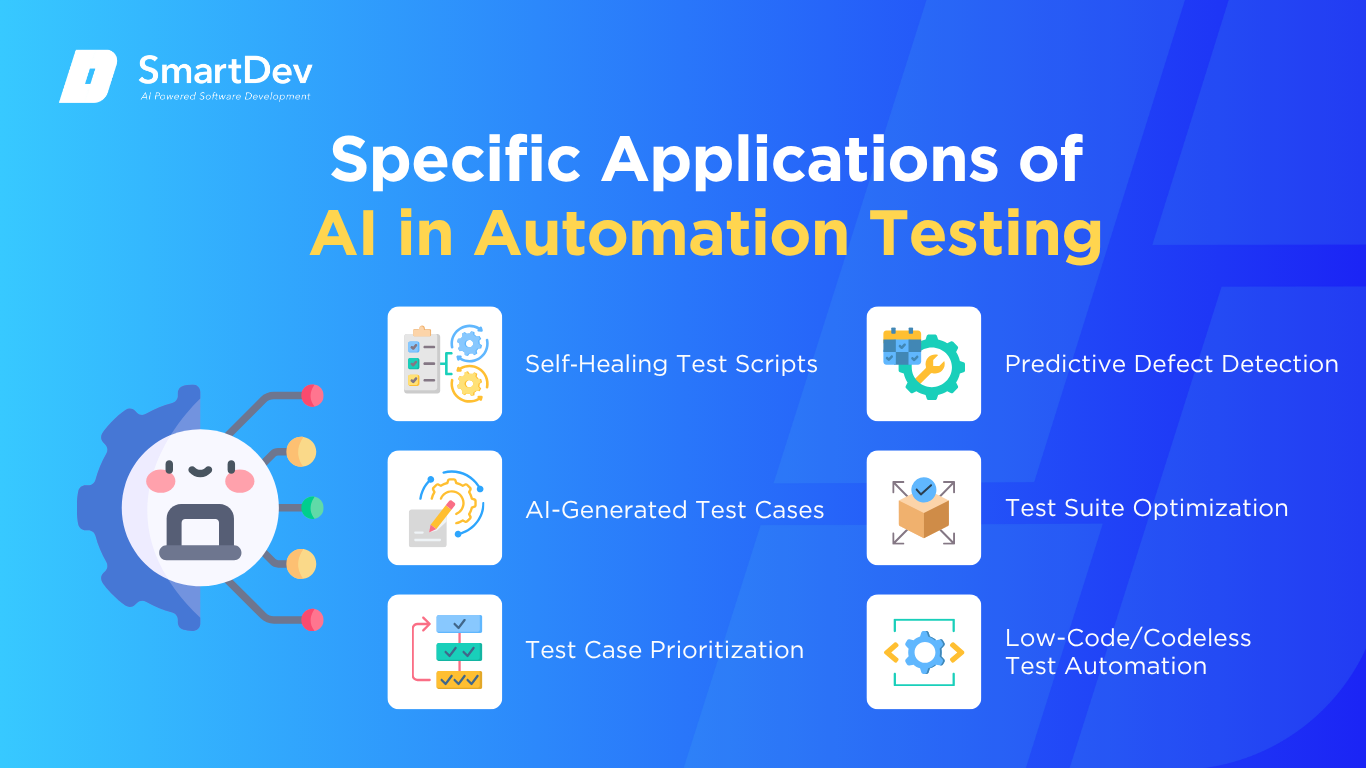

Specific Applications of AI in Automation Testing

1. Self-Healing Test Scripts

AI-powered self-healing test scripts are designed to automatically detect and repair broken locators in UI-based test automation. These scripts leverage machine learning algorithms and computer vision to recognize UI element changes without human intervention. As a result, test scripts continue to function even when underlying UI changes occur frequently.

Self-healing systems rely on historical test execution data, DOM snapshots, and element attributes to identify the most likely match for a changed component. The AI compares new interface elements against stored identifiers and updates the locator dynamically. This process helps avoid unnecessary test failures caused by minor UI modifications.

This capability is especially beneficial in agile and DevOps environments where UI changes are constant. It ensures test suite stability, accelerates release cycles, and reduces the manual overhead of maintenance. However, organizations must ensure validation layers exist to prevent masking real issues due to false auto-heals.
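The matching step described above can be sketched in a few lines. This is a simplified illustration, assuming elements are represented as plain attribute dictionaries and that similarity is the share of stored attributes that still match. Commercial tools use richer signals; the names `similarity`, `heal_locator`, and the 0.6 threshold are hypothetical.

```python
from typing import Optional

# Hypothetical snapshot of the element as it looked when the test last passed.
STORED = {"id": "submit-btn", "tag": "button", "text": "Submit", "class": "btn primary"}


def similarity(stored: dict, candidate: dict) -> float:
    """Fraction of stored attributes that the candidate still matches exactly."""
    if not stored:
        return 0.0
    matches = sum(1 for key, value in stored.items() if candidate.get(key) == value)
    return matches / len(stored)


def heal_locator(stored: dict, candidates: list, threshold: float = 0.6) -> Optional[dict]:
    """Return the best-matching candidate, or None if nothing is similar enough.

    Returning None instead of the best guess acts as the validation layer:
    a low-confidence match should fail the test rather than silently heal it.
    """
    best = max(candidates, key=lambda c: similarity(stored, c), default=None)
    if best is None or similarity(stored, best) < threshold:
        return None
    return best


# The id changed after a UI refactor, but tag, text, and class still match.
page = [
    {"id": "cancel-btn", "tag": "button", "text": "Cancel", "class": "btn"},
    {"id": "submit-button", "tag": "button", "text": "Submit", "class": "btn primary"},
]
healed = heal_locator(STORED, page)
print(healed["id"] if healed else "no confident match")
```

In practice, each heal would also be logged for human review so that a genuine regression is not hidden behind an automatic fix.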

Real-world example:

Functionize enabled Software Solutions Integrated (SSI) to maintain stable UI testing during a platform migration. Their self-healing engine dynamically updated broken locators, minimizing test failure rates. This resulted in significantly reduced maintenance time and improved test suite reliability.

Learn more about scalable test strategies in our guide to automated testing.

2. AI-Generated Test Cases

AI-generated test cases help streamline test design by automatically producing tests based on user requirements or application behavior. This is especially useful in scenarios with large volumes of feature updates or changing user stories. AI tools can also identify edge cases and untested scenarios that manual testers may overlook.

The generation process typically employs NLP to interpret user stories or business logic and LLMs to formulate structured test scenarios. Some tools also analyze application usage patterns and historical defect data to ensure comprehensive test coverage. This creates a more intelligent test suite that adapts to application evolution.

By automating test case creation, organizations can significantly reduce time spent on test design. It also empowers business users and non-technical stakeholders to contribute to testing. However, human review is still essential to ensure test cases align with business priorities.
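The input and output of this step can be illustrated with a deliberately simple stand-in. The sketch below uses a regular expression rather than NLP or an LLM, and it assumes stories follow the common "As a ..., I want to ... so that ..." template. It only shows the shape of a generated scenario that a human would then review.

```python
import re

# Assumed story template: "As a <role>, I want to <goal> so that <benefit>."
STORY_PATTERN = re.compile(
    r"^As an? (?P<role>.+?), I want to (?P<goal>.+?) so that (?P<benefit>.+?)\.?$",
    re.IGNORECASE,
)


def story_to_scenario(story: str) -> dict:
    """Turn a templated user story into a Given/When/Then skeleton."""
    match = STORY_PATTERN.match(story.strip())
    if match is None:
        raise ValueError("story does not follow the assumed template")
    return {
        "given": f"a user in the role of {match['role']}",
        "when": f"the user tries to {match['goal']}",
        "then": f"the outcome is that {match['benefit']}",
    }


scenario = story_to_scenario(
    "As a shopper, I want to save my cart so that I can check out later."
)
for step, text in scenario.items():
    print(f"{step.capitalize()} {text}")
```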

Real-world example:

Testsigma’s AI Copilot enabled Qualitrix to automate test generation using plain English inputs. This led to a 50% increase in test coverage and a 60% faster regression cycle. The company also cut maintenance time by 40%.

3. Test Case Prioritization

Test case prioritization with AI ensures the most impactful tests run first, maximizing the efficiency of CI/CD pipelines. AI systems analyze factors like recent code changes, historical failure rates, and risk areas to determine priority. This helps testers catch high-severity bugs early in the release cycle.

Reinforcement learning models and decision trees can continuously improve prioritization accuracy. These algorithms learn which test cases tend to fail under specific conditions and adjust their execution order accordingly. This minimizes redundant executions and optimizes resource allocation during builds.

The strategic value includes faster feedback loops, reduced test cycle times, and higher developer satisfaction. It ensures test execution aligns with business risk and product stability. Still, accurate prioritization depends on access to high-quality historical test data.
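A minimal heuristic version of this ranking is sketched below. It is not reinforcement learning: it simply adds a test's historical failure rate to a fixed bonus when the test covers a changed file. The `CaseHistory` record, the 0.5 weight, and the sample data are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class CaseHistory:
    name: str
    runs: int                 # how many times the test has executed
    failures: int             # how many of those runs failed
    covered_files: frozenset  # source files the test exercises


def priority(case: CaseHistory, changed_files: set, change_weight: float = 0.5) -> float:
    """Score = historical failure rate + bonus if the test touches changed code."""
    failure_rate = case.failures / case.runs if case.runs else 0.0
    touches_change = 1.0 if case.covered_files & changed_files else 0.0
    return failure_rate + change_weight * touches_change


def prioritize(cases: list, changed_files: set) -> list:
    """Highest score first; ties are broken by name for a stable order."""
    return sorted(cases, key=lambda c: (-priority(c, changed_files), c.name))


history = [
    CaseHistory("test_login", runs=100, failures=2, covered_files=frozenset({"auth.py"})),
    CaseHistory("test_checkout", runs=100, failures=20, covered_files=frozenset({"cart.py"})),
    CaseHistory("test_profile", runs=100, failures=1, covered_files=frozenset({"user.py"})),
]
order = [case.name for case in prioritize(history, changed_files={"auth.py"})]
print(order)
```

Here `test_login` runs first because it covers the changed file, even though `test_checkout` fails more often historically. A learned model would tune that trade-off from data instead of using a fixed weight.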

Real-world example:

RETECS, a reinforcement learning method described in the research literature, reorders test execution in a CI pipeline using each test case's duration, last execution, and failure history. In its published evaluation on industrial data sets, the method learned to schedule failure-prone tests earlier. This supports earlier bug detection and shorter CI feedback delays.

4. Predictive Defect Detection

Predictive defect detection uses machine learning to identify parts of an application that are most likely to fail. It draws on historical defect data, code complexity metrics, and developer activity to assess risk levels. These insights guide testers and developers to focus on high-risk areas before issues manifest.

Classification algorithms such as random forests or support vector machines are commonly used. These models correlate input features like code churn or cyclomatic complexity with past defect patterns to make forecasts. Some systems even integrate with version control to flag risky code commits in real time.

The primary benefit is proactive quality assurance that prevents bugs from reaching production. It also optimizes the allocation of test resources and enhances application reliability. Ethical use requires care to avoid over-reliance or biases in model training data.
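The feature-to-risk mapping can be sketched without external libraries. To stay self-contained, the example below swaps in a small logistic regression trained by gradient descent instead of the random forests or support vector machines mentioned above. The training data is invented, with code churn and cyclomatic complexity assumed to be pre-scaled to the range 0 to 1.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(features: list, labels: list, lr: float = 0.1, epochs: int = 2000) -> tuple:
    """Fit logistic-regression weights with plain batch gradient descent."""
    n_features = len(features[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        weights = [w - lr * g / len(features) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(features)
    return weights, bias


def defect_risk(model: tuple, x: list) -> float:
    """Predicted probability that a module with features x is defect-prone."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Invented history: [scaled code churn, scaled cyclomatic complexity] -> had a defect?
X = [[0.9, 0.8], [0.8, 0.9], [0.7, 0.6], [0.2, 0.1], [0.1, 0.3], [0.3, 0.2]]
y = [1, 1, 1, 0, 0, 0]

model = train(X, y)
risky = defect_risk(model, [0.85, 0.9])   # heavily changed, complex module
stable = defect_risk(model, [0.15, 0.1])  # rarely changed, simple module
print(f"risky={risky:.2f} stable={stable:.2f}")
```

With only six rows this is a toy, and it inherits whatever bias the historical labels contain, which is why the output should guide review effort rather than replace it.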

Real-world example:

Sauce Labs incorporated predictive analytics to forecast defect-prone modules in web applications. Their platform helped developers fix issues earlier in the lifecycle. Customers saw improved stability and reduced post-release defect rates.

See how predictive models are also used in fraud detection to prevent risks in real time in our detailed guide on AI-Driven Fraud Detection.

5. Test Suite Optimization

AI-enabled test suite optimization ensures testing efforts focus on valuable and effective test cases. The system evaluates tests based on frequency of execution, past outcomes, and code coverage relevance. Low-value, flaky, or redundant tests are flagged for removal or revision.

Clustering algorithms and unsupervised learning help identify overlapping test cases or excessive execution times. These methods detect patterns of inefficiency that might not be obvious through manual analysis. This leads to leaner, faster, and more reliable test suites.

Optimized suites reduce infrastructure usage and speed up feedback cycles. They support better CI/CD pipeline performance and lower the cost of test maintenance. However, oversight is necessary to avoid trimming tests that provide critical coverage.
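Two of the signals mentioned above, redundancy and flakiness, can be approximated with simple measures. The sketch below uses pairwise Jaccard similarity of coverage sets as a stand-in for clustering, and a pass/fail flip rate as a flakiness indicator. The thresholds and sample data are assumptions, and flagged tests are candidates for review, not automatic deletion.

```python
from itertools import combinations


def jaccard(a: set, b: set) -> float:
    """Overlap between two coverage sets: intersection size over union size."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def redundant_pairs(coverage: dict, threshold: float = 0.9) -> list:
    """Pairs of tests whose covered lines overlap at or above the threshold."""
    return [
        (first, second)
        for first, second in combinations(sorted(coverage), 2)
        if jaccard(coverage[first], coverage[second]) >= threshold
    ]


def flip_rate(outcomes: list) -> float:
    """Share of consecutive runs where the result changed between pass and fail."""
    if len(outcomes) < 2:
        return 0.0
    flips = sum(1 for prev, cur in zip(outcomes, outcomes[1:]) if prev != cur)
    return flips / (len(outcomes) - 1)


coverage = {
    "test_add_item": {"cart.py:10", "cart.py:11", "cart.py:12"},
    "test_add_item_copy": {"cart.py:10", "cart.py:11", "cart.py:12"},
    "test_remove_item": {"cart.py:30", "cart.py:31"},
}
print(redundant_pairs(coverage))

runs = [True, False, True, True, False, True]  # True means the run passed
print(flip_rate(runs))
```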

Real-world example:

Tricentis applied AI to audit client test repositories for redundancy and performance. The optimization process helped remove unnecessary scripts and reduced overall testing time. QA teams were able to redirect efforts to more strategic test development.

6. Low-Code/Codeless Test Automation

Low-code and codeless test automation democratizes testing by enabling users without coding skills to build automated scripts. These platforms use AI to convert user interactions into reusable, self-maintaining test scripts. They cater to business users, product managers, and manual testers alike.

AI components such as NLP, computer vision, and decision logic help interpret intent and generate valid tests. These systems also offer smart suggestions, auto-corrections, and reusable object libraries. As a result, test authoring becomes faster, more intuitive, and scalable across teams.

The benefit lies in broader test automation coverage and reduced reliance on specialized resources. It speeds up delivery cycles and supports continuous testing in agile environments. Oversight is still needed for complex workflows or highly dynamic applications.

Real-world example:

Qualitrix adopted Testsigma’s low-code test platform for mobile and web app testing. Testers quickly created functional scripts without programming knowledge. This led to faster onboarding, wider test coverage, and improved time-to-market.

Examples of AI in Automation Testing

AI-driven solutions in automation testing have shifted from experimental to mainstream use. The following case studies highlight how leading companies have successfully adopted AI in real-world QA workflows.

Real-World Case Studies

1. Functionize: Self-Healing Test Automation

Functionize’s AI-powered platform enabled SSI to manage their complex UI transitions during cloud migration. The self-healing capabilities reduced manual maintenance by identifying and updating failing locators in real time. This drastically improved test suite stability and allowed the QA team to focus on expanding coverage.

The transition to Functionize allowed SSI to scale testing without linear cost increases. Their test reliability increased significantly, supporting faster release cycles. This case illustrates the operational ROI of AI-powered test maintenance.

2. Testsigma: AI for Low-Code and Test Generation

Qualitrix implemented Testsigma to empower QA teams to automate without coding. The AI-powered low-code interface enabled functional testers to create, maintain, and execute comprehensive test suites efficiently. Features like natural language test creation and auto-healing reduced learning curves and delays.

With Testsigma, Qualitrix achieved 50% more test coverage, 60% faster regression cycles, and 40% lower test maintenance effort. Their agile QA teams became more productive and proactive. This showcases how AI democratizes testing and supports DevOps culture.

3. Meta: LLM-Based Test Optimization

Meta uses TestGen-LLM, an internal LLM-based tool that improves test coverage and quality in developer workflows. It analyzes existing unit tests and suggests improved versions using generative models. Developers accept suggestions into production, closing coverage gaps.

Meta reports that 75% of generated test cases built correctly and 57% passed reliably, and that the tool improved 11.5% of the classes it was applied to. Engineers accepted 73% of its recommendations for production deployment. This illustrates how LLMs are redefining unit test effectiveness and developer support.

Innovative AI Solutions

Advanced AI technologies are increasingly central to automation testing, introducing intelligent ways to build, maintain, and optimize test suites. Generative AI models, such as large language models, are now being used to automatically generate and refine test cases based on code and user stories. These innovations help teams detect coverage gaps, enhance test quality, and accelerate test creation without manual scripting.

AI-powered testing platforms are also leveraging natural language processing, self-healing logic, and visual recognition to streamline workflows. These features reduce the need for coding expertise, improve error detection, and lower the time required for test maintenance. As AI capabilities evolve, they are enabling a more adaptive, scalable, and collaborative testing environment across software development teams.

AI-Driven Innovations Transforming Automation Testing

Emerging Technologies in AI for Automation Testing

Artificial intelligence is rapidly transforming how teams approach test creation and maintenance. One standout advancement is generative AI, which can translate user stories or business logic into fully-formed test cases. This automation expands test coverage and accelerates release cycles by minimizing the manual effort required for test scripting.

Another major innovation is the use of computer vision in UI testing. These systems analyze visual components and adapt test scripts automatically when changes in layout or interface are detected. As a result, teams experience fewer false positives and less downtime from broken tests, leading to more stable and resilient testing pipelines.

AI’s Role in Sustainability Efforts

AI is playing a growing role in making automation testing more environmentally sustainable. By using predictive analytics, it helps teams focus only on the most relevant test cases, significantly reducing the number of unnecessary runs. This smarter approach conserves computing resources and lowers the overall energy footprint of testing operations.

It also enhances energy efficiency by managing test environments more intelligently. Rather than keeping multiple environments running at all times, AI can suggest spinning them up only when needed. This shift not only reduces infrastructure costs but also supports broader efforts to create greener, more responsible development practices.

Explore how AI improves workflows, reduces waste, and supports sustainable software testing operations through our detailed guide on unlocking operational efficiency with AI.

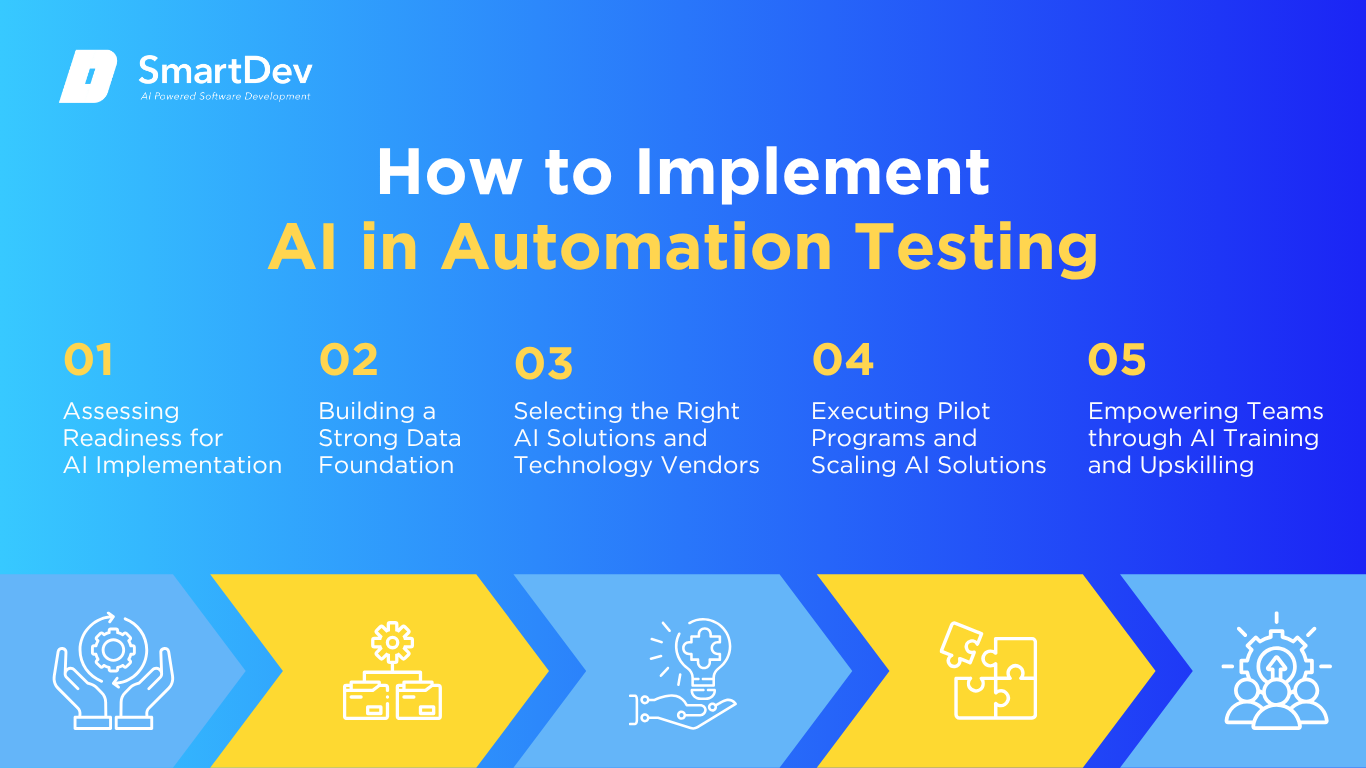

How to Implement AI in Automation Testing

Implementing AI in software testing isn’t just about adopting new tools – it’s about transforming how your team approaches quality assurance. Here’s a practical roadmap to help you integrate AI effectively, from planning to scaling.

Step 1: Assessing Readiness for AI Adoption

Before integrating AI into automation testing, it’s essential to evaluate current testing workflows and infrastructure. Areas characterized by repetitive tasks, high test maintenance, or unstable scripts often offer the greatest potential for AI-driven improvements. These segments provide a clear foundation for measurable impact without disrupting critical systems.

It’s equally important to assess the organization’s readiness for change and leadership support. Implementing AI involves shifting traditional testing mindsets toward data-centric and automated strategies. Without alignment across teams, even well-chosen tools can struggle to deliver value.

Step 2: Building a Strong Data Foundation

Effective AI testing depends on access to clean, well-labeled data from past test cases, defect logs, and execution histories. A centralized repository ensures that AI models can learn from consistent patterns and produce relevant, accurate test suggestions. Data integrity directly influences the reliability and scalability of automation.

Establishing proper data governance is essential to maintain consistency across teams and environments. Structured naming conventions, version control, and labeling enhance model performance and reduce false outputs. With strong data hygiene, AI becomes a reliable partner in the testing lifecycle.
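As one illustration of data hygiene, the sketch below normalizes raw test-run entries into a single validated record type before they reach any model. The field names, status aliases, and `RunRecord` type are hypothetical. Real pipelines would adapt them to their own runners and logs.

```python
from dataclasses import dataclass
from datetime import datetime

VALID_STATUSES = {"passed", "failed", "skipped"}
# Hypothetical aliases that different runners might write into their logs.
STATUS_ALIASES = {"pass": "passed", "ok": "passed", "fail": "failed",
                  "error": "failed", "skip": "skipped"}


@dataclass(frozen=True)
class RunRecord:
    test_id: str
    status: str
    duration_s: float
    finished_at: datetime


def normalize(raw: dict) -> RunRecord:
    """Validate one raw log entry and map it onto the shared schema."""
    status = raw["status"].strip().lower()
    status = STATUS_ALIASES.get(status, status)
    if status not in VALID_STATUSES:
        raise ValueError(f"unknown status: {raw['status']!r}")
    duration = float(raw["duration_s"])
    if duration < 0:
        raise ValueError("duration_s must be non-negative")
    return RunRecord(
        test_id=raw["test_id"].strip(),
        status=status,
        duration_s=duration,
        finished_at=datetime.fromisoformat(raw["finished_at"]),
    )


record = normalize({
    "test_id": " checkout/test_payment ",
    "status": "PASS",
    "duration_s": "1.25",
    "finished_at": "2024-05-01T12:00:00",
})
print(record)
```

Rejecting malformed entries at this boundary, rather than letting them through, keeps inconsistent labels from quietly degrading later predictions.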

Explore how precise AI model training contributes to accurate predictions and reliable QA performance in our article about AI Model Training.

Step 3: Choosing the Right Tools and Vendors

Choosing the right AI solution means aligning capabilities with the organization’s automation goals. Tools should integrate smoothly with existing CI/CD systems and support the testing frameworks already in use. Compatibility and scalability are more important than having the most features.

Vendor transparency is also critical, especially when it comes to how AI models are trained and how data is managed. Clear documentation, support, and ethical handling of test information build trust. Selecting a strategic vendor ensures a collaborative approach to continuous testing improvement.

Step 4: Pilot Testing and Scaling Up

A targeted pilot is the best way to evaluate AI’s impact without risking major disruption. Starting with a specific use case, like regression testing or test case generation, makes it easier to define goals and measure outcomes. These early experiments help refine workflows and surface practical challenges.

Once success is demonstrated, insights from the pilot can inform broader rollout plans. Refining based on performance data, user feedback, and process adjustments leads to stronger long-term adoption. With a clear roadmap, scaling becomes a structured and confident next step.

Step 5: Training Teams for Successful Implementation

Upskilling QA teams ensures AI tools are used effectively and confidently. Training should focus not just on tool use, but also on how AI integrates with existing processes and supports test strategy. When teams understand the value of AI, adoption naturally improves.

Cross-functional collaboration between testers, developers, and DevOps is essential for seamless integration. AI works best when guided by human expertise and aligned with clear testing objectives. A well-prepared workforce ensures AI enhances quality assurance rather than complicating it.

Discover how our tailored automation testing solutions support faster releases and higher software quality.

Measuring the ROI of AI in Automation Testing

Key Metrics to Track Success

Measuring ROI starts with tracking productivity improvements like test generation speed, reduced manual effort, and faster execution cycles. These gains show how AI boosts efficiency while minimizing repetitive work. Cost savings also come from fewer failures, less rework, and improved release stability.

Defect prevention adds long-term value by reducing post-release issues and enhancing customer satisfaction. Higher software quality means fewer support calls, lower churn, and stronger brand trust. Together, these metrics reflect both direct and indirect returns on AI investments.
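Two of these metrics reduce to simple formulas. The sketch below shows one common way to compute them, assuming ROI is defined as net benefit divided by cost and the defect detection rate as the share of defects caught before release. The numbers are invented for illustration.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def defect_detection_rate(found_before_release: int, found_after_release: int) -> float:
    """Share of all known defects that testing caught before release."""
    total = found_before_release + found_after_release
    return found_before_release / total if total else 0.0


# Hypothetical pilot: $100k spent on tooling and training, $150k saved.
pilot_roi = roi(total_benefit=150_000, total_cost=100_000)
pilot_ddr = defect_detection_rate(found_before_release=90, found_after_release=10)
print(f"ROI: {pilot_roi:.0%}, defect detection rate: {pilot_ddr:.0%}")
```

Measuring both before and after a pilot gives a baseline, so any improvement can be attributed to the change rather than assumed.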

Case Studies Demonstrating ROI

Caesars Entertainment saved over $1 million annually by using AI to automate regression testing and speed up API validation. Their testing cycle became 97% faster, allowing teams to focus on strategic QA efforts instead of maintenance. The results showed both operational efficiency and significant financial impact.

Razer’s QA team adopted an AI copilot and saw 20–25% more bugs detected and a 50% reduction in testing time. This shift also cut costs by 40%, showing that intelligent automation does more than accelerate processes: it drives measurable business value. In both cases, clear ROI was achieved through targeted AI integration.

Common Pitfalls and How to Avoid Them

One major pitfall is trying to scale AI too quickly without a clear strategy. This often leads to bloated test suites, redundant scripts, and wasted resources. Starting with focused, well-defined use cases helps teams validate AI tools before expanding their scope.

Another common issue is overlooking data quality and team readiness. AI depends on clean, structured test data; without it, outputs can be inaccurate or irrelevant. Providing proper training and support ensures teams understand how to work with AI effectively, preventing misuse and resistance.

Future Trends of AI in Automation Testing

Predictions for the Next Decade

In the coming years, AI is expected to transition from task-specific enhancements to becoming a fully integrated partner in end-to-end test automation. Advancements in autonomous testing agents will enable AI to independently generate, execute, and optimize test suites with minimal human oversight. These agents will continuously learn from historical test outcomes, code changes, and user behavior to improve accuracy and responsiveness over time.

Computer vision and multi-modal AI will also mature, allowing for deeper validation across interfaces, APIs, and user interactions in real-world environments. Predictive analytics will become more precise, identifying potential failure points even before code is merged. As AI systems gain more contextual awareness, automation testing will shift from reactive quality control to proactive quality assurance.

How Businesses Can Stay Ahead of the Curve

Organizations preparing for these changes must focus on strengthening data infrastructure and fostering cross-functional collaboration between QA, development, and data science teams. Investing in explainable AI models and modular testing frameworks will support adaptability as technologies evolve. Establishing feedback loops where AI outcomes are continuously evaluated and refined will ensure long-term reliability and effectiveness.

Proactive engagement with innovation – through internal R&D, strategic partnerships, or participation in industry forums – will provide early exposure to emerging practices. Maintaining agility in processes and technology stacks will allow faster integration of AI-driven capabilities. Staying informed and adaptable will be crucial for leveraging the full potential of AI in the future of automation testing.

Explore how tech leaders are driving AI transformation in software engineering in our strategic guide.

Conclusion

Key Takeaways

AI is redefining automation testing by enabling faster, smarter, and more reliable quality assurance. Innovations like generative test creation and self-healing scripts are streamlining workflows while improving accuracy and test coverage. These advancements lead to reduced costs, quicker releases, and stronger overall software quality.

Measurable ROI is already evident in organizations that have adopted AI strategically. Success depends on clean data, cross-team collaboration, and continuous refinement. When implemented thoughtfully, AI becomes a key asset in driving scalable and efficient testing practices.

Moving Forward: A Strategic Approach to AI in Automation Testing

As AI continues to transform software testing, organizations have a powerful opportunity to enhance quality, accelerate delivery, and reduce testing costs. From intelligent test generation to predictive defect detection and autonomous script maintenance, AI is no longer optional; it is becoming a strategic imperative for high-performing QA teams operating in fast-paced development environments.

At SmartDev, we specialize in delivering AI-driven testing solutions tailored to enterprise needs. Our team helps integrate intelligent automation into existing pipelines, reduce test flakiness, and unlock measurable ROI from every testing cycle.

Explore our AI-powered software development services to see how we build intelligent solutions tailored to your software lifecycle from concept to continuous delivery.

Contact us today to discover how AI can modernize your automation testing strategy and drive long-term value across the software development lifecycle.

—

References:

- 2025: The Year of AI Adoption for Test Automation | DEVOPSdigest

- AI In Software Testing: Full Guide For 2025 | Springs

- The Future of Software Testing: AI-Powered Test Case Generation and Validation | arXiv

- The 2025 State of Testing™ Report | PractiTest

- 30 Essential AI in Quality Assurance Statistics [2024] | QA.tech

- AI in Test Automation Market Size – CAGR of 19% | Market.us

- Reinforcement Learning for Automatic Test Case Prioritization and Selection in Continuous Integration | arXiv

- Razer launches new Wyvrn game dev platform with automated AI bug tester | The Verge

- 47% Growth in Test Coverage: How a Leading AI Company Cut Release Cycles with No-Code Test Automation | Testsigma

- Automated Unit Test Improvement using Large Language Models at Meta | arXiv

- Caesars Entertainment Defines & Measures ROI for Test Automation | Parasoft