Introduction

Data engineering is evolving rapidly, driven by the need to manage vast amounts of data efficiently and derive actionable insights. Artificial Intelligence (AI) is at the forefront of this transformation, offering tools and techniques that automate complex processes, enhance data quality, and optimize performance. This guide delves into how AI is revolutionizing data engineering, providing professionals with the knowledge to harness its full potential.

What is AI and Why Does It Matter in Data Engineering?

Definition of AI and Its Core Technologies

Artificial Intelligence (AI) encompasses computer systems designed to perform tasks that typically require human intelligence, such as learning, reasoning, and problem-solving. Core technologies include machine learning (ML), natural language processing (NLP), and computer vision. These technologies enable machines to process and analyze data, recognize patterns, and make decisions with minimal human intervention.

In the context of data engineering, AI refers to the application of these technologies to automate and enhance various data-related tasks. This includes data ingestion, transformation, validation, and pipeline optimization. By integrating AI, data engineers can streamline workflows, improve data quality, and accelerate the delivery of insights.

The Growing Role of AI in Transforming Data Engineering

AI is reshaping data engineering by introducing automation and intelligence into data workflows. Tasks that were once manual and time-consuming, such as data cleaning and transformation, are now being automated, allowing engineers to focus on more strategic initiatives.

Moreover, AI enhances decision-making by providing predictive analytics and real-time data processing capabilities. This enables organizations to respond swiftly to changing business conditions and customer needs.

The integration of AI also fosters innovation, as data engineers can leverage advanced tools to develop new data models and architectures that support emerging technologies and business models.

Key Statistics and Trends Highlighting AI Adoption in Data Engineering

AI adoption in data engineering is gaining momentum, driven by the promise of greater efficiency and strategic advantage. According to McKinsey's global survey "The state of AI: How organizations are rewiring to capture value," companies are beginning to structure their operations around generative AI, redesigning workflows and strengthening governance to extract meaningful value from these technologies.

A separate report from ThoughtSpot reveals that 65% of organizations are either using or actively exploring AI technologies within their data and analytics functions. Notably, early adopters are already seeing higher success rates in achieving business objectives, pointing to the tangible benefits of early AI integration.

However, the road to AI maturity isn’t uniform. While AI adoption may be progressing more slowly than anticipated, forward-thinking organizations are using this period to refine processes and prepare their infrastructure, emphasizing foundational readiness over quick fixes.

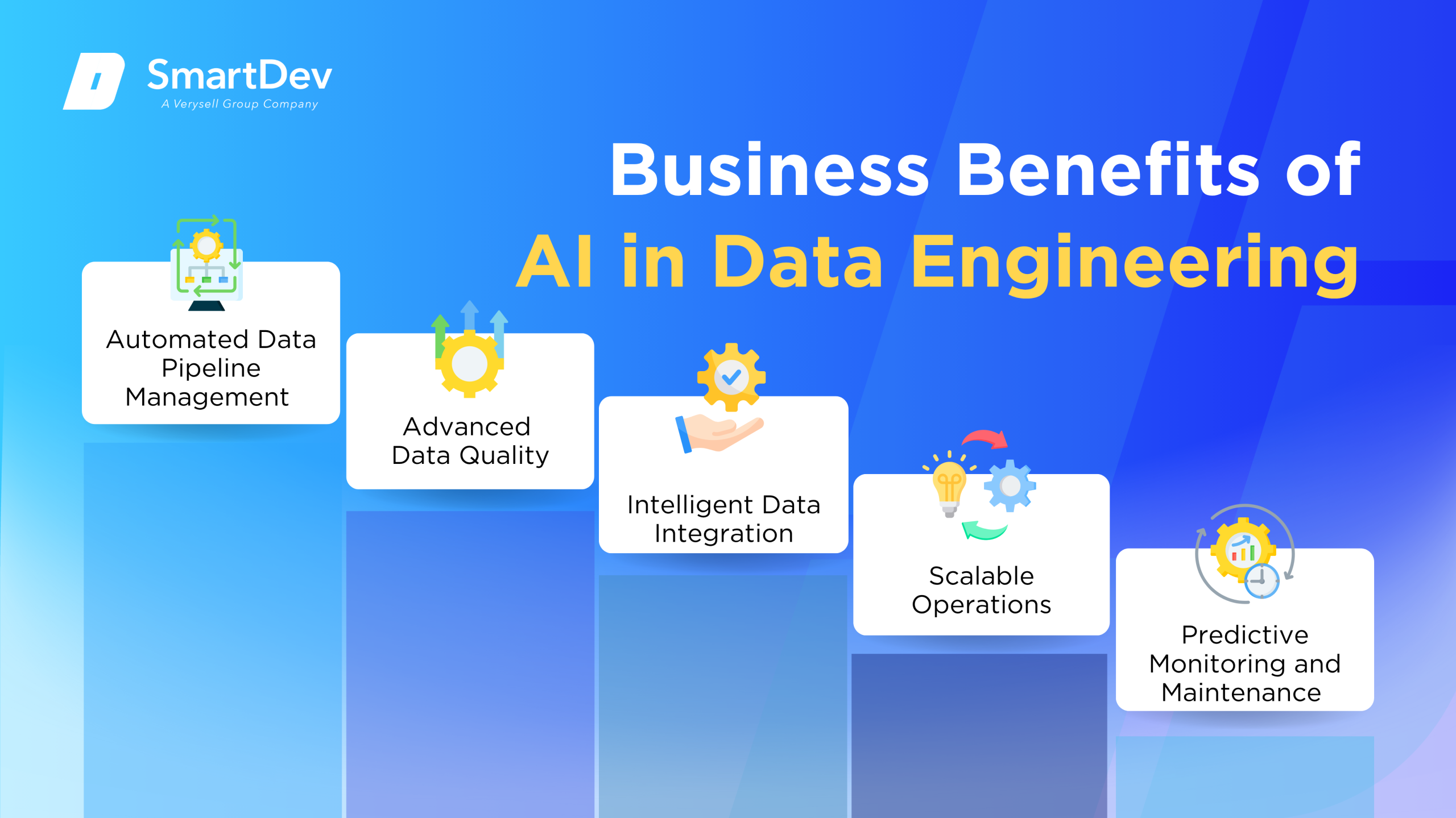

Business Benefits of AI in Data Engineering

1. Automated Data Pipeline Management

AI streamlines the creation, monitoring, and optimization of data pipelines. Machine learning models can dynamically adjust data workflows based on performance metrics and usage trends, minimizing downtime and manual intervention.

This level of automation allows data engineering teams to maintain consistent data flow across complex systems without constant oversight. It also enables faster time-to-insight, reducing bottlenecks in analytics and reporting processes.

2. Advanced Data Quality

AI-driven tools can identify data inconsistencies, duplicates, and anomalies in real-time. By continuously learning from historical patterns, these tools flag irregularities that could compromise the reliability of analytics.

This proactive data quality management ensures that business decisions are based on clean and trustworthy data. It also reduces the manual effort required for error checking and correction, saving valuable engineering resources.

3. Intelligent Data Integration

AI facilitates seamless integration of structured and unstructured data from diverse systems. It can automatically map fields, transform formats, and align semantics across databases, APIs, and third-party platforms.

This capability is particularly valuable in enterprises with heterogeneous data environments. It accelerates integration projects and ensures a unified view of enterprise data for analytics and compliance.

4. Scalable Operations

As data volumes grow, AI helps scale operations by predicting and managing resource requirements. It can adjust processing loads, optimize storage, and balance workloads across cloud and on-premise environments.

This intelligent scaling allows businesses to handle larger datasets without linearly increasing costs or engineering headcount. It supports growth while maintaining system performance and efficiency.

For a broader look at how AI boosts efficiency across operations, check out our guide on unlocking operational efficiency with AI.

5. Predictive Monitoring and Maintenance

AI enhances observability by predicting failures and performance issues in data systems before they occur. It tracks pipeline health metrics against alert thresholds, watches for usage anomalies, and recommends preventive actions.

This predictive maintenance minimizes downtime and enhances reliability, particularly in mission-critical data workflows. It reduces firefighting and supports a more strategic use of engineering efforts.

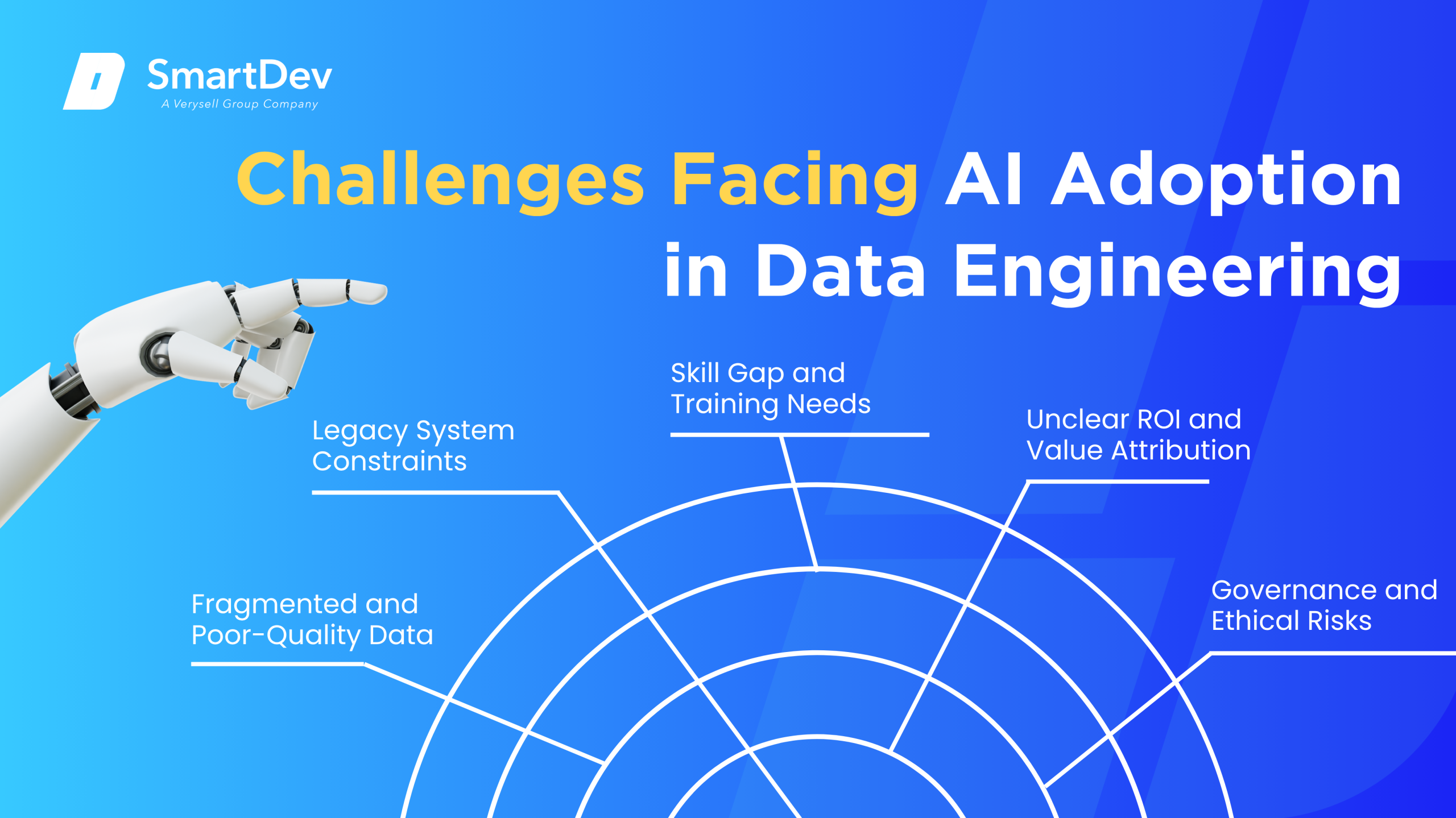

Challenges Facing AI Adoption in Data Engineering

1. Fragmented and Poor-Quality Data

AI relies heavily on data to train models and provide accurate outputs. Fragmented, inconsistent, or poorly documented data can degrade model performance and reduce trust in AI insights.

Data engineers must invest in extensive data cleaning and unification before AI can be effectively deployed. This often requires organizational alignment and tools not readily available in all environments.

2. Legacy System Constraints

Many organizations still rely on legacy data infrastructure that lacks compatibility with modern AI tools. These systems often do not support real-time data access or cloud-native architectures.

Upgrading these environments is costly and disruptive, often requiring a complete overhaul of existing systems. This creates a high barrier to AI integration and slows overall transformation efforts.

3. Skill Gap and Training Needs

While AI use cases in data engineering are expanding, the technical skillsets needed to deploy and manage these tools are often lacking. Traditional data engineers may not be trained in machine learning or advanced analytics.

Bridging this gap requires a strategic investment in upskilling and hiring talent with interdisciplinary knowledge. Without it, organizations may underutilize AI or misapply it in ways that generate low ROI.

4. Unclear ROI and Value Attribution

Many AI projects fail to define success metrics clearly, making it hard to quantify their business value. As a result, AI initiatives may struggle to secure ongoing funding or executive support.

For data engineering, where AI’s contributions are often indirect (e.g., improved pipeline efficiency), tying outcomes to business KPIs requires better tooling and measurement frameworks.

5. Governance and Ethical Risks

AI introduces new risks around bias, explainability, and data misuse. These concerns are especially acute in data engineering, where the foundational data supports downstream decisions and models.

Establishing governance frameworks that enforce transparency, data lineage, and model accountability is critical. Without them, organizations face compliance risks and potential reputational damage.

For practical strategies on managing AI’s privacy challenges, explore how to balance innovation with security in AI deployments.

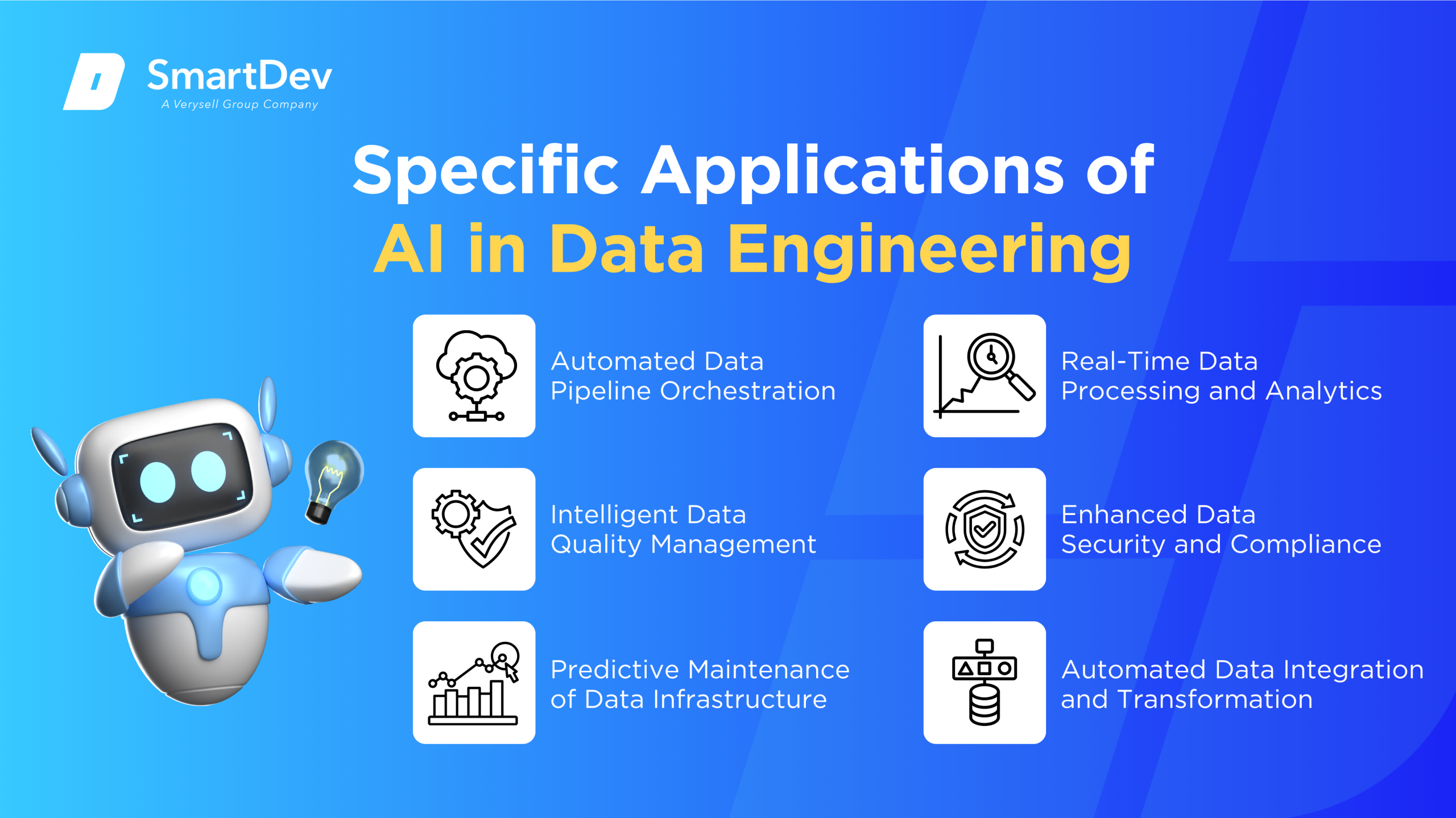

Specific Applications of AI in Data Engineering

1. Automated Data Pipeline Orchestration

AI automates data pipeline orchestration by reducing manual workload and enhancing workflow efficiency. It uses machine learning algorithms to forecast data flow needs and dynamically allocate resources. This ensures high system performance and minimal operational disruption.

These intelligent systems monitor pipelines in real time to detect bottlenecks and inefficiencies. When issues arise, they can reroute data flows and adjust parameters automatically. They also scale computing resources up or down as data volumes change.

This approach improves operational agility and lowers maintenance costs by minimizing downtime. It helps data engineers manage complex systems with fewer manual inputs. However, ensuring data security and privacy remains a critical implementation concern.
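The forecasting-and-allocation idea described above can be sketched in a few lines. This is a minimal illustration, not a production orchestrator: the moving-average forecast stands in for a trained model, and the names `forecast_next_volume`, `workers_needed`, the 50,000 rows-per-worker capacity, and the sample volumes are all assumptions made for the example.

```python
import math
from statistics import mean


def forecast_next_volume(history, window=3):
    """Naive forecast: average of the most recent `window` observations."""
    if not history:
        raise ValueError("history must contain at least one observation")
    return mean(history[-window:])


def workers_needed(forecast_rows, rows_per_worker, min_workers=1, max_workers=20):
    """Round up to cover the forecast, then clamp to the allowed range."""
    needed = math.ceil(forecast_rows / rows_per_worker)
    return max(min_workers, min(max_workers, needed))


# Hypothetical hourly row counts for one pipeline.
hourly_rows = [120_000, 150_000, 180_000, 240_000, 300_000]
forecast = forecast_next_volume(hourly_rows)
plan = workers_needed(forecast, rows_per_worker=50_000)
print(f"forecast={forecast:.0f} rows, workers={plan}")
```

In practice the forecast function is the part a machine learning model would replace; the clamping step stays useful either way, because it keeps a bad prediction from scaling the cluster to zero or to an unaffordable size.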

Real-World Example:

Netflix leverages AI to orchestrate its global data pipelines, maintaining seamless video streaming quality. The platform uses AI to predict network demands and optimize data routing. This has enhanced viewing experiences and reduced latency across regions.

2. Intelligent Data Quality Management

AI boosts data quality by identifying and correcting errors in datasets without manual inspection. It uses machine learning to recognize unusual data patterns and inconsistencies. This ensures that datasets remain trustworthy and usable across systems.

Such a system continuously checks data for issues during ingestion and processing. When it detects anomalies, it triggers automated cleaning and standardization steps. This helps keep data pipelines clean and consistent in real time.

High data quality leads to more reliable analytics, reduced cleaning time, and improved stakeholder confidence. Automated systems reduce the time spent fixing common data errors manually. Still, models must be trained on diverse data to avoid bias or blind spots.
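To make the duplicate and anomaly checks concrete, here is a small sketch. It uses a fixed statistical rule (the modified z-score based on the median absolute deviation) rather than a learned model, so treat it as a baseline that an ML-based tool would improve on. The function names, the `id` and `price` fields, and the 3.5 cutoff are assumptions chosen for illustration.

```python
from statistics import median


def find_duplicates(records, key):
    """Return indexes of records whose `key` value was already seen."""
    seen, dupes = set(), []
    for i, rec in enumerate(records):
        value = rec[key]
        if value in seen:
            dupes.append(i)
        else:
            seen.add(value)
    return dupes


def find_outliers(values, threshold=3.5):
    """Flag values whose modified z-score (MAD-based) exceeds `threshold`."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread at all, so nothing can be ranked as unusual
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical listing records: one repeated id and one implausible price.
records = [
    {"id": 1, "price": 100}, {"id": 2, "price": 105}, {"id": 2, "price": 105},
    {"id": 3, "price": 98}, {"id": 4, "price": 9500}, {"id": 5, "price": 102},
]
duplicate_rows = find_duplicates(records, "id")
outlier_rows = find_outliers([r["price"] for r in records])
print(duplicate_rows, outlier_rows)
```

The median-based score is used here because a single extreme value barely moves the median, whereas it would inflate a mean and standard deviation enough to hide itself.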

Real-World Example:

Airbnb applies AI to enhance data accuracy across millions of listings and user profiles. Their system automatically identifies outliers, duplicate entries, and inconsistent fields. This has resulted in more accurate search results and higher user satisfaction.

3. Predictive Maintenance of Data Infrastructure

AI enables predictive maintenance by forecasting failures before they disrupt operations. It analyzes historical system logs, performance metrics, and usage patterns to assess wear and tear. These insights help organizations plan maintenance ahead of time.

The AI schedules repairs during low-traffic periods to avoid business disruption. It also identifies which systems require attention, optimizing repair efforts and resource use. This proactive approach extends the lifespan of infrastructure components.

Organizations benefit from higher system availability, fewer outages, and lower maintenance costs. Predictive models depend on high-quality training data for accurate forecasting. Poor data quality can lead to false alarms or missed warnings.
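One simple way to picture this kind of forecasting is trend extrapolation on a resource metric. The sketch below fits a straight line to daily disk-usage readings and estimates how many days remain before a limit is reached. A real predictive maintenance model would use far richer signals; the function names, the 90% limit, and the sample readings are assumptions for the example.

```python
def linear_trend(ys):
    """Ordinary least-squares slope and intercept for evenly spaced readings."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two readings to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def days_until_limit(ys, limit):
    """Days from the latest reading until the fitted line crosses `limit`."""
    slope, intercept = linear_trend(ys)
    if slope <= 0:
        return None  # usage is flat or falling, so no crossing is projected
    crossing = (limit - intercept) / slope
    return max(0.0, crossing - (len(ys) - 1))


# Hypothetical daily disk usage, in percent.
disk_pct = [60, 62, 64, 66, 68]
remaining = days_until_limit(disk_pct, limit=90)
print(f"projected days until 90% full: {remaining:.1f}")
```

An estimate like this is what lets maintenance be scheduled into a low-traffic window instead of being triggered by an outage.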

Real-World Example:

GE uses AI to monitor and maintain its data infrastructure across multiple facilities. The company predicts hardware failures and schedules timely interventions. This strategy cut unplanned downtime by 30% and reduced repair expenses.

4. Real-Time Data Processing and Analytics

AI facilitates real-time data processing for instant analysis and faster decision-making. It is crucial for operations that require immediate action, such as fraud detection and supply chain monitoring. AI turns raw data into insights the moment it is generated.

Machine learning models evaluate streaming data on the fly to detect trends or outliers. They update insights continuously, enabling organizations to adapt as events unfold. This enhances business responsiveness and real-time visibility.

The advantage lies in faster reactions, competitive differentiation, and actionable intelligence. Real-time AI systems require low-latency infrastructure and careful data pipeline design. High throughput and accurate modeling are also essential for effectiveness.
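Evaluating streaming data "on the fly" usually means keeping a small rolling state and scoring each event as it arrives. The sketch below does this with a rolling z-score. It is a simplified stand-in for a trained model, and the class name, window size, threshold, and sample latency values are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Scores each new value against a fixed-size window of recent normal values."""

    def __init__(self, window=5, threshold=3.0):
        self.values = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, x):
        """Return True if `x` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.values) == self.values.maxlen:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0 and abs(x - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Only normal values update the baseline, so a spike cannot mask the next one.
            self.values.append(x)
        return is_anomaly


# Hypothetical per-second latency readings with one spike.
detector = RollingAnomalyDetector(window=5, threshold=3.0)
flags = [detector.observe(v) for v in [10, 11, 9, 10, 10, 50, 11]]
print(flags)
```

Excluding flagged values from the baseline is a design choice with a cost: if the data shifts permanently to a new level, this detector keeps flagging it, so a production system would need a rule for accepting the new normal.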

Real-World Example:

Uber uses AI to match riders with drivers in real time, optimizing service based on location and traffic. The system continuously ingests data to adjust driver routes and wait times. This has reduced idle time and improved user experience.

Learn how virtual assistants use real-time AI analytics to improve decisions and user experience in this practical example.

5. Enhanced Data Security and Compliance

AI reinforces data security by detecting threats and ensuring regulatory compliance. It monitors access logs, user behavior, and data transactions in real time. When anomalies are detected, it flags or blocks suspicious activity immediately.

AI also automates compliance tasks by tracking data usage and generating audit trails. These logs are essential for meeting regulatory standards like GDPR or HIPAA. The AI helps maintain transparency without overwhelming human teams.

The benefit is lower risk of data breaches, quicker incident response, and better regulatory alignment. However, AI systems must be monitored to manage false positives and evolving attack vectors. Maintaining model accuracy is key to reliable detection.
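A very reduced version of behavior-based detection is to learn which resources each user normally touches and flag anything outside that profile. The sketch below does only that; real systems weigh many more signals, such as time of day, volume, and location. The function names, users, and dataset names are hypothetical.

```python
from collections import defaultdict


def build_profiles(history):
    """Learn the set of resources each user has accessed in the past."""
    profiles = defaultdict(set)
    for user, resource in history:
        profiles[user].add(resource)
    return profiles


def flag_unusual(events, profiles):
    """Return events where a user touches a resource outside their profile."""
    return [(user, resource) for user, resource in events
            if resource not in profiles.get(user, set())]


# Hypothetical access history and a batch of new events.
history = [("ana", "orders"), ("ana", "customers"), ("raj", "orders")]
profiles = build_profiles(history)
events = [("ana", "orders"), ("raj", "payroll"), ("eve", "orders")]
flagged = flag_unusual(events, profiles)
print(flagged)
```

A rule this strict flags every first-time access, including legitimate ones, which illustrates why false positives need ongoing review.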

Real-World Example:

IBM implements AI to secure its enterprise data systems, detecting potential breaches proactively. Their solution combines real-time monitoring with AI-driven compliance checks. This has improved system integrity and reduced compliance audit overhead.

For a closer look at how AI defends against modern cyber threats, explore our guide on AI-powered cyber defense strategies.

6. Automated Data Integration and Transformation

AI simplifies data integration by unifying information from diverse sources. It maps and merges data formats, removing inconsistencies across systems. This reduces the need for manual coding or spreadsheet manipulation.

Machine learning adapts to new data schemas and improves mapping accuracy over time. AI also standardizes and cleanses incoming data for downstream analytics. This shortens time-to-insight and boosts data usability.

The main value lies in faster integration workflows, fewer manual errors, and scalable ETL processes. However, AI must manage varied data types and structures without losing context. Poorly mapped data can reduce analytics accuracy or introduce risk.
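Automatic field mapping can be approximated with plain string similarity, which gives a feel for what the ML-based tools automate at larger scale. This sketch uses Python's standard `difflib.SequenceMatcher`; the helper names, the 0.6 minimum score, and the field names are assumptions for illustration, and a learned mapper would also look at the data values, not just the column names.

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lowercase and drop separators so 'Cust_Name' and 'custname' compare equal."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def map_fields(source_fields, target_fields, min_score=0.6):
    """Map each source field to its most similar target field, or None."""
    mapping = {}
    for src in source_fields:
        best, best_score = None, 0.0
        for tgt in target_fields:
            score = SequenceMatcher(None, normalize(src), normalize(tgt)).ratio()
            if score > best_score:
                best, best_score = tgt, score
        mapping[src] = best if best_score >= min_score else None
    return mapping


# Hypothetical source and target schemas.
source = ["Cust_Name", "E-mail", "signupDate", "zzz"]
target = ["customer_name", "email", "signup_date"]
mapping = map_fields(source, target)
print(mapping)
```

Leaving low-scoring fields unmapped, rather than forcing a best guess, is the safer default, since a wrong mapping silently corrupts downstream analytics.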

Real-World Example:

Salesforce uses AI to integrate customer data from emails, CRM systems, and social media. The platform creates a 360-degree customer profile by automating data mapping and deduplication. This has led to more targeted campaigns and improved sales outcomes.

Examples of AI in Data Engineering

Real-World Case Studies

Real-world applications of AI in data engineering demonstrate its transformative impact across industries. These case studies highlight how organizations leverage AI to enhance data processes and drive business value.

1. Walmart – AI-Driven Inventory Management

Walmart has adopted AI to streamline its inventory management, ensuring products are in the right place at the right time.

Their system analyzes multiple variables such as historical sales data, seasonal trends, and local event schedules. This helps the company anticipate customer demand more accurately and adjust stock levels accordingly.

The implementation of AI has significantly reduced both product shortages and excess inventory. It has enabled faster restocking decisions and minimized manual intervention in logistics planning. As a result, Walmart has improved operational efficiency and reduced waste, contributing to a stronger bottom line.

2. General Electric (GE) – Predictive Maintenance in Manufacturing

General Electric uses AI to enhance the reliability of its manufacturing infrastructure through predictive maintenance. AI models continuously analyze real-time sensor data from machinery to detect patterns that precede equipment failure. This allows maintenance teams to intervene before a breakdown occurs, avoiding costly disruptions.

This predictive approach has lowered the risk of unscheduled downtime and extended the lifespan of critical assets. It has also improved overall plant productivity and resource allocation. By reducing emergency repairs, GE has achieved substantial cost savings and better asset management.

3. PayPal – Fraud Detection and Prevention

PayPal employs AI to combat fraud by analyzing user behavior and transaction data in real time. Their system uses machine learning to identify irregular patterns that suggest unauthorized activity. When anomalies are detected, transactions are either flagged for review or blocked automatically.

This AI-driven strategy has strengthened PayPal’s security framework and minimized financial losses from fraudulent activity. It also enhances user trust by ensuring safer online transactions. With billions of transactions processed annually, this level of automation is crucial to maintaining security at scale.

Innovative AI Solutions

Emerging AI technologies are significantly enhancing the capabilities of modern data engineering. These advancements enable more intelligent, scalable, and adaptive data processes across industries. Organizations are leveraging them to achieve better performance, accuracy, and operational resilience.

Generative AI is transforming data augmentation by creating synthetic datasets to supplement limited or imbalanced training data. This technique improves the robustness and accuracy of machine learning models without relying solely on real-world inputs. It is especially valuable in scenarios where acquiring high-quality data is costly or time-consuming.
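The basic idea of supplementing an under-represented class can be shown without a generative model at all. The sketch below creates new points by interpolating between random pairs of existing minority samples. This is a simplification in the spirit of SMOTE (which interpolates toward nearest neighbors rather than random pairs), not generative AI itself; the function name and sample points are assumptions.

```python
import random


def synthesize_minority(samples, n_new, seed=0):
    """Create `n_new` points, each on the line segment between two real samples."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(samples, 2)
        t = rng.random()
        synthetic.append(tuple(x + t * (y - x) for x, y in zip(a, b)))
    return synthetic


# Hypothetical minority-class feature vectors.
minority = [(1.0, 2.0), (1.5, 2.5), (2.0, 3.0)]
synthetic = synthesize_minority(minority, n_new=4)
for point in synthetic:
    print(point)
```

Because every synthetic point lies between two real ones, this approach cannot invent values outside the observed range, which is both its safety property and its limitation compared with a trained generative model.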

Another breakthrough involves combining AI with edge computing to process data closer to where it is generated. This integration minimizes latency, reduces bandwidth consumption, and supports real-time decision-making in distributed environments. AI-powered data catalogs are also emerging, automatically classifying and managing data assets to enhance discoverability, governance, and regulatory compliance.

AI-Driven Innovations Transforming Data Engineering

Emerging Technologies in AI for Data Engineering

AI technologies are significantly enhancing data engineering processes. Generative AI, for instance, is automating code generation and optimization, reducing the time and effort required for data pipeline development. Tools like GitHub Copilot assist in writing SQL queries and data transformation scripts, streamlining the development process. Additionally, AI-powered code review tools are improving code quality by automatically detecting errors and suggesting improvements.

To understand the architecture behind autonomous systems powering modern data workflows, dive into our guide on creating AI agents.

Computer vision is also playing a role in data engineering by enabling the analysis of visual data. For example, AI models can process images and videos to extract meaningful information, which can then be integrated into data pipelines for further analysis. This capability is particularly useful in industries like manufacturing and retail, where visual data is abundant.

AI’s Role in Sustainability Efforts

AI is contributing to sustainability in data engineering by optimizing resource usage and reducing waste. Predictive analytics powered by AI can forecast system failures and maintenance needs, allowing for proactive interventions that minimize downtime and resource consumption. In data centers, AI algorithms are optimizing energy consumption by dynamically adjusting cooling systems and workload distribution, leading to significant energy savings.

Moreover, AI is aiding in the efficient management of data storage. By analyzing data usage patterns, AI can identify redundant or obsolete data, enabling data engineers to clean up storage systems and reduce unnecessary data retention. This not only conserves storage resources but also enhances data retrieval efficiency.
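The starting point for this kind of cleanup is usually a simple usage signal such as last access time. The sketch below flags tables that have been idle past a cutoff. It is a rule-based baseline, not a learned model, and the function name, the 180-day cutoff, and the table names and dates are hypothetical.

```python
from datetime import datetime, timedelta


def find_stale_tables(last_access, now, max_idle_days=180):
    """Return names of tables not accessed within `max_idle_days`, sorted."""
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(name for name, ts in last_access.items() if ts < cutoff)


# Hypothetical last-access timestamps from a catalog or query log.
last_access = {
    "orders": datetime(2024, 12, 20),
    "legacy_export": datetime(2023, 6, 1),
    "tmp_backfill": datetime(2024, 3, 15),
}
stale = find_stale_tables(last_access, now=datetime(2025, 1, 1))
print(stale)
```

A list like this is best treated as candidates for review rather than for automatic deletion, since some rarely read data must be retained for compliance.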

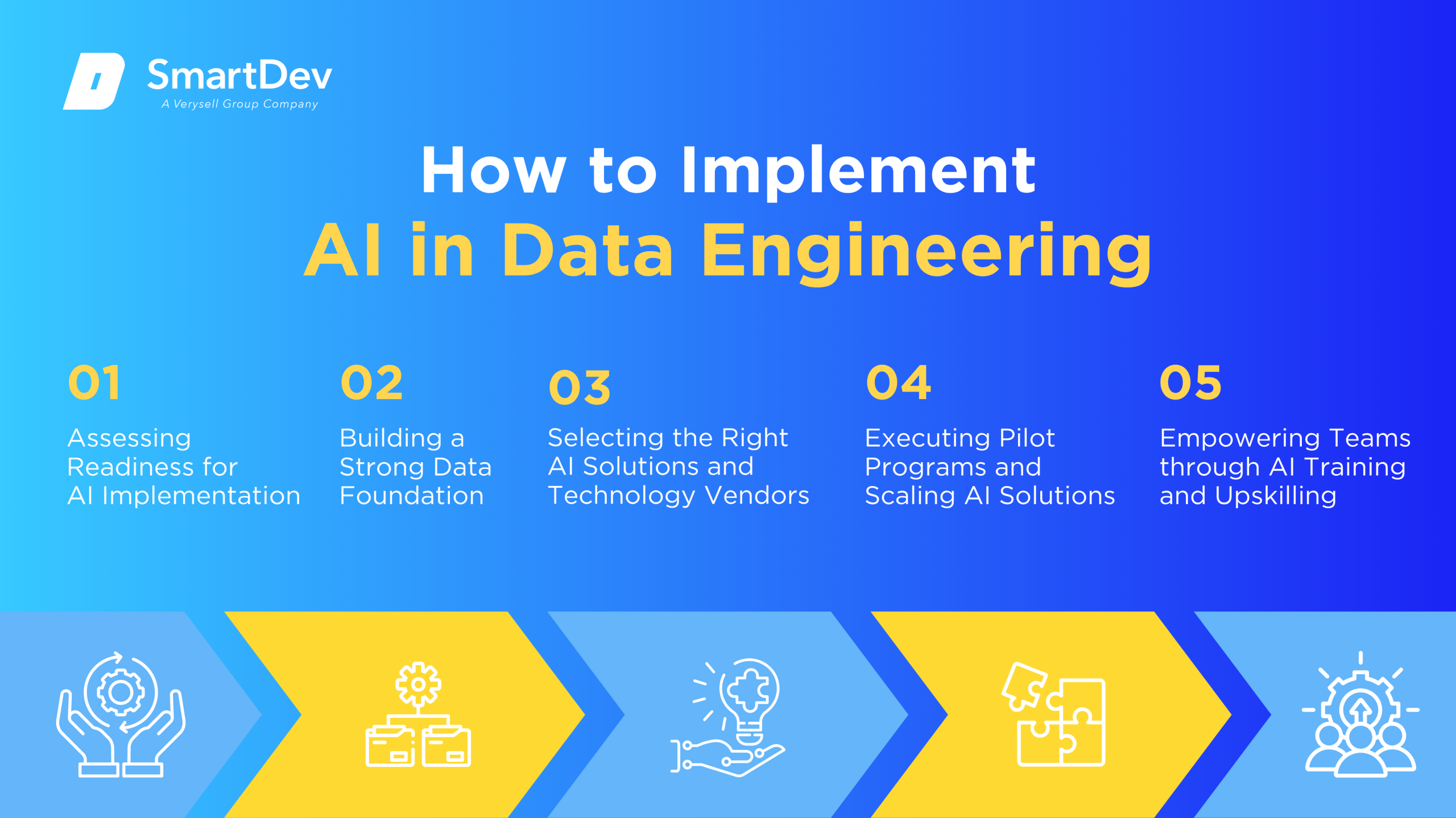

How to Implement AI in Data Engineering

Step 1. Assessing Readiness for AI Adoption

Before integrating AI into data engineering workflows, it’s essential to assess organizational readiness. This involves evaluating existing data infrastructure, identifying areas where AI can add value, and ensuring that the necessary resources and expertise are available. Organizations should start by pinpointing repetitive and time-consuming tasks that can benefit from automation, such as data cleaning, transformation, and pipeline monitoring.

Engaging stakeholders across departments is also crucial to understand the broader impact of AI adoption. Collaborating with IT, operations, and business units can help in aligning AI initiatives with organizational goals and ensuring a smooth transition. Additionally, conducting pilot projects can provide valuable insights into the feasibility and effectiveness of AI solutions in specific contexts.

Step 2. Building a Strong Data Foundation

A robust data foundation is critical for successful AI implementation in data engineering. This entails establishing standardized data collection methods, ensuring data quality, and implementing effective data governance practices. Data engineers should focus on creating centralized data repositories that facilitate easy access and integration of diverse data sources.

Implementing data version control can further enhance data management by tracking changes and enabling rollbacks. Tools like DVC (Data Version Control) allow data engineers to manage datasets and machine learning models efficiently, ensuring consistency and reproducibility across projects. Moreover, adopting metadata management practices helps maintain data lineage and clarify data dependencies, which is vital for complex data engineering tasks.
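The core idea behind versioning data is to identify each dataset state by a fingerprint of its content, so that any change produces a new, comparable version. The sketch below illustrates that idea with the standard library only. It does not use DVC or reproduce its API, and the function name and sample rows are assumptions.

```python
import hashlib
import json


def dataset_fingerprint(rows):
    """Hash a canonical JSON form of the rows so equal content gives equal hashes."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical dataset snapshots.
rows_v1 = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 12.5}]
rows_same = [{"amount": 10.0, "id": 1}, {"amount": 12.5, "id": 2}]  # keys reordered
rows_v2 = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 13.0}]    # one value changed

print(dataset_fingerprint(rows_v1) == dataset_fingerprint(rows_same))
print(dataset_fingerprint(rows_v1) == dataset_fingerprint(rows_v2))
```

Storing the fingerprint alongside each model or report makes it possible to say exactly which data produced a given result, which is the basis for reproducibility and rollback.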

To see how strong data management practices empower real business growth, explore our guide focused on small business success through data.

Step 3. Choosing the Right Tools and Vendors

Selecting appropriate AI tools and vendors is a pivotal step in integrating AI into data engineering. Organizations should evaluate tools based on their compatibility with existing systems, scalability, ease of use, and support for customization. Open-source tools like Apache Airflow and Kubeflow offer flexibility and community support, while commercial platforms provide comprehensive solutions with dedicated support services.

When choosing vendors, it’s important to consider their track record, customer reviews, and the robustness of their AI solutions. Engaging in proof-of-concept projects with shortlisted vendors can provide practical insights into their capabilities and suitability for specific organizational needs. Additionally, ensuring that the chosen tools comply with data security and privacy regulations is essential to mitigate risks associated with AI implementation.

Step 4. Pilot Testing and Scaling Up

Implementing AI in data engineering should begin with pilot projects that focus on specific use cases. These pilots allow organizations to test AI solutions in controlled environments, assess their performance, and identify potential challenges. For instance, a pilot project could involve automating data ingestion processes or implementing AI-driven anomaly detection in data pipelines.

Upon successful completion of pilot projects, organizations can plan for scaling up AI solutions across the enterprise. This involves developing a roadmap for broader implementation, allocating necessary resources, and establishing monitoring mechanisms to track performance and outcomes. Continuous evaluation and iteration are key to refining AI applications and maximizing their benefits in data engineering workflows.

Step 5. Training Teams for Successful Implementation

Equipping data engineering teams with the necessary skills and knowledge is fundamental to the successful adoption of AI. Organizations should invest in training programs that cover AI concepts, tools, and best practices relevant to data engineering. Workshops, online courses, and certifications can help in building proficiency and confidence among team members.

Fostering a culture of continuous learning and collaboration encourages innovation and adaptability. Encouraging cross-functional teams to share insights and experiences can lead to the development of more effective AI solutions. Moreover, involving data engineers in the AI implementation process from the outset ensures that their expertise informs the design and deployment of AI applications, leading to more practical and impactful outcomes.

Measuring the ROI of AI in Data Engineering

Key Metrics to Track Success

Evaluating the return on investment (ROI) of AI in data engineering involves tracking various performance metrics. Productivity improvements can be measured by assessing the reduction in time spent on manual tasks, such as data cleaning and pipeline maintenance.

Cost savings achieved through automation are another critical metric. By automating data processing tasks, organizations can reduce labor costs and minimize errors that lead to costly rework. Additionally, improvements in data quality and pipeline reliability can lead to better decision-making and increased revenue opportunities.

Monitoring these metrics over time provides a comprehensive view of the financial and operational impact of AI in data engineering.
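As a worked example, the standard ROI formula, (benefit minus cost) divided by cost, can be applied to the metrics above. All figures below are hypothetical placeholders, and the function names are assumptions; substitute your own measurements.

```python
def annual_benefit(hours_saved_per_week, hourly_rate, rework_cost_avoided, weeks=52):
    """Labor savings from automation plus avoided rework, per year."""
    return hours_saved_per_week * hourly_rate * weeks + rework_cost_avoided


def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical inputs: 20 engineer-hours saved per week at a loaded rate of 75,
# plus 22,000 in avoided rework, against 80,000 in annual tooling and run costs.
benefit = annual_benefit(hours_saved_per_week=20, hourly_rate=75, rework_cost_avoided=22_000)
result = roi(benefit, total_cost=80_000)
print(f"benefit={benefit}, ROI={result:.0%}")
```

Tracking the inputs separately, rather than only the final percentage, makes it easier to see whether a change in ROI comes from time savings, quality improvements, or rising costs.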

Case Studies Demonstrating ROI

Real-world case studies illustrate the tangible benefits of AI in data engineering. For instance, Ather Energy, an electric scooter manufacturer, leveraged Google Cloud’s AI-ready data platform to enhance its IoT capabilities. By integrating AI, the company increased the number of sensors in its scooters from 15 to 43, enabling advanced features like predictive maintenance and route optimization. This transformation allowed Ather Energy to update its platform monthly instead of twice per year, resulting in cost savings and improved customer satisfaction.

Another example is Euramax, a producer of coated aluminum and steel coils, which implemented AI to optimize its production scheduling. The AI system provided real-time insights into production delays, allowing the company to adjust schedules promptly and maintain productivity. As a result, Euramax could predict and update its weekly schedule at 15-minute intervals, enhancing operational efficiency and reducing downtime.

Common Pitfalls and How to Avoid Them

While AI offers significant advantages, organizations may encounter challenges during implementation. One common pitfall is underestimating the importance of data quality. AI models rely on accurate and consistent data; therefore, inadequate data governance can lead to unreliable outcomes. To mitigate this risk, organizations should establish robust data management practices and ensure that data used for AI applications is clean and well-structured.

Another challenge is the lack of clear objectives and success metrics. Without defined goals, it’s difficult to measure the effectiveness of AI initiatives. Organizations should set specific, measurable, achievable, relevant, and time-bound (SMART) objectives for AI projects. Additionally, involving stakeholders from various departments can provide diverse perspectives and foster a shared understanding of AI’s role and expectations within the organization.

Future Trends of AI in Data Engineering

Predictions for the Next Decade

Over the next ten years, AI is expected to become a foundational layer in data engineering, driving unprecedented levels of automation, intelligence, and scalability. Generative AI will evolve to support end-to-end data pipeline development, enabling systems to design, test, and deploy workflows with minimal human intervention. As data volumes continue to grow, AI will play a critical role in real-time processing and intelligent orchestration, making data operations more responsive and adaptive to business needs.

The convergence of AI with other emerging technologies – such as edge computing, 5G, and quantum computing – will further redefine the boundaries of data engineering. AI-powered observability tools will become standard, offering predictive insights into system performance and potential failures before they occur. Additionally, there will be a shift toward explainable AI in data engineering workflows, providing greater transparency and trust in automated decision-making.

This future points to a more autonomous, efficient, and resilient data infrastructure, where AI is not just a tool, but a strategic enabler.

How Businesses Can Stay Ahead of the Curve

To remain competitive in the evolving landscape of AI-driven data engineering, businesses must foster a culture of continuous innovation and agility. This involves not only investing in the latest AI technologies but also building internal capabilities to adapt quickly to change. Establishing cross-functional teams that include data engineers, AI specialists, and business strategists can accelerate the identification and execution of high-impact use cases. Regularly revisiting AI strategies to align with emerging trends ensures that implementations stay relevant and effective.

Proactive engagement with the broader AI ecosystem – through partnerships, participation in research, and collaboration with academic institutions – can also provide early access to new insights and tools. Moreover, prioritizing scalable and modular AI architectures will allow organizations to evolve their capabilities incrementally without overhauling existing systems.

Businesses that embrace a forward-thinking mindset, backed by strong data governance and ethical AI practices, will be best positioned to leverage AI as a long-term strategic advantage in data engineering.

Conclusion

Key Takeaways

AI is revolutionizing data engineering by automating complex tasks, improving data quality, and enabling faster, more accurate decision-making. Technologies like generative AI and computer vision are streamlining data pipeline development and expanding the ability to analyze diverse data types. These innovations are also contributing to sustainability through optimized resource use and energy efficiency.

To fully realize these benefits, organizations must take a strategic approach: assessing readiness, building a strong data foundation, choosing the right tools, and investing in team training. Measuring ROI through improved productivity, reduced costs, and operational efficiency is critical for long-term impact. As AI continues to advance, staying proactive and agile will be key to maintaining a competitive edge in data engineering.

Moving Forward: A Path to Progress

As AI transforms the data engineering landscape, enterprises have a pivotal opportunity to enhance operational efficiency, accelerate data delivery, and unlock deeper business insights. From intelligent data pipeline automation to predictive data quality monitoring and AI-driven orchestration, strategic integration of AI is rapidly becoming essential for maintaining agility and driving data-centric innovation.

At SmartDev, we design custom AI solutions that empower organizations to scale data operations, reduce engineering overhead, and improve analytics performance. Whether it’s integrating generative AI for pipeline development or deploying machine learning models for real-time anomaly detection, our team collaborates closely to deliver systems aligned with your technical infrastructure and strategic goals.

Contact us today to discover how AI can transform your data engineering capabilities and position your business at the leading edge of digital innovation.

—

References:

- The state of AI: How organizations are rewiring to capture value | McKinsey & Company

- Unlocking New Potential: How Generative AI and Data Engineering Drive Innovation and Business Value | Medium

- Top AI statistics and trends for analytics (2025) | ThoughtSpot

- Top 10 Data & AI Trends for 2025 | Medium

- AI in data engineering: Use cases, benefits, and challenges | Datafold

- The Future of Data Engineering in an AI-Driven World | Medium

- 20 Useful Data Engineering Case Studies [2025] | DigitalDefynd

- Automated data processing and feature engineering for deep learning and big data applications: a survey | arXiv

- How Morgan Stanley Tackled One of Coding’s Toughest Problems | The Wall Street Journal