Research shows that 95% of new products fail to meet market expectations, a staggering statistic that costs billions annually. In AI product development, 42% of AI projects are abandoned before reaching production. Teams consistently make critical decisions based on opinions and the loudest voice in the room rather than validated evidence.

Without a structured product decision-making framework to validate assumptions and align perspectives, product decisions become political negotiations. This article introduces SmartDev’s comprehensive 10-week AI Product Factory, an evidence-based product discovery approach that transforms subjective opinions into testable hypotheses, builds stakeholder alignment around shared evidence, and delivers production-ready AI prototypes that prove business value before major investment.

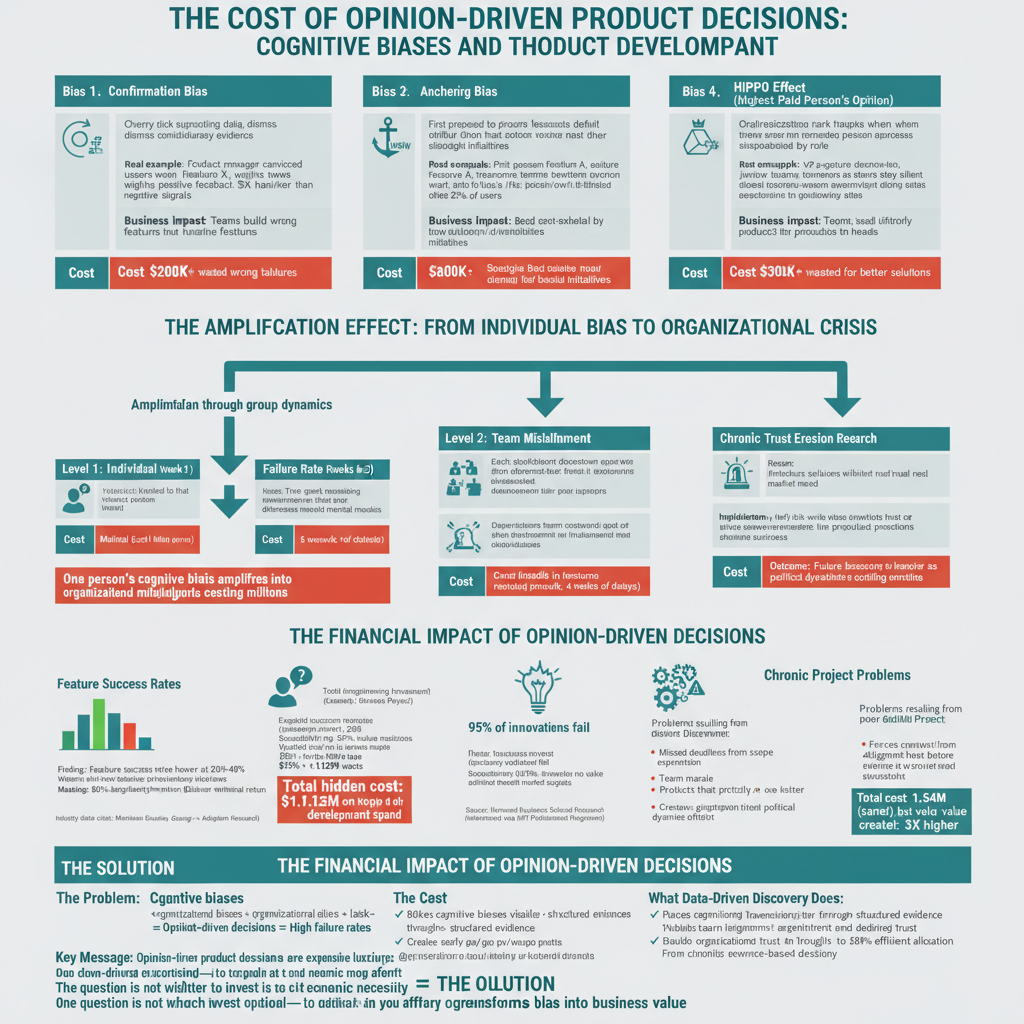

The Cost of Opinion-Driven Product Decisions

Cognitive biases systematically undermine product development. Confirmation bias leads teams to cherry-pick supporting data while dismissing contradictory evidence. A product manager convinced users want a specific feature will unconsciously weight positive feedback more heavily than negative signals. Anchoring bias makes the first proposed feature become the default option that other ideas must beat.

Recency bias causes teams to overreact to the latest complaint, pulling resources from strategic initiatives toward urgent-feeling problems. The HiPPO effect (Highest Paid Person’s Opinion) makes organizational rank trump data: when the most senior person expresses a preference, junior team members remain silent even when they possess contradictory evidence.

These individual biases amplify organizational alignment crises. Marketing prioritizes competitive differentiation based on market perception. Engineering worries about technical feasibility and system scalability. Leadership focuses on revenue targets and strategic positioning. Each stakeholder operates from incomplete information shaped by their role and incentives.

Without structured alignment, teams literally build different products in their heads, discovering the disconnect only after expensive development begins. Reducing bias in product management requires more than awareness; it demands structural countermeasures embedded into the development process itself.

The financial impact extends far beyond immediate costs. Industry data shows feature success rates hover at 20-40% when discovery is inadequate, meaning most engineering investment delivers minimal return. Teams experience chronic rework, missed deadlines, and products that satisfy no one. Harvard Business School research reveals that 95% of innovations fail because they introduce solutions without real market need.

Each failed initiative erodes stakeholder trust in the product development process, making future alignment harder as groups default to protecting their interests rather than collaborating. Breaking this pattern requires systematic frameworks that make data-driven product decisions the default rather than the exception.

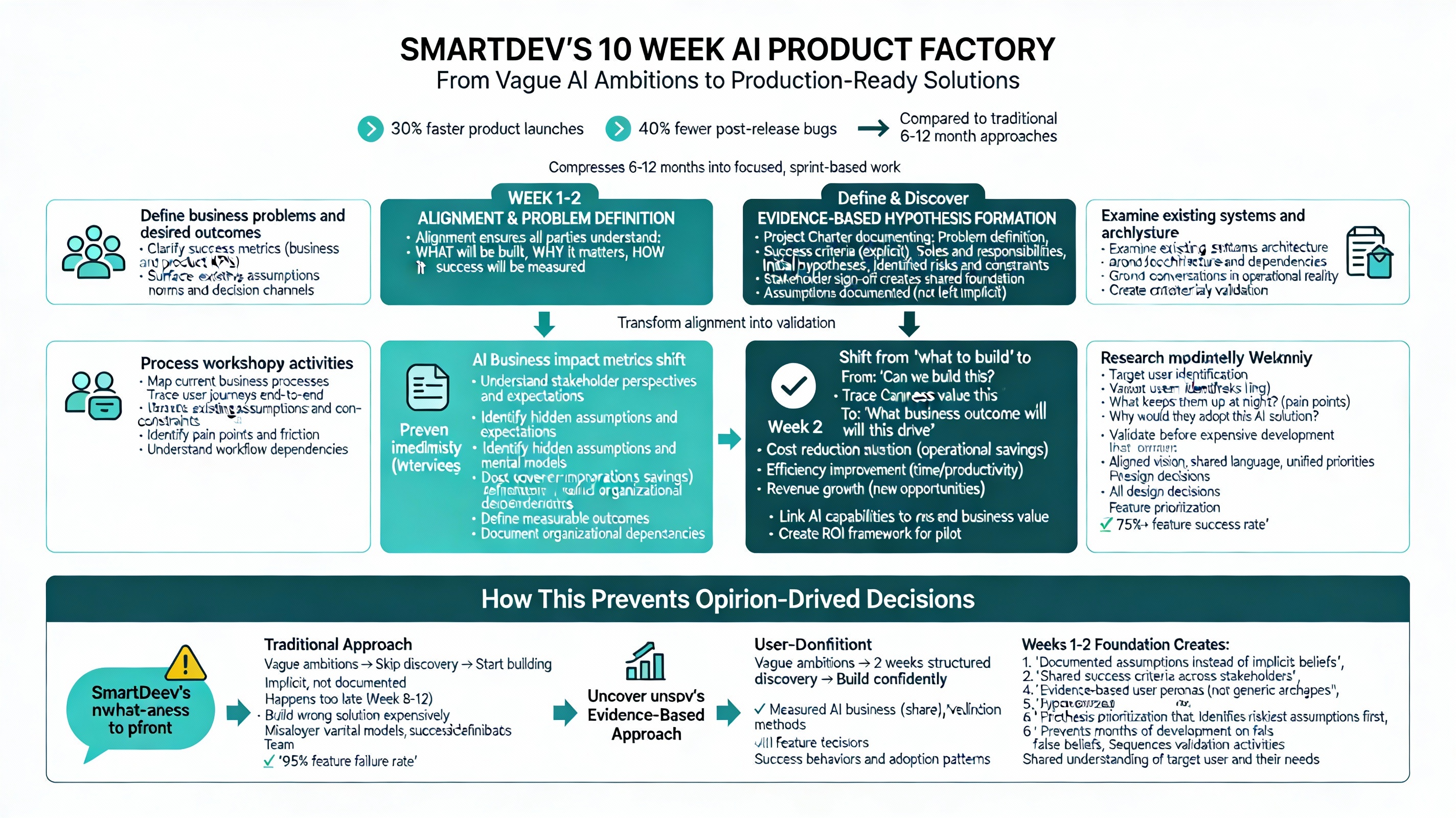

SmartDev’s 10-Week AI Product Factory: A Proven Product Decision-Making Framework

Evidence-based product discovery follows a structured cycle transforming assumptions into validated, production-ready solutions. SmartDev’s 10-Week AI Product Factory compresses what traditionally takes 6-12 months into focused, sprint-based work that validates AI use cases, reduces product risk, and accelerates time to market.

The program delivers 30% faster product launches with 40% fewer post-release bugs compared to traditional approaches. By systematically validating product assumptions throughout the process, teams can confidently move from exploration to execution.

Weeks 1 – 2: Define & Discover, From Assumptions to Business Hypotheses

The first two weeks transform vague AI ambitions into testable business hypotheses. This phase directly addresses opinion-driven decision making by creating structured processes for surfacing and validating product assumptions.

Week 1: Alignment & Problem Definition

SmartDev’s discovery kickoff sessions bring cross-functional stakeholders together through collaborative workshops that define business problems and desired outcomes, clarify success metrics (both business and product), surface existing assumptions and constraints, and establish communication norms and decision channels.

This initial week focuses on stakeholder alignment, ensuring all parties understand not just what will be built, but why it matters and how success will be measured. The emphasis on data-driven product decisions begins here, as teams document their assumptions explicitly rather than leaving them implicit.

Stakeholder interviews follow, conducted individually with each stakeholder group. These interviews focus on understanding perspectives, expectations, and priorities while identifying hidden assumptions, variations in success definitions, organizational dependencies, and unspoken constraints.

The team simultaneously conducts current state and system reviews, examining existing systems, data architecture, and dependencies. This grounds conversations in reality and prevents optimistic planning.

Week 1 Outcome: A Project Charter documenting problem definition, success criteria, stakeholder roles, initial value hypotheses, and identified risks. All stakeholders sign off, creating a shared foundation for evidence-based product discovery.

Week 2: Evidence-based Hypothesis Formation

The team transitions from alignment to validation planning. Collaborative workshops explore current business processes, user journeys, and pain points. Critically, the team defines AI business impact metrics tied to cost reduction, efficiency, or revenue, shifting conversations from “can we build this?” to “what business outcome will this drive?”

User personas move beyond generic archetypes to specific, research-grounded profiles. Who is the target user? What keeps them up at night? Why would they adopt this AI solution? These personas become the foundation for all design and development decisions.

Week 2 Outcome: Clearly scoped AI experiments with measurable outcomes. Hypothesis prioritization identifies the riskiest assumptions requiring validation first, preventing months of development based on false beliefs.

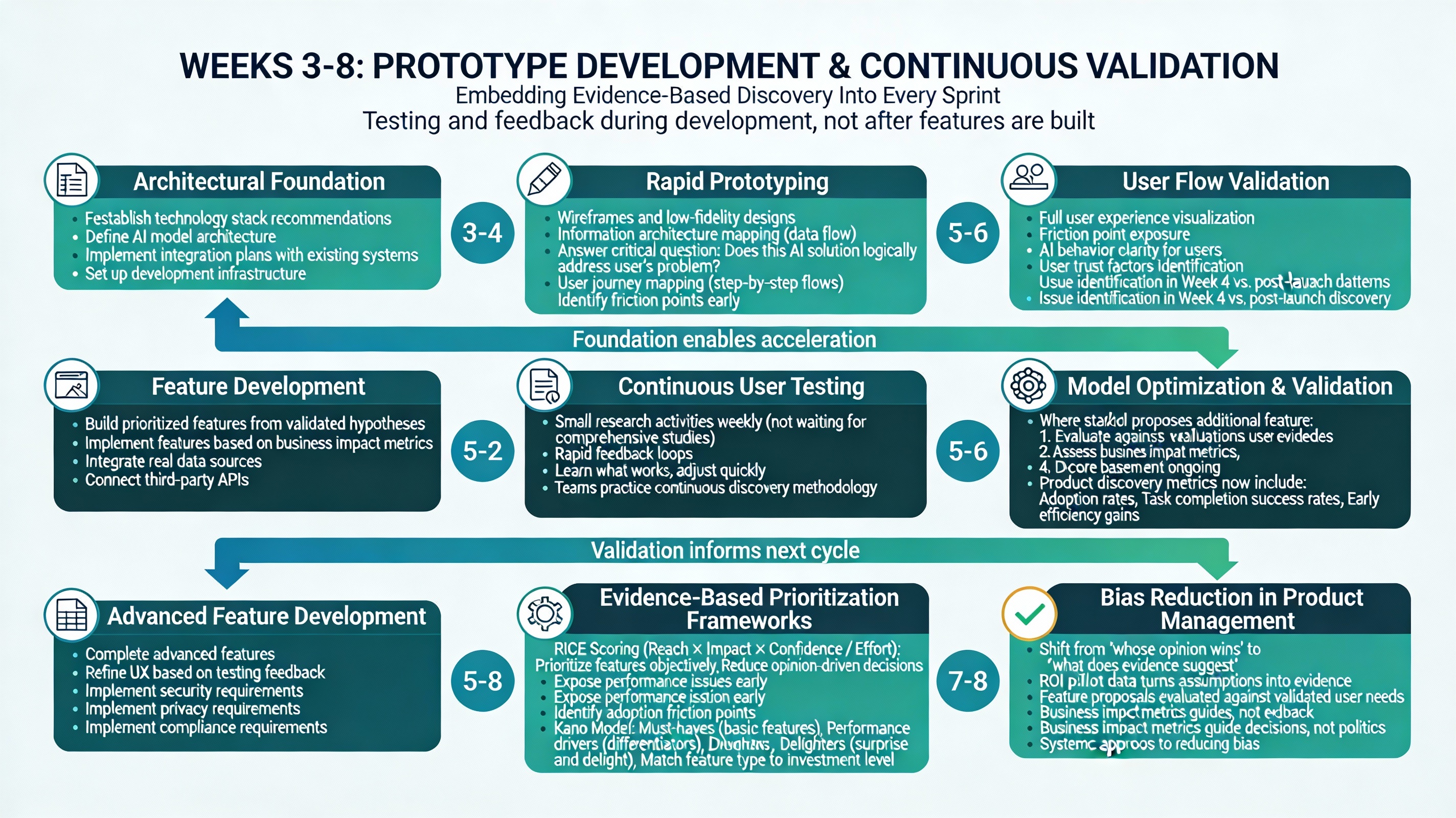

Weeks 3 – 8: Prototype Development, Continuous Validation Through Evidence-based Product Discovery

Unlike traditional development where testing happens after features are built, Weeks 3-8 embed assumption validation into every sprint. This phase focuses development on high-impact workflows that directly move AI business impact metrics, ensuring every feature contributes to measurable outcomes.

Weeks 3 – 4: Core Architecture & Initial Prototype

Development begins by setting the architectural foundation. The team establishes technology stack recommendations, defines AI model architecture, and implements integration plans with existing systems.

Simultaneously, rapid prototyping begins. Wireframes and low-fidelity designs focus on information architecture (how data flows through the system) rather than just visual polish. This answers the critical question: “Does this AI solution logically address the user’s problem?”

User journey mapping outlines the step-by-step flows users take to solve problems with the AI product. This exposes friction points early. When the team visualizes the full user experience, they often discover moments where the flow breaks down, the AI behavior confuses users, or user trust is undermined. Identifying these issues in Week 4 costs far less than discovering them after launch.

Weeks 3 – 4 Outcome: A functioning technical foundation with initial AI model implementation and clickable prototypes simulating core user flows. Early product discovery metrics begin tracking prototype testing results and user feedback patterns.

Weeks 5 – 6: Feature Development & Model Refinement

With the foundation established, development accelerates. The team builds prioritized features based on validated hypotheses, implements AI model training and optimization, and integrates real data sources and third-party APIs.

Critically, this phase includes continuous user testing. Teams practicing continuous discovery conduct small research activities weekly rather than waiting for comprehensive studies. This cadence creates rapid feedback loops where teams learn what works and adjust direction quickly.

AI models are fully embedded and stress tested in operational environments, exposing performance issues, adoption friction, and integration risks early. Through iterative demos with stakeholders, teams observe where value is created, where adoption barriers exist, and whether expected ROI remains achievable.

This ongoing validation exemplifies data-driven product decisions in action: teams continuously compare actual results against predicted outcomes, adjusting course based on evidence rather than pressing forward regardless of feedback.

Weeks 5 – 6 Outcome: A working AI prototype with core features implemented and validated. Ongoing ROI measurement using live pilot data turns assumptions into evidence. Product discovery metrics now include adoption rates, task completion success, and early efficiency gains.

Weeks 7 – 8: Advanced Features & User Experience Polish

The final development sprint focuses on completing advanced features, refining user experience based on testing feedback, and implementing security, privacy, and compliance requirements.

The team prioritizes features using RICE scoring (Reach × Impact × Confidence / Effort) and the Kano Model (must-haves, performance drivers, and delighters).

These frameworks shift conversations from “whose opinion wins” to “what does the evidence suggest about impact and feasibility?” When a stakeholder proposes an additional feature, the team evaluates it against validated user needs and business impact metrics, not political influence. This systematic approach to reducing bias in product management ensures features are selected based on contribution to defined success metrics.
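The scoring formula above (Reach × Impact × Confidence / Effort, commonly called RICE) is simple enough to sketch in a few lines. The following is a minimal illustration, not SmartDev's actual tooling: the `Feature` class, the feature names, the units, and every number are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float       # users affected per quarter (assumed unit)
    impact: float      # relative impact per user, e.g. 0.5 low to 3.0 high (assumed scale)
    confidence: float  # 0.0-1.0, how well evidence supports the estimates
    effort: float      # person-weeks (assumed unit)


def rice_score(feature: Feature) -> float:
    """Reach x Impact x Confidence / Effort, as given in the text."""
    if feature.effort <= 0:
        raise ValueError("effort must be positive")
    return feature.reach * feature.impact * feature.confidence / feature.effort


# Hypothetical candidate features for illustration only.
features = [
    Feature("auto_summary", reach=500, impact=1.0, confidence=0.5, effort=2),
    Feature("smart_search", reach=800, impact=2.0, confidence=0.8, effort=4),
]

# Highest score first: the ranking is driven by the inputs, not by who proposed the feature.
ranked = sorted(features, key=rice_score, reverse=True)
for f in ranked:
    print(f"{f.name}: {rice_score(f):.1f}")
```

Because the confidence term scales the score down when estimates lack evidence, a proposal backed only by opinion naturally ranks below one backed by research, which is the behavior the paragraph above describes.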

Weeks 7 – 8 Outcome: A production-ready AI prototype with all core functionality validated through user testing, AI models optimized for performance and accuracy, and complete technical documentation. Accumulated product discovery metrics provide clear evidence of which features drive user value.

Weeks 9 – 10: Rollout & Evaluation, From Metrics to ROI Decisions

The final two weeks transform collected metrics into clear ROI insights and implementation roadmaps.

This is where early AI ROI measurement enables confident decision making about scaling, pivoting, or stopping the initiative.

Week 9: Comprehensive Testing & Performance Validation

The team conducts rigorous testing across multiple dimensions: performance validation against predefined success criteria, user acceptance testing with target user groups, security and compliance audits, and stress testing under expected production loads.

Simultaneously, the team translates operational metrics into financial impact. If Week 5 testing showed 60% processing time reduction, Week 9 calculates what that means in labor cost savings, increased capacity, or revenue impact.

ROI presentations outline conservative scenarios (minimum observed performance), expected scenarios (actual pilot results), and upside scenarios (reasonable improvements as the system matures). This acknowledges uncertainty while increasing credibility.
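The translation from an operational metric to financial impact under three scenarios can be sketched as below. This is an illustrative calculation under stated assumptions, not SmartDev's ROI model: only the 60% time reduction comes from the example in the text, while the hours, hourly cost, investment figure, and the conservative and upside percentages are hypothetical placeholders.

```python
# All inputs except the 60% pilot result are hypothetical placeholders.
HOURS_PER_YEAR = 10_000   # assumed staff hours spent on the process today
HOURLY_COST = 40.0        # assumed fully loaded labor cost per hour
INVESTMENT = 150_000.0    # assumed cost of scaling the solution

# Fraction of processing time saved under each scenario.
SCENARIOS = {
    "conservative": 0.45,  # assumed minimum observed performance
    "expected": 0.60,      # the pilot result used as an example in the text
    "upside": 0.70,        # assumed improvement as the system matures
}


def annual_labor_savings(time_reduction: float) -> float:
    """Labor cost saved per year for a given fractional time reduction."""
    return HOURS_PER_YEAR * HOURLY_COST * time_reduction


def first_year_roi(time_reduction: float) -> float:
    """Simple first-year ROI: (savings - investment) / investment."""
    savings = annual_labor_savings(time_reduction)
    return (savings - INVESTMENT) / INVESTMENT


roi_by_scenario = {name: first_year_roi(r) for name, r in SCENARIOS.items()}

for name, roi in roi_by_scenario.items():
    savings = annual_labor_savings(SCENARIOS[name])
    print(f"{name:>12}: savings ${savings:,.0f}, first-year ROI {roi:.0%}")
```

Presenting all three rows side by side makes the uncertainty explicit: the decision to scale should still hold under the conservative row, not only the expected one.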

Week 9 Outcome: Comprehensive validation results with clear before-and-after comparisons. Supporting product discovery metrics like time to value, user adoption rates, and AI stability explain why outcomes were achieved.

Week 10: Strategic Roadmap & Implementation Planning

The final week synthesizes learnings into actionable strategic outputs. The team creates a phased implementation roadmap (MVP, V1.0, V2.0), defines scaling requirements, and establishes ongoing monitoring processes.

Every roadmap decision links back to specific research findings. When feature X ranks higher than feature Y, documentation explains: “8 out of 12 interview participants mentioned pain point X vs. only 3 mentioning pain point Y.” This prevents stakeholders from reopening debates with opinions, ensuring decisions remain grounded in evidence-based product discovery.

Week 10 Outcome: Complete implementation package including validated prototype, strategic roadmap for 6-12 months, technical architecture, ROI analysis with clear recommendations, and team transition documentation: everything needed for confident scaling investment.

What Your Business Gets: Beyond the Documents

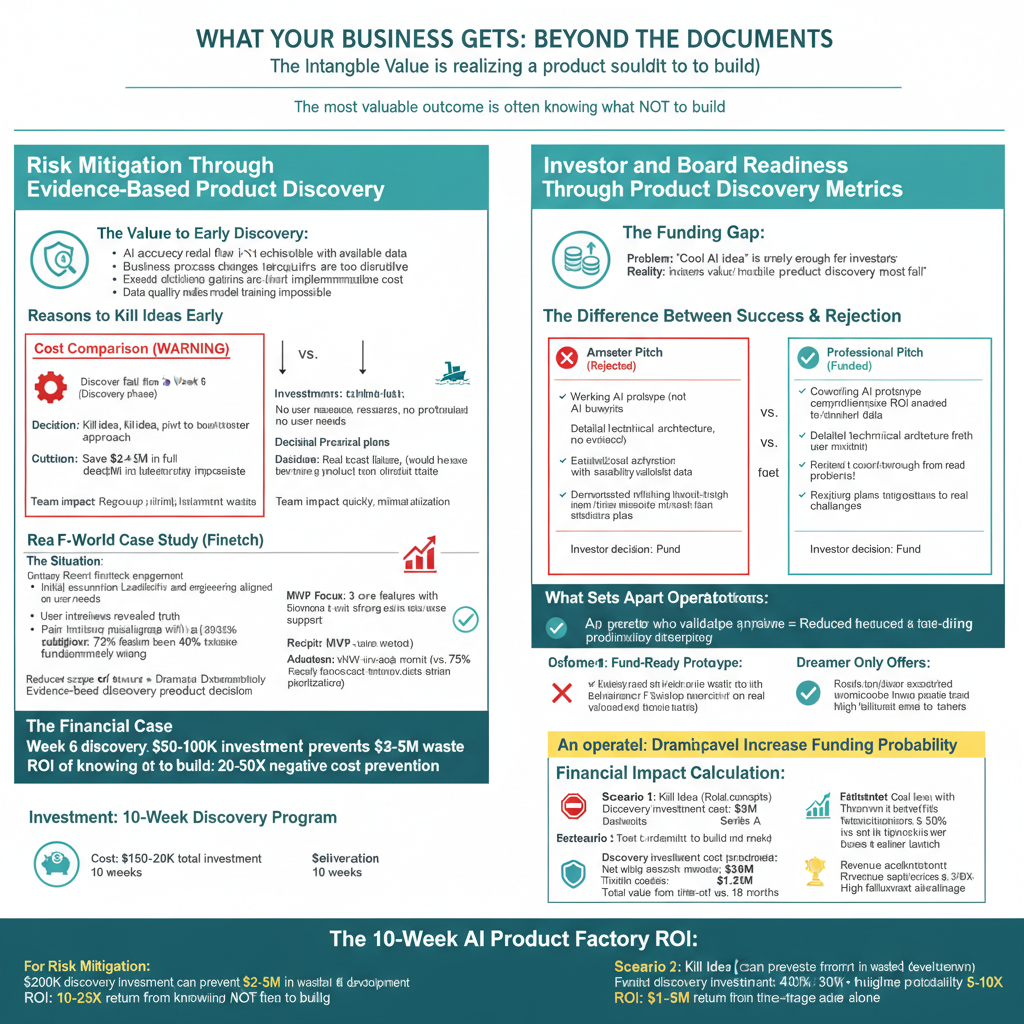

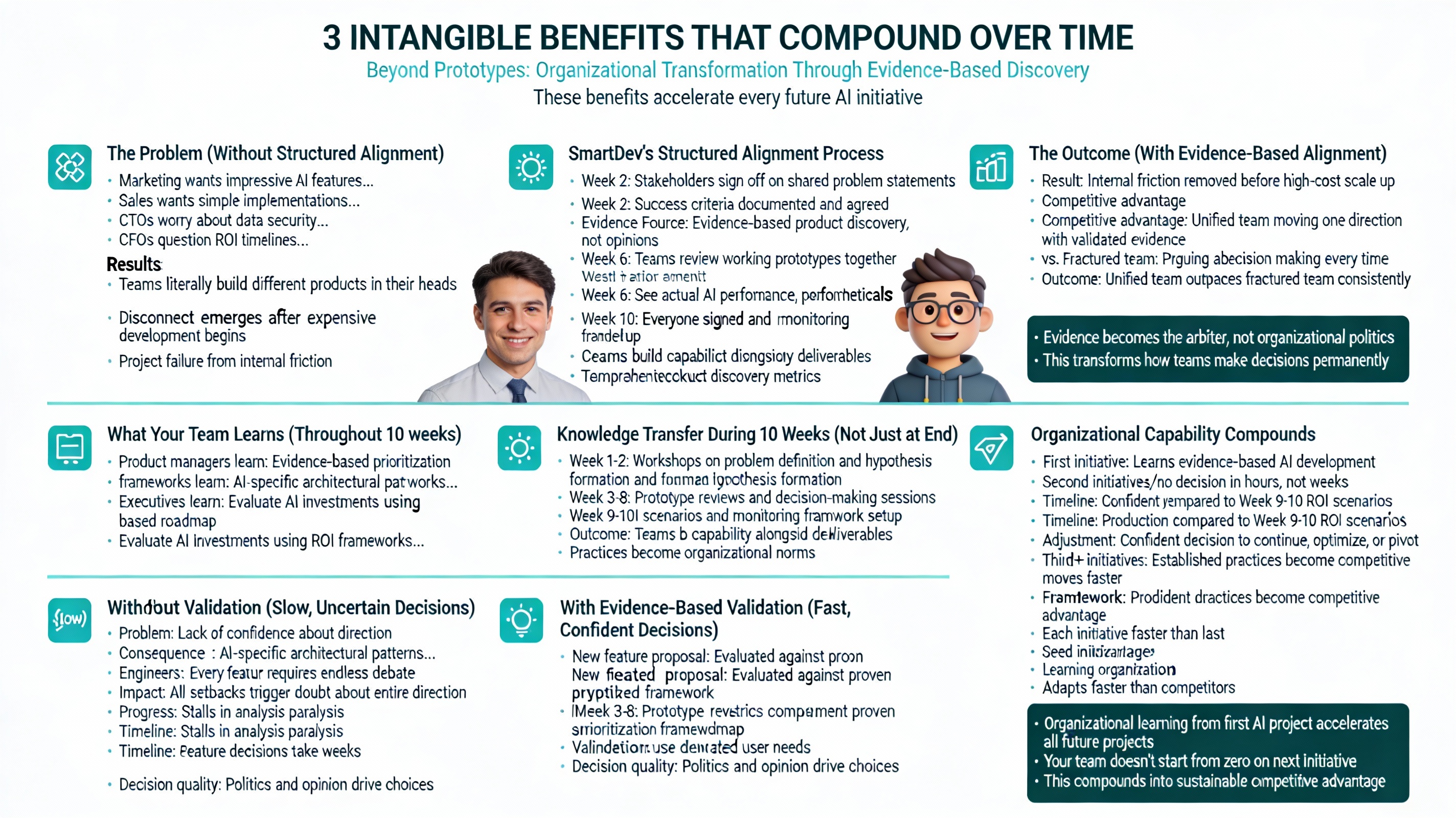

While the deliverables are critical, the intangible value to your business from adopting data-driven product decisions is often even greater. SmartDev’s 10-Week AI Product Factory fundamentally transforms how your organization approaches AI product development.

1. Risk Mitigation at the Right Stage Through Evidence-based Product Discovery

Sometimes, the best outcome of evidence-based product discovery and prototyping is the realization that a product shouldn’t be built, at least not in its current form. This might happen because the AI accuracy required isn’t achievable with available data, the business process changes required for adoption are too disruptive, or the expected efficiency gains don’t justify the implementation cost.

Discovering a fatal flaw in Week 6 through systematic validation of product assumptions costs tens of thousands of dollars. Discovering it in Month 18 of full production development costs millions of dollars and destroys team morale. SmartDev’s 10-week process acts as a safety filter for your capital, giving you permission to kill bad ideas or pivot to better approaches before they destroy your AI strategy.

In a recent fintech engagement, SmartDev’s discovery process (Weeks 1-2) revealed that stakeholder assumptions about user needs were fundamentally misaligned. Evidence from user interviews showed pain points neither leadership nor engineering had prioritized. By Week 10, the team delivered a validated MVP focusing on three core features with strong evidence support, eliminating speculative additions that would have consumed 40% more development time. The result: MVP development completed 40% faster due to reduced scope churn, and feature adoption reached 73% within one month, nearly double the historical average, demonstrating the power of data-driven product decisions.

2. Investor and Board Readiness Through Product Discovery Metrics

For startups seeking funding or enterprises requesting a budget for AI initiatives, a “cool AI idea” is rarely enough. Investors and executives have heard thousands of AI pitches; most fail to demonstrate clear business value through credible product discovery metrics.

Walking into a pitch meeting with a working AI prototype, comprehensive ROI analysis, detailed technical architecture, and validated user research separates you from the amateurs. It demonstrates that you are an operator who understands AI execution through evidence-based product discovery, not just a dreamer with a PowerPoint deck of AI buzzwords.

It shows you’ve thought through the hard problems (data quality, model accuracy, user adoption, scalability) and have realistic plans to solve them grounded in data-driven product decisions. This is the difference between a rejected pitch and a funded initiative. The 10-week investment in validation dramatically increases your probability of securing scaling investment.

3. Stakeholder Alignment That Actually Lasts

Internal conflict kills AI momentum faster than any external competitor. You have competing visions: Marketing wants impressive AI features for differentiation; Sales wants simple implementations that won’t confuse customers; CTOs worry about data security and model governance; CFOs question ROI timelines.

Without structured alignment through a product decision-making framework, each group builds toward its own version of the product, and the gap surfaces only after expensive development begins. SmartDev’s 10-week process forces these conversations to happen early, in a structured way, with evidence as the arbiter rather than organizational politics.

By Week 2, stakeholders have signed off on shared problem statements and success criteria through evidence-based product discovery. By Week 6, they’re reviewing working prototypes together, seeing actual AI performance rather than debating hypotheticals. By Week 10, everyone has signed off on the same evidence-based roadmap validated through comprehensive product discovery metrics. The internal friction is removed before the high-cost scale-up begins.

A unified team moving in one direction with validated evidence will outpace a fractured team arguing about opinions every time, demonstrating the competitive advantage of data-driven product decisions.

4. Organizational Learning That Compounds

Perhaps the most valuable outcome is what your team learns during the 10-week process about reducing bias in product management and making data-driven product decisions. Your product managers learn evidence-based prioritization frameworks they’ll use on every future initiative. Your engineers learn AI-specific architectural patterns and best practices. Your executives learn how to evaluate AI investments using ROI frameworks rather than hype.

This organizational capability around evidence-based product discovery compounds over time. Teams that master evidence-based AI product development build learning organizations that adapt faster than competitors. When the next AI opportunity emerges, your team doesn’t start from zero; they apply the proven product decision-making framework and move faster.

SmartDev’s process includes knowledge transfer throughout the 10 weeks, not just at the end. Your team participates in workshops, prototype reviews, and decision-making sessions, building their capability alongside the deliverables and internalizing practices for validating product assumptions.

5. Decision Confidence That Enables Speed

The ultimate business value is speed enabled by confidence through data-driven product decisions. Without the 10-week validation process using evidence-based product discovery, AI decisions become slow because stakeholders lack confidence. Every feature requires endless debate. Every setback triggers doubt about the entire direction. Progress stalls in analysis paralysis.

With validated evidence from the 10-week process and established product discovery metrics, decisions become fast. When someone proposes adding a new AI feature, the team evaluates it against the proven prioritization framework, checks if it aligns with validated user needs, and makes a confident yes/no decision in hours, not weeks.

When early production metrics come in below expectations, the team refers back to the Week 9-10 ROI scenarios, checks if performance falls within expected ranges, and makes a confident decision to continue, optimize, or pivot based on the product decision-making framework.

Evidence-based product discovery creates organizations where the best idea wins regardless of who proposed it, where changing direction based on data is celebrated rather than seen as failure, and where psychological safety enables honest discussion of uncertainty and risk through systematic bias reduction in product management.

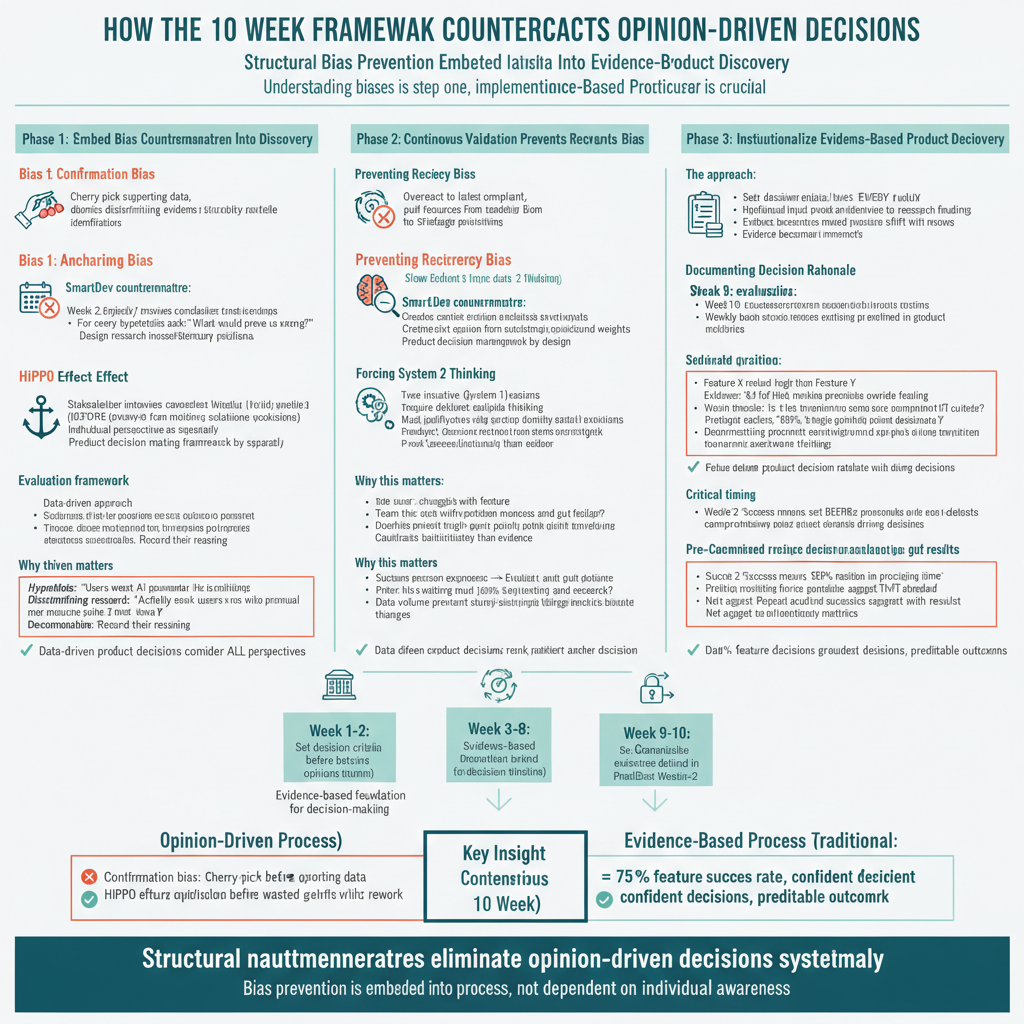

How the 10-Week Framework Counteracts Opinion-Driven Decisions

Understanding biases is the first step; implementing countermeasures through a structured product decision-making framework is crucial. SmartDev’s 10-Week AI Product Factory structurally prevents the cognitive biases that undermine AI product development by embedding bias-reduction practices throughout the engagement.

Structural Bias Prevention in Weeks 1-2: Alignment & Discovery

The discovery phase embeds bias countermeasures directly into evidence-based product discovery processes:

Combating Confirmation Bias: Week 2 explicitly requires teams to identify disconfirming evidence as part of validating product assumptions. For every hypothesis, the team asks, “What would prove us wrong?” and designs research investigating that question. If the hypothesis is “users want AI-powered recommendations,” the research actively seeks users who might prefer manual selection and documents their reasoning, ensuring data-driven product decisions consider all perspectives.

Preventing Anchoring Bias: Stakeholder interviews in Week 1 are conducted individually before group workshops, preventing the first person’s opinion from anchoring subsequent discussions. Only after individual perspectives are documented does the team synthesize in collaborative sessions where evidence, not speaking order, determines priority within the product decision-making framework.

Countering HiPPO Effects: The Week 1 Project Charter establishes decision criteria and weights before discussing specific solutions, which reduces bias in product management. When the most senior person later expresses a preference, the team evaluates it against pre-defined criteria: “Does this align with our validated user personas?” “Does this address high-priority pain points from research?” Rank becomes less influential than evidence in data-driven product decisions.

Continuous Validation in Weeks 3-8: Prototype Development

The iterative sprint model prevents recency bias and overconfidence through systematic evidence-based product discovery:

Preventing Recency Bias: Weekly user testing throughout Weeks 3-8 creates consistent feedback streams, preventing overreaction to the latest complaint. When one user struggles with a feature, the team uses product discovery metrics to check whether that feedback reflects a pattern across multiple users or is an outlier. Data volume prevents single data points from triggering costly direction changes, enabling stable data-driven product decisions.

Forcing System 2 Thinking: Two-week sprint planning sessions require slow, deliberate, analytical thinking about priorities within the product decision-making framework. Teams can’t rely on intuition and gut feeling when they must justify every sprint’s priority against validated hypotheses and success metrics. This structured forcing function counteracts the natural tendency toward fast, heuristic-based System 1 thinking, a practical way of reducing bias in product management.
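The pattern-versus-outlier check described under recency bias can be sketched as a simple share-of-sessions calculation. This is a minimal illustration rather than a prescribed method: the 30% threshold, the issue tags, and the session data are all hypothetical.

```python
from collections import Counter


def issue_shares(sessions):
    """Share of test sessions in which each issue tag was observed."""
    # set(session) ensures a tag counts once per session, even if noted twice.
    counts = Counter(tag for session in sessions for tag in set(session))
    return {tag: n / len(sessions) for tag, n in counts.items()}


def recurring_issues(sessions, min_share=0.3):
    """Issues seen in at least `min_share` of sessions (threshold is an assumption)."""
    return {tag for tag, share in issue_shares(sessions).items() if share >= min_share}


# Ten hypothetical user-testing sessions, each a list of observed issue tags.
sessions = [
    ["confusing_confidence_score"],
    ["confusing_confidence_score", "slow_upload"],
    [],
    ["confusing_confidence_score"],
    [],
    ["confusing_confidence_score"],
    [], [], [], [],
]

print(recurring_issues(sessions))  # only issues that clear the threshold
```

Here the confidence-score confusion appears in 4 of 10 sessions and clears the threshold, while the single slow-upload report does not, so only the former would justify a change of direction.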

Evidence-based Decisions in Weeks 9-10: Evaluation & Roadmapping

The final weeks institutionalize evidence-based product discovery and data-driven product decisions:

Documenting Decision Rationale: Week 10’s roadmap links every prioritization decision to specific research findings through the product decision-making framework. When feature X is ranked higher than feature Y, the documentation explains: “8 out of 12 interview participants mentioned pain point X unprompted vs. 3 mentioning pain point Y” and “Prototype testing showed 85% task completion rate for X vs. 62% for Y.” Future stakeholders can’t reopen debates with opinions because the evidence-based decision rationale is explicit and shows how each product assumption was validated.

Pre-Commitment to Success Criteria: Week 9 evaluation measures results against success criteria defined in Weeks 1-2, before the team knew what the results would be. This prevents retroactively moving goalposts or cherry-picking favorable metrics. If Week 2 established “success means 50% reduction in processing time,” Week 9 reports actual results against that standard using product discovery metrics, honestly acknowledging whether the target was met.
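One way to make pre-commitment concrete is to record each criterion as an immutable value and evaluate results against it later. The sketch below assumes a hypothetical `SuccessCriterion` record and a made-up observed result of 42%; only the 50% target comes from the example in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: the target cannot be edited after it is recorded
class SuccessCriterion:
    metric: str
    target: float          # minimum acceptable value
    defined_in_week: int


def evaluate(criterion: SuccessCriterion, observed: float) -> dict:
    """Report the observed result against the pre-committed target."""
    return {
        "metric": criterion.metric,
        "target": criterion.target,
        "observed": observed,
        "met": observed >= criterion.target,
    }


# Week 2: "success means 50% reduction in processing time" (from the text).
criterion = SuccessCriterion("processing_time_reduction", target=0.50, defined_in_week=2)

# Week 9: hypothetical measured result.
result = evaluate(criterion, observed=0.42)
print(result)
```

Freezing the record is a small structural guard: assigning a new `target` raises an error, so lowering the bar after the fact requires creating a visibly new criterion rather than quietly editing the old one.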

Creating a Decision Quality Culture Through Evidence-based Product Discovery

The cumulative effect of these structural bias countermeasures embedded in the product decision making framework is a fundamental shift in organizational culture around reducing bias in product management. Teams that complete the 10-week process learn that:

- Evidence is the arbiter: When stakeholders disagree, they don’t escalate to the highest-ranking person; they gather more data through evidence-based product discovery.

- Uncertainty is acceptable: Documenting assumptions and confidence levels through validating product assumptions is valued over projecting false certainty.

- Learning is celebrated: Discovering that Week 3 assumptions were wrong and pivoting in Week 5 based on evidence is seen as successful experimentation, not failure, enabling data-driven product decisions.

- Process beats politics: Following the evidence-based product discovery framework produces better outcomes than organizational influence.

These cultural shifts persist long after the 10-week program ends, improving decision quality across all future initiatives and establishing data-driven product decisions as the organizational standard.

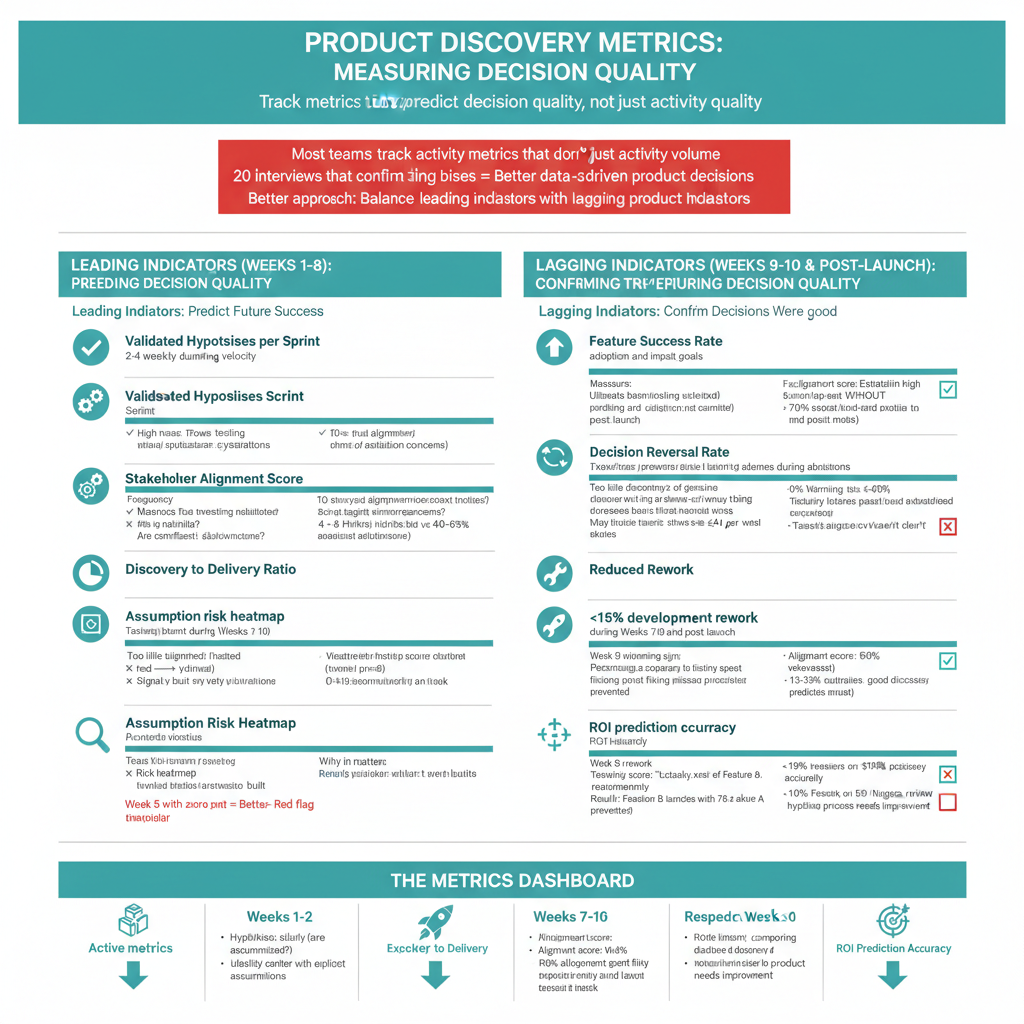

Product Discovery Metrics: Measuring Decision Quality Throughout the 10 Weeks

Most teams track activity metrics that don’t predict decision quality. Teams feel productive conducting 20 interviews or writing 50 user stories, but if those activities confirm existing biases or test wrong assumptions, the activity doesn’t translate to better data-driven product decisions. Better product discovery metrics balance leading indicators with lagging indicators.

SmartDev’s 10-Week AI Product Factory tracks metrics that actually predict and measure decision quality through systematic evidence-based product discovery, balanced across leading indicators (predicting future success) and lagging indicators (confirming decisions were good).

Leading Indicators (Weeks 1-8): Predicting Decision Quality

Validated Hypotheses per Sprint: Target 2-4 weekly during Weeks 3-8 as a core product discovery metric. This indicates healthy learning velocity where teams are actually testing assumptions, not just building features. If Week 5 passes with zero hypotheses validated, it signals the team is executing based on untested assumptions rather than data-driven product decisions.

Stakeholder Alignment Score: Surveyed weekly during Weeks 1-10 as part of reducing bias in product management, measuring whether stakeholders understand priorities, agree with rationale, and feel confident in direction. Scores below 7/10 trigger alignment sessions before misalignment compounds. This metric tracks the effectiveness of the product decision-making framework in maintaining consensus.

Discovery to Delivery Ratio: For new AI products, target 40-60% of time in discovery and validation (Weeks 1-6) versus execution (Weeks 7-10) as part of evidence-based product discovery. Too little discovery risks building wrong things; too much delays market learning. The 10-week model balances this precisely through its structured product decision-making framework.

Assumption Risk Heatmap: Tracked throughout Weeks 1-8 as a key product discovery metric, mapping which critical assumptions have been validated (green), are in testing (yellow), or remain untested (red). Week 5 reviews ensure high-risk assumptions are validated before dependent features are built, supporting data-driven product decisions and systematic validation of product assumptions.
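Two of the leading indicators above lend themselves to a small sketch: the alignment-score trigger and the heatmap gate on dependent features. The 7/10 threshold and the green/yellow/red statuses come from the text; averaging the survey scores, the data layout, and all assumption and feature names are illustrative assumptions.

```python
from enum import Enum


class Status(Enum):
    VALIDATED = "green"
    IN_TESTING = "yellow"
    UNTESTED = "red"


ALIGNMENT_THRESHOLD = 7.0  # from the text: scores below 7/10 trigger an alignment session


def needs_alignment_session(scores):
    """True if the mean weekly survey score (0-10) is below the threshold.

    Using the mean is an assumption; a team could equally use the minimum.
    """
    return sum(scores) / len(scores) < ALIGNMENT_THRESHOLD


def blocked_features(assumptions, dependencies):
    """Features that depend on a high-risk assumption not yet validated."""
    blocked = {}
    for feature, needed in dependencies.items():
        open_risks = [
            name for name in needed
            if assumptions[name]["risk"] == "high"
            and assumptions[name]["status"] is not Status.VALIDATED
        ]
        if open_risks:
            blocked[feature] = open_risks
    return blocked


# Hypothetical heatmap and feature dependencies.
assumptions = {
    "users_trust_ai_output": {"risk": "high", "status": Status.IN_TESTING},
    "data_is_clean_enough": {"risk": "high", "status": Status.VALIDATED},
    "users_want_export": {"risk": "low", "status": Status.UNTESTED},
}
dependencies = {
    "auto_approve": ["users_trust_ai_output", "data_is_clean_enough"],
    "csv_export": ["users_want_export"],
}

print(needs_alignment_session([8, 6, 7, 6]))
print(blocked_features(assumptions, dependencies))
```

In this example `auto_approve` would be held back until the trust assumption turns green, while `csv_export` can proceed because its only open assumption is low risk.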

Lagging Indicators (Weeks 9-10 and Post-Launch): Confirming Decision Quality

Feature Success Rate: Measured in Week 9-10 pilot testing and post-launch as the ultimate product discovery metric. Target 60-80% of features achieving adoption and impact goals, dramatically higher than the 20-40% typical without structured evidence-based product discovery. This is the clearest test of predictive accuracy in data-driven product decisions.

Decision Reversal Rate: Target 20-40% of initial concepts modified or abandoned during Weeks 1-10 as evidence that product assumptions are genuinely being validated. This signals real testing rather than validation theater that confirms predetermined conclusions. If zero concepts change, the process isn’t actually testing assumptions through evidence-based product discovery.

Reduced Rework: Tracked as a percentage of development capacity. Target <15% rework during Weeks 7-10 and post-launch scale-up. Decreases indicate evidence-based product discovery captured requirements accurately upfront rather than discovering gaps during development, demonstrating effective implementation of the product decision-making framework.

ROI Prediction Accuracy: Measured by comparing Week 2 ROI hypotheses to Week 9 validated results. Target ±30% accuracy. If Week 2 predicted 50% efficiency improvement and Week 9 validated 38%, that’s within an acceptable range for data-driven product decisions. If Week 9 shows only 10%, the evidence-based product discovery process needs improvement.
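The lagging indicators above reduce to a few ratios, sketched below. Treating the ±30% target as a relative error against the prediction is an assumption (the text does not say whether it is relative or in percentage points); the function names and the sample feature and concept data are hypothetical, while the 50%, 38%, and 10% figures come from the example in the text.

```python
def share(flags):
    """Fraction of True values in a list of booleans."""
    flags = list(flags)
    return sum(flags) / len(flags)


def roi_prediction_error(predicted, actual):
    """Relative error of the validated result against the prediction."""
    return (actual - predicted) / predicted


def within_tolerance(predicted, actual, tolerance=0.30):
    """True if the relative error is inside the +/-30% target band."""
    return abs(roi_prediction_error(predicted, actual)) <= tolerance


# Hypothetical pilot data: did each feature meet its adoption and impact goals?
feature_met_goals = [True, True, False, True, True]
# Hypothetical concept log: was each initial concept modified or abandoned?
concept_changed = [True, False, False, True, False, False, False, False, True, False]

feature_success_rate = share(feature_met_goals)
decision_reversal_rate = share(concept_changed)

print(f"feature success rate:   {feature_success_rate:.0%}")
print(f"decision reversal rate: {decision_reversal_rate:.0%}")
print(f"50% predicted vs 38% validated: {roi_prediction_error(50, 38):+.0%}")
print(f"50% predicted vs 10% validated: {roi_prediction_error(50, 10):+.0%}")
```

Under this reading, a validated 38% against a predicted 50% is a 24% shortfall and stays inside the band, while a validated 10% is an 80% shortfall and falls well outside it, matching the two cases in the paragraph above.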

How Product Discovery Metrics Drive Continuous Improvement

SmartDev reviews these product discovery metrics with clients at the Week 5 checkpoint and Week 10 retrospective. When metrics trend positively, the team identifies contributing practices to replicate, strengthening the product decision-making framework. When they decline, the team diagnoses whether issues stem from discovery quality, execution problems, or external factors, which helps reduce bias in product management.

The goal isn’t hitting arbitrary targets but establishing feedback loops enabling continuous improvement in evidence-based product discovery. Organizations that track these metrics across multiple AI initiatives compound their capability, getting better at data-driven product decisions with each project.

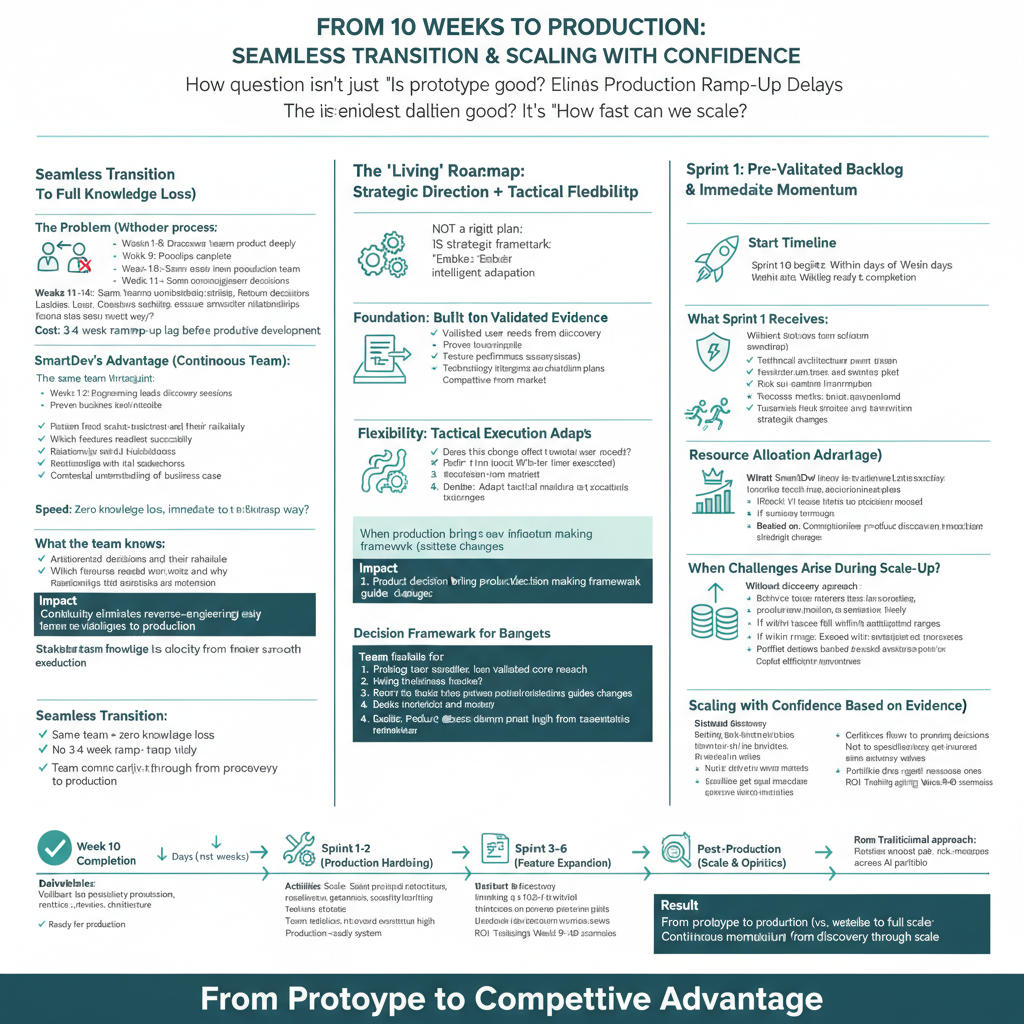

From 10 Weeks to Production: What Happens Next

You’ve finished SmartDev’s 10-Week AI Product Factory with comprehensive product discovery metrics and validated data-driven product decisions. You have your production-ready prototype, your evidence-based roadmap, your ROI analysis, and your technical architecture. The question now is: What comes next?

Seamless Transition to Full Production

Because SmartDev’s engineering leads were involved from discovery (Weeks 1-2) through prototyping (Weeks 3-8) to roadmap creation (Week 10), the transition to full production is seamless. There is no knowledge loss during a “handover” to a different team who must learn the product decision-making framework from scratch.

The team that built the prototype through evidence-based product discovery understands the architectural decisions, knows which features validated successfully and which needed pivots, and has relationships with your stakeholders. This continuity accelerates full production development by eliminating the 3-4 week ramp-up lag typical when new teams must reverse-engineer prior data-driven product decisions.

The “Living” Roadmap Enables Adaptive Execution

The Week 10 roadmap isn’t a rigid plan; it’s a strategic framework enabling intelligent adaptation grounded in evidence-based product discovery. Because the roadmap is built on validated evidence about user needs and business impact through validating product assumptions, you can be flexible on tactical execution while staying true to strategic direction.

When production brings new insights (a feature performs better than expected, a technology integration proves harder than planned, a competitor launches a similar capability), the product decision-making framework provides the structure for evaluating these changes. Does this change affect validated core user needs? Does it impact the proven business case? The “North Star” from the 10-week validation allows adaptive execution without chaos, maintaining data-driven product decisions.

Immediate Sprint Starts with Pre-Validated Backlog

With the backlog defined during the 10 weeks, production Sprint 1 often starts within days of completing Week 10. There is no multi-week “requirements gathering” lag where developers try to understand what to build. Your development team hits the ground running with prioritized user stories, technical architecture, and validated prototypes ready for production hardening based on the product decision-making framework.

This momentum is invaluable. Teams that maintain velocity from validation through production reach market faster and build confidence through consistent progress enabled by data-driven product decisions. The psychological benefit of momentum often matters as much as time savings.

Scaling with Confidence Based on Evidence-based Product Discovery

Perhaps most importantly, the 10-week validation provides the evidence needed for confident scaling decisions through comprehensive product discovery metrics. Leadership isn’t betting on untested assumptions when committing to full production. They’re investing in solutions proven to deliver business value during the pilot through rigorous validation of product assumptions.

When challenges inevitably arise during scale-up (they always do), the team refers back to Week 9-10 ROI scenarios and risk assessments. If issues fall within anticipated ranges, the team executes mitigation plans calmly based on the product decision-making framework. If issues fall outside expected ranges, the team escalates appropriately without panic.

Evidence-based product discovery means resources flow to proven AI capabilities, not speculative experiments. This dramatically improves capital efficiency across the AI portfolio through systematic data-driven product decisions.

Conclusion: Stop Guessing, Start Building With Evidence-based Product Discovery

SmartDev’s 10-Week AI Product Factory ensures every dollar is invested in validated capability. You walk away with a working prototype, proven ROI, validated user experience, technical architecture, and execution confidence. Your team knows what to build, why to build it, how to build it, and what business value it creates.

In a world where AI decisions are high-stakes bets under uncertainty, the question isn’t whether you can afford evidence-based product discovery; it’s whether you can afford not to. Organizations that master this capability become learning organizations that adapt faster than competitors, compounding advantages over time.

Ready to transform how your team makes AI product decisions?

Explore SmartDev’s 10-Week AI Product Factory or learn how SmartDev accelerates stakeholder alignment in discovery’s first week. Transform your AI ideas into data-driven product decisions and production-ready solutions through our proven product decision-making framework, which emphasizes validating product assumptions, reducing bias in product management, and tracking product discovery metrics throughout.