Artificial intelligence is changing the way businesses operate, creating new opportunities for growth and efficiency. However, as AI becomes more integrated into everyday processes, it’s important to ensure that it’s used safely and responsibly. This is where AI guardrails come into play.

In this post, we’ll explore what AI guardrails are, why they matter, and how businesses can implement them to foster safe and innovative use of AI.

Why AI Guardrails Matter Now

AI has incredible potential to transform industries, but with this power comes responsibility. While AI can streamline operations, reduce costs, and enhance customer experiences, it can also introduce risks, such as bias, data security issues, or even compliance violations if not properly managed.

Arnaud Lucas, Head of Mobile Sensing Intelligence at Cambridge Mobile Telematics, discussed the importance of balancing innovation and safety in AI during his appearance on the Ctrl Shifter podcast. He noted, “Innovation isn’t against safety. It’s about allowing innovation in a safe manner.”

In other words, AI guardrails are not obstacles. They are safety measures that keep AI innovation both effective and secure.

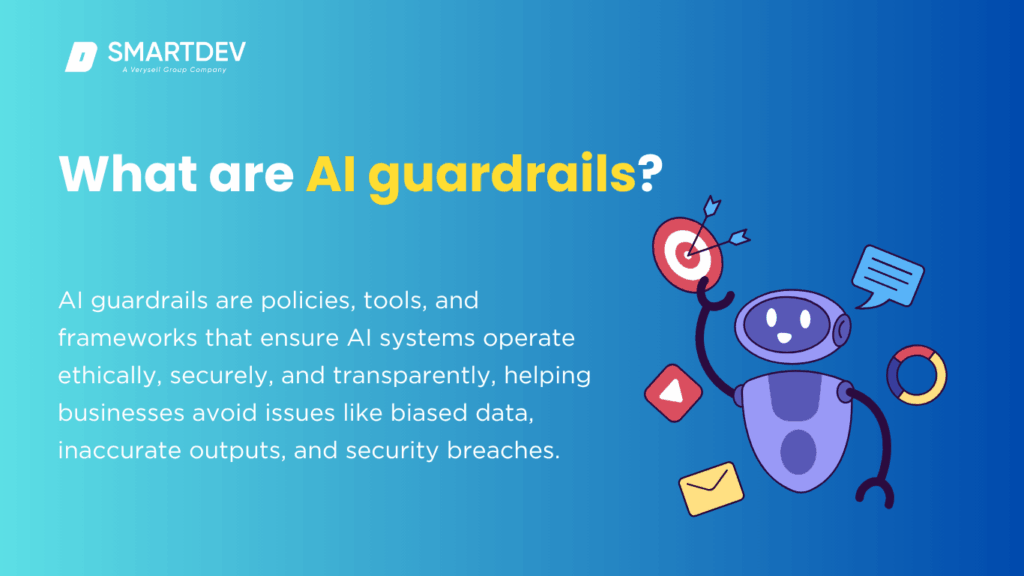

What Are AI Guardrails?

AI guardrails are structures that help businesses use AI responsibly. They are a combination of policies, tools, and frameworks designed to ensure that AI systems operate ethically, securely, and transparently. These guardrails help businesses avoid potential pitfalls, such as biased data, inaccurate AI outputs, or security breaches.

For businesses, implementing AI guardrails means taking steps to ensure that the data used by AI models is clean, secure, and compliant with privacy regulations. It also involves creating frameworks to ensure that AI decisions are transparent and auditable. Ultimately, guardrails allow companies to use AI in a way that is both productive and trustworthy.

The Key Elements of Effective AI Guardrails

To implement effective AI guardrails, businesses should focus on a few key areas:

First, data integrity is essential. The quality of the data feeding AI models directly impacts the accuracy and fairness of AI outputs. Poor or biased data can lead to flawed decisions and undermine trust in AI. Ensuring that data is clean, secure, and compliant with regulations like GDPR is the first step in building AI guardrails.
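
To make the data-integrity step more concrete, here is a minimal Python sketch of a validation pass that runs before records reach a model. It assumes records arrive as dictionaries; the field names in `MODEL_FIELDS` and the email pattern used as a stand-in for personal data are hypothetical. A check like this catches incomplete records and obvious personal data, but on its own it is not a GDPR compliance mechanism.

```python
import re
from dataclasses import dataclass, field

# Hypothetical schema: the only fields the model may see; all are required.
MODEL_FIELDS = ("record_id", "region", "amount")

# Crude stand-in for personal-data detection: flags anything shaped like an email address.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


@dataclass
class ValidationReport:
    accepted: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, reason) pairs


def validate_records(records):
    """Split raw records into model-ready rows and rejected rows with a reason."""
    report = ValidationReport()
    for record in records:
        # A field counts as missing if it is absent or explicitly None.
        missing = [name for name in MODEL_FIELDS if record.get(name) is None]
        if missing:
            report.rejected.append((record, f"missing or null fields: {missing}"))
            continue
        if any(isinstance(v, str) and EMAIL_PATTERN.search(v) for v in record.values()):
            report.rejected.append((record, "possible personal data (email address)"))
            continue
        # Keep only the approved fields so extra columns never reach the model.
        report.accepted.append({name: record[name] for name in MODEL_FIELDS})
    return report
```

Rejecting records with a stated reason, rather than silently repairing them, leaves a trail of what was excluded and why, which also supports the auditability goal described earlier.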

Second, AI models must be transparent. Businesses need to ensure that the way their AI systems make decisions can be explained and understood. This transparency helps build trust with customers and regulators and ensures that AI systems are used responsibly.
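
One practical building block for transparency and auditability is a decision log that records what a model was asked, which version answered, and why. The Python sketch below appends each decision as a JSON line to an in-memory list. The field names and the short human-readable `explanation` string are assumptions for illustration; a production system would write to append-only storage instead of a list.

```python
import json
from datetime import datetime, timezone


def record_decision(audit_log, model_version, inputs, output, explanation):
    """Append one AI decision to an audit log as a JSON line and return the entry."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,  # lets reviewers trace a decision to a specific model
        "inputs": inputs,
        "output": output,
        "explanation": explanation,  # short, human-readable reason for the decision
    }
    # sort_keys gives every entry the same key order, which makes entries easy to diff.
    audit_log.append(json.dumps(entry, sort_keys=True))
    return entry
```

Recording the model version alongside each decision means that when a model is updated, auditors can still tell which version produced an earlier result.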

Third, AI systems need human oversight. Even the best AI systems can make mistakes, such as “hallucinating” plausible but incorrect information. Having a human in the loop to review and validate AI outputs helps catch errors before they lead to negative consequences.
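
A common way to put a human in the loop is a review gate: outputs the system is confident about are released, and the rest wait for a person. The Python sketch below assumes the model returns a confidence score between 0 and 1. That is an assumption, since many models do not produce calibrated scores, and the 0.85 default threshold is arbitrary.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed score in [0, 1]; many models need calibration first


@dataclass
class ReviewGate:
    threshold: float = 0.85  # arbitrary example value
    review_queue: List[ModelOutput] = field(default_factory=list)

    def route(self, output: ModelOutput) -> Optional[str]:
        """Release confident outputs immediately; hold the rest for a human."""
        if output.confidence >= self.threshold:
            return output.text
        self.review_queue.append(output)
        return None

    def resolve(self, output: ModelOutput, corrected_text: Optional[str] = None) -> str:
        """Called by a reviewer: release the held output as-is or with a correction."""
        self.review_queue.remove(output)
        return corrected_text if corrected_text is not None else output.text
```

The threshold is ultimately a business decision: raising it sends more outputs to reviewers and catches more potential errors, while lowering it reduces manual work at the cost of letting more mistakes through.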

Finally, security and access control are critical. AI systems should be protected from unauthorized access, and businesses need to ensure that their AI tools meet applicable compliance standards. This is particularly important for organizations handling sensitive customer data.
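
Access control can start as simply as a deny-by-default permission check in front of any model call that touches sensitive data. In this Python sketch, the roles, permission names, and the `query_model_with_customer_data` function are hypothetical, and the model call itself is replaced by a placeholder string.

```python
# Hypothetical role-to-permission mapping; a real deployment would pull this
# from an identity provider rather than hard-coding it.
ROLE_PERMISSIONS = {
    "analyst": frozenset({"query_model"}),
    "data_steward": frozenset({"query_model", "view_customer_data"}),
    "admin": frozenset({"query_model", "view_customer_data", "update_model"}),
}


class AccessDenied(Exception):
    """Raised when a role lacks a permission it needs."""


def is_allowed(role: str, permission: str) -> bool:
    # Deny by default: unknown roles get an empty permission set.
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


def require_permission(role: str, permission: str) -> None:
    if not is_allowed(role, permission):
        raise AccessDenied(f"role {role!r} lacks permission {permission!r}")


def query_model_with_customer_data(role: str, prompt: str) -> str:
    # Both checks must pass before any customer data reaches the model.
    require_permission(role, "query_model")
    require_permission(role, "view_customer_data")
    return f"[model response to: {prompt}]"  # placeholder for the real model call
```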

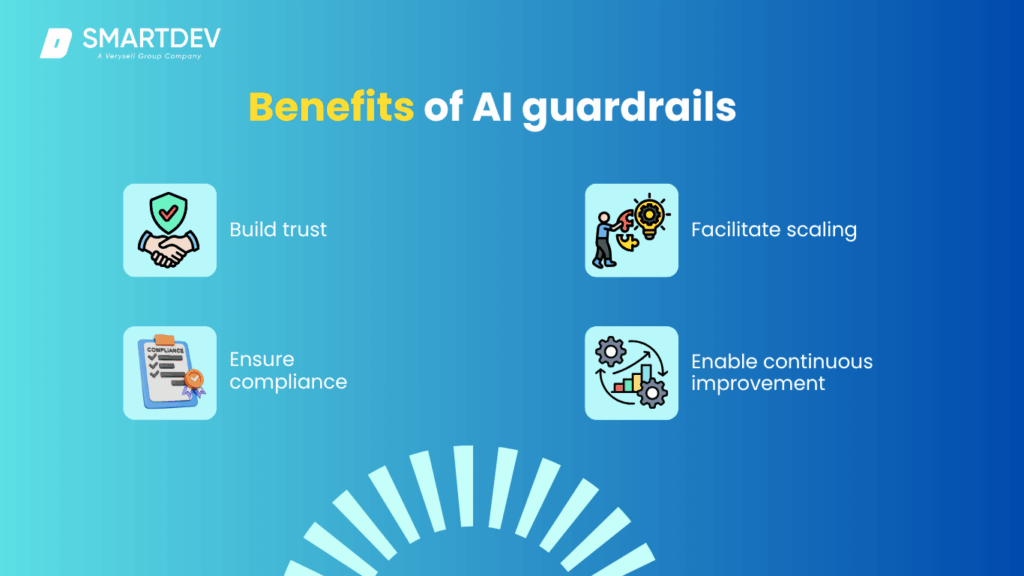

The Benefits of AI Guardrails

AI guardrails offer several benefits. The most obvious is that they help build trust. By ensuring that AI systems are ethical, secure, and transparent, businesses can gain the confidence of customers, employees, and regulators.

Guardrails also help organizations comply with regulations. As AI regulations continue to evolve, having guardrails already in place makes it easier for businesses to adapt quickly and remain compliant.

In addition, AI guardrails make it easier for businesses to scale their AI initiatives. When AI systems are secure and trustworthy from the start, companies can roll out new AI applications with less risk of unexpected problems.

Finally, guardrails enable continuous improvement. As businesses collect more data and refine their AI models, guardrails help ensure that AI systems remain fair, transparent, and compliant as they evolve.

How to Implement AI Guardrails

To get started, businesses need to define their goals for AI adoption. Before implementing AI, it’s important to ask how AI can add value—whether by improving efficiency, enhancing customer experiences, or solving business challenges.

Once the goals are clear, companies should establish frameworks for AI governance. This includes following best practices and guidelines such as the NIST AI Risk Management Framework to help ensure that AI systems are ethical, transparent, and secure.

Collaboration is key. AI should not be implemented in isolation. Leadership teams, data scientists, legal teams, and IT professionals must work together to ensure that governance and compliance are embedded into AI projects from the beginning.

It’s also important to start small. Businesses should pilot AI projects in controlled environments first, testing them internally before expanding to customers. This approach allows organizations to identify potential issues early and refine their processes before scaling up.
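
One way to keep a pilot controlled is a staged feature flag: the AI feature is on only for employees at first, then for a small, stable slice of customers, and finally for everyone. This Python sketch assumes three hypothetical stage names (`internal`, `pilot`, `general`) and hashes the user ID so that each customer consistently lands inside or outside the pilot group.

```python
import hashlib


def in_rollout_bucket(user_id: str, percent: int) -> bool:
    """Deterministically place a user in one of 100 buckets; the first `percent` are enabled."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100
    return bucket < percent


def ai_feature_enabled(user_id: str, is_employee: bool, stage: str, pilot_percent: int = 5) -> bool:
    # Hypothetical stages: internal testing, a small customer pilot, then general availability.
    if stage == "internal":
        return is_employee
    if stage == "pilot":
        return is_employee or in_rollout_bucket(user_id, pilot_percent)
    if stage == "general":
        return True
    raise ValueError(f"unknown rollout stage: {stage!r}")
```

Hashing rather than random sampling keeps the pilot group stable across sessions, so the same customers see the feature each time and their feedback is easier to interpret.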

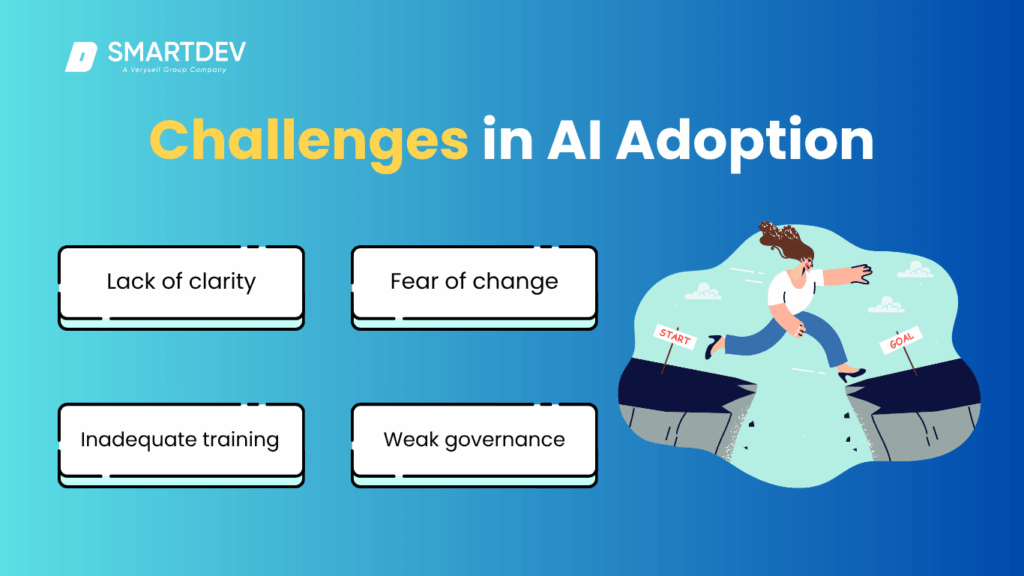

Common Challenges in AI Adoption

While AI has tremendous potential, many companies face challenges when adopting it.

Lack of clarity

One common issue is lack of clarity about how AI fits into the organization. Employees may not fully understand the purpose or benefits of AI, which can hinder adoption.

Fear of change

Some employees may worry that AI will replace their jobs, leading to resistance. It’s important for organizations to communicate clearly that AI is a tool to help employees work more effectively, not to replace them.

Inadequate training

Without proper training, employees may not fully embrace AI tools or understand how to use them effectively. Providing ongoing training and real-world examples can help overcome this challenge.

Weak governance

If data privacy, security, and compliance are not addressed early on, AI projects can face delays or even fail. Establishing governance frameworks early helps ensure that AI is adopted safely and responsibly.

Insights from the Ctrl Shifter Podcast

In episode 9 of the Ctrl Shifter podcast, Arnaud Lucas shared valuable insights into the challenges and strategies around AI adoption and how to implement guardrails effectively. One key takeaway was that businesses should start small with AI, testing it in controlled environments before rolling it out to customers. This approach allows organizations to refine their AI models and governance systems before scaling.

Another important point Lucas made was the balance between innovation and safety. He emphasized that companies can innovate quickly without exposing themselves to unnecessary risks if they have the right guardrails in place.

The podcast also explored how businesses can use AI responsibly, ensuring that both the technology and its application are aligned with the company’s values and compliance requirements. This helps create an environment where AI supports business growth and efficiency without compromising security or ethics.

Conclusion: Guardrails as Enablers of Safe Innovation

AI presents immense opportunities, but it must be used responsibly to avoid unintended consequences. AI guardrails are essential for ensuring that AI systems are ethical, secure, and transparent. By implementing these guardrails, businesses can unlock the full potential of AI while mitigating risks.

As Arnaud Lucas said, “Innovation doesn’t have to be dangerous. You can innovate safely when the right guardrails are in place.” With the right frameworks, businesses can ensure that AI drives value without compromising trust or security.

Want to dive deeper into the world of AI guardrails and responsible innovation? Listen to the full Ctrl Shifter episode 9, featuring Arnaud Lucas, where he shares even more valuable insights on AI adoption.