Introduction: The Challenge of AI Bias & Fairness

Artificial Intelligence (AI) is transforming industries, improving efficiencies, and shaping decision-making processes worldwide. However, as AI systems become more prevalent, concerns over bias and fairness in AI have gained significant attention.

AI bias occurs when algorithms produce systematically prejudiced results, leading to unfair treatment of certain groups. This can have serious consequences in sectors like hiring, lending, healthcare, and law enforcement.

Ensuring fairness in AI is critical to preventing discrimination, fostering trust, and promoting ethical AI adoption. This article explores the causes of AI bias, its implications, and how organizations can mitigate these challenges.

1.1. What is AI Bias?

AI bias refers to systematic errors in AI decision-making that favor or disadvantage specific groups or individuals. These biases arise due to flaws in data collection, algorithm design, and human influence during development.

AI systems learn from historical data, which may carry existing social and economic inequalities. If this bias is not addressed, AI models can reinforce and amplify these disparities, making AI-driven decisions unfair.

Key characteristics of AI bias:

- It is systematic and repeatable rather than the result of random error.

- It often discriminates against individuals based on characteristics like gender, race, or socio-economic status.

- It can arise at various stages of AI development, from data collection to model deployment.

1.2. Why Fairness in AI Matters (Impact on Society & Business)

Ensuring fairness in AI is vital for social justice and economic prosperity. Here’s why AI fairness is essential:

| Impact Area | Description |
| --- | --- |
| Society | Unbiased AI promotes inclusivity, reduces discrimination, and fosters trust in technology. It ensures marginalized groups are not unfairly targeted or excluded. |
| Business | Companies using fair AI models avoid legal risks, build customer trust, and enhance brand reputation. Ethical AI also leads to better decision-making and innovation. |
| Legal Compliance | Many governments are introducing AI regulations, requiring companies to audit and eliminate bias in their AI systems. Non-compliance can result in hefty fines and reputational damage. |

For example, companies like IBM and Microsoft have taken proactive steps to improve fairness in their AI tools by promoting transparency and auditing bias in machine learning models.

1.3. The Ethical & Legal Consequences of Unfair AI

Biased AI can have severe ethical and legal consequences, including:

- Discrimination in Hiring: AI-powered recruitment tools have been found to favor male candidates over female applicants due to biased training data.

- Inequitable Loan Approvals: AI-driven lending systems have been criticized for systematically rejecting loan applications from minority groups.

- Unfair Criminal Justice Decisions: Predictive policing algorithms have disproportionately targeted communities of color, reinforcing systemic biases.

- Health Disparities: AI-based medical diagnostics have shown racial bias, leading to misdiagnoses and incorrect treatment plans for underrepresented populations.

Legislators and regulators are responding with measures such as the European Union’s AI Act and the proposed U.S. Algorithmic Accountability Act, which aim to curb AI bias and promote fairness.

1.4. Key Real-World Examples of AI Bias

Several high-profile cases highlight the dangers of biased AI:

- Amazon’s AI Hiring Tool: Amazon scrapped an AI recruitment system after it showed bias against female candidates, favoring resumes containing male-associated words.

- COMPAS Criminal Justice Algorithm: Used in the U.S. for assessing the risk of reoffending, the algorithm disproportionately labeled Black defendants as high-risk compared to white defendants with similar records.

- Facial Recognition Bias: Studies by MIT and the ACLU found that commercial facial recognition software had significantly higher error rates for darker-skinned individuals, leading to misidentification.

These cases emphasize the need for transparent, explainable, and accountable AI models.

Understanding the Roots of AI Bias

AI bias does not emerge out of nowhere; it is deeply embedded in the development and deployment of machine learning systems. Bias in AI stems from various sources, including flawed algorithms, imbalanced data, and human prejudices.

To address these issues, it is crucial to first understand the different types of bias that affect AI models and then examine the technical pathways through which these biases infiltrate AI decision-making processes.

2.1. Types of Bias in AI Systems

a) Algorithmic Bias

AI bias manifests in multiple forms, each contributing to unfair or inaccurate outcomes. One of the most prominent forms is algorithmic bias, which arises when the design of an AI system inherently favors certain groups over others.

This could be due to the way the algorithm weighs different factors, reinforcing historical inequalities rather than mitigating them. Algorithmic bias is particularly problematic in areas such as hiring, lending, and law enforcement, where biased predictions can lead to widespread discrimination.

b) Data Bias

Another significant contributor to AI bias is data bias, which can occur at various stages of data collection and preparation. When datasets are not representative of the population they are meant to serve, AI models trained on them produce skewed results.

Data bias can be introduced in several ways, including selection bias, where certain demographics are underrepresented; labeling bias, where human annotators inadvertently introduce prejudices into the data; and sampling bias, where the data used for training does not accurately reflect real-world distributions.

These issues can lead to models that systematically disadvantage certain groups, reinforcing stereotypes and deepening societal inequities.

c) Human Bias in AI Development

Bias also emerges from the human element in AI development. Since AI systems are built and maintained by people, the unconscious biases of developers can seep into the models they create.

This occurs through choices made in data curation, feature selection, and model optimization. Even well-intentioned developers can unintentionally design AI systems that reflect their own perspectives and assumptions, further perpetuating bias.

d) Bias in Model Training & Deployment

Finally, bias in model training and deployment can exacerbate pre-existing disparities. If an AI model is trained on biased data, it will inevitably produce biased outputs. Moreover, if AI systems are not regularly audited and updated, biases can persist and even worsen over time.

Deployment practices also play a role in shaping AI behavior—if an AI tool is integrated into a system without proper fairness checks, it can reinforce and amplify social inequalities at scale.

2.2. How Bias Enters AI Models: A Technical Breakdown

a) Data Collection & Annotation Issues

Understanding the technical pathways through which bias infiltrates AI models is essential for mitigating its impact. One of the primary sources of bias is data collection and annotation issues. The process of gathering data often introduces biases, especially when certain groups are overrepresented or underrepresented in training datasets.

If AI models are trained on incomplete or non-diverse datasets, they learn patterns that reflect those biases. Furthermore, data annotation, the process of labeling training examples, can introduce human biases, particularly when subjective categories are involved, such as sentiment analysis or criminal risk assessments.

b) Model Training & Overfitting Bias

Another major technical factor contributing to AI bias is model training and overfitting bias. When an AI model is trained on historical data that reflects past inequalities, it learns to replicate those patterns rather than challenge them.

Overfitting occurs when a model becomes too attuned to the specific patterns of the training data rather than generalizing to new data. As a result, any biases present in the training dataset can become hard-coded into the AI’s decision-making process, leading to discriminatory outcomes when the model is applied in real-world scenarios.

c) Bias in AI Decision-Making & Reinforcement Learning

Bias also emerges in AI decision-making and reinforcement learning. Many AI systems use reinforcement learning, where models optimize their behavior based on feedback. If the feedback loop itself is biased, the AI system will continue to learn and reinforce those biases over time.

For instance, in predictive policing, an AI model that directs more surveillance to certain neighborhoods will generate more crime reports from those areas, reinforcing the false assumption that crime is more prevalent there. This self-perpetuating cycle makes it difficult to correct biases once they have been embedded in the AI system.
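The feedback loop described above can be made concrete with a deliberately simplified simulation. This is only a sketch under stated assumptions: two hypothetical neighborhoods with identical true incident rates, a single patrol that is always sent wherever the records are highest, and incidents that are only recorded where the patrol is present. The function name and all numbers are invented for illustration.

```python
def simulate_patrols(recorded, true_daily_incidents, days):
    """Send one patrol per day to the area with the most recorded incidents.

    Assumption: incidents are only recorded in the patrolled area, so the
    records grow wherever the patrol already goes.
    """
    recorded = list(recorded)  # copy so the caller's list is not modified
    for _ in range(days):
        target = recorded.index(max(recorded))
        recorded[target] += true_daily_incidents[target]
    return recorded

# Both areas have the same true rate; area 0 merely starts with one more record.
initial_records = [11, 10]
true_daily_incidents = [1, 1]

final_records = simulate_patrols(initial_records, true_daily_incidents, days=100)
print(final_records)  # [111, 10]
```

A one-record head start turns into a gap of more than a hundred records, even though the underlying rates are identical. Real systems are far more complex, but the sketch shows why records produced by the model's own decisions are a weak basis for retraining it.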

By understanding these mechanisms, developers and policymakers can take proactive steps to reduce bias in AI systems. Solutions such as using diverse and representative datasets, designing fairness-aware algorithms, and implementing ongoing bias audits are crucial for building ethical and unbiased AI technologies.

Measuring AI Bias & Fairness: Key Metrics & Methods

Ensuring fairness in AI requires rigorous measurement and evaluation. Bias in AI models can be subtle and often embedded within complex algorithms, making it necessary to use quantitative and qualitative techniques to detect and mitigate unfairness.

Measuring AI bias involves applying statistical fairness metrics, conducting audits, and employing explainability tools to better understand how AI systems make decisions. Without proper evaluation, biased AI models can reinforce discrimination and exacerbate social inequalities.

3.1. Statistical Fairness Metrics

a) Demographic parity

To measure bias in AI, several statistical fairness metrics have been developed, each focusing on different aspects of fairness. One widely used metric is demographic parity, which requires that positive outcomes be distributed at equal rates across demographic groups.

In practice, this means that the probability of a positive outcome (such as being approved for a loan or getting a job interview) should be roughly the same across all racial, gender, or socioeconomic groups.

However, demographic parity does not account for differences in underlying qualifications or risk factors, which can sometimes lead to misleading conclusions about fairness.
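As a rough illustration, demographic parity can be checked by comparing the share of positive decisions each group receives. The sketch below is a minimal, from-scratch example; the function names and the toy data are assumptions made for illustration and do not come from any particular toolkit.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(decisions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Toy data invented for illustration: 1 = approved, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_difference(decisions, groups)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A gap of zero would indicate parity on this metric. How large a gap is acceptable is a policy decision, not something the calculation can settle.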

b) Equal opportunity and equalized odds

Two other important measures are equal opportunity and equalized odds, which focus on fairness in error rates rather than overall predictions.

Equal opportunity requires that individuals who qualify for a positive outcome (such as getting hired) have the same likelihood of receiving that outcome, regardless of their demographic group.

Equalized odds takes this a step further by requiring that false positive and false negative rates be similar across groups.

These metrics are particularly useful in areas such as criminal justice and healthcare, where disparities in false negatives or false positives can have life-altering consequences.
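Both metrics come down to comparing error rates per group. The sketch below computes the true positive rate and false positive rate for each group and then the gaps between groups. The helper name and the toy labels are illustrative assumptions, and the code assumes every group contains at least one actual positive and one actual negative.

```python
def group_error_rates(y_true, y_pred, groups):
    """Return {group: (true_positive_rate, false_positive_rate)}."""
    counts = {}
    for actual, predicted, group in zip(y_true, y_pred, groups):
        c = counts.setdefault(group, {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
        if actual == 1:
            c["tp" if predicted == 1 else "fn"] += 1
        else:
            c["fp" if predicted == 1 else "tn"] += 1
    rates = {}
    for group, c in counts.items():
        tpr = c["tp"] / (c["tp"] + c["fn"])
        fpr = c["fp"] / (c["fp"] + c["tn"])
        rates[group] = (tpr, fpr)
    return rates

# Toy data invented for illustration.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_error_rates(y_true, y_pred, groups)
tpr_gap = abs(rates["A"][0] - rates["B"][0])  # equal opportunity compares TPR only
fpr_gap = abs(rates["A"][1] - rates["B"][1])  # equalized odds also compares FPR
print(rates)             # {'A': (1.0, 0.5), 'B': (0.5, 0.0)}
print(tpr_gap, fpr_gap)  # 0.5 0.5
```

Here group A's qualified members are always selected while only half of group B's are, so equal opportunity is violated. The false positive gap means equalized odds is violated as well.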

c) Individual fairness and group fairness

The debate between individual fairness and group fairness also plays a key role in bias measurement.

Individual fairness requires that similar individuals receive similar AI-generated decisions, regardless of their demographic characteristics.

Group fairness, on the other hand, focuses on ensuring equitable outcomes across different demographic groups.

The challenge lies in balancing these two perspectives, because optimizing for one can sometimes reduce performance on the other.
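One way to make individual fairness testable is a consistency check: flag pairs of people who are close in feature space yet receive very different scores. The sketch below assumes Euclidean distance on already-scaled numeric features. The distance measure, the thresholds, and the toy applicants are all illustrative choices, and in practice the definition of "similar" is the hardest part.

```python
from itertools import combinations

def individual_fairness_violations(features, scores, max_distance, max_score_gap):
    """List index pairs that are close in feature space but far apart in score."""
    violations = []
    for i, j in combinations(range(len(features)), 2):
        distance = sum((a - b) ** 2 for a, b in zip(features[i], features[j])) ** 0.5
        if distance <= max_distance and abs(scores[i] - scores[j]) > max_score_gap:
            violations.append((i, j))
    return violations

# Hypothetical applicants: (years of experience, scaled test score).
features = [(5.0, 8.0), (5.0, 8.1), (1.0, 3.0)]
scores = [0.90, 0.40, 0.20]  # model outputs, invented for illustration

violations = individual_fairness_violations(
    features, scores, max_distance=0.5, max_score_gap=0.2
)
print(violations)  # [(0, 1)]
```

Applicants 0 and 1 are nearly identical yet score 0.90 and 0.40, so the pair is flagged. A group-level metric computed on the same data could still look balanced, which is the tension described above.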

d) Disparate impact analysis

Another key method is disparate impact analysis, which assesses whether an AI model disproportionately disadvantages certain groups, even if the algorithm is not explicitly programmed to do so. This approach is commonly used in legal and regulatory frameworks to ensure compliance with anti-discrimination laws. Disparate impact analysis can reveal unintended biases in hiring algorithms, lending models, and facial recognition systems, prompting necessary adjustments to reduce unfairness.
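A common way to quantify disparate impact is the ratio of selection rates between a protected group and a reference group. The sketch below uses 0.8 as a flagging threshold, following the "four-fifths" rule of thumb often cited in U.S. employment contexts. Treat that threshold, the function name, and the toy data as assumptions for illustration rather than a legal test.

```python
def disparate_impact_ratio(decisions, groups, protected, reference):
    """Selection rate of the protected group divided by that of the reference group."""
    def rate(group):
        selected = [d for d, g in zip(decisions, groups) if g == group]
        return sum(selected) / len(selected)
    return rate(protected) / rate(reference)

# Toy data invented for illustration: 1 = selected, 0 = not selected.
decisions = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]
groups = ["reference"] * 5 + ["protected"] * 5

ratio = disparate_impact_ratio(decisions, groups, "protected", "reference")
flagged = ratio < 0.8  # four-fifths rule of thumb (an assumption, not a legal ruling)
print(round(ratio, 2), flagged)  # 0.5 True
```

The protected group is selected at half the rate of the reference group, so the model would be flagged for closer review even though group membership was never an explicit input.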

3.2. Auditing AI Models for Bias

a) Bias Detection Tools & Frameworks

Several leading tools and frameworks have been developed to assist in AI bias detection.

IBM AI Fairness 360 is an open-source toolkit that provides a suite of fairness metrics and bias mitigation algorithms, helping organizations assess and reduce bias in machine learning models.

Similarly, Google’s What-If Tool allows developers to visualize and compare AI model predictions across different demographic groups, making it easier to identify disparities in decision-making.

These tools help AI practitioners diagnose fairness issues and implement corrective measures before deploying their models in real-world applications.

b) AI Explainability & Transparency Techniques

In addition to detecting bias, enhancing AI explainability and transparency is crucial for ensuring fairness. Many AI models, particularly deep learning algorithms, operate as “black boxes,” making it difficult to understand why they make certain predictions.

Techniques such as SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-agnostic Explanations) provide insights into how specific features influence AI decisions.

By making AI decision-making more interpretable, organizations can identify potential sources of bias and improve accountability.
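To show the idea behind Shapley-based explanations, the sketch below computes exact Shapley values for a tiny three-feature model by averaging each feature's marginal contribution over every feature ordering. It is a from-scratch illustration, not the SHAP library's API. The scoring function, the feature names (including a hypothetical `zip_code_flag` that could act as a proxy for a protected attribute), and the baseline are all assumptions.

```python
from itertools import permutations

def shapley_values(model, instance, baseline):
    """Exact Shapley values: average marginal contribution over all orderings."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous_output = model(current)
        for feature in order:
            current[feature] = instance[feature]  # switch this feature on
            output = model(current)
            totals[feature] += output - previous_output
            previous_output = output
    return [total / len(orderings) for total in totals]

def credit_score(x):
    """Hypothetical linear scoring model used only for this example."""
    income, debt, zip_code_flag = x
    return 2.0 * income - 1.0 * debt + 3.0 * zip_code_flag

instance = [4.0, 2.0, 1.0]  # the applicant being explained
baseline = [1.0, 1.0, 0.0]  # reference applicant

values = shapley_values(credit_score, instance, baseline)
print(values)  # [6.0, -1.0, 3.0]
```

The three contributions add up to the difference between the applicant's score and the baseline score (8.0). A sizeable contribution from a feature like `zip_code_flag` is the kind of signal that would prompt a closer look for proxy discrimination. Enumerating every ordering is only feasible for a handful of features, which is why practical tools rely on approximations.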

Case Studies: Real-World Examples of AI Bias & Consequences

AI bias has led to serious real-world consequences, affecting industries from law enforcement to finance. These cases highlight the risks of unchecked AI and the urgent need for fairness, transparency, and accountability in machine learning systems.

4.1. Facial Recognition & Racial Bias

Facial recognition tools, including Amazon’s Rekognition and Clearview AI, have been found to misidentify people of color at significantly higher rates.

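Research from the MIT Media Lab’s Gender Shades project found that commercial facial analysis systems had substantially higher error rates for darker-skinned individuals, particularly darker-skinned women. Misidentifications by facial recognition systems have also been linked to wrongful arrests in law enforcement applications. This has raised concerns over racial profiling and mass surveillance, prompting calls for regulation and even bans in some regions.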

4.2. Gender Bias in AI Recruiting Tools

Amazon’s AI hiring tool was scrapped after it was found to favor male candidates over female applicants. The model, trained on historical resumes, penalized resumes containing terms like “women’s,” reinforcing gender disparities in hiring. This case demonstrated the risks of using past data without fairness adjustments, emphasizing the need for bias audits in recruitment AI.

4.3. AI Bias in Healthcare

A medical AI system used in U.S. hospitals was found to discriminate against Black patients, underestimating their need for care. The algorithm, which relied on healthcare spending as a proxy for illness severity, failed to account for systemic disparities in medical access. This case highlights the dangers of flawed data proxies and the need for equity in AI-driven healthcare.

4.4. Bias in Financial Services

Apple’s credit card algorithm was accused of offering significantly lower credit limits to women than men, even with similar financial backgrounds. This sparked regulatory scrutiny over biased credit-scoring models, illustrating how opaque AI decisions can reinforce financial inequality.

4.5. Misinformation & Bias in AI Content Moderation

AI-driven content moderation on platforms like Facebook and YouTube has been criticized for disproportionately censoring marginalized communities while amplifying fake news. Engagement-driven algorithms prioritize sensational content, influencing public opinion and political outcomes. This case underscores the need for greater AI transparency in digital platforms.

These cases reveal how biased AI can perpetuate discrimination, financial inequality, and misinformation. To mitigate these risks, organizations must implement fairness audits, use diverse datasets, and ensure transparency in AI decision-making. Without proactive measures, AI will continue to reflect and reinforce societal biases rather than correcting them.

Regulatory & Ethical Guidelines for AI Fairness

As AI adoption grows, governments and organizations worldwide are developing regulatory frameworks and ethical guidelines to ensure fairness, transparency, and accountability in AI systems. These initiatives aim to reduce bias, protect individual rights, and promote responsible AI development.

5.1. GDPR & AI Fairness Requirements in Europe

The General Data Protection Regulation (GDPR) in the European Union (EU) includes provisions that impact AI fairness, particularly in automated decision-making. Article 22 of the GDPR grants individuals the right to contest AI-driven decisions that significantly affect them, such as loan approvals or hiring outcomes.

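The regulation also requires organizations to give individuals meaningful information about the logic involved in such automated decisions, and it restricts the processing of sensitive categories of data such as racial or ethnic origin and religious beliefs.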

Additionally, the EU is advancing the AI Act, a first-of-its-kind regulatory framework that categorizes AI systems by risk level and imposes stricter rules on high-risk applications, such as biometric surveillance and healthcare AI.

5.2. The U.S. AI Bill of Rights & Algorithmic Accountability Act

In the United States, AI regulation is still evolving. The Blueprint for an AI Bill of Rights, introduced by the White House, outlines principles for ethical AI, emphasizing fairness, privacy, and transparency. It calls for AI systems to undergo bias testing and for users to have greater control over how AI impacts their lives.

The Algorithmic Accountability Act, proposed by U.S. lawmakers, seeks to regulate AI in high-risk sectors like finance and healthcare. It would require companies to conduct impact assessments on AI models to identify and mitigate bias before deployment. These efforts reflect growing concerns about AI-driven discrimination and the need for regulatory oversight.

5.3. ISO & IEEE Standards on Ethical AI & Bias Mitigation

International organizations like the International Organization for Standardization (ISO) and the Institute of Electrical and Electronics Engineers (IEEE) have established guidelines for ethical AI.

ISO’s ISO/IEC 24027 focuses on bias identification and mitigation in machine learning, while IEEE’s Ethically Aligned Design framework outlines best practices for fairness, accountability, and transparency in AI development. These standards provide technical guidance for companies aiming to build ethical AI systems.

5.4. Global Initiatives for AI Fairness

Organizations like UNESCO, OECD, and the EU are leading global efforts to promote fair and ethical AI. UNESCO’s Recommendation on the Ethics of Artificial Intelligence calls for AI governance frameworks that prioritize human rights and sustainability.

The OECD AI Principles advocate for AI transparency, accountability, and inclusivity, influencing AI policies worldwide.

The EU’s AI Act aims to create a regulatory standard for AI safety and fairness, setting a precedent for global AI governance.

5.5. Corporate AI Ethics Policies: How Tech Giants Address AI Bias

Major technology companies are increasingly adopting AI ethics policies to address bias and promote responsible AI use.

Google has published a set of AI Principles and developed fairness tools like the What-If Tool for bias detection.

Microsoft has adopted responsible AI principles that include fairness, and in 2020 it said it would not sell facial recognition technology to U.S. police departments until federal regulation is in place.

IBM has released open-source fairness toolkits, such as AI Fairness 360, to help developers detect and mitigate bias in machine learning models.

While corporate policies are a step forward, critics argue that self-regulation is insufficient. Many experts call for stronger government oversight to ensure AI fairness beyond voluntary corporate commitments.

Strategies to Mitigate AI Bias & Promote Fairness

As AI systems become more integrated into decision-making processes, ensuring fairness is critical. Addressing AI bias requires proactive strategies that range from technical solutions to organizational policies.

Effective bias mitigation involves refining AI development practices, implementing human oversight, promoting diversity in AI teams, and establishing independent audits to ensure continuous monitoring and accountability.

6.1. Bias Mitigation Techniques in AI Development

a) Rebalancing Training Data for Fair Representation

One of the most effective ways to reduce AI bias is through rebalancing training data for fair representation. Many AI models become biased due to imbalanced datasets that overrepresent certain demographics while underrepresenting others.

By curating datasets that reflect diverse populations, developers can improve model accuracy and fairness. Techniques such as data augmentation and re-weighting can help balance representation across different groups, ensuring more equitable AI outcomes.
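One common re-weighting scheme gives each training example a weight equal to the frequency its (group, label) combination would have if group and label were independent, divided by its observed frequency. The sketch below is a minimal version of that idea. The function names and toy data are assumptions, and a real pipeline would pass the weights to a learner that supports per-sample weights.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight = expected frequency under independence / observed frequency."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    return positive / total

# Toy data invented for illustration: group A has 3 of 4 positives, group B has 1 of 4.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "A")
rate_b = weighted_positive_rate(groups, labels, weights, "B")
print(round(rate_a, 3), round(rate_b, 3))  # 0.5 0.5
```

After weighting, both groups have the same effective positive rate, so a model trained on the weighted data has less incentive to use group membership as a shortcut for the label.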

b) Adversarial Debiasing in Machine Learning Models

Another technique is adversarial debiasing, which trains a model to minimize bias as part of the learning process. In a typical setup, a second (adversary) network tries to infer a protected attribute, such as gender, from the main model’s predictions, and the main model is trained to predict accurately while making the adversary’s task as hard as possible, which helps reduce disparities in decision-making.

Additionally, fairness-aware algorithms, such as reweighted loss functions, can penalize biased predictions, encouraging the model to prioritize equitable outcomes.
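A fairness-aware loss can be sketched as an ordinary prediction loss plus a penalty on the disparity between groups. The example below adds a squared demographic-parity gap to binary cross-entropy. This is a simple regularization sketch rather than adversarial debiasing, and the function name, the penalty form, and the `penalty_weight` parameter are illustrative assumptions.

```python
import math

def fairness_penalized_loss(y_true, y_prob, groups, penalty_weight):
    """Binary cross-entropy plus a penalty on the gap in mean predicted score."""
    eps = 1e-12  # avoids log(0)
    bce = -sum(
        t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
        for t, p in zip(y_true, y_prob)
    ) / len(y_true)

    by_group = {}
    for p, g in zip(y_prob, groups):
        by_group.setdefault(g, []).append(p)
    means = [sum(v) / len(v) for v in by_group.values()]
    gap = max(means) - min(means)

    return bce + penalty_weight * gap ** 2

# Toy predictions invented for illustration.
y_true = [1, 1, 0, 0]
y_prob = [0.9, 0.7, 0.3, 0.1]
groups = ["A", "A", "B", "B"]

plain = fairness_penalized_loss(y_true, y_prob, groups, penalty_weight=0.0)
penalized = fairness_penalized_loss(y_true, y_prob, groups, penalty_weight=1.0)
print(round(penalized - plain, 2))  # 0.36
```

The penalty grows with the gap in average predicted score between groups (0.6 here, so 0.36 once squared), which nudges an optimizer toward predictions that are more evenly distributed. Choosing `penalty_weight` is a trade-off between accuracy and parity.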

c) Differential Privacy & Federated Learning for Ethical AI

Emerging privacy-preserving technologies like differential privacy and federated learning also contribute to ethical AI.

Differential privacy limits how much any single individual’s record can influence a model’s output, typically by adding carefully calibrated noise, which reduces the risk that a model memorizes or reveals sensitive personal data.
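A standard building block of differential privacy is the Laplace mechanism, which adds noise scaled to a query's sensitivity divided by the privacy budget epsilon. The sketch below applies it to a simple count, which has sensitivity 1. The function names, the toy ages, and the chosen epsilon are assumptions for illustration, and production systems should rely on a vetted library rather than hand-rolled noise.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values, predicate, epsilon, rng):
    """Release a count with Laplace noise; a counting query has sensitivity 1."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)

# Toy data invented for illustration.
ages = [23, 35, 41, 52, 67, 29]
rng = random.Random(42)  # seeded only so the example is repeatable

noisy = private_count(ages, lambda age: age > 40, epsilon=0.5, rng=rng)
print(noisy)  # the true count is 3; the released value is 3 plus random noise
```

A smaller epsilon means more noise and stronger privacy. The released value is unbiased on average, but any single release can be noticeably off, which matters most for small groups.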

Federated learning allows AI models to be trained on decentralized data sources without aggregating individual user data, improving fairness while maintaining privacy.
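The aggregation step at the heart of many federated learning setups is a weighted average of client model parameters, often called federated averaging. The sketch below shows only that step, with hypothetical two-parameter client models. Local training, communication, and secure aggregation are omitted.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameters, weighting each client by its number of examples."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Hypothetical clients: each trained locally and shares parameters, not raw data.
client_weights = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [100, 300]

global_weights = federated_average(client_weights, client_sizes)
print(global_weights)  # [2.5, 3.5]
```

Weighting by dataset size means larger clients dominate the global model, so a population that is under-represented across clients stays under-represented after aggregation. Federated learning by itself does not remove that source of bias.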

For a deeper dive into how ethical design principles can be integrated from the ground up, explore our comprehensive guide on ethical AI development.

6.2. The Role of Human Oversight in AI Decision-Making

Despite advances in AI, human oversight remains crucial for preventing bias and ensuring ethical decision-making. AI systems should not operate in isolation; instead, they should be complemented by human judgment, particularly in high-stakes areas such as hiring, healthcare, and law enforcement. Human-in-the-loop (HITL) approaches involve integrating human reviewers at critical stages of AI decision-making to intervene in cases where bias is detected.

Additionally, transparency in AI decision-making helps users understand how AI-driven conclusions are reached. Explainable AI (XAI) techniques allow stakeholders to interpret AI models and identify potential biases before deployment. By incorporating human oversight and interpretability measures, organizations can increase accountability and trust in AI systems.

6.3. Diverse & Inclusive AI Teams: Why Representation Matters

Bias in AI is often a reflection of the biases of those who develop it. To create fairer AI systems, organizations must prioritize diversity in AI development teams. When AI teams lack representation from various demographics, blind spots can emerge, leading to unintentional biases in AI models. A diverse AI workforce, including individuals from different racial, gender, and socioeconomic backgrounds, brings varied perspectives that help identify and mitigate biases early in the development process.

Beyond team composition, inclusive design practices—such as conducting fairness testing across diverse user groups—ensure that AI models work equitably for all communities. Companies that invest in ethical AI development benefit from broader market reach, enhanced user trust, and stronger compliance with fairness regulations.

6.4. Third-Party AI Audits & Continuous Monitoring for Fair AI

AI fairness should not be a one-time consideration but an ongoing process. Independent third-party AI audits provide unbiased evaluations of AI systems, helping organizations detect hidden biases that internal teams might overlook. These audits assess AI models using fairness metrics, stress-test them for discriminatory patterns, and recommend corrective actions.

Continuous monitoring is equally important. AI systems evolve over time, and biases can emerge as models interact with new data. Implementing real-time fairness monitoring ensures that AI models remain ethical and unbiased even after deployment. Automated bias detection tools can flag potential fairness violations, enabling prompt corrective action.
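Post-deployment monitoring can start with something as simple as tracking selection rates over a sliding window of recent decisions and raising a flag when the gap between groups exceeds a threshold. The class below is a minimal sketch. The class name, window size, and threshold are illustrative assumptions, and a production monitor would also need to handle small sample sizes and statistical noise.

```python
from collections import deque

class FairnessMonitor:
    """Track recent decisions and flag large selection-rate gaps between groups."""

    def __init__(self, window_size, max_gap):
        self.window = deque(maxlen=window_size)  # oldest decisions drop off automatically
        self.max_gap = max_gap

    def selection_rates(self):
        totals, positives = {}, {}
        for group, decision in self.window:
            totals[group] = totals.get(group, 0) + 1
            positives[group] = positives.get(group, 0) + decision
        return {g: positives[g] / totals[g] for g in totals}

    def record(self, group, decision):
        """Store one decision (1 = positive) and return True if an alert should fire."""
        self.window.append((group, decision))
        rates = self.selection_rates()
        if len(rates) < 2:
            return False  # nothing to compare yet
        return max(rates.values()) - min(rates.values()) > self.max_gap

# Toy decision stream invented for illustration.
monitor = FairnessMonitor(window_size=6, max_gap=0.3)
stream = [("A", 1), ("B", 1), ("A", 1), ("B", 0), ("A", 1), ("B", 0)]
alerts = [monitor.record(group, decision) for group, decision in stream]
print(alerts)  # [False, False, False, True, True, True]
```

The alert fires on the fourth decision, once group B's selection rate falls to 0.5 while group A's stays at 1.0. An alert like this is a prompt for human review rather than proof of discrimination.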

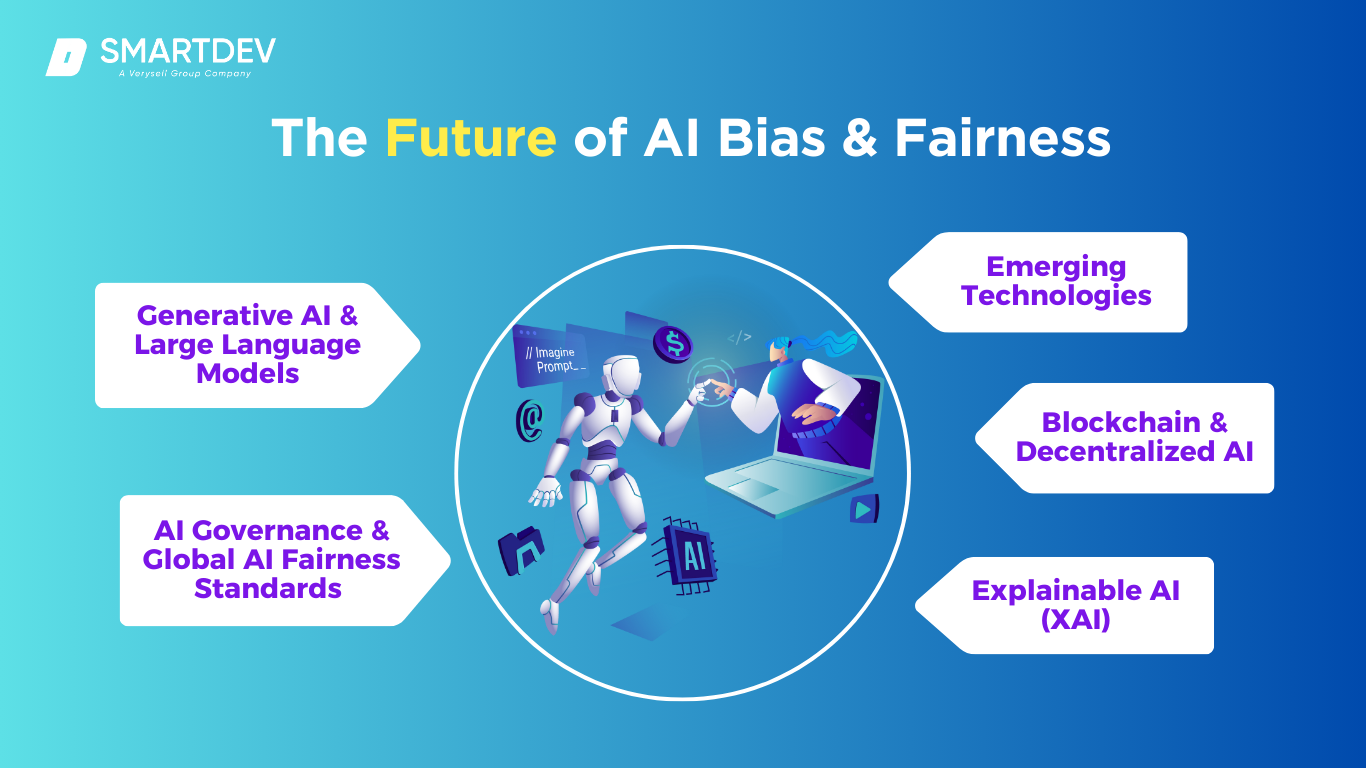

The Future of AI Bias & Fairness: Challenges & Opportunities

As AI continues to evolve, ensuring fairness remains a critical challenge. The rapid expansion of AI technologies, including generative AI, autonomous systems, and decentralized AI, raises ethical concerns about bias, transparency, and governance. Addressing these challenges requires global cooperation, technical innovation, and stronger AI governance frameworks.

7.1. The Ethics of Generative AI & Bias in Large Language Models

Generative AI models like ChatGPT, Gemini, and Claude have revolutionized content creation, but they also inherit biases from the datasets they are trained on. Since these models learn from vast amounts of internet data, they can reflect and amplify existing societal prejudices, including racial, gender, and ideological biases. This has raised concerns about misinformation, stereotyping, and ethical responsibility in AI-generated content.

One challenge is the lack of context-awareness in large language models. While these models generate human-like responses, they do not possess true understanding or moral reasoning, making them prone to reinforcing harmful biases. Companies are working on reinforcement learning from human feedback (RLHF) and adversarial training techniques to reduce bias, but complete neutrality remains difficult to achieve.

The future of generative AI will require continuous updates, stricter fairness audits, and increased transparency in training data and model design.

7.2. AI Governance & The Need for Global AI Fairness Standards

AI bias is a global issue, yet regulations vary widely across countries. While the EU AI Act sets strict guidelines on high-risk AI applications, other regions, including the U.S. and China, take different approaches. The lack of unified AI fairness standards creates inconsistencies in how AI ethics are enforced worldwide.

To ensure fairness, organizations such as UNESCO, OECD, and the World Economic Forum are working on global AI governance frameworks. These initiatives aim to establish ethical AI principles that transcend national regulations and ensure AI benefits all societies. Moving forward, international cooperation will be key to creating standardized fairness metrics, regulatory frameworks, and cross-border AI accountability.

7.3. AI Bias in Emerging Technologies

Bias in AI is not limited to software; it extends into emerging technologies like autonomous vehicles, smart city infrastructures, and robotics.

- Autonomous Vehicles (AVs): AI-powered self-driving cars rely on vast datasets for decision-making. However, studies show that AVs may struggle to recognize pedestrians with darker skin tones, increasing the risk of accidents in marginalized communities. Addressing these biases requires more diverse datasets and rigorous fairness testing in AV systems.

- Smart Cities: AI-driven surveillance and urban planning tools risk reinforcing systemic inequalities if they are based on biased historical data. Biased policing algorithms, for instance, can lead to increased surveillance of minority neighborhoods, exacerbating discrimination.

- Robotics: AI-powered robots used in workplaces and homes must be designed to operate fairly and equitably. If training data is biased, robots could make discriminatory decisions, particularly in sectors like healthcare and customer service.

7.4. How Blockchain & Decentralized AI Can Improve Fairness

Blockchain and decentralized AI offer promising solutions to improve transparency and fairness in AI systems. Decentralized AI frameworks, which distribute AI model training across multiple nodes rather than a central entity, help reduce bias by ensuring no single organization controls the training data.

Blockchain technology can enhance AI fairness audits by creating immutable records of AI decision-making processes. This transparency ensures that AI biases can be traced and corrected. Additionally, decentralized identity systems powered by blockchain could help reduce biases in credit scoring, job hiring, and healthcare by providing individuals with greater control over their data.
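The "immutable record" idea can be illustrated without a full blockchain: a hash-chained log in which each entry's hash covers the previous entry's hash, so altering an earlier decision breaks every later link. The sketch below is a simplified, single-machine stand-in with hypothetical field names. It has no distribution or consensus, which is what a real blockchain adds.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first record

def record_hash(previous_hash, decision):
    payload = json.dumps(decision, sort_keys=True)
    return hashlib.sha256((previous_hash + payload).encode("utf-8")).hexdigest()

def append_record(log, decision):
    """Append a decision whose hash also covers the previous record's hash."""
    previous_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "decision": decision,
        "previous_hash": previous_hash,
        "hash": record_hash(previous_hash, decision),
    })

def verify_log(log):
    """Recompute every hash; any edited record breaks the chain."""
    previous_hash = GENESIS_HASH
    for record in log:
        expected = record_hash(previous_hash, record["decision"])
        if record["previous_hash"] != previous_hash or record["hash"] != expected:
            return False
        previous_hash = record["hash"]
    return True

# Hypothetical audit entries.
log = []
append_record(log, {"applicant_id": "a-001", "approved": True})
append_record(log, {"applicant_id": "a-002", "approved": False})
intact = verify_log(log)

log[0]["decision"]["approved"] = False  # simulate tampering with an earlier record
still_valid = verify_log(log)
print(intact, still_valid)  # True False
```

A log like this makes tampering detectable after the fact. It does not make the logged decisions fair, so it complements rather than replaces the fairness metrics used in an audit.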

While decentralized AI is still in its early stages, it represents a potential future where AI systems are more accountable, transparent, and resistant to bias.

7.5. The Role of Explainable AI (XAI) in Creating Transparent AI Systems

One of the biggest challenges in AI fairness is the black-box nature of many machine learning models, which makes it difficult to understand how AI arrives at certain decisions. Explainable AI (XAI) aims to solve this problem by developing tools that provide insights into AI decision-making processes.

By making AI systems more interpretable, XAI can help:

- Detect and correct biases in real-time.

- Build trust among users by explaining why AI made a specific decision.

- Ensure regulatory compliance by providing transparency in AI decision-making.

Techniques such as SHAP (Shapley Additive Explanations), LIME (Local Interpretable Model-agnostic Explanations), and counterfactual explanations help make AI systems more understandable and accountable. As AI governance evolves, XAI will play a central role in ensuring that AI operates transparently and fairly.
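A counterfactual explanation answers the question "what is the smallest change that would have flipped this decision?". The sketch below searches for the smallest income increase that turns a rejection into an approval under a hypothetical rule-based model. The approval rule, the field names, and the search step are assumptions for illustration, and real counterfactual methods search over many features at once.

```python
def approve(applicant):
    """Hypothetical approval rule used only for this example."""
    return applicant["income"] - 0.5 * applicant["debt"] >= 50

def counterfactual_income_increase(model, applicant, step, max_steps):
    """Smallest income increase (in multiples of `step`) that flips a rejection."""
    for k in range(1, max_steps + 1):
        candidate = dict(applicant)
        candidate["income"] = applicant["income"] + k * step
        if model(candidate):
            return k * step
    return None  # no flip found within the search range

applicant = {"income": 40, "debt": 10}  # rejected: 40 - 5 = 35, below 50

increase = counterfactual_income_increase(approve, applicant, step=5, max_steps=20)
print(approve(applicant), increase)  # False 15
```

The resulting explanation, "an income 15 units higher would have led to approval", is concrete and actionable. Running the same search for otherwise similar applicants from different groups is one way to check whether the bar to approval differs between them.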

Conclusion & Key Takeaways

AI bias remains a critical issue that spans industries, from hiring and healthcare to finance and law enforcement. If left unaddressed, biased AI systems risk perpetuating discrimination and exacerbating societal inequalities. Achieving fairness in AI demands collaboration between businesses, policymakers, and developers to ensure that AI technologies are transparent, accountable, and ethically designed.

Key AI Bias Challenges

The primary challenges include biased training data, algorithmic discrimination, lack of transparency in decision-making processes, and inconsistent regulatory frameworks. As AI continues to evolve, especially in areas like generative models and autonomous systems, the complexity of preventing bias will increase.

Ensuring Fair AI: Action Steps

- Businesses must adopt bias audits, ensure diverse datasets, and prioritize explainability in AI models.

- Policymakers should enforce fairness regulations, such as the EU AI Act, and advocate for comprehensive global AI governance.

- Developers should utilize fairness-aware techniques like adversarial debiasing and federated learning, while promoting inclusivity and diversity within AI teams.

The Path Forward

The future of AI fairness relies on robust governance, ongoing technical advancements, and persistent human oversight. The integration of Explainable AI (XAI) will be essential in fostering greater transparency and accountability. To build ethical AI, organizations must embed fairness into every phase of development, ensuring AI technologies benefit all communities equitably.

—

References:

- AI Now Institute – Reports on AI Bias

- World Economic Forum – How to Prevent Discrimination in AI

- European Commission – Artificial Intelligence Act

- White House – Blueprint for an AI Bill of Rights

- IEEE – Ethically Aligned Design

- NIST – Towards a Standard for Identifying and Managing Bias in AI

- MIT Media Lab – Gender Shades Project

- Science – Dissecting racial bias in an algorithm used to manage the health of populations

- IBM AI Fairness 360 Toolkit

- UNESCO – Recommendation on the Ethics of Artificial Intelligence
