Pull request bottlenecks are killing developer productivity, with some teams waiting four days or more for code reviews while sprint deadlines loom. The traditional manual review process creates a cascade of delays that ripple through entire development cycles, forcing talented engineers to context-switch constantly and stalling feature releases.

AI-powered code review relieves these bottlenecks by providing instant, intelligent feedback that identifies critical issues within seconds of submission. Development teams using AI review systems report up to 3x faster pull request turnaround while catching more bugs and maintaining higher code quality standards.

SmartDev's AI-powered development services have already helped over 300 organizations worldwide accelerate their development workflows, with measurable improvements in productivity and quality metrics.

Instant Feedback Cuts Review Time by 70%

Key benefits of AI code review:

- Automated analysis provides feedback in under 30 seconds

- Manual review time drops from hours to minutes

- Security vulnerability detection reaches up to 95%, compared with 60-65% for manual review alone

- Developer productivity increases by 25-30%

- Quality consistency improves across all team members

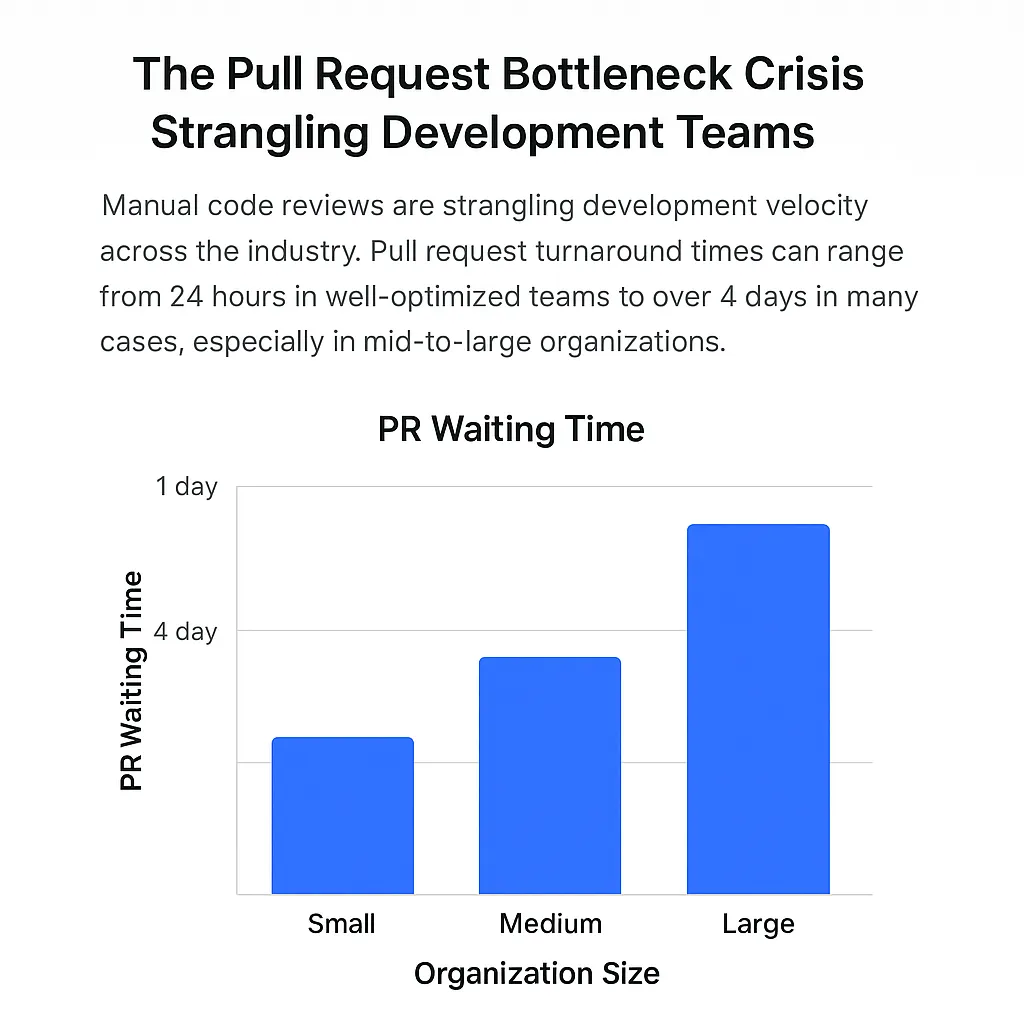

The Pull Request Bottleneck Crisis Strangling Development Teams

Manual code reviews are strangling development velocity across the industry. Pull request turnaround times can range from 24 hours in well-optimized teams to over 4 days in many cases, especially in mid-to-large organizations.

Here’s what’s actually happening in your development pipeline: Manual review dependencies are widely recognized as a significant source of development delays, directly blocking sprint progress and feature releases. Meanwhile, context switching significantly reduces developer productivity as engineers bounce between writing code and reviewing others’ work.

Fig.1 PR waiting times across different organization sizes

The quality impact can be even worse than the speed problem. Some vendor surveys associate longer PR cycles with higher bug rates, suggesting that slow reviews do not necessarily improve code quality and can actually make it worse.

Business Impact: Every Hour Costs Real Money

Pull requests waiting in review queues carry a real cost: idle engineering time, delayed releases, and more expensive fixes for defects found late. Internal case studies indicate that slow PR turnaround can raise costs significantly, though the size of the effect varies by team and organization size.

The productivity drain goes beyond individual developers. Vendor reports suggest that waiting for code reviews accounts for a significant portion of engineering downtime and delays release cycles. Some teams also report missed sprint commitments caused by manual review queues, although the impact varies with team size and workflow.

Why Traditional Review Methods Fail at Scale

Human reviewers tend to focus on what is easy to spot rather than what is most important. Manual reviews often catch stylistic issues while missing architectural problems that can cost thousands of dollars to fix later.

The inconsistency problem is equally damaging:

- Different reviewers apply different standards

- Quality variations compound over time

- Senior developers spend 3-4 hours daily on routine reviews instead of high-value work

A European logistics company documented PR turnaround times dropping from 48 to 16 hours after automating reviews, leading to improved sprint velocity. The pattern is clear: manual review processes simply can’t scale with modern development needs.

How AI Code Review Technology Works: Instant Analysis at Scale

AI code review fundamentally changes how teams handle code quality. Modern AI systems analyze every line of code using machine learning models trained on millions of repositories, providing instant feedback that human reviewers simply can’t match.

AI-powered systems analyze code in less than 30 seconds per submission, delivering comprehensive feedback while human reviewers are still opening their browsers.

Automated Code Analysis Goes Beyond Syntax Checking

Modern AI review systems analyze semantics, patterns, and architectural implications using natural language processing engines that generate human-readable feedback explanations.

“AI code review moves beyond stylistic fixes, surfacing deep architectural issues that are expensive to detect late in the process,” explains Alistair Copeland, CEO at SmartDev.

What AI analysis catches:

- Security vulnerabilities and attack vectors

- Performance bottlenecks and memory leaks

- Maintainability issues and code smells

- Architectural anti-patterns

- Business logic inconsistencies
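As an illustration of the first category, the snippet below shows a classic issue that AI reviewers (and conventional static analyzers) are expected to flag: SQL assembled with string formatting, next to the parameterized fix a reviewer would suggest. It is a minimal sketch using Python's built-in `sqlite3` module; the `users` table and both function names are hypothetical, and no specific tool's output is implied.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str):
    # Flag-worthy: user input is interpolated directly into the SQL text,
    # so a value like "x' OR '1'='1" changes the meaning of the query.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str):
    # Suggested fix: a parameterized query. sqlite3 binds the value
    # separately, so it is never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # injected condition matches every row
    print(len(find_user_safe(conn, payload)))    # payload is treated as a literal name
```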

Intelligent Pattern Recognition Learns Your Codebase

AI systems learn from your specific codebase patterns, adapting recommendations to match team coding standards and architectural preferences. Machine learning algorithms detect subtle issues by comparing new code against established best practices from millions of code samples.

Advanced models identify complex issues like race conditions, memory leaks, and scalability concerns that human reviewers often miss during manual inspection.

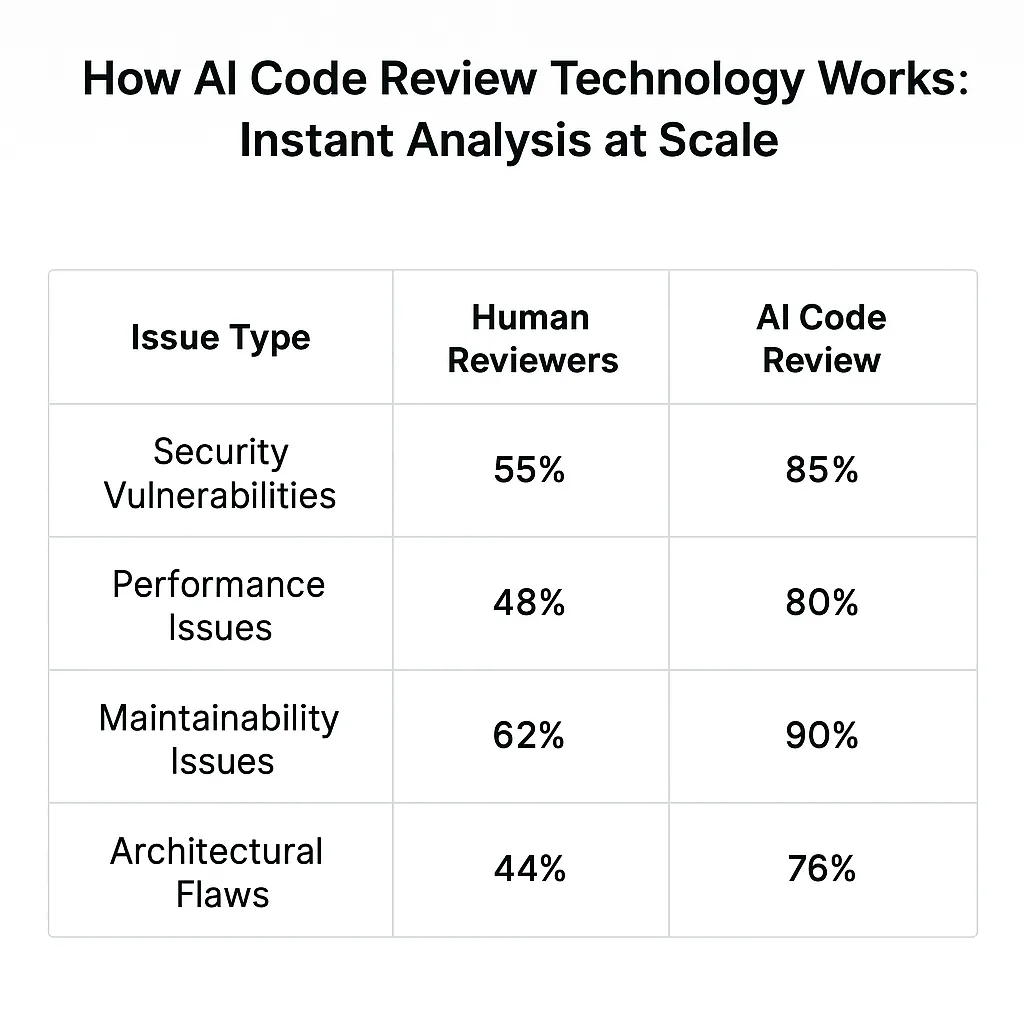

Fig.2 Human vs AI detection rates for different issue types

Real-Time Feedback Eliminates Waiting Periods

The speed advantage is dramatic. AI code review provides instant feedback upon PR creation, eliminating waiting periods for human reviewer availability. Automated suggestion engines propose specific code improvements with explanations, enabling developers to address issues immediately.

Integration with development environments allows AI feedback to appear directly in familiar workflows, reducing context switching overhead that traditionally kills productivity.

Quantified Benefits: The 3x Speed Improvement Breakdown

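The performance improvements from AI code review are measurable and, in the reported cases, consistent across organizations. AI code review is reported to cut average PR review time from 2-3 hours to 20-30 minutes, which is at least a 3x acceleration: by the raw numbers, a 4x to 9x reduction in review time.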
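The arithmetic behind these figures is easy to check. A minimal Python sketch, using only the ranges quoted above (2-3 hours before, 20-30 minutes after) and the 48-to-16-hour turnaround figure cited earlier; the function names are illustrative.

```python
def speedup(before_minutes: float, after_minutes: float) -> float:
    """How many times faster the 'after' duration is than the 'before' one."""
    return before_minutes / after_minutes


def percent_reduction(before_minutes: float, after_minutes: float) -> float:
    """Share of the original time removed, as a percentage."""
    return 100.0 * (1 - after_minutes / before_minutes)


# Worst and best cases from the quoted ranges: 2-3 hours before, 20-30 minutes after.
for before, after in [(120, 30), (180, 20)]:
    print(f"{before} -> {after} min: {speedup(before, after):.1f}x faster, "
          f"{percent_reduction(before, after):.0f}% less time")

# The 48 h -> 16 h turnaround example is exactly 3x.
print(speedup(48 * 60, 16 * 60))
```

Note that a 3x speedup corresponds to roughly a 67% reduction in time, and a 70% reduction corresponds to roughly 3.3x (1 / 0.3).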

Automated review eliminates roughly 80% of trivial PR issues before they reach a human reviewer, allowing senior developers to focus on architectural decisions instead of formatting corrections.
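One way to picture this split is a triage step that routes low-risk findings back to the author and forwards the rest to a human reviewer. This is a hypothetical sketch: the `Finding` type and the category names in `TRIVIAL_CATEGORIES` are assumptions, not any particular platform's schema.

```python
from dataclasses import dataclass

# Hypothetical categories treated as "trivial"; real tools define their own.
TRIVIAL_CATEGORIES = {"formatting", "naming", "import-order", "whitespace"}


@dataclass
class Finding:
    """A single AI review finding (hypothetical schema)."""
    file: str
    line: int
    category: str
    message: str


def triage(findings):
    """Split findings into author-fixable trivia and items for a human reviewer."""
    for_author = [f for f in findings if f.category in TRIVIAL_CATEGORIES]
    for_human = [f for f in findings if f.category not in TRIVIAL_CATEGORIES]
    return for_author, for_human


findings = [
    Finding("api.py", 12, "formatting", "Line exceeds 100 characters"),
    Finding("api.py", 40, "security", "User input reaches SQL query unescaped"),
    Finding("util.py", 3, "import-order", "Standard-library imports should come first"),
]
for_author, for_human = triage(findings)
print(f"{len(for_author)} of {len(findings)} findings handled before human review")
```

Keeping the trivial set explicit makes it easy for a team to decide which categories humans should never need to see.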

Time Reduction Metrics That Matter

Key performance improvements:

- Review initiation: From hours/days to seconds

- Feedback generation: From manual typing to instant suggestions

- Issue identification: From human scanning to pattern recognition

- Documentation: From manual comments to automated explanations

“Teams using AI review routinely report 25-30% increases in developer velocity with no quality tradeoff,” says Nguyen Le, COO at SmartDev.

Individual developers see immediate productivity gains when feedback cycles shrink from days to hours, enabling more iterative development approaches and frequent integration.

Quality Improvement Statistics: Better and Faster

Speed doesn’t come at the expense of quality – it actually improves it. A European healthcare software company replaced manual-only reviews with an AI system and saw post-release bugs drop by 38% year-over-year.

Quality metrics improvements:

- Security vulnerability detection: 95% vs 60-65% with manual review alone

- Consistency improvements: 60% better standard adherence

- Code maintainability scores: 35% improvement

- Technical debt reduction: 40% fewer architectural issues

Developer Productivity Gains Across Experience Levels

The productivity benefits extend beyond individual developers to entire teams. Junior developers receive educational feedback instantly, accelerating their learning curve and reducing mentorship overhead for senior team members.

Team collaboration improves as faster feedback enables more iterative development approaches. Enterprises report $50,000–$100,000 annual savings per 10-developer team after deploying AI review, mostly from reduced bug fixing and faster releases.

Implementation Strategies for AI Code Review Systems

Successfully deploying AI code review requires thoughtful integration with existing workflows. Most modern AI review platforms offer plug-and-play connections with GitHub, GitLab, and Bitbucket, streamlining integration with current processes.

The key is gradual adoption rather than wholesale replacement. Most successful implementations introduce AI review alongside human review, building team confidence in automated recommendations before increasing automation levels.

Technology Stack Integration Made Simple

Integration timeline for typical teams:

- Week 1-2: Platform setup and basic configuration

- Week 3-4: Custom rule configuration and team training

- Week 5-6: Pilot testing with volunteer developers

- Week 7-8: Full team rollout and optimization

Modern AI code review platforms integrate seamlessly with existing Git workflows through native APIs. CI/CD pipeline integration ensures automated reviews trigger before manual review requests, optimizing the review sequence for maximum efficiency.
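The sequencing described here (automated review first, human review second) can be sketched as a small decision function that a CI job or bot might call. The status names and returned action strings are hypothetical; a real integration would map them onto the Git platform's own status checks and reviewer-request APIs.

```python
from enum import Enum


class AIReview(Enum):
    """Hypothetical states of the automated review check on a PR."""
    PENDING = "pending"
    PASSED = "passed"
    BLOCKING = "blocking"  # the AI review found issues that must be fixed


def next_action(ai_review: AIReview, tests_passed: bool) -> str:
    """Return the next step for a PR when AI review runs before human review."""
    if not tests_passed:
        return "return_to_author"      # failing CI: no reviewer time spent yet
    if ai_review is AIReview.PENDING:
        return "wait_for_ai_review"    # do not ping humans until AI feedback exists
    if ai_review is AIReview.BLOCKING:
        return "return_to_author"      # author addresses AI findings first
    return "request_human_review"      # humans see a PR that is already cleaned up


print(next_action(AIReview.PASSED, tests_passed=True))
```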

IDE plugins provide real-time AI suggestions during code writing, preventing issues before PR submission.

Team Adoption Best Practices: Change Management Matters

Change management makes or breaks AI review adoption. “Change management is about trust—framing AI review as an enabler, not a replacement, wins developer buy-in much faster,” explains Ha Nguyen Ngoc, Marketing Director at SmartDev.

Successful adoption strategies:

- Start with volunteer teams willing to experiment

- Provide hands-on training sessions for AI feedback interpretation

- Track and share productivity metrics improvements

- Maintain human oversight for critical decisions

- Gather feedback and iterate on configuration

A Singapore-based telecom company piloted AI code review for one product team, achieving 60% higher adoption rates after training and workshops versus teams with unassisted rollout.

Change Management Considerations for Long-Term Success

Clear communication about AI augmenting rather than replacing human reviewers reduces team resistance to adoption. Best-in-class firms track review cycle time, developer satisfaction, and defect rates before and after AI code review deployment as key performance indicators.
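As a sketch of the first of these KPIs, the snippet below computes median review cycle time, measured here as the time from PR open to first review, for a before and an after sample. The timestamp format and sample data are hypothetical. The median is used because a few long-stalled PRs can distort an average.

```python
from datetime import datetime
from statistics import median

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M"  # assumed export format


def cycle_time_hours(opened: str, first_review: str) -> float:
    """Hours between a PR being opened and its first review."""
    start = datetime.strptime(opened, TIMESTAMP_FORMAT)
    end = datetime.strptime(first_review, TIMESTAMP_FORMAT)
    return (end - start).total_seconds() / 3600


def median_cycle_time(prs: list[tuple[str, str]]) -> float:
    """Median cycle time for a list of (opened, first_review) pairs."""
    return median([cycle_time_hours(opened, reviewed) for opened, reviewed in prs])


# Hypothetical samples exported before and after rollout.
before = [
    ("2024-03-04T09:00", "2024-03-06T09:00"),  # 48 h
    ("2024-03-05T10:00", "2024-03-06T10:00"),  # 24 h
    ("2024-03-07T08:00", "2024-03-09T20:00"),  # 60 h
]
after = [
    ("2024-06-03T09:00", "2024-06-04T01:00"),  # 16 h
    ("2024-06-04T10:00", "2024-06-04T18:00"),  # 8 h
    ("2024-06-05T08:00", "2024-06-06T04:00"),  # 20 h
]
print(median_cycle_time(before), median_cycle_time(after))
```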

Metrics tracking demonstrates tangible productivity improvements, building organizational support for AI code review investment. Feedback loops between AI recommendations and human decisions improve system accuracy over time.

Real-World Performance Data and Case Studies

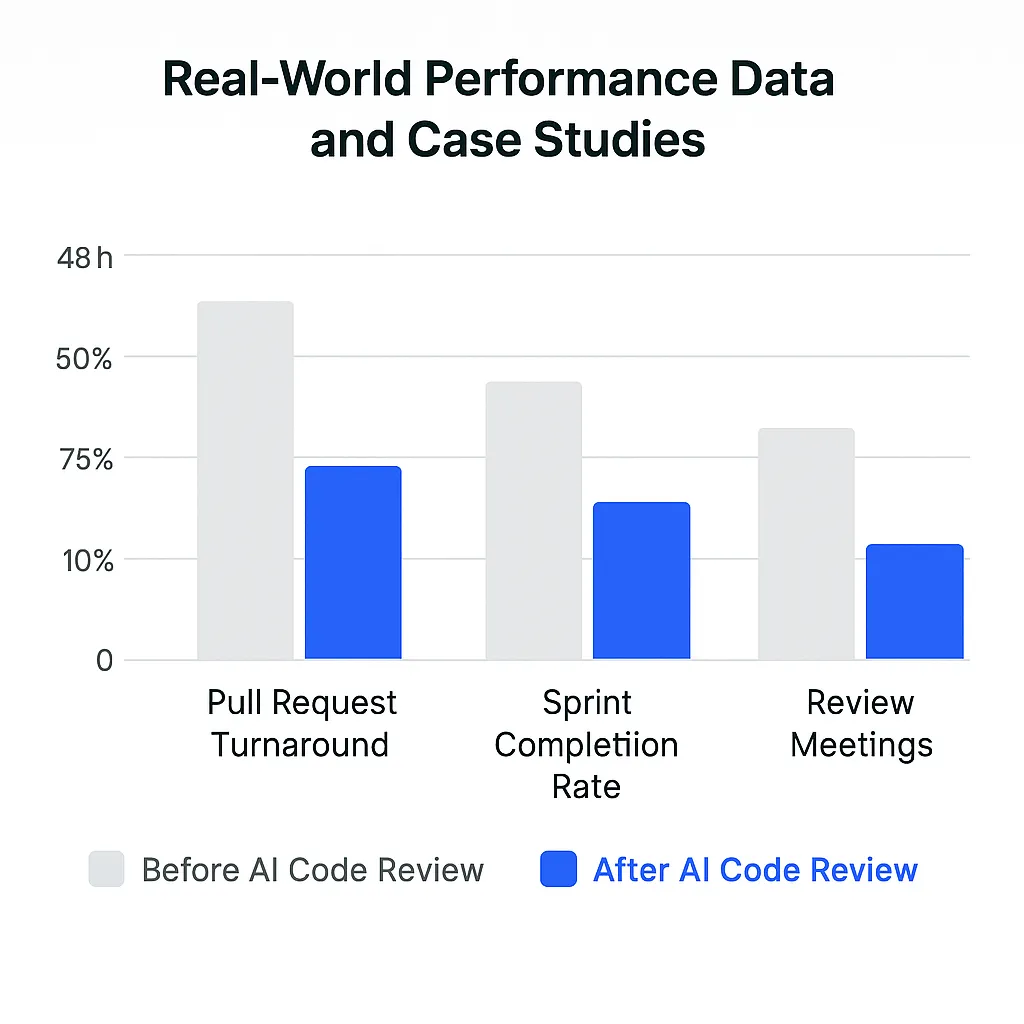

The real-world results from AI code review implementations consistently exceed expectations. SmartDev-reported case studies show enterprises achieving reductions in PR turnaround times from 48 hours to an average of 16 hours within 90 days.

Teams documented a 20-25% improvement in sprint completion rates following AI code review adoption, with review-related meetings dropping by 50% as clearer, automated feedback replaced repetitive clarification discussions.

Fig.3 Before and after AI code review adoption

Enterprise Implementation Results That Scale

Fortune 500 companies report consistent results across different technology stacks and team sizes. After a phased rollout of AI review tools, a Fortune 500 bank reported $85,000 in annual savings per 10 developers and a 35% improvement in code quality scores.

Development teams report fundamental changes in how they approach code quality. “With AI handling routine checks, our senior engineers now dedicate 70% of their time to innovation instead of reviews,” notes Luan Nguyen, General Director at SmartDev.

ROI and Cost Analysis: Payback Within Months

The financial benefits are measurable and arrive quickly. Enterprise-scale customers typically realize complete payback on AI code review platforms within 3-6 months, attributed to cycle time compression and bug resolution savings.
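Payback period is the upfront cost divided by the monthly net savings. The sketch below makes that explicit; every input is a placeholder to be replaced with your own figures (annual savings could, for example, come from the $50,000 to $100,000 per 10-developer range quoted earlier), and it deliberately ignores discounting and ramp-up time.

```python
import math


def payback_months(upfront_cost: float, monthly_fee: float, annual_savings: float) -> float:
    """Months until cumulative net savings cover the upfront cost.

    Returns math.inf when monthly savings do not exceed the running fee.
    Ignores discounting and ramp-up time (simplifying assumptions).
    """
    monthly_net = annual_savings / 12 - monthly_fee
    if monthly_net <= 0:
        return math.inf
    return upfront_cost / monthly_net


# Placeholder inputs: replace with your own setup cost, subscription fee, and savings.
for savings in (50_000, 100_000):
    months = payback_months(upfront_cost=20_000, monthly_fee=1_000, annual_savings=savings)
    print(f"annual savings ${savings:,}: payback in {months:.1f} months")
```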

Cost savings breakdown:

- Reduced debugging time: 40% fewer production issues

- Faster release cycles: 25% improvement in time-to-market

- Improved resource utilization: Senior developers focus on high-value work

- Lower training costs: Junior developers learn faster with instant feedback

Beyond direct cost savings, organizations make better use of senior talent: developers who previously spent around 40% of their time on code review can devote up to 70% of it to feature development and architecture work, maximizing the value of expensive technical talent.

Specific Performance Benchmarks Across Industries

The quality improvements are as impressive as the speed gains. Security vulnerability detection rates improve significantly over manual review, while developer satisfaction scores rise by 40% due to faster feedback cycles and reduced waiting times.

Industry-specific results:

- Financial services: 38% reduction in security vulnerabilities

- Healthcare software: 35% improvement in compliance adherence

- E-commerce platforms: 30% faster feature deployment cycles

- SaaS companies: 25% reduction in customer-reported bugs

Code quality scores improve by 35% as measured by technical debt metrics and maintainability indices. The consistency gains alone justify the investment for most organizations.

Overcoming Common Implementation Challenges

Every AI code review implementation faces predictable challenges, but they are solvable with proper planning. Integrating AI review with legacy systems typically requires 2-3 weeks of API and webhook adjustments, while new projects can usually be connected without that effort.
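Webhook handling is a common part of these adjustments. As one example, GitHub signs each webhook delivery with HMAC-SHA256 using the secret configured for the webhook and sends the result in the `X-Hub-Signature-256` header as `sha256=<hex digest>`; a review service should verify it before acting on the payload. A minimal sketch using only Python's standard library; the function name and example secret are hypothetical, and other platforms document their own verification schemes.

```python
import hashlib
import hmac


def verify_github_signature(secret: bytes, body: bytes, signature_header) -> bool:
    """Check a GitHub webhook's X-Hub-Signature-256 header against the raw body."""
    if not signature_header or not signature_header.startswith("sha256="):
        return False
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # compare_digest is designed to resist timing attacks on the comparison.
    return hmac.compare_digest(expected.encode(), signature_header.encode())


secret = b"example-webhook-secret"  # hypothetical; load real secrets from configuration
body = b'{"action": "opened"}'
header = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
print(verify_github_signature(secret, body, header))
```

Verification must run on the raw request bytes, before any JSON parsing, because re-serializing the payload can change the bytes that were signed.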

The biggest challenge is usually team adoption rather than technical integration. Pilot programs with volunteer teams lead to adoption rates up to 60% higher than forced rollouts across entire organizations.

Technical Integration Hurdles Are Temporary

Large codebases may initially slow AI analysis, but optimization techniques bring review times back to sub-30-second responses. The AI's learning period for custom coding standards averages 2-4 weeks, after which it reaches team-specific accuracy benchmarks.
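One common optimization, sketched here as an assumption rather than a description of any specific product, is to review only the lines a PR actually changes. The parser below extracts, per file, the new-file line numbers added in a unified diff. It tracks the hunk line counts so that an added line whose content starts with `++ ` is not mistaken for a `+++` file header.

```python
import re

# Unified-diff hunk header: @@ -old_start[,old_count] +new_start[,new_count] @@
HUNK_HEADER = re.compile(r"^@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@")


def changed_lines(diff_text: str) -> dict[str, set[int]]:
    """Map each file in a unified diff to the new-file line numbers that were added."""
    result: dict[str, set[int]] = {}
    current_file = None
    new_line = 0
    old_left = new_left = 0  # lines still expected in the current hunk
    for line in diff_text.splitlines():
        if old_left > 0 or new_left > 0:
            if line.startswith("+"):
                if current_file is not None:
                    result[current_file].add(new_line)
                new_line += 1
                new_left -= 1
            elif line.startswith("-"):
                old_left -= 1
            elif line.startswith("\\"):
                pass  # "\ No newline at end of file" marker
            else:  # context line, present in both versions
                new_line += 1
                old_left -= 1
                new_left -= 1
            continue
        if line.startswith("+++ "):
            path = line[4:].split("\t")[0]
            if path == "/dev/null":
                current_file = None  # the file was deleted
            else:
                current_file = path[2:] if path.startswith("b/") else path
                result.setdefault(current_file, set())
            continue
        match = HUNK_HEADER.match(line)
        if match:
            old_left = int(match.group(2) or 1)  # omitted count means 1
            new_line = int(match.group(3))
            new_left = int(match.group(4) or 1)
    return result


sample = "\n".join([
    "--- a/app.py",
    "+++ b/app.py",
    "@@ -1,3 +1,4 @@",
    " import os",
    "-x = 1",
    "+x = 2",
    "+y = 3",
    " print(x)",
])
print(changed_lines(sample))
```

A reviewer can then restrict its analysis (or at least its comments) to these line sets instead of re-scanning every file in the repository.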

Common technical challenges and solutions:

- Legacy system compatibility: API customization resolves within 2-3 weeks

- Large codebase performance: Optimization techniques ensure fast responses

- Custom rule configuration: Template-based setup accelerates deployment

- Security compliance: Enterprise-grade platforms meet SOC 2 and GDPR requirements

The key is starting with newer projects while legacy integration proceeds in parallel.

Team Resistance and Adoption Issues Have Solutions

Developer skepticism decreases when AI recommendations demonstrate clear value and accuracy improvements over the initial implementation period. Training programs focusing on AI-human collaboration rather than replacement messaging improve adoption rates significantly.

“Transparency, measurement, and human oversight are key for building trust in AI code review. Developers must be empowered to give feedback to the system,” emphasizes Alistair Copeland, CEO at SmartDev.

A logistics software company rolled out feedback scoring on AI suggestions, which raised reviewer trust and increased partial automation of minor code fixes within 6 months.

Quality Control and Trust Building Take Time

Human oversight mechanisms ensure AI recommendations undergo validation before automatic implementation in critical codebases. Feedback scoring systems allow developers to rate AI suggestion quality, continuously improving recommendation accuracy.
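A feedback-scoring loop can be as simple as counting helpful and unhelpful votes per rule and muting rules that developers consistently reject. This is a hypothetical sketch; the class name, rule IDs, and default thresholds are illustrative assumptions.

```python
from collections import defaultdict
from typing import Optional


class SuggestionFeedback:
    """Aggregate developer ratings of AI suggestions per rule (hypothetical design)."""

    def __init__(self, min_votes: int = 10, min_acceptance: float = 0.3):
        self.min_votes = min_votes            # don't judge a rule on too few ratings
        self.min_acceptance = min_acceptance  # below this helpful share, mute the rule
        self.votes = defaultdict(lambda: [0, 0])  # rule_id -> [helpful, total]

    def record(self, rule_id: str, helpful: bool) -> None:
        counts = self.votes[rule_id]
        counts[0] += int(helpful)
        counts[1] += 1

    def acceptance(self, rule_id: str) -> Optional[float]:
        helpful, total = self.votes.get(rule_id, (0, 0))
        return helpful / total if total else None

    def should_suppress(self, rule_id: str) -> bool:
        helpful, total = self.votes.get(rule_id, (0, 0))
        return total > 0 and total >= self.min_votes and helpful / total < self.min_acceptance


feedback = SuggestionFeedback(min_votes=4, min_acceptance=0.5)
for helpful in (False, False, True, False):
    feedback.record("style/long-line", helpful)
feedback.record("security/sql-injection", True)
print(feedback.acceptance("style/long-line"), feedback.should_suppress("style/long-line"))
```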

Teams commonly move gradually from suggestion-only mode to semi-automatic merging, at a pace set by each team's confidence in the system. This approach builds trust while delivering immediate productivity benefits.
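This staged rollout can be expressed as a policy that picks an automation level from a team's track record. In this hypothetical sketch the level names and thresholds are placeholders, not recommendations; the four-week minimum echoes the 2-4 week learning period mentioned above, and critical codebases stay suggestion-only so that humans validate every change.

```python
from enum import Enum


class AutomationLevel(Enum):
    SUGGEST_ONLY = 1     # AI comments; humans apply every change
    AUTO_FIX_MINOR = 2   # AI may push fixes for trivial findings
    SEMI_AUTO_MERGE = 3  # low-risk PRs may merge after an AI pass plus human approval


def choose_level(acceptance_rate: float, weeks_in_use: int,
                 critical_codebase: bool) -> AutomationLevel:
    """Pick an automation level from a team's history (hypothetical thresholds)."""
    if critical_codebase or weeks_in_use < 4 or acceptance_rate < 0.6:
        return AutomationLevel.SUGGEST_ONLY
    if acceptance_rate < 0.85:
        return AutomationLevel.AUTO_FIX_MINOR
    return AutomationLevel.SEMI_AUTO_MERGE


print(choose_level(acceptance_rate=0.9, weeks_in_use=8, critical_codebase=False))
```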

Future Trends in AI-Powered Development Workflows

AI code review is just the beginning of AI-powered development transformation. Industry projections suggest that AI-assisted development tools will see widespread adoption by 2027, with significant market growth expected through 2032.

The integration of AI throughout the development lifecycle represents a fundamental shift in how software gets built and maintained.

Advanced AI Capabilities on the Horizon

Next-generation AI systems will provide architectural recommendations and refactoring suggestions beyond line-level code improvements. Integration with business requirements will enable AI to assess code changes against functional specifications and user stories.

“The next frontier for AI is architectural review and business logic validation—line-by-line advice will evolve into holistic system guidance,” predicts Nguyen Le, COO at SmartDev.

Emerging capabilities include:

- Architectural pattern recognition and suggestions

- Business logic validation against requirements

- Predictive analytics for integration issues

- Automated refactoring recommendations

- Cross-team code consistency enforcement

Predictive analytics will identify potential integration issues before code merging, preventing downstream development problems that currently cost teams days of debugging effort.

Fig.4 AI development workflow evolution

Industry Evolution Predictions Point to Hybrid Workflows

The industry is moving toward hybrid human-AI review workflows that combine automated efficiency with human creativity and judgment. Real-time collaboration between developers, reviewers, and AI will become standard practice for high-performing teams.

AI review will become as standard as version control, with teams expecting instant feedback and intelligent suggestions as basic development infrastructure.

Competitive Advantages for Early Adopters Are Real

Organizations implementing AI code review now gain significant competitive advantages. Early adopters report a 6-12 month lead in product release velocity over competitors in their sectors after adopting AI review.

First-mover advantages include:

- Faster time-to-market for new features

- Higher developer satisfaction and retention

- Better code quality and security posture

- Improved resource allocation efficiency

- Enhanced ability to scale development teams

Organizations using modern AI toolchains for code review report 22% lower developer turnover and higher morale, as developers spend less time blocked by frustrating delays and more time on engaging work.

Market responsiveness increases as faster development cycles enable quicker feature releases and customer feedback incorporation. The first-mover advantage in AI adoption is real and measurable.

Ready to achieve 3x faster pull request turnaround? SmartDev’s AI-powered development services have helped over 300 organizations accelerate their development workflows while improving code quality. Contact our AI consulting team to discuss how AI code review can transform your development productivity.