As industries embrace digital transformation, the integration of Artificial Intelligence (AI) into Internet of Things (IoT) and manufacturing systems is becoming essential for improving efficiency, reducing downtime, and enhancing decision-making. However, deploying AI models at the edge in resource-constrained environments poses unique technical challenges. To achieve seamless AI performance at the edge, it is crucial to optimize these models.

In this blog, we’ll explore key strategies for optimizing AI model performance for edge deployment in IoT and manufacturing applications, with an emphasis on model compression techniques and AI inference optimization.

The Role of AI in IoT and Manufacturing

AI is transforming IoT and manufacturing industries by enabling devices to make intelligent decisions locally, improving efficiency, and enhancing automation. Deploying AI at the edge provides significant advantages in terms of real-time processing, cost savings, and operational efficiency.

AI in IoT: Real-Time Data Processing

In IoT applications, edge devices generate vast amounts of data in real time. To make timely and intelligent decisions, AI models are deployed at the edge of the network, ensuring that data is processed locally with minimal latency.

- Low Latency: Edge AI enables real-time decision-making by reducing the delay involved in transmitting data to the cloud. For instance, in smart homes, AI-powered devices can control lighting, heating, and security systems based on real-time data without needing to send information to the cloud.

- Event-Driven Automation: In industrial IoT (IIoT), edge devices can detect anomalies in real time. They can also trigger automated actions like shutting down equipment, alerting operators, or initiating maintenance requests.

AI in Manufacturing: Enhancing Efficiency and Productivity

In manufacturing, AI-driven solutions at the edge help optimize operations, reduce downtime, and improve the overall quality of products. AI models deployed at the edge are crucial for predictive maintenance, real-time monitoring, and intelligent automation.

- Predictive Maintenance: AI models on edge devices can monitor machine performance and predict failures before they occur. For example, sensors embedded in machines collect data on temperature, vibrations, and pressure. AI models analyze this data locally and predict when a part is likely to fail, reducing unplanned downtime and maintenance costs.

- Quality Control: AI-based computer vision systems at the edge can inspect products in real time as they move down production lines. These systems can identify defects, ensuring that only high-quality products reach consumers while also reducing the need for manual inspections.

- Smart Factory Automation: AI-driven robots and automation systems in factories rely on edge AI to perform tasks such as assembly, packaging, and inventory management. These systems make intelligent decisions based on sensor data and are capable of adapting to changing conditions in the factory environment.

Edge AI for Cost Reduction and Energy Efficiency

In both IoT and manufacturing applications, edge AI helps reduce operational costs and optimize energy usage. Processing data locally at the edge minimizes the need for cloud communication, which not only saves bandwidth costs but also reduces energy consumption.

- Bandwidth and Communication Savings: By processing and analyzing data on the edge device, only essential data is transmitted to the cloud, reducing network bandwidth requirements. This is particularly beneficial for remote or rural locations where network bandwidth may be limited or costly.

- Energy Savings: Edge AI can optimize energy consumption in both industrial and IoT systems. For instance, AI models can dynamically adjust the energy usage of machines based on operational conditions, reducing energy waste during non-peak periods.

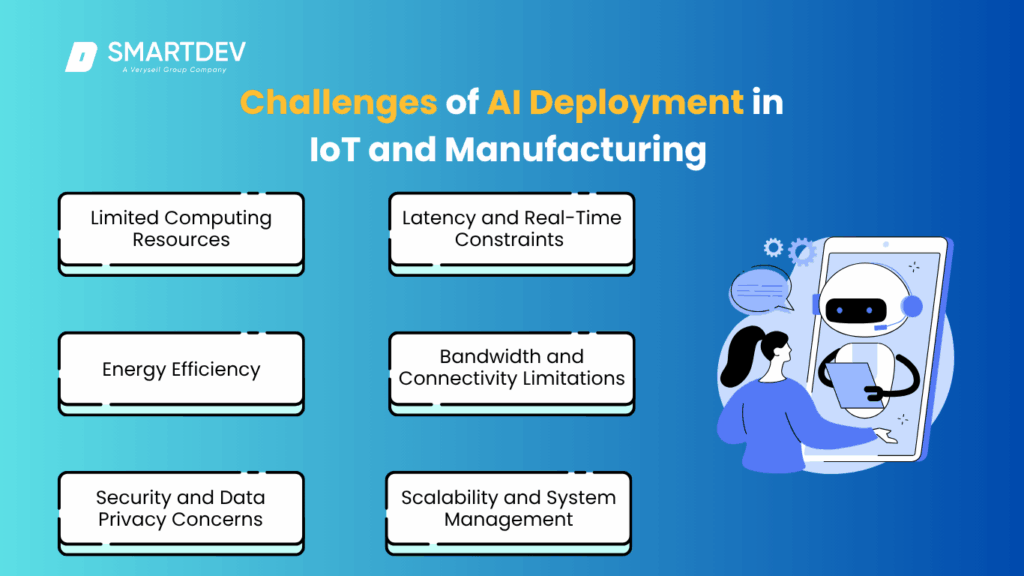

The Challenge of Edge Deployment in IoT and Manufacturing

Edge deployment involves processing data near its source rather than relying on centralized cloud servers, which is crucial for IoT and manufacturing applications requiring real-time decision-making and low-latency processing. However, deploying AI in these environments comes with challenges due to resource constraints and other factors. Below, we explore these challenges and the need for optimizing AI models for edge deployment.

1. Limited Computing Resources

Edge devices, such as sensors, industrial machines, or robots, often have limited processing power, memory, and storage compared to cloud systems. In cloud-based setups, AI models can rely on powerful servers to handle heavy computations. But for edge deployment, these models need to be highly optimized to work within the constraints of devices with minimal CPU, RAM, and storage.

AI models that require substantial resources can cause slow inference, higher energy consumption, and performance issues on these devices. Therefore, optimizing AI models for the edge, such as reducing their size, complexity, and resource demands, is crucial for efficient operation in such environments.

2. Latency and Real-Time Constraints

Real-time data processing is essential in many IoT and manufacturing applications, such as predictive maintenance and quality control. AI models deployed on the edge need to analyze data quickly and trigger actions to prevent delays that could affect production or safety.

While edge deployment reduces latency by processing data close to the source, AI models designed for the cloud may not be optimized for quick inference on edge devices. To meet real-time requirements, edge AI models must be optimized to run efficiently with minimal latency, which involves model compression, hardware acceleration, and reducing computational overhead.

3. Energy Efficiency

Edge devices often operate in power-constrained environments, such as battery-powered IoT sensors or automated robots in manufacturing settings. Running complex AI models on such devices can quickly drain power, affecting device longevity and increasing maintenance costs.

Energy efficiency is critical in these cases, requiring AI models to be optimized for low power consumption. Techniques like model compression, using low-power processors, and dynamic voltage and frequency scaling (DVFS) can help balance performance with energy conservation, allowing devices to run AI models for longer periods without frequent recharging.

4. Bandwidth and Connectivity Limitations

In many industrial or remote IoT settings, network connectivity can be limited or unreliable, which poses challenges for transmitting large data sets to the cloud. Edge devices need to process data locally to avoid delays and inefficiencies caused by network disruptions.

AI models at the edge must be able to operate autonomously with minimal reliance on external systems. By processing and analyzing data on-device, edge devices can continue to function even when connectivity is poor or lost, ensuring continuous operations in critical applications such as predictive maintenance or real-time monitoring.

5. Security and Data Privacy Concerns

The distributed nature of edge computing increases the vulnerability to cyber threats, as sensitive data is processed and stored locally. Securing AI models and devices becomes essential to protect against unauthorized access, data breaches, and adversarial attacks.

In IoT and manufacturing systems, ensuring that AI models comply with privacy regulations and are resistant to data manipulation is crucial. Multi-layered security measures, such as encryption, secure authentication, and secure firmware, are necessary to protect data and ensure safe deployment of AI at the edge.

6. Scalability and System Management

Large-scale IoT and manufacturing deployments can involve hundreds or thousands of edge devices that require monitoring, management, and periodic updates. As the number of devices grows, ensuring that the overall system performs efficiently becomes more challenging.

Managing firmware and software updates across a vast network of edge devices without disrupting operations is critical. On the training side, federated learning, in which edge devices collaboratively train a shared model while keeping their data local, can help scale AI across many devices while preserving data privacy and reducing bandwidth usage.

AI Model Optimization for Edge Deployment

To ensure that AI models perform optimally in these resource-constrained environments, several optimization techniques can be applied. Let’s explore some of the most effective strategies.

Model Compression Techniques for Edge AI

When deploying AI models on edge devices, especially in resource-constrained environments like IoT or manufacturing applications, it’s crucial to reduce the size and complexity of models. Model compression techniques are key to achieving this goal, allowing AI systems to run efficiently with little or no loss in accuracy.

1. Quantization

Quantization is one of the most common techniques for reducing the memory and computational footprint of AI models. By lowering the precision of the model’s weights and activations, quantization reduces both the storage requirements and the computation overhead. In typical deep learning models, weights are represented using 32-bit floating point numbers. Through quantization, these weights can be represented with fewer bits—such as 8-bit integers—without significantly affecting the model’s performance.

- Benefits: Reduces memory usage and speeds up inference. It allows AI models to run faster on edge devices with limited resources.

- Challenges: The precision loss from quantization may affect the accuracy of the model, especially in cases where fine-grained computations are necessary. Careful calibration is needed to ensure minimal impact on performance.
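To make the 32-bit to 8-bit mapping concrete, here is a minimal sketch of affine (scale plus zero-point) int8 quantization in plain Python. It assumes a single scale and zero point for the whole tensor; toolchains such as TensorFlow Lite or OpenVINO handle calibration, per-channel scales, and integer kernels for you. The function names are illustrative, not taken from any library.

```python
def choose_qparams(values, qmin=-128, qmax=127):
    """Pick a scale and zero point that map [min, max] onto the int8 range."""
    lo = min(min(values), 0.0)  # the range must contain 0 so that 0.0 is exact
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # guard against an all-zero tensor
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point

def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    # Round to the nearest step, shift by the zero point, clamp to int8.
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]

def dequantize(q, scale, zero_point):
    # Map integers back to approximate float values.
    return [(x - zero_point) * scale for x in q]

weights = [-0.82, -0.10, 0.0, 0.37, 1.25]
scale, zp = choose_qparams(weights)
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, round(max_err, 4))
```

The round-trip error stays within roughly one quantization step (`scale`). That per-weight error is the accuracy cost the "Challenges" bullet refers to, and calibration aims to keep it small where it matters.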

2. Pruning

Pruning involves removing certain parameters (usually weights) from a trained AI model that are less important or contribute minimally to its performance. This technique works by identifying weights that have little to no effect on the output, essentially “sparsifying” the model. By reducing the number of active parameters, pruning reduces both the model’s size and the computation needed for inference.

- Benefits: Reduces model size and computational cost. Inference speedups are largest with structured pruning (removing whole channels or filters) or on runtimes that exploit sparsity, which matters for real-time edge applications.

- Challenges: Excessive pruning can degrade the model’s accuracy, so it’s essential to strike a balance between pruning and performance.
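One common pruning criterion is weight magnitude: the smallest weights are assumed to matter least. The sketch below shows unstructured magnitude pruning on a flat list of weights. The function name and the 50% sparsity target are illustrative assumptions. In practice pruning is usually applied gradually and followed by fine-tuning to recover accuracy.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest |w|."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    # Binary mask: 0 = pruned, 1 = kept. Keeping it lets training skip pruned weights.
    mask = [0 if i in to_prune else 1 for i in range(len(weights))]
    pruned = [w if m else 0.0 for w, m in zip(weights, mask)]
    return pruned, mask

weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2, -0.02, 0.6]
pruned, mask = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

Zeroed weights only save memory or time if the model is stored in a sparse format or executed by kernels that skip zeros. This is why structured pruning, which removes whole rows, channels, or filters, is often preferred for edge targets.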

3. Knowledge Distillation

Knowledge distillation involves transferring knowledge from a large, complex model (the teacher) to a smaller, simpler model (the student). The larger model is typically more accurate but too resource-intensive for edge devices, while the smaller student model is easier to deploy. During the training process, the student model learns to replicate the behavior of the teacher model, capturing its key features and achieving similar performance with fewer resources.

- Benefits: Allows edge devices to run lightweight models without significant loss in accuracy, enabling fast, efficient AI inference at the edge.

- Challenges: Requires careful training and fine-tuning to ensure the student model approximates the teacher model’s performance.
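The training signal behind distillation is commonly a blend of two terms. The first is the KL divergence between the teacher's and student's temperature-softened output distributions. The second is the usual cross-entropy against the true label. The sketch below computes that loss for a single example in plain Python. The temperature of 4 and the 0.5 weighting are illustrative assumptions, and a real implementation would compute the loss on tensors inside a framework with automatic differentiation.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Blend soft-target KL(teacher || student) with hard-label cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    # Cross-entropy of the student against the ground-truth class at T = 1.
    ce = -math.log(softmax(student_logits)[label])
    # Scaling by T^2 keeps the soft-target term comparable in magnitude as T changes.
    return alpha * (temperature ** 2) * kl + (1 - alpha) * ce

teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.2, 0.3]
print(round(distillation_loss(student, teacher, label=0), 4))
```

A higher temperature exposes more of the teacher's "dark knowledge", meaning how it ranks the wrong classes. That is often what lets a small student approach the teacher's accuracy.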

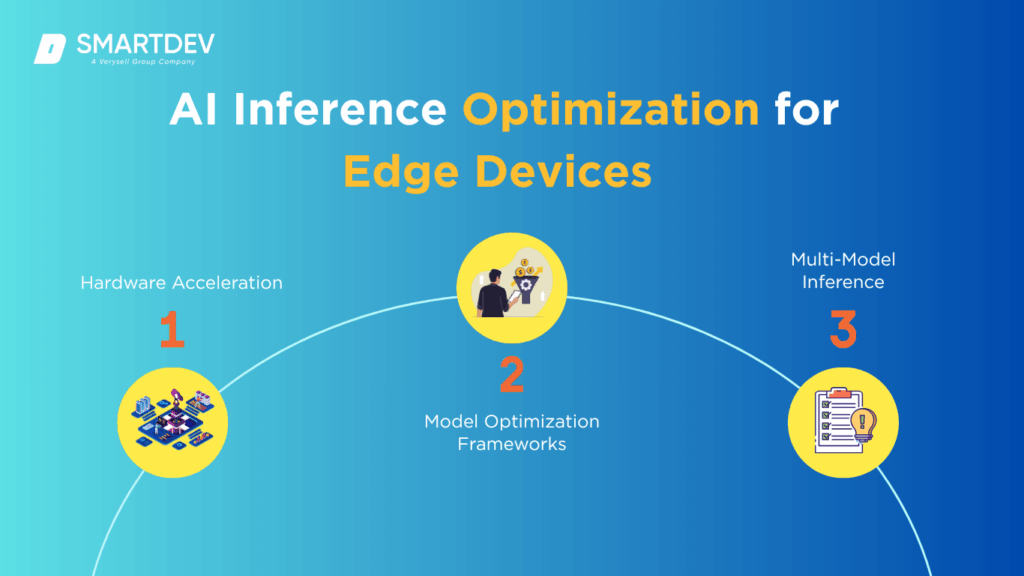

AI Inference Optimization for Edge Devices

Once models are compressed for edge deployment, the next critical step is to optimize how the model performs inference on the edge device. AI inference optimization ensures that models can run efficiently and with minimal latency in real-time applications.

1. Hardware Acceleration

AI inference on edge devices can benefit significantly from specialized hardware accelerators such as GPUs, TPUs, FPGAs, or edge AI platforms like Nvidia Jetson (embedded GPU modules) or Google Coral (built around the Edge TPU). These accelerators are designed to handle the computational demands of AI models, allowing for faster processing and often lower energy consumption per inference.

- Benefits: Specialized hardware accelerates AI computations, reducing inference time and enabling real-time decision-making, which is essential in IoT and manufacturing systems.

- Challenges: Integrating hardware accelerators requires compatibility with the edge device’s architecture, and may involve additional costs and development effort for deployment.

2. Model Optimization Frameworks

There are several tools and frameworks specifically designed to optimize models for edge deployment. TensorFlow Lite, OpenVINO, and ONNX Runtime (which executes models in the open ONNX format) are popular choices for converting and optimizing models for edge devices. These tools typically reduce model size, optimize inference performance, and convert models into formats suited to low-power, resource-constrained hardware.

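- Benefits: These frameworks are built to maximize performance on edge hardware, typically offering quantization support and hardware-specific execution backends; pruning is usually handled by companion toolkits such as the TensorFlow Model Optimization Toolkit or OpenVINO’s NNCF.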

- Challenges: While these frameworks simplify deployment, they may require specific adjustments or modifications to the original model to achieve optimal performance.

3. Multi-Model Inference

In some cases, edge devices need to run multiple AI models simultaneously for different tasks. Optimizing multi-model inference involves designing efficient ways for an edge device to handle several models at once, ensuring that all models run in parallel without overloading the system’s resources.

- Benefits: Enables edge devices to perform a wide range of tasks simultaneously, such as object detection, classification, and anomaly detection, without needing to offload computation to the cloud.

- Challenges: Running multiple models can quickly exhaust system resources like memory and processing power. Effective load balancing and resource management strategies are needed to ensure smooth operation.
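One simple resource-management pattern for multi-model inference is admission control. Every model submits work through a shared gate that caps how many inferences run at once, so several models cannot exhaust memory or the accelerator together. The sketch below uses a semaphore for this. The three "models" are hypothetical stand-in functions, and the cap of two concurrent inferences is an arbitrary assumption.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

class BoundedInferenceRunner:
    """Run several models on one device while capping concurrent inferences."""

    def __init__(self, max_concurrent):
        self._slots = threading.Semaphore(max_concurrent)
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0  # highest number of simultaneous inferences observed

    def run(self, model, inputs):
        with self._slots:  # block until an inference slot is free
            with self._lock:
                self.active += 1
                self.peak = max(self.peak, self.active)
            try:
                return model(inputs)
            finally:
                with self._lock:
                    self.active -= 1

# Hypothetical stand-ins for a detector, a classifier, and an anomaly scorer.
def detect(x):
    return ("detect", sum(x))

def classify(x):
    return ("classify", max(x))

def anomaly(x):
    return ("anomaly", min(x))

runner = BoundedInferenceRunner(max_concurrent=2)
frame = [3, 1, 4, 1, 5]
with ThreadPoolExecutor(max_workers=3) as pool:
    futures = [pool.submit(runner.run, m, frame) for m in (detect, classify, anomaly)]
    results = [f.result() for f in futures]
print(results, runner.peak)
```

Real edge runtimes add priorities, for example letting a safety-critical detector preempt a background classifier, and per-model memory budgets. The gate shown here only illustrates the basic idea of bounding contention.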

Energy Efficiency and Deployment Considerations

Edge AI deployments often occur in environments where energy consumption is a critical concern, such as battery-powered IoT sensors or autonomous devices in industrial settings. Optimizing energy efficiency ensures that AI systems can operate for extended periods without requiring frequent recharging or maintenance.

1. Low-Power Processors and Chips

One of the most effective ways to optimize energy efficiency is by using low-power processors or AI chips designed specifically for edge applications. Processors like ARM-based CPUs, Intel Movidius, or Nvidia Jetson TX2 are optimized for low power consumption while maintaining adequate processing power for AI workloads.

- Benefits: Low-power chips are designed to perform AI tasks efficiently, allowing edge devices to run AI models for longer periods without draining the battery.

- Challenges: While energy-efficient chips reduce power consumption, they may still have limitations in terms of raw processing power compared to larger server-based GPUs or CPUs. Selecting the right chip is essential for balancing performance and power needs.

2. Dynamic Voltage and Frequency Scaling (DVFS)

DVFS is a technique that dynamically adjusts the voltage and frequency of the processor based on workload demands. During periods of low activity, the system can scale down its voltage and frequency to conserve energy. When computational demands increase (for example, during complex inference tasks), the system can ramp up power to handle the load.

- Benefits: DVFS helps extend battery life in IoT and mobile devices by reducing power consumption when the device is idle or under low computational load.

- Challenges: Implementing DVFS requires careful monitoring of system performance to ensure that the scaling doesn’t impact real-time inference capabilities.
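One way to respect real-time constraints under DVFS is to choose the lowest operating frequency that still meets the inference deadline. Dynamic power scales roughly with V² · f, and lower frequencies permit lower voltages, so running "just fast enough" usually saves energy. The sketch below shows only that policy decision. The operating points and cycle counts are hypothetical, and on Linux the chosen frequency would actually be applied through the cpufreq subsystem or a vendor tool.

```python
def pick_frequency(cycles_needed, deadline_s, available_hz):
    """Return the lowest available frequency that completes the job within the deadline."""
    for f in sorted(available_hz):
        if cycles_needed / f <= deadline_s:
            return f
    # Deadline cannot be met at any operating point: fall back to the fastest one.
    return max(available_hz)

freqs = [400e6, 800e6, 1.2e9, 1.8e9]  # hypothetical CPU operating points in Hz
print(pick_frequency(30e6, 0.050, freqs))  # light inference: 30M cycles, 50 ms deadline
print(pick_frequency(50e6, 0.050, freqs))  # heavier inference, same deadline
```

The cycle count per inference would come from profiling on the target device. A production governor would also add headroom for timing jitter and for the cost of switching frequencies.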

3. Edge Data Processing and Local Storage

Another important aspect of energy efficiency is optimizing data processing on the edge. Instead of continuously transmitting raw data to the cloud for analysis, edge devices can process and filter data locally. By performing local analysis, only essential data or results need to be sent to the cloud, significantly reducing network traffic and energy consumption.

- Benefits: Local data processing reduces the energy consumption associated with data transmission and ensures that only the most relevant information is sent to centralized systems.

- Challenges: Edge devices must be capable of processing and storing data locally, which can require additional resources. It’s also important to manage the quality and relevance of data being processed to avoid unnecessary energy expenditure.
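A simple form of local filtering is report-by-exception, also called a deadband. The device transmits a reading only when it differs from the last transmitted value by more than a threshold. The sketch below assumes readings arrive as `(timestamp, value)` pairs. The 0.5-degree threshold and the function name are illustrative assumptions.

```python
def deadband_filter(readings, threshold):
    """Yield only readings that differ from the last transmitted value by > threshold."""
    last_sent = None
    for t, value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            last_sent = value
            yield t, value

temps = [(0, 21.0), (1, 21.1), (2, 21.05), (3, 22.4), (4, 22.5), (5, 25.0)]
to_cloud = list(deadband_filter(temps, threshold=0.5))
print(to_cloud)
```

Here six readings shrink to three transmissions. An edge model can play the same role with a smarter criterion, for example sending data only when an anomaly score crosses a limit.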

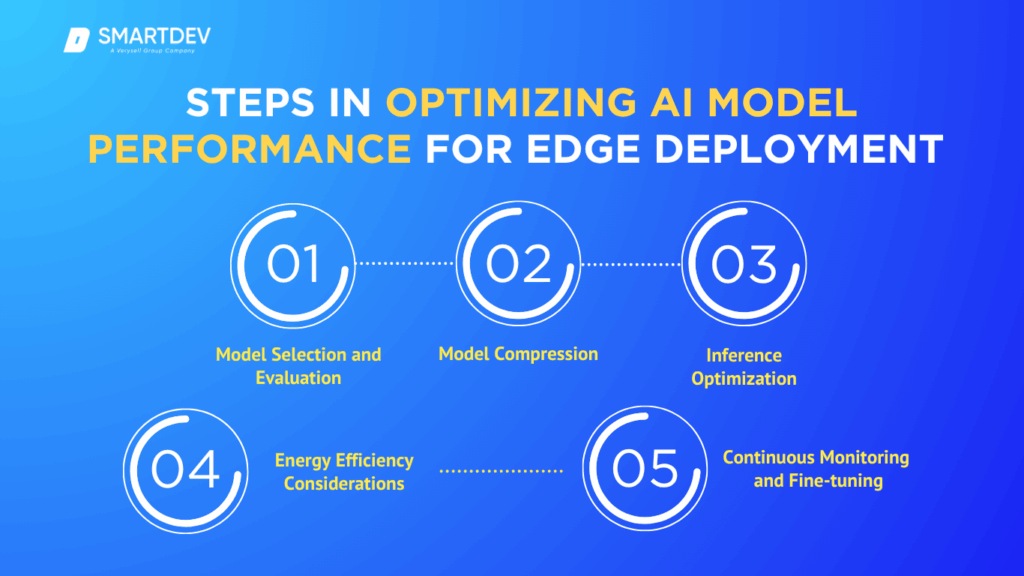

Steps in Optimizing AI Model Performance for Edge Deployment

Optimizing AI model performance for edge deployment requires a structured approach, as the unique constraints of edge environments, such as limited computational resources, energy efficiency needs, and real-time requirements, demand a thoughtful strategy. Below are the key steps involved in optimizing AI models for deployment in IoT and manufacturing environments:

Step 1: Model Selection and Evaluation

The first step in optimizing AI models for edge deployment is selecting the right model. For edge devices, it’s essential to choose models that are simple yet capable of achieving the desired accuracy within the resource constraints.

- Evaluation of Model Complexity: Start by evaluating the complexity of candidate AI models. Simpler models with fewer parameters, such as decision trees, linear regression, or small convolutional neural networks (CNNs), often work better on edge devices than large deep learning models. These simpler models typically consume less memory, require less computational power, and reduce inference time.

- Accuracy vs. Efficiency Trade-offs: Striking a balance between model accuracy and computational efficiency is vital. Overly complex models may provide higher accuracy but will likely require more resources, making them unsuitable for edge deployment. On the other hand, a model that’s too simple may sacrifice performance and lead to suboptimal results in manufacturing or IoT applications.

- Benchmarking: Conduct benchmarks on various models to understand their performance in terms of speed, resource consumption, and accuracy. This helps ensure that the selected model can operate effectively in a real-time, resource-constrained environment.
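A minimal latency harness for the benchmarking step might look like the sketch below. It warms the model up, times repeated calls, and reports the mean, median, and 95th percentile. The tail matters because a real-time deadline is missed by the slow inferences, not the average ones. `tiny_model` is a hypothetical stand-in for a real inference call, and the warm-up and run counts are arbitrary. Meaningful numbers only come from running the harness on the target device.

```python
import statistics
import time

def benchmark(model, sample, warmup=5, runs=50):
    """Measure per-inference latency of a callable, in milliseconds."""
    for _ in range(warmup):  # warm caches and trigger any lazy initialization
        model(sample)
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        model(sample)
        latencies.append((time.perf_counter() - start) * 1000.0)
    latencies.sort()
    p95 = latencies[min(len(latencies) - 1, int(0.95 * len(latencies)))]
    return {
        "mean_ms": statistics.mean(latencies),
        "p50_ms": statistics.median(latencies),
        "p95_ms": p95,
    }

def tiny_model(x):  # hypothetical stand-in for a real inference call
    return sum(v * 0.5 for v in x)

print(benchmark(tiny_model, list(range(1000))))
```

Accuracy and peak memory should be measured alongside latency for each candidate model, so the accuracy-versus-efficiency trade-off is based on numbers rather than guesses.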

Step 2: Model Compression

Once a suitable model is selected, the next step is applying model compression techniques. Model compression is essential for reducing the model’s size and computational demands, allowing it to run on resource-constrained edge devices.

- Quantization: Quantization reduces the bit-width of weights and activations (e.g., from 32-bit to 8-bit). This technique can lead to substantial reductions in both memory and computation requirements while retaining a good level of accuracy.

- Pruning: Pruning removes unnecessary weights or neurons from the model, effectively reducing the number of computations required during inference. This helps reduce the model’s size and speeds up execution without major performance losses.

- Knowledge Distillation: This method involves training a smaller model (the student) to mimic the output of a larger model (the teacher). The student model can be smaller and more efficient, yet still retain much of the performance characteristics of the teacher model.

- Low-Rank Factorization: This technique approximates a large weight matrix by the product of two much smaller, low-rank matrices, reducing both the number of parameters and the number of multiply-adds per inference when the chosen rank is small.
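The savings from low-rank factorization follow from simple arithmetic. For an m × n weight matrix replaced by factors of rank r (truncated SVD gives the best such approximation in the Frobenius norm, per the Eckart-Young theorem), the parameter count and the cost of a matrix-vector product both drop from mn to r(m + n). The layer size and rank below are illustrative assumptions. How much accuracy survives depends on how quickly the matrix's singular values decay, and fine-tuning after factorization is common.

$$
\begin{aligned}
W &\approx U V^\top, \qquad W \in \mathbb{R}^{m \times n},\; U \in \mathbb{R}^{m \times r},\; V \in \mathbb{R}^{n \times r},\; r \ll \min(m, n) \\
\text{parameters:}\quad mn &\;\longrightarrow\; r(m + n) \\
\text{multiply-adds for } Wx:\quad mn &\;\longrightarrow\; r(m + n) \quad \text{(compute } V^\top x \text{, then } U(V^\top x)\text{)} \\
m = n = 1024,\ r = 64:\quad 1{,}048{,}576 &\;\longrightarrow\; 64 \cdot 2048 = 131{,}072 \quad (8\times \text{ fewer})
\end{aligned}
$$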

Step 3: Inference Optimization

After compressing the model, the next step is to optimize inference. This involves making the model run faster and more efficiently on edge devices, ensuring that it meets real-time requirements.

- Edge-Specific Hardware: Use specialized hardware like GPUs, TPUs, FPGAs, or AI chips (e.g., Nvidia Jetson or Google Coral) to accelerate inference. These devices are designed to perform AI computations more efficiently than general-purpose CPUs.

- Framework Optimization: Frameworks such as TensorFlow Lite, OpenVINO, and ONNX Runtime offer built-in optimizations for running AI models on edge devices. These tools provide hardware-accelerated execution and built-in quantization, with pruning available through companion toolkits.

- Model Parallelization: For more complex tasks, consider splitting the model or its inference process into smaller segments that can be computed in parallel across multiple processors, thus speeding up the overall inference time.

Step 4: Energy Efficiency Considerations

Optimizing for energy efficiency is essential when deploying AI at the edge, especially for battery-powered IoT devices and systems that run continuously in manufacturing environments.

- Low-Power Chips: Select energy-efficient processors designed for edge AI tasks, such as ARM-based chips, Intel Movidius, or custom AI accelerators. These chips provide the necessary performance while minimizing energy consumption.

- Dynamic Voltage and Frequency Scaling (DVFS): Implementing DVFS allows edge devices to adjust processing power based on the task load. For example, when the model is idle or performing less-intensive tasks, the system reduces its voltage and frequency to save energy.

- Local Data Processing: Minimize data transmission to external servers by processing data locally on the edge device. This reduces the need for power-hungry network operations and ensures that the device remains energy efficient.

Step 5: Continuous Monitoring and Fine-tuning

After deploying the optimized AI model, continuous monitoring is necessary to ensure that it performs well in real-world scenarios and adapts to changing conditions.

- Real-time Feedback: Use real-time data to monitor model performance and detect potential issues, such as drift in model accuracy or unexpected behavior. This feedback loop helps in adjusting the model or its deployment strategies.

- Over-the-Air Updates: Given the distributed nature of IoT and manufacturing systems, enabling over-the-air updates allows AI models to be improved or re-optimized after deployment without requiring physical access to each device.
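As one illustration of a real-time feedback signal, the sketch below watches a single input statistic, such as a normalized sensor reading. It raises a flag when the rolling mean moves several standard errors away from a baseline recorded during validation. This rolling z-test is a deliberately simple stand-in for the broader drift monitoring the text describes, and the window size, threshold, and synthetic data are all illustrative assumptions. Where ground-truth labels arrive later, tracking accuracy directly is the more reliable signal.

```python
import statistics
from collections import deque

class MeanShiftMonitor:
    """Flag drift when the rolling mean of a signal departs from its baseline."""

    def __init__(self, baseline, window=50, z_threshold=3.0):
        self.mu = statistics.mean(baseline)
        self.sigma = statistics.stdev(baseline)
        self.window = deque(maxlen=window)
        self.z_threshold = z_threshold

    def update(self, value):
        self.window.append(value)
        if len(self.window) < self.window.maxlen:
            return False  # not enough data yet
        # Standard error of the window mean under the baseline distribution.
        se = self.sigma / len(self.window) ** 0.5
        z = abs(statistics.mean(self.window) - self.mu) / se
        return z > self.z_threshold

# Synthetic baseline oscillating around 0.5; the "drifted" input sits at 0.6.
baseline = [0.5 + 0.01 * ((i % 7) - 3) for i in range(200)]
monitor = MeanShiftMonitor(baseline, window=20)
stable = [monitor.update(v) for v in baseline[:40]]
drifted = [monitor.update(0.6) for _ in range(20)]
print(any(stable), drifted[-1])
```

A flag from a monitor like this would typically trigger data collection and retraining, followed by an over-the-air update of the re-optimized model.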

Key Points to Remember When Optimizing AI Models for IoT and Manufacturing

Optimizing AI models for IoT and manufacturing applications is a multifaceted process that requires careful consideration of the unique constraints and demands of edge environments. Here are the key points to keep in mind when optimizing AI models for these contexts:

1. Prioritize Model Simplicity and Efficiency

In resource-constrained environments like IoT and manufacturing, it’s crucial to prioritize simplicity and efficiency in AI models. Complex deep learning models often require significant processing power, memory, and storage, which may not be available on edge devices. Instead, focus on lightweight models that can perform well within these constraints.

- Smaller Models: Use simpler architectures, such as decision trees, support vector machines (SVM), or small convolutional neural networks (CNNs), which typically require less memory and processing power.

- Avoid Overfitting: Overly complex models tend to overfit small datasets, memorizing noise instead of learning general patterns. Favor compact architectures and regularization so the model generalizes well with fewer parameters.

2. Utilize Model Compression Techniques

Compression techniques, such as pruning, quantization, and knowledge distillation, are essential for reducing the size and complexity of models while retaining performance. These techniques help ensure that models run efficiently on edge devices.

- Pruning: Reduces the number of parameters in the model by removing less important weights, which reduces memory and computation requirements.

- Quantization: Lowers the precision of weights (from 32-bit floating-point to 8-bit integers, for example), which reduces memory and processing demands.

- Knowledge Distillation: Transfers the knowledge from a larger, more complex model (the teacher) to a smaller, simpler model (the student), preserving most of the accuracy while improving efficiency.

3. Leverage Edge-Specific Hardware

Specialized hardware accelerators like GPUs, TPUs, and FPGAs can greatly enhance the performance of AI models at the edge. When selecting hardware for edge deployment, ensure that it is compatible with the AI model’s requirements and can handle real-time inference efficiently.

- Low-Power Chips: Use energy-efficient processors such as ARM-based chips or dedicated AI accelerators (e.g., Nvidia Jetson or Google Coral) to balance performance and power consumption.

- Custom Accelerators: For specialized applications, consider designing custom chips or utilizing field-programmable gate arrays (FPGAs) to accelerate specific AI operations, such as image processing or sensor data analysis.

4. Focus on Real-Time Processing and Low Latency

For applications in IoT and manufacturing, real-time processing is essential for preventing delays and ensuring the timely execution of tasks. Optimizing AI models for low latency is crucial for applications like predictive maintenance, quality control, and automated systems.

- Model Optimization: Simplify or compress models to ensure faster inference times. Use techniques like pruning and quantization to reduce processing time with minimal loss of accuracy.

- Edge Deployment Considerations: Ensure that AI models can run locally on edge devices without having to communicate extensively with cloud servers, reducing latency and improving responsiveness.

5. Plan for Scalability and Remote Management

IoT and manufacturing systems often involve large numbers of edge devices spread across different locations. Scaling AI deployments and managing numerous devices remotely requires robust systems and protocols.

- Over-the-Air Updates: Implement systems for remotely updating models and firmware on edge devices to ensure they stay current and secure without disrupting operations.

- Federated Learning: For distributed systems, federated learning allows edge devices to collaboratively train models while keeping data local, helping to preserve privacy and reduce bandwidth usage.
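The most widely used aggregation rule in federated learning is federated averaging (FedAvg). Each device trains locally and reports only its updated weights and sample count. The server then averages the weights, giving more influence to devices that trained on more data. The sketch below shows only that server-side step on flat weight vectors. Local training, secure aggregation, and transport are out of scope, and the function name is illustrative.

```python
def federated_average(client_updates):
    """FedAvg aggregation over (weights, num_samples) pairs.

    Only model weights leave each device; raw sensor data stays local.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total  # weight by share of samples
    return global_weights

updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
print(federated_average(updates))
```

Because only weight vectors are exchanged, the bandwidth cost scales with model size rather than with the volume of raw sensor data. That is one more reason compressed models pair well with federated training.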

6. Ensure Energy Efficiency

Energy efficiency is crucial in IoT and manufacturing settings where devices may run on limited power sources, such as battery-powered sensors or robots. Optimizing AI models to reduce energy consumption ensures that edge devices can operate for extended periods without frequent recharging or replacement.

- Low Power Inference: Optimize AI models for low-power inference by reducing their computational complexity through techniques like quantization and pruning.

- Power-Aware Scheduling: Implement dynamic voltage and frequency scaling (DVFS) to adjust the processing power of edge devices based on computational needs, helping to save energy during idle or low-activity periods.

7. Prioritize Security and Privacy

Security is a critical consideration when deploying AI at the edge, especially in industrial and IoT environments where sensitive data is processed locally. It’s essential to implement strong security measures to protect both the models and the data they handle.

- Data Encryption: Encrypt data both at rest and in transit to prevent unauthorized access and ensure data integrity.

- Secure Authentication: Use secure authentication methods to verify the identity of devices and users interacting with edge devices.

- Adversarial Resistance: Develop AI models that are resilient to adversarial attacks, which can manipulate input data to deceive the AI system.
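A concrete building block for protecting deployed models is an integrity check before loading: the device refuses any model file whose authentication tag does not verify. The sketch below uses HMAC-SHA256 from Python's standard library with a shared per-device key, which is a simplifying assumption. Many production systems instead use asymmetric signatures (for example Ed25519 or ECDSA), so devices hold only a public key. The key and model bytes shown are placeholders.

```python
import hashlib
import hmac

def sign_model(model_bytes, key):
    """Compute an HMAC-SHA256 tag over the serialized model."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()

def verify_model(model_bytes, signature_hex, key):
    """Return True only if the tag matches; compare_digest avoids timing leaks."""
    expected = sign_model(model_bytes, key)
    return hmac.compare_digest(expected, signature_hex)

key = b"hypothetical-per-device-secret"   # placeholder; provision securely in practice
model = b"\x00\x01placeholder-model-weights"
sig = sign_model(model, key)
print(verify_model(model, sig, key))
```

The same check fits naturally into over-the-air updates. The device downloads the model and its tag, verifies them, and only then swaps the new model in, keeping the previous version if verification fails.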

Real-World Use Cases of AI Optimization in IoT and Manufacturing

Optimizing AI models for edge deployment is not just a theoretical exercise—it’s already being applied in various industries to drive significant improvements in efficiency, cost savings, and real-time decision-making. Below are some real-world use cases where AI optimization for edge computing is making a tangible impact in IoT and manufacturing environments.

1. Predictive Maintenance in Manufacturing

Predictive maintenance is one of the most common applications of AI in manufacturing. By deploying AI models at the edge, factories can continuously monitor the health of machines and equipment in real time. Traditional maintenance approaches often lead to costly downtime due to unplanned repairs or the need for routine check-ups, which may not always align with actual machine wear and tear.

- How It Works: Sensors on machines continuously collect data on factors such as temperature, vibration, and pressure. AI models process this data locally at the edge, detecting patterns that indicate wear and potential failures. When the AI model identifies signs of an impending failure, it triggers maintenance alerts or automatically schedules repairs.

- Optimization: For edge deployment, the AI models need to be optimized to run efficiently on devices with limited processing power. Techniques like model pruning and quantization are used to reduce the size and computational requirements of the models, ensuring they can operate on industrial IoT devices without overburdening their resources. These optimized models provide real-time predictions while minimizing latency and power consumption, ensuring that maintenance can be scheduled before costly breakdowns occur.
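The shape of the on-device loop described above can be sketched with a fixed rule standing in for the trained model. The loop windows the vibration signal, computes a feature per window (here RMS), and raises an alert only when the feature stays high for several consecutive windows, which filters out one-off spikes. In a real deployment the threshold test would be replaced by a compressed model's failure score. The window size, limit, and synthetic signal are illustrative assumptions.

```python
import math

def rms(window):
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))

def maintenance_alerts(samples, window_size, rms_limit, consecutive=3):
    """Return start indices of windows where RMS has exceeded `rms_limit`
    for at least `consecutive` windows in a row."""
    alerts, streak = [], 0
    for start in range(0, len(samples) - window_size + 1, window_size):
        if rms(samples[start:start + window_size]) > rms_limit:
            streak += 1
            if streak >= consecutive:
                alerts.append(start)
        else:
            streak = 0
    return alerts

# Synthetic signal: a healthy machine, then rising vibration from a worn part.
samples = [0.1, -0.1] * 20 + [0.9, -0.9] * 20
alerts = maintenance_alerts(samples, window_size=8, rms_limit=0.5)
print(alerts)
```

Only the alert (and perhaps a short summary of the offending windows) needs to leave the device, which keeps both latency and bandwidth low.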

2. Quality Control and Visual Inspection

In manufacturing, product quality is paramount. AI-powered visual inspection systems at the edge can automate the process of detecting defects in products as they move along the production line. By running AI models locally, manufacturers can achieve faster processing times and make real-time decisions without sending data to the cloud.

- How It Works: Cameras and sensors mounted on production lines capture images of products as they are manufactured. AI models at the edge analyze these images to detect visual defects, such as cracks, scratches, or color inconsistencies. The system then either removes faulty products from the line or flags them for further inspection.

- Optimization: AI models used for visual inspection are typically computationally intensive, but model compression techniques such as pruning and quantization can make them efficient enough for real-time, on-device inference. Specialized hardware accelerators (e.g., edge GPUs or TPUs) can further improve the performance of the system, ensuring that inspection is both fast and accurate, without compromising quality.

3. Smart Warehousing and Inventory Management

In logistics and warehousing, AI-powered systems are transforming how inventory is managed. Optimized AI models deployed at the edge help improve efficiency by tracking goods, monitoring stock levels, and automating stock movement decisions in real time.

- How It Works: IoT sensors and RFID tags track the location and status of products within a warehouse. AI models deployed on edge devices analyze this data to make real-time decisions about inventory levels, movement, and ordering. For example, AI models can predict when stock levels will run low and automatically reorder items or adjust warehouse operations based on demand.

- Optimization: To handle the massive data flow generated in a warehouse, AI models need to be optimized for edge deployment to minimize latency and reduce bandwidth usage. By processing data locally, edge devices can update inventory systems without the need for constant cloud communication. Additionally, energy-efficient processors and low-power chips are used to ensure long-term operation in warehouses without frequent maintenance.
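The "predict low stock and reorder" step often reduces to a classic reorder-point rule fed by a demand forecast. The rule says to reorder when on-hand stock falls to the demand expected during the supplier's lead time plus a safety buffer. The sketch below substitutes this textbook formula for the decision logic. The forecast value would come from an edge model, and the numbers shown are hypothetical.

```python
def reorder_point(daily_demand, lead_time_days, safety_stock):
    """Expected demand during the replenishment lead time, plus a safety buffer."""
    return daily_demand * lead_time_days + safety_stock

def should_reorder(on_hand, forecast_daily_demand, lead_time_days, safety_stock):
    return on_hand <= reorder_point(forecast_daily_demand, lead_time_days, safety_stock)

# Hypothetical forecast of 40 units/day from an edge model, 3-day lead time, 30-unit buffer.
print(should_reorder(on_hand=150, forecast_daily_demand=40, lead_time_days=3, safety_stock=30))
```

Because the rule itself is trivial to evaluate, the edge device's compute budget goes to the forecast, which is where model optimization pays off.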

4. Autonomous Vehicles and Robotics in Manufacturing

In modern manufacturing plants, autonomous vehicles and robots equipped with AI are playing an increasingly important role in improving productivity and reducing labor costs. These robots rely on real-time data to make decisions about their movement, task allocation, and interaction with the environment.

- How It Works: Robots and autonomous vehicles in a manufacturing facility use AI models to navigate the factory floor, perform assembly tasks, or transport materials. They rely on sensors, cameras, and LIDAR to detect obstacles and navigate their environment. AI models process this data locally at the edge to make real-time decisions about pathfinding, collision avoidance, and task prioritization.

- Optimization: Autonomous robots must operate with minimal latency to avoid collisions and ensure smooth operation. AI models deployed on edge devices need to be optimized to handle complex tasks with real-time feedback. This requires using lightweight models that can run efficiently on resource-constrained robots, coupled with specialized hardware like edge GPUs for real-time decision-making. Through optimization techniques like knowledge distillation and quantization, the AI models can run efficiently on smaller, low-power devices while maintaining high accuracy.

5. Energy Optimization in Smart Buildings

AI is also being used to optimize energy usage in smart buildings by monitoring and controlling energy consumption in real time. With the deployment of IoT sensors and AI at the edge, buildings can adjust lighting, heating, and cooling systems based on occupancy and environmental conditions, reducing energy waste and lowering costs.

- How It Works: Sensors placed throughout a building monitor various parameters like temperature, humidity, light levels, and occupancy. AI models deployed at the edge process this data to optimize heating, ventilation, and air conditioning (HVAC) systems in real time, ensuring that energy is used efficiently.

- Optimization: These AI models need to operate on edge devices with limited processing power, making it crucial to optimize for efficiency. By using techniques like model quantization and low-power hardware, the AI models can make real-time adjustments to the building’s systems without draining energy or requiring constant cloud-based computation. This helps buildings achieve significant energy savings without compromising comfort.

Conclusion

Optimizing AI models for edge deployment in IoT and manufacturing applications is not just a technical necessity, but a key driver of efficiency and innovation in today’s fast-paced, data-driven world. As edge devices become more pervasive in industries like manufacturing, logistics, and smart buildings, the need for AI models that can perform effectively in resource-constrained environments is more critical than ever. Through techniques like model compression, hardware acceleration, and real-time inference optimization, businesses can deploy AI systems that are not only accurate but also efficient and scalable, empowering edge devices to make intelligent decisions on-site and in real time.

The challenges of edge deployment, such as limited computing resources, latency requirements, and energy constraints, demand a careful balance of optimization strategies. However, by understanding these challenges and leveraging the right tools and techniques, companies can unlock the full potential of edge AI, improving productivity, reducing operational costs, and driving better outcomes across industries. As the technology behind edge AI continues to evolve, we can expect even more innovative use cases to emerge, transforming industries by making them smarter, faster, and more autonomous. In the end, optimizing AI for the edge is not just about overcoming technical hurdles. It’s about seizing the opportunity to redefine what’s possible in IoT and manufacturing environments.