The global generative AI market exploded from $11 billion in 2020 to an estimated $44.89-$71.36 billion in 2024, and many organizations risk costly mistakes by choosing an approach without proper evaluation. You’re facing a critical decision that could determine whether your AI investment delivers exceptional ROI or becomes an expensive lesson in misallocated resources.

The problem? Most businesses jump into fine-tuning because it sounds more sophisticated, or they stick with basic prompting because it seems simpler. Both approaches often lead to suboptimal results, wasted budgets, and frustrated teams.

This guide provides a practical framework for choosing the right approach based on your specific performance requirements, budget constraints, and technical capabilities. You’ll discover when each method delivers optimal value and how leading organizations combine both strategies for maximum impact.

Performance vs. Cost Trade-offs That Matter

Prompt engineering delivers 70-85% accuracy for most business tasks at $0-500 per month, while fine-tuning achieves 95%+ accuracy for specialized domains with a $5,000-50,000 upfront investment. Your choice depends on performance requirements, timeline, and whether you need general business automation or domain-specific expertise.

Fig. 1. Prompt Engineering vs. Fine-Tuning across cost, accuracy, timeline, and complexity metrics

What’s the Real Difference Between Prompt Engineering and Fine-Tuning?

Prompt engineering crafts specific instructions to guide existing AI models, while fine-tuning retrains model parameters on your domain-specific data. Think of prompting as giving detailed directions to a highly skilled generalist, while fine-tuning is like hiring and training a specialist for your exact needs.

Prompt engineering involves creating optimized instructions that work with pre-trained models like GPT-4 or Claude. You’re essentially becoming fluent in “AI speak”—learning how to phrase requests, provide examples, and structure inputs to get consistent, high-quality outputs. This approach requires no model modification and can be implemented immediately.
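To make "provide examples and structure inputs" concrete, here is a minimal Python sketch of a few-shot prompt builder. The helper name `build_few_shot_prompt`, the ticket labels, and the sample messages are hypothetical; the returned string is plain text that you would send to whichever model API you use.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a few-shot prompt: instruction, worked examples, then the new input.

    Hypothetical helper for illustration; it is not tied to any vendor API.
    """
    parts = [instruction.strip(), ""]
    for i, (example_input, example_output) in enumerate(examples, start=1):
        # Each example shows the model the exact input/output format we expect.
        parts.append(f"Example {i}")
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # The final input is left open so the model completes the "Output:" line.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify each customer message as BILLING, SHIPPING, or OTHER. Reply with the label only.",
    [
        ("I was charged twice this month.", "BILLING"),
        ("Where is my package?", "SHIPPING"),
    ],
    "My order arrived damaged.",
)
print(prompt)
```

Changing the instruction or swapping in different examples changes the model’s behavior immediately, which is why prompt changes need no retraining.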

Fine-tuning takes a pre-trained model and continues training it on your specific dataset. Training adjusts the model’s internal weights so that it becomes an expert in your domain. This process requires machine learning expertise, significant computational resources, and careful data preparation.
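Careful data preparation usually means converting raw input/output pairs into the training format your provider expects. The sketch below assumes an OpenAI-style chat fine-tuning format, where each JSON Lines record holds a `messages` list of `system`, `user`, and `assistant` turns; other platforms use different schemas, so confirm against your provider’s documentation. The system prompt, sample documents, and helper names are hypothetical.

```python
import json
from typing import Iterable, List, Tuple

# Hypothetical system prompt for a document-extraction use case.
SYSTEM_PROMPT = "You extract key fields from loan documents as JSON."


def to_chat_records(pairs: Iterable[Tuple[str, str]], system_prompt: str) -> List[dict]:
    """Convert (input, ideal_output) pairs into chat-style training records.

    Drops pairs with an empty side and exact duplicates, two basic cleaning steps.
    """
    seen = set()
    records = []
    for user_text, ideal_output in pairs:
        user_text, ideal_output = user_text.strip(), ideal_output.strip()
        if not user_text or not ideal_output or (user_text, ideal_output) in seen:
            continue
        seen.add((user_text, ideal_output))
        records.append({
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": ideal_output},
            ]
        })
    return records


def write_jsonl(records: List[dict], path: str) -> None:
    """Write one JSON object per line (JSON Lines)."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


pairs = [
    ("Borrower: Jane Doe. Amount: $250,000.", '{"borrower": "Jane Doe", "amount": 250000}'),
    ("Borrower: Jane Doe. Amount: $250,000.", '{"borrower": "Jane Doe", "amount": 250000}'),  # duplicate
    ("", "{}"),  # empty input, dropped
]
records = to_chat_records(pairs, SYSTEM_PROMPT)
print(len(records))
```

Calling `write_jsonl(records, "train.jsonl")` would produce the upload file. Real projects typically add further checks (label consistency, length limits, held-out evaluation split) before training.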

The cost difference is dramatic. Prompt engineering typically costs $0-500 monthly using existing API services, while fine-tuning projects require a $5,000-50,000 initial investment plus ongoing maintenance. But here’s what matters more than cost: performance ceiling and use case fit.

Dr. Walid Amamou, CEO of UbiAI and former Intel researcher with a Ph.D. in Materials Science, explains in his 2025 Toronto Machine Learning Society talk: “While general-purpose Large Language Models have demonstrated impressive capabilities, their limitations become apparent in high-accuracy, domain-specific applications… Fine-tuning LLMs is becoming essential for enterprises seeking to adapt general models to specific tasks or industries, offering stronger privacy safeguards and strategic advantage for mission-critical applications.” The rapid experimentation enabled by prompt engineering democratizes AI access, while fine-tuning remains indispensable for specialized domains requiring precision and reliability.

Why Prompt Engineering Wins for Rapid Business Deployment

Choose prompt engineering when you need rapid deployment, have dynamic requirements, or work with general business tasks. Most organizations actually overestimate their need for custom models.

Prompt engineering demonstrates strong performance in business applications such as customer service chatbots, content generation, email automation, and data analysis, with enterprise GenAI tools showing 2.3x accuracy improvements and Anthropic benchmarks reporting F1 scores of at least 0.85. (F1 balances precision and recall, so it is not the same measure as accuracy.) That level of performance solves real business problems for most use cases, especially when you factor in the speed and flexibility advantages.
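Because F1 and accuracy can diverge sharply, especially when the category you care about is rare, it helps to compute both. The short Python sketch below uses made-up labels for an imbalanced yes/no task (10 positives out of 100) to show two models with identical 90% accuracy but very different F1 scores.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical labels: 10 positives, 90 negatives.
y_true = [1] * 10 + [0] * 90
all_negative = [0] * 100                            # never flags anything
partial = [1] * 5 + [0] * 5 + [1] * 5 + [0] * 85    # 5 hits, 5 misses, 5 false alarms

for name, preds in [("all_negative", all_negative), ("partial", partial)]:
    print(name, round(accuracy(y_true, preds), 2), round(f1_score(y_true, preds), 2))
```

Both models are 90% accurate, yet one never finds a single positive case (F1 of 0.0). When comparing vendor figures, check which metric is being quoted.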

Industry research confirms that over 70% of enterprise GenAI implementations use prompt engineering rather than fine-tuning, validating its effectiveness for general business applications where rapid iteration and cost efficiency outweigh the need for domain-specific model retraining.

Consider this real example: A retail company used optimized prompts with GPT-4 to automate customer support, achieving a 38% reduction in average response time and 23% drop in support costs within three months. No custom training required.

Your prompt engineering sweet spot includes:

- High-impact, low-complexity scenarios where you need general business automation rather than specialized expertise

- Customer inquiries, content summarization, basic data extraction, and workflow automation

- Budget-conscious implementations with minimal upfront commitment

Budget and resource constraints make prompt engineering the smart choice for most SMEs and startups. Most leading SaaS generative AI tools cost $20 monthly for individual users, with team plans ranging from $25-30 per user monthly and premium tiers reaching $200 for advanced features, making them accessible for proof-of-concept validation with minimal upfront commitment. Platforms like ChatGPT, Claude, Perplexity, and Google Gemini all operate within this pricing structure, enabling SMEs to experiment with different AI capabilities before committing to custom development.

Dynamic requirements favor prompting because you can adjust AI behavior through simple prompt modifications rather than expensive model retraining. When your business needs change seasonally or you’re still figuring out optimal AI workflows, this flexibility becomes invaluable.

Nguyen Le, COO at SmartDev, notes: “For rapid deployment and dynamic business requirements, prompt engineering outpaces fine-tuning in both time and budget, especially for SMEs validating use cases.”

The reality check: 90% of major enterprises, including roughly 92% of Fortune 500 companies, use OpenAI technology as a starting point before making larger investments. If you’re unsure about your requirements or timeline, prompting lets you learn fast and iterate cheaply.

When Fine-Tuning Becomes Your Only Option for Mission-Critical Performance

Fine-tuning becomes essential when accuracy above 95% is non-negotiable, when you’re working in highly specialized domains, or when scale economics favor dedicated models.

The accuracy gap between approaches is real and significant. Fine-tuned models can reach 95% task-specific accuracy on specialized tasks: documented case studies show fine-tuned Llama models achieving 95% accuracy on classification tasks compared to GPT-4’s 87.2%, a gain of roughly 8 percentage points. In regulated industries or mission-critical applications like compliance monitoring, medical diagnostics, and financial analysis, that performance difference can justify the investment required for custom fine-tuning and domain-specific optimization.
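An 8-point gain can sound modest, so it helps to express it as the share of errors eliminated as well. The quick Python calculation below uses the 87.2% and 95% figures quoted above; the function names and the 10,000-document volume are illustrative.

```python
def points_gain(baseline_acc: float, improved_acc: float) -> float:
    """Absolute improvement in percentage points."""
    return (improved_acc - baseline_acc) * 100


def relative_error_reduction(baseline_acc: float, improved_acc: float) -> float:
    """Fraction of the baseline's errors that the improved model eliminates."""
    baseline_err = 1 - baseline_acc
    improved_err = 1 - improved_acc
    return (baseline_err - improved_err) / baseline_err


def errors_per(volume: int, acc: float) -> int:
    """Expected number of wrong outputs in a batch of `volume` items."""
    return round(volume * (1 - acc))


gpt4_acc, fine_tuned_acc = 0.872, 0.95
print(f"{points_gain(gpt4_acc, fine_tuned_acc):.1f} points")
print(f"{relative_error_reduction(gpt4_acc, fine_tuned_acc):.1%} fewer errors")
print(errors_per(10_000, gpt4_acc), "vs", errors_per(10_000, fine_tuned_acc), "errors per 10,000 documents")
```

Framed this way, the fine-tuned model removes roughly 61% of the errors, which matters more in compliance settings than the headline 8 points suggests.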

Consider this banking example: A global bank implemented a fine-tuned model for automated loan document analysis, reducing processing time by 68% while achieving 99.1% extraction accuracy. Their prompt-only prototypes maxed out around 80% accuracy—insufficient for regulatory compliance.

Fine-tuning becomes necessary when:

- Performance-critical applications demand AI that significantly exceeds general-purpose capabilities

- Specialized domain requirements involve highly technical language, proprietary processes, or context that cannot be adequately conveyed through prompts alone

- Scale and efficiency considerations make high-volume processing economically unfavorable with per-token API costs
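The scale argument comes down to a break-even volume: above some number of requests per month, a fine-tuned model’s fixed costs are outweighed by cheaper per-request inference. The Python sketch below shows the arithmetic; every price and volume in it is a hypothetical placeholder, not a quote from any provider, so substitute your own figures.

```python
from typing import Optional


def api_cost_per_request(tokens_per_request: int, price_per_1k_tokens: float) -> float:
    """Per-request cost for a hosted model billed per token."""
    return tokens_per_request / 1000 * price_per_1k_tokens


def break_even_requests_per_month(
    upfront: float,
    amortization_months: int,
    hosting_per_month: float,
    api_cost: float,
    fine_tuned_cost: float,
) -> Optional[float]:
    """Monthly request volume above which the fine-tuned model is cheaper.

    Fixed monthly cost = amortized upfront spend + hosting. Each request saves
    (api_cost - fine_tuned_cost), so break-even = fixed cost / per-request saving.
    """
    fixed_per_month = upfront / amortization_months + hosting_per_month
    saving = api_cost - fine_tuned_cost
    if saving <= 0:
        return None  # the fine-tuned model never becomes cheaper per request
    return fixed_per_month / saving


# Hypothetical inputs: $24,000 upfront amortized over 24 months, $1,000/month hosting,
# 2,000 tokens per request at $0.01 per 1K tokens via the API, $0.005 per request self-hosted.
api = api_cost_per_request(2000, 0.01)
volume = break_even_requests_per_month(24_000, 24, 1_000, api, 0.005)
print(f"Break-even at about {volume:,.0f} requests per month")
```

Below the break-even volume, per-token API pricing remains the cheaper option even if the fine-tuned model is more accurate.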

Regulated industry requirements make fine-tuning necessary because compliance demands consistent, explainable behavior. According to Gartner, 87% of senior business leaders say digitalization is a company priority, yet in healthcare, finance, and legal sectors, accuracy requirements often exceed what prompt engineering can reliably deliver. Where prompt engineering achieves 70-85% accuracy, regulated applications demand the 90-95%+ precision that fine-tuned models can provide, along with the explainable, auditable decision-making processes required for regulatory compliance.

Alistair Copeland, CEO of SmartDev, emphasizes: “For domains where precision and compliance are non-negotiable, such as healthcare or finance, fine-tuning is the only viable option for AI deployment.”

The cost reality: Fine-tuning projects demand $5,000-50,000 upfront for data annotation, model retraining, and infrastructure, plus annual maintenance costs typically 15-25% of initial investment. But when accuracy requirements justify this investment, the ROI can be transformational.

Ready to choose the right approach for your generative AI use case?

Compare Prompt Engineering and Fine-Tuning across cost, accuracy, and implementation complexity to identify which delivers optimal performance for your business goals.

Learn how SmartDev helps enterprises evaluate, test, and deploy tailored AI strategies—balancing performance precision with scalability and cost efficiency.

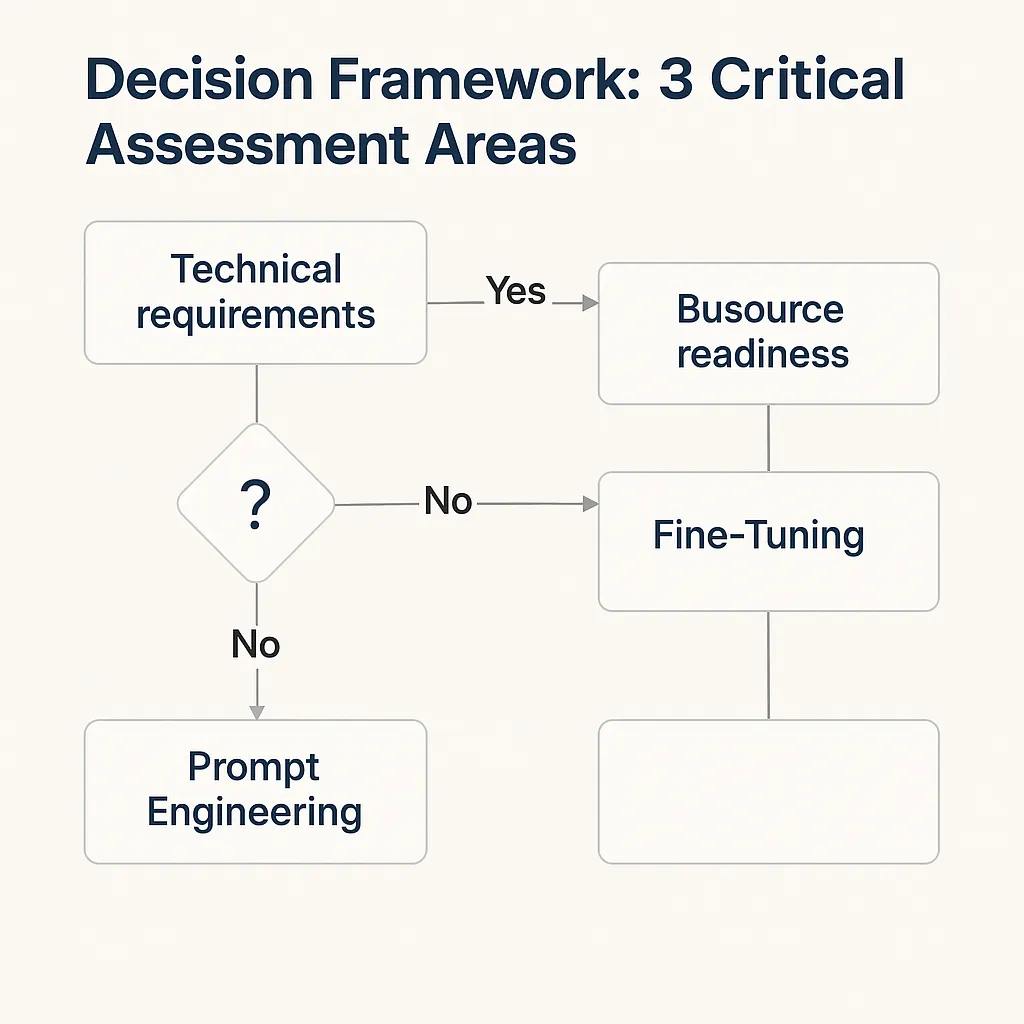

Decision Framework: 3 Critical Assessment Areas

Start with three critical assessment areas: technical requirements, business impact, and organizational readiness. Most organizations skip systematic evaluation and choose based on assumptions rather than data.

Technical Assessment Questions:

- Does your use case require accuracy above 90% on domain-specific tasks?

- Can you achieve acceptable results using the latest GPT-4 or Claude models with optimized prompts?

- Do you have access to 1,000+ high-quality training examples specific to your use case?

If you answer “no” to the first question, prompt engineering likely suffices. If you answer “yes” to the first and third questions but “no” to the second, fine-tuning makes sense.
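The technical screening rule can be written as a small function, which is handy if you are auditing many use cases at once. This is a sketch: the class and field names are hypothetical, and the fallback for "high accuracy needed, prompts fall short, but too little data" is an assumption (the hybrid strategy of prompting while collecting data), not part of the three-question rule itself.

```python
from dataclasses import dataclass


@dataclass
class TechnicalAssessment:
    needs_over_90pct_domain_accuracy: bool   # question 1
    prompts_reach_acceptable_results: bool   # question 2
    has_1000_plus_quality_examples: bool     # question 3


def recommend(a: TechnicalAssessment) -> str:
    if not a.needs_over_90pct_domain_accuracy:
        return "prompt engineering"
    if a.prompts_reach_acceptable_results:
        # Optimized prompts already meet the bar, so retraining adds cost without need.
        return "prompt engineering"
    if a.has_1000_plus_quality_examples:
        return "fine-tuning"
    # Assumption: not covered by the three questions; start with prompts and collect data.
    return "prompt engineering while collecting training data"


print(recommend(TechnicalAssessment(True, False, True)))
print(recommend(TechnicalAssessment(False, True, False)))
```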

Business Impact Analysis:

- Will AI performance directly impact revenue, customer satisfaction, or operational efficiency by more than 20%?

- Does your timeline allow 2-6 months for fine-tuning development versus 1-4 weeks for prompt engineering?

- Can you justify 10-100x higher initial costs for potentially 15-30% performance improvements?

Only 30% of digital transformation initiatives succeed, often due to inadequate upfront assessment. The stakes are high enough to warrant careful evaluation.

Resource and Risk Evaluation:

- Does your team include machine learning engineers capable of managing fine-tuning projects?

- Can your organization handle ongoing model maintenance, monitoring, and periodic retraining?

- Are you prepared for potential delays, technical challenges, and iterative development cycles?

Fig. 2. Recommended approach

Luan Nguyen, General Director at SmartDev, advises: “Start by evaluating whether your use case genuinely requires 95%+ accuracy or if 80% solves the business pain—overengineering is a frequent pitfall.”

Prompt engineering deployments average 1-4 weeks, while fine-tuning projects take 2-6 months from start to production. Consider whether your business can wait for the better solution or needs the faster one.

Hybrid Approaches Deliver Early Value While Building Toward Specialized Performance

You don’t have to commit to one approach up front. 54% of enterprises use hybrid approaches, starting with prompt engineering and progressing to fine-tuning as usage matures.

Progressive implementation reduces risk and accelerates ROI. Start with prompt engineering to establish baseline performance and validate use case viability. Collect real-world usage data and performance metrics to inform fine-tuning decisions. Migrate to fine-tuning only when clear ROI justification exists based on actual business impact.

The VeryPay mobile money platform rollout illustrates this approach. SmartDev began with prompt-based chatbots for user support, then migrated high-volume tasks to a fine-tuned model; the rollout doubled user adoption metrics and won the 2024 Sao Khue Innovation Award.

Combining both techniques often delivers optimal results:

- Use fine-tuned models for core domain understanding combined with dynamic prompts for specific instructions

- Implement retrieval-augmented generation (RAG) systems that augment prompts with retrieved domain-specific context

- Deploy fine-tuned models for high-frequency tasks while maintaining prompt-based approaches for edge cases
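The third pattern, sending high-frequency tasks to a fine-tuned model and everything else to a prompt-based model, is essentially a router with a fallback. The Python sketch below uses stand-in functions in place of real model calls; the task names and the convention that a model returns `None` on failure are hypothetical.

```python
from typing import Callable, Optional, Set

FineTunedFn = Callable[[str], Optional[str]]  # may return None on failure or low confidence
PromptedFn = Callable[[str], str]


def make_router(
    fine_tuned: FineTunedFn,
    prompted: PromptedFn,
    high_volume_tasks: Set[str],
) -> Callable[[str, str], str]:
    def route(task_type: str, text: str) -> str:
        if task_type in high_volume_tasks:
            result = fine_tuned(text)
            if result is not None:
                return result
        # Edge cases, unknown task types, and fine-tuned failures use the prompt-based path.
        return prompted(text)
    return route


# Stand-ins for real model calls (hypothetical).
def fake_fine_tuned(text: str) -> Optional[str]:
    return None if "???" in text else f"[fine-tuned] {text}"


def fake_prompted(text: str) -> str:
    return f"[prompted] {text}"


route = make_router(fake_fine_tuned, fake_prompted, {"balance_inquiry"})
print(route("balance_inquiry", "What is my balance?"))
print(route("dispute", "I want to dispute a charge."))
```

Keeping the prompt-based path live also gives you a ready backup if the fine-tuned model has to be rolled back.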

Organizations using RAG systems saw contextual response accuracy increase by 15-25% over prompt-only configurations. The hybrid approach lets you optimize for both performance and flexibility.
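At its core, RAG retrieves the most relevant passages for a question and places them in the prompt. The minimal Python sketch below uses simple word overlap for retrieval so it stays self-contained; production systems typically use embedding-based vector search instead. The documents, helper names, and prompt wording are hypothetical.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase word set; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents sharing the most words with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), doc) for doc in documents]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    context = retrieve(query, documents, k)
    context_block = "\n".join(f"- {c}" for c in context) or "- (no relevant documents found)"
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}\nAnswer:"
    )


docs = [
    "Refunds are processed within 5 business days.",
    "International transfers incur a 1% fee.",
    "Our office is closed on public holidays.",
]
print(build_rag_prompt("How long do refunds take to process?", docs))
```

Because the domain knowledge lives in the document store rather than in model weights, updating an answer means editing a document, not retraining.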

Risk mitigation strategies include:

- Maintain prompt-based backup systems during fine-tuning development

- Implement A/B testing frameworks to quantitatively compare approaches

- Establish clear success metrics and rollback procedures before committing to fine-tuning investments
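An A/B comparison between a prompt-based baseline and a fine-tuned candidate can be scored with a standard two-proportion z-test on task accuracy. The Python sketch below is one way to encode a promote-or-keep rule; the counts, the 0.05 significance level, and the minimum-accuracy gate are illustrative assumptions that you would set as part of your success metrics.

```python
import math
from typing import Tuple


def two_proportion_z_test(correct_a: int, n_a: int, correct_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in accuracy, B minus A."""
    p_a, p_b = correct_a / n_a, correct_b / n_b
    pooled = (correct_a + correct_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both arms all-correct or all-wrong: no evidence of a difference
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - normal CDF(|z|))
    return z, p_value


def ab_decision(correct_a: int, n_a: int, correct_b: int, n_b: int,
                alpha: float = 0.05, min_accuracy: float = 0.0) -> str:
    z, p_value = two_proportion_z_test(correct_a, n_a, correct_b, n_b)
    if z > 0 and p_value < alpha and correct_b / n_b >= min_accuracy:
        return "promote candidate"
    return "keep baseline"  # i.e., roll back or stay on the prompt-based system


# Hypothetical results on 1,000 labeled requests per arm.
print(ab_decision(820, 1000, 900, 1000, min_accuracy=0.85))
print(ab_decision(820, 1000, 830, 1000))
```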

Nguyen Le explains: “Hybrid approaches deliver early business value, allowing teams to iterate quickly and justify deeper AI investments as requirements become clear.”

According to industry experts, most hybrid deployments transition from prompt-only to fine-tuned models within 6-12 months as ROI is demonstrated and sufficient data becomes available for effective training.

Next Steps: From Assessment to Implementation

Start with an immediate audit of your current AI use cases to identify the optimal approach for your specific requirements. The cost of choosing wrong far exceeds the investment in proper evaluation.

Immediate action items include:

- Audit your AI use cases against the technical and business criteria outlined above

- Calculate total cost of ownership including development, deployment, and maintenance for both approaches
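A first-pass total cost of ownership comparison can reuse the ranges quoted earlier: $0-500 per month for prompt engineering, and $5,000-50,000 upfront plus 15-25% annual maintenance for fine-tuning. The Python sketch below is deliberately simplified: it assumes maintenance starts in year one, treats the fine-tuned model’s running cost as a hypothetical flat monthly figure, and ignores staff time, which you should add for a real estimate.

```python
def prompt_tco(monthly_cost: float, years: int) -> float:
    """Prompt engineering: recurring subscription/API spend only."""
    return monthly_cost * 12 * years


def fine_tune_tco(upfront: float, maintenance_rate: float, years: int,
                  monthly_running: float = 0.0) -> float:
    """Fine-tuning: upfront build + annual maintenance (share of upfront) + running costs.

    Simplifying assumption: maintenance is charged in every year, including year one.
    """
    return upfront + upfront * maintenance_rate * years + monthly_running * 12 * years


# Hypothetical scenario: $500/month prompting vs. $20,000 fine-tuning at 20% maintenance
# plus $200/month to run the fine-tuned model.
for years in (1, 2, 3):
    print(years, prompt_tco(500, years), fine_tune_tco(20_000, 0.20, years, 200))
```

If the fine-tuned option costs more over your planning horizon, the accuracy gain has to pay for the difference in business value.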

- Assess your team’s technical capabilities and identify skill gaps that need addressing

94% of executives believe AI will be critical to business success within five years, amplifying the need for expert guidance in approach selection.

Professional AI consulting prevents costly mistakes in approach selection and implementation strategy. Experienced consultants provide objective assessment of your use case complexity and performance requirements. Professional guidance accelerates time-to-value while avoiding common pitfalls in AI project management.

Ha Nguyen Ngoc, Marketing Director at SmartDev, notes: “Early-stage audits and external consulting accelerate AI ROI by up to 40%, while mitigating risk of sunk costs in the wrong solution path.”

After engaging SmartDev’s AI consulting practice, a Southeast Asian logistics company reduced their AI deployment timeline by 44% and avoided a $35,000 misallocated investment in unnecessary fine-tuning.

Fig. 3. Input your requirements and get estimated costs and timelines for both approaches

SmartDev’s certified AI practitioners have successfully implemented both prompt engineering and fine-tuning solutions across diverse industries. Our team provides comprehensive AI strategy consulting to help organizations make informed decisions based on technical feasibility and business impact.

With 100% AI-certified developers and a proven track record in custom AI model development, SmartDev guarantees optimal approach selection for your specific use case. Consulting rates typically range from $150-350/hour or $2,500-15,000 per project for end-to-end assessment—often more cost-effective than misdirected development spend.