Introduction

AI investment continues to rise, yet outcomes consistently lag expectations. Organizations launch pilots, fund innovation teams, and deploy advanced models across functions, but most initiatives fail to deliver sustained business value at scale. The root cause is rarely technology readiness. AI execution issues, especially weak problem definition, unclear success metrics, and unstructured delivery models, remain the primary blockers that prevent promising ideas from becoming operational systems.

Across industries, AI projects stall not because models underperform in controlled environments, but because mistakes in executing AI projects compound over time. AI project planning often focuses on experimentation instead of operational readiness, which helps explain why so many AI proofs of concept fail to scale. Without a clear framework for ownership and delivery, organizations struggle to structure AI projects for success, and AI stays trapped in perpetual pilots rather than embedded in core business processes.

The hypothesis gap. Why unclear assumptions break AI initiatives

When AI starts without a business question

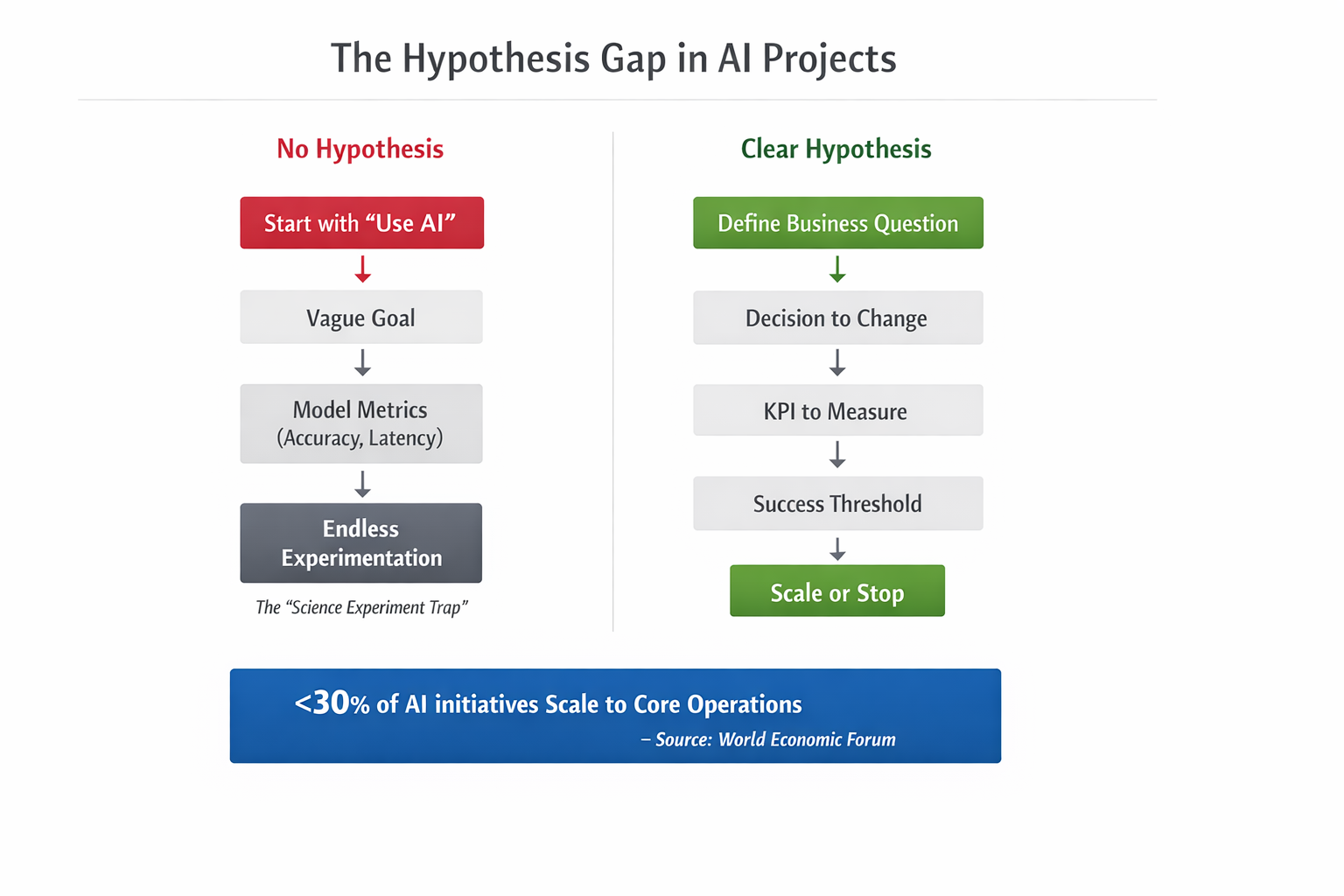

A missing or vague hypothesis is one of the earliest and most damaging AI execution issues. Many initiatives begin with a directive to “use AI” rather than a clearly defined business problem. Teams are not told which decision should improve, which workflow must change, or which KPI defines success. As a result, AI becomes a solution in search of a problem.

This lack of clarity pushes teams toward open-ended experimentation. IBM describes this as the science experiment trap, where AI work continues indefinitely because no hypothesis defines success, failure, or stopping conditions. Models improve incrementally, but the project never reaches a point where teams can confidently move toward deployment. Without a clear hypothesis, there is no shared agreement on when learning is sufficient or when investment should increase.

How unclear hypotheses create execution failure

The impact of weak hypotheses is visible at the enterprise level. The World Economic Forum reports that fewer than 30 percent of organizations successfully scale AI initiatives into core operations, largely because AI efforts are not tied to strategic objectives or measurable outcomes. In other words, more than 70 percent of organizations see their AI work stall before it reaches core operations, often despite pilots that showed technical promise.

Unclear hypotheses directly lead to mistakes in executing AI projects. Teams optimize proxy metrics such as accuracy or response time instead of business outcomes like revenue lift or cost reduction. Stakeholders disagree on whether progress is being made because success was never clearly defined. Leadership then struggles to justify continued funding, which reinforces broader AI execution issues.

A strong hypothesis creates alignment. It clearly states which decision will change, which KPI will move, and what level of improvement justifies scaling. This clarity reduces wasted experimentation, improves prioritization, and provides a clear path from pilot to production. Without it, even well-funded AI initiatives remain trapped in uncertainty from the very beginning.
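To make those three elements concrete, here is a minimal Python sketch of a hypothesis captured as data rather than prose. The class name `AIHypothesis`, its fields, and the claims-triage example are hypothetical illustrations, not a SmartDev template; the point is that the scaling threshold is written down before any results exist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIHypothesis:
    """A testable AI hypothesis: which decision changes, which KPI moves, and by how much."""

    decision: str             # the business decision or workflow the model should improve
    kpi: str                  # the business KPI expected to move
    baseline: float           # KPI value before the AI intervention (must be non-zero)
    min_relative_lift: float  # smallest relative improvement that justifies scaling (0.15 = 15%)
    higher_is_better: bool = True  # set to False for KPIs such as handling time or cost

    def relative_lift(self, observed: float) -> float:
        """Relative improvement of the observed KPI over the baseline."""
        change = (observed - self.baseline) / self.baseline
        return change if self.higher_is_better else -change

    def justifies_scaling(self, observed: float) -> bool:
        """True when the observed improvement meets the threshold agreed up front."""
        return self.relative_lift(observed) >= self.min_relative_lift


# Hypothetical example: cut average claim handling time by at least 15 percent.
h = AIHypothesis(
    decision="triage of incoming insurance claims",
    kpi="average handling time (minutes)",
    baseline=40.0,
    min_relative_lift=0.15,
    higher_is_better=False,
)
print(h.relative_lift(32.0))      # 0.2
print(h.justifies_scaling(32.0))  # True
print(h.justifies_scaling(37.0))  # False
```

Writing the threshold down this way gives the team an explicit stopping condition, which is exactly what open-ended experimentation lacks.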

From pilot to production failure. Why AI proof of concept fails to scale

The gap between pilot success and production deployment is one of the clearest symptoms of AI execution issues. Many organizations can demonstrate AI working in a controlled environment, yet very few can turn those pilots into systems that operate reliably at scale. The problem is not model performance. It is execution readiness.

Enterprise data highlights how widespread this failure is. An MIT-backed analysis reported that 95 percent of generative AI pilots fail to scale inside companies, despite showing promising results during experimentation. Forbes reinforces this finding, noting that failure rates of up to 95 percent are driven primarily by execution, data, and governance breakdowns rather than limitations in algorithms or tools.

These outcomes explain why AI proofs of concept fail to scale even when technical indicators look strong. The most common failure points are execution-related and recur across organizations.

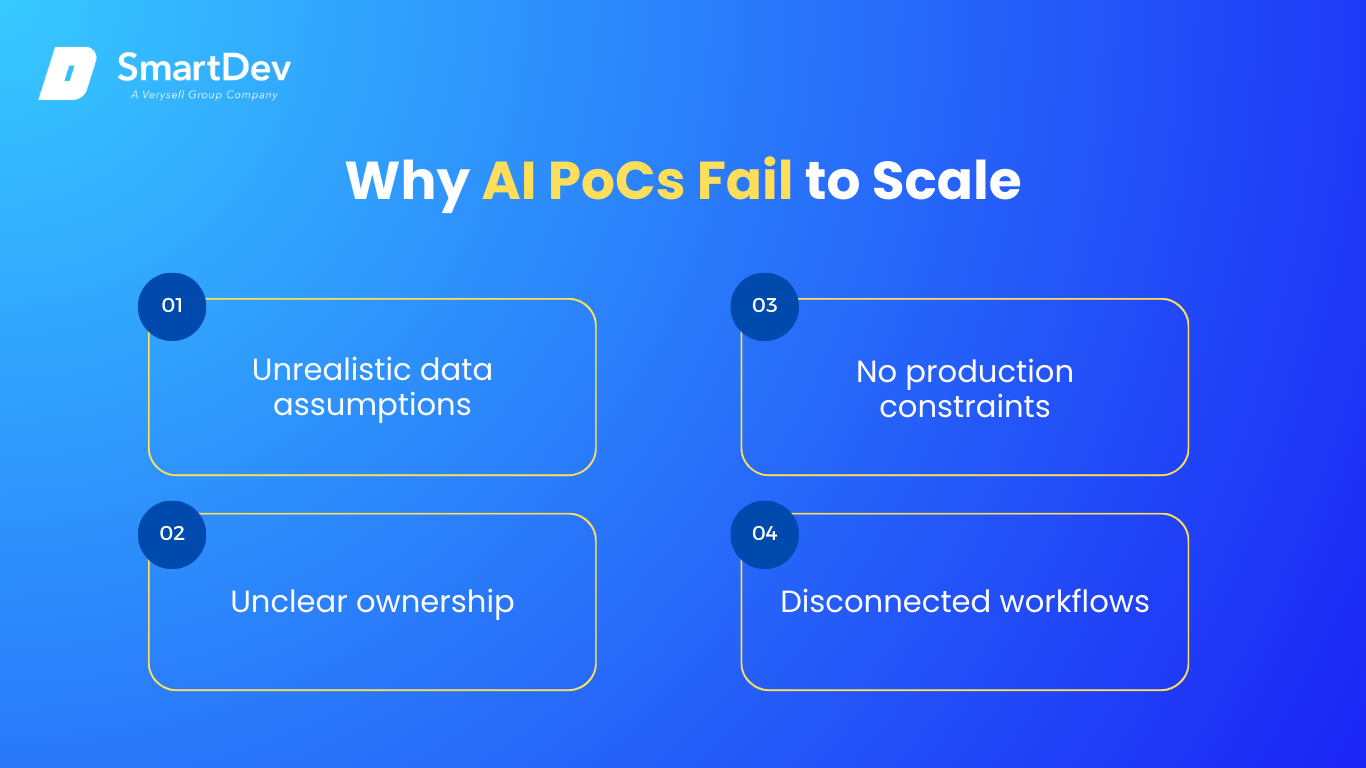

Why AI proof of concept fails to scale

- Unrealistic data assumptions: Pilots rely on curated or historical datasets that do not reflect real-world data quality, volume, or variability

- No production constraints: Security, compliance, latency, cost, and reliability are ignored during pilots, making later rework expensive or impossible

- Unclear ownership: Teams that build pilots are often not responsible for operating them, leading to stalled handoffs and accountability gaps

- Disconnected workflows: Pilots are not embedded into existing business processes, limiting adoption and measurable impact

These mistakes in executing AI projects create false confidence. Leaders see promising demos, assume progress is being made, and only discover execution gaps when scaling begins.

SmartDev argues that proofs of concept should intentionally surface execution risks early rather than hide them behind controlled demos. When pilots are designed with production in mind, organizations reduce downstream failure and address AI execution issues before they become irreversible.

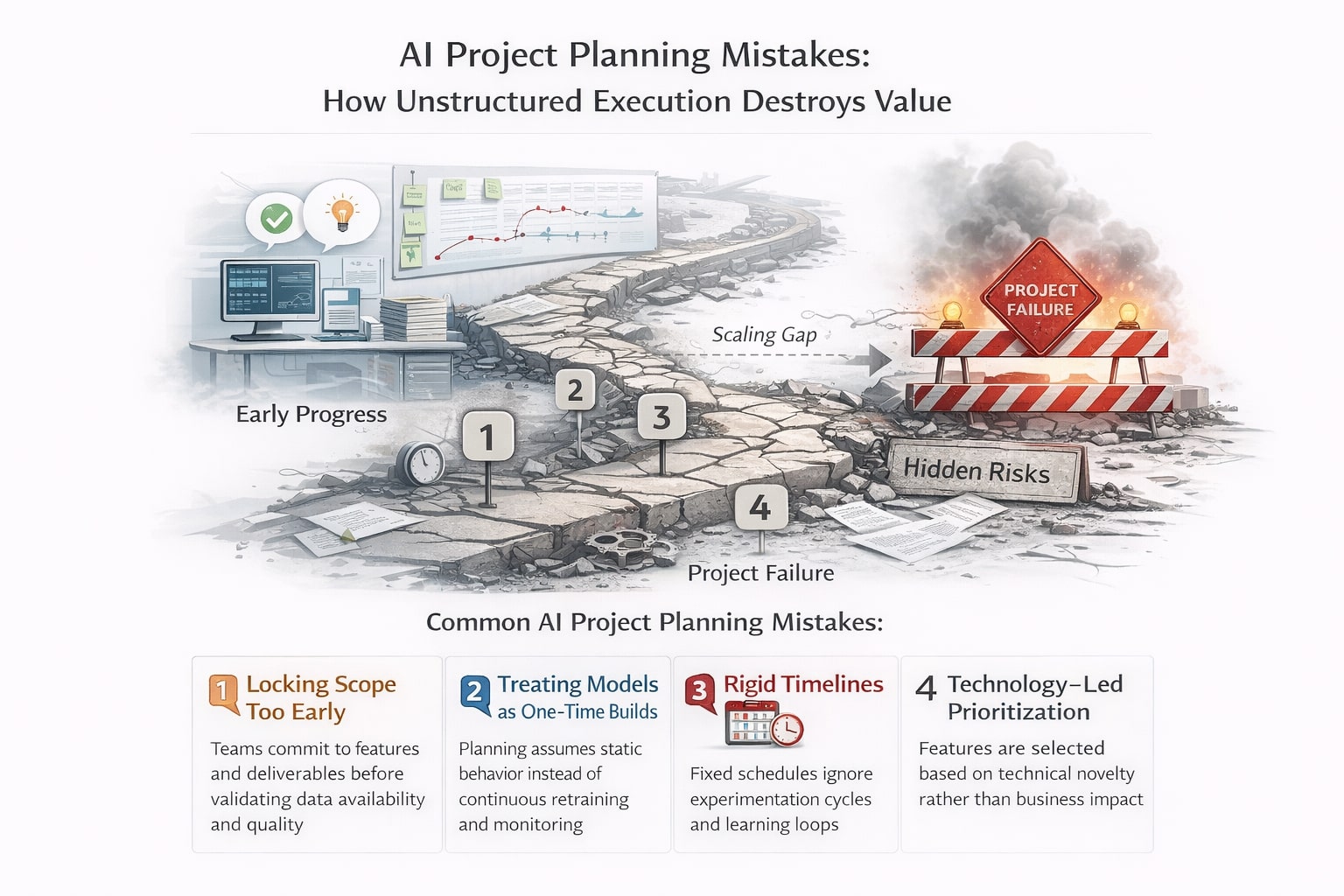

AI project planning mistakes. How unstructured execution destroys value

AI project planning must account for uncertainty, iteration, and heavy data dependency. Unlike traditional software development, AI outcomes cannot be fully specified upfront. Models evolve as data quality, feature relevance, and performance constraints are discovered. Yet many organizations continue to apply rigid planning frameworks designed for deterministic systems. This mismatch creates persistent AI execution issues long before deployment begins.

Execution data confirms the impact of poor planning. Onlim identifies unrealistic timelines and weak stakeholder alignment as leading causes of AI project failure, noting that these issues often remain hidden until late-stage execution breaks down. Early progress can appear smooth, but underlying planning flaws accumulate silently until delivery becomes impossible or costs exceed expectations.

These outcomes are driven by recurring mistakes in executing AI projects, especially during the planning phase.

Common AI project planning mistakes

- Locking scope too early: Teams commit to features and deliverables before validating data availability and quality

- Treating models as one-time builds: Planning assumes static behavior instead of continuous retraining and monitoring

- Rigid timelines: Fixed schedules ignore experimentation cycles and learning loops

- Technology-led prioritization: Features are selected based on technical novelty rather than business impact

These planning errors explain why many AI initiatives appear successful on paper but fail to deliver measurable value in practice. Resources are spent building capabilities that do not move key business metrics.

SmartDev shows that value-driven feature prioritization significantly improves execution outcomes by aligning development effort with business goals from the start. When AI project planning is structured around impact rather than assumptions, teams reduce waste, improve decision-making, and mitigate downstream AI execution issues before they become irreversible.
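One simple way to make prioritization value-driven is to rank backlog items by confidence-weighted business impact per week of effort. The sketch below assumes that scoring rule, along with the `Feature` fields and the example numbers; it illustrates the idea and is not SmartDev's actual prioritization method.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    expected_impact: float  # estimated annual business value (an estimate, not a measurement)
    confidence: float       # 0..1, how sure the team is about the impact estimate
    effort_weeks: float     # estimated delivery effort in weeks

    def value_score(self) -> float:
        """Confidence-weighted business value per week of effort (hypothetical rule)."""
        return self.expected_impact * self.confidence / self.effort_weeks


def prioritize(features: list[Feature]) -> list[Feature]:
    """Order the backlog by value score, highest first."""
    return sorted(features, key=lambda f: f.value_score(), reverse=True)


# Hypothetical backlog: the most novel item is not the most valuable one.
backlog = [
    Feature("LLM-generated summaries", expected_impact=50_000, confidence=0.3, effort_weeks=6),
    Feature("Auto-routing of tickets", expected_impact=120_000, confidence=0.6, effort_weeks=4),
    Feature("Custom vision model", expected_impact=200_000, confidence=0.2, effort_weeks=12),
]
for f in prioritize(backlog):
    print(f"{f.name}: {f.value_score():,.0f}")
```

Scoring by value rather than novelty pushes the ticket-routing feature to the top, even though the vision model has the largest headline impact.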

Data, governance, and ownership gaps that derail AI delivery

Data quality problems are often blamed for AI failure, but the root cause is usually unclear accountability. Data becomes fragmented when no team clearly owns its accuracy, timeliness, or consistency. Forbes links this fragmentation directly to AI failure rates of up to 95 percent, noting that models cannot perform reliably without shared data standards and ownership.

These breakdowns are compounded by governance gaps that emerge after deployment. Many organizations focus on building models but neglect the structures required to operate them safely and reliably over time. IBM emphasizes that AI systems must be governed throughout their entire lifecycle, not just during development.

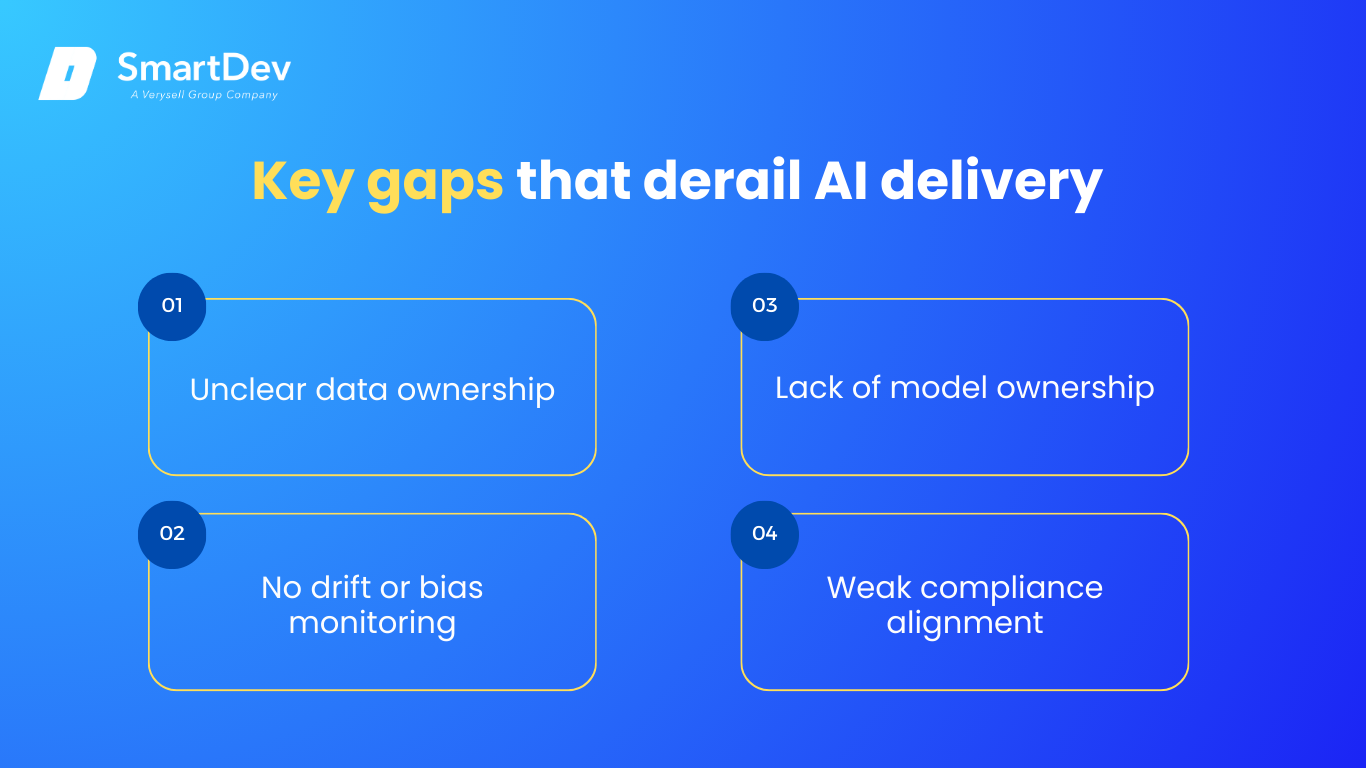

Key gaps that derail AI delivery

- Unclear data ownership: No single team is accountable for data quality, definitions, or lineage

- Lack of model ownership: Responsibility for performance, retraining, and incident response is undefined

- No drift or bias monitoring: Models degrade silently as data distributions change

- Weak compliance alignment: Regulatory and ethical requirements are addressed reactively, not by design
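Drift monitoring does not need to be elaborate to be useful. The sketch below computes the population stability index (PSI), a common statistic for comparing a live feature distribution against a reference sample, in plain Python. The equal-width binning scheme, the `eps` floor, and the 0.25 alert threshold (a widely used rule of thumb, not a formal standard) are assumptions to tune for each use case.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a live sample.

    Bin edges come from the reference sample's range; eps avoids log(0) for empty bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the reference range into the first or last bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(1000)]      # values 0.00 .. 9.99
shifted = [i / 100 + 3.0 for i in range(1000)]  # same shape, shifted upward
print(round(population_stability_index(reference, reference), 4))  # 0.0
psi = population_stability_index(reference, shifted)
print("drift alert" if psi > 0.25 else "stable")
```

Run on a schedule against each model input, a check like this turns silent degradation into an alert that a named model owner is expected to act on.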

These issues reinforce systemic AI execution issues. When performance drops, teams debate responsibility instead of fixing the problem. When risk increases, organizations respond by shutting systems down rather than improving them.

Ultimately, these failures are structural, not technical. Organizations that establish clear data stewardship, lifecycle governance, and ownership transform AI from a fragile experiment into a dependable operational capability.

Explore how SmartDev partners with teams through a focused AI discovery sprint to validate business problems, align stakeholders, and define a clear path forward before development begins.

SmartDev helps organizations clarify AI use cases and feasibility through a structured discovery process, enabling confident decisions and reduced risk before committing to build.

Learn how companies accelerate AI initiatives with SmartDev’s discovery sprint.

How to structure AI projects for success. A practical execution model

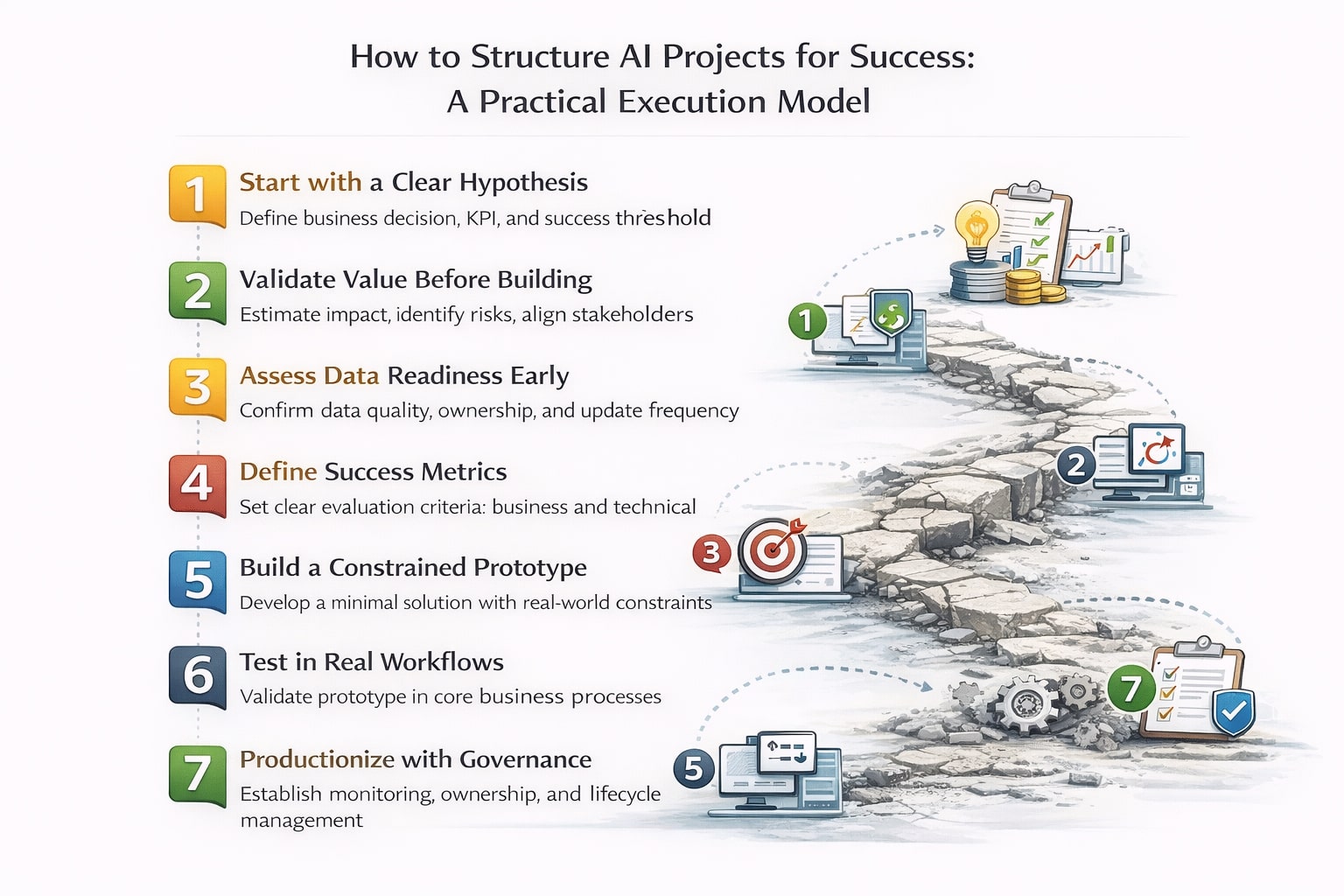

Organizations that overcome AI execution issues follow a clear, step-by-step execution model that turns experimentation into disciplined delivery. This structure does not eliminate uncertainty. It manages it deliberately and progressively.

Step 1. Start with a clear hypothesis

Every AI project must begin with a clearly defined hypothesis. This includes the business decision or workflow to improve, the KPI that will change, and the threshold that defines success. Without this step, teams fall into open-ended experimentation and repeat the same mistakes in executing AI projects.

Step 2. Validate value before building

Before writing code, teams must confirm that solving the problem is worth the effort. This step focuses on estimating business impact, identifying key risks, and aligning stakeholders. Many AI initiatives fail because value is assumed rather than tested, and that untested assumption often surfaces later as a proof of concept that cannot justify scaling.

Step 3. Assess data readiness early

Data availability, quality, and ownership must be checked upfront. This includes understanding where data comes from, how often it updates, and what gaps exist. Most late-stage failures originate here, making this step critical to reducing AI execution issues.
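A first pass at data readiness can be automated with a few lines of code. This sketch assumes records arrive as Python dictionaries with timezone-aware timestamps; the field names, the one-day freshness window, and the 95 percent completeness cut-off are hypothetical choices that each team would set for its own use case.

```python
from datetime import datetime, timedelta, timezone


def data_readiness(records, required_fields, timestamp_field, max_age, min_completeness=0.95):
    """Report per-field completeness and the freshness of the newest record."""
    if not records:
        raise ValueError("no records to assess")
    total = len(records)
    # Share of records where each required field is present and non-empty.
    completeness = {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / total
        for field in required_fields
    }
    timestamps = [r[timestamp_field] for r in records if r.get(timestamp_field)]
    age = datetime.now(timezone.utc) - max(timestamps) if timestamps else None
    return {
        "rows": total,
        "completeness": completeness,
        "newest_record_age": age,
        "is_fresh": age is not None and age <= max_age,
        "gaps": [f for f, share in completeness.items() if share < min_completeness],
    }


# Hypothetical customer extract with one blank field and one missing timestamp.
now = datetime.now(timezone.utc)
sample = [
    {"customer_id": 1, "segment": "retail", "updated_at": now - timedelta(hours=2)},
    {"customer_id": 2, "segment": "", "updated_at": now - timedelta(days=3)},
    {"customer_id": 3, "segment": "sme", "updated_at": None},
]
report = data_readiness(sample, ["customer_id", "segment"], "updated_at", max_age=timedelta(days=1))
print(report["gaps"])      # ['segment']
print(report["is_fresh"])  # True
```

A report like this does not replace conversations with data owners, but it surfaces gaps in days rather than months.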

Step 4. Define success metrics and evaluation criteria

Teams must agree on how success will be measured, using both business metrics and technical indicators. Clear evaluation criteria prevent confusion, misalignment, and subjective progress reporting during execution.

Step 5. Build a constrained prototype

The goal of prototyping is not to impress but to learn. Teams should build the smallest possible solution that can validate the hypothesis, while applying real-world constraints such as security, latency, and cost from the start.
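Applying constraints from the start means measuring the prototype against explicit budgets. In this sketch the latency and cost budgets, the `Budget` type, and the sample measurements are all hypothetical; `statistics.quantiles` from the Python standard library supplies the 95th-percentile latency.

```python
import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    p95_latency_ms: float
    max_cost_per_1k_requests: float  # in whatever currency the team tracks costs in


def check_constraints(latencies_ms, total_cost, budget):
    """Compare prototype measurements against production budgets agreed up front."""
    # quantiles(n=20) returns 19 cut points; index 18 is the 95th percentile.
    p95 = statistics.quantiles(latencies_ms, n=20)[18]
    cost_per_1k = total_cost / len(latencies_ms) * 1000
    violations = []
    if p95 > budget.p95_latency_ms:
        violations.append(f"p95 latency {p95:.0f} ms exceeds {budget.p95_latency_ms:.0f} ms")
    if cost_per_1k > budget.max_cost_per_1k_requests:
        violations.append(
            f"cost {cost_per_1k:.2f} per 1k requests exceeds {budget.max_cost_per_1k_requests:.2f}"
        )
    return violations


# Hypothetical measurements from 100 prototype requests, 10 of them slow.
budget = Budget(p95_latency_ms=500, max_cost_per_1k_requests=25.0)
slow_and_costly = [120.0] * 90 + [900.0] * 10
for problem in check_constraints(slow_and_costly, total_cost=4.0, budget=budget):
    print(problem)
```

The average latency here looks acceptable; only the tail percentile reveals that the prototype would breach a production budget.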

Step 6. Test in real workflows

Prototypes must be tested with real users and operational data. This step reveals adoption friction, edge cases, and integration challenges that controlled demos often hide.

Step 7. Productionize with governance

Once validated, the system is productionized with proper monitoring, ownership, and lifecycle management. Governance ensures models remain reliable, compliant, and valuable over time.

Following this structure makes success with AI projects repeatable rather than accidental. It turns isolated pilots into scalable systems and directly addresses the root causes of AI execution issues.

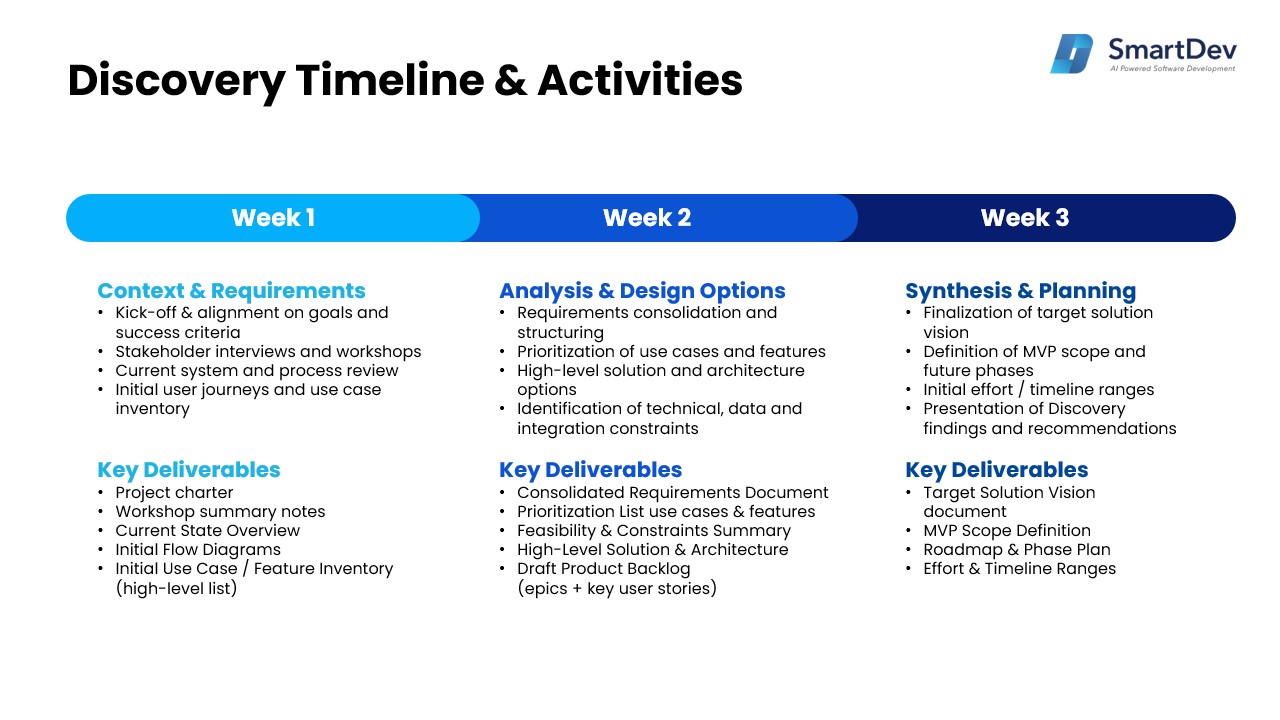

The 3-week AI discovery phase. Reducing execution risk early

The 3-week AI discovery phase is designed to address AI execution issues at their source. Instead of building first and validating later, this phase forces clarity before significant time and budget are committed. Many AI initiatives fail because uncertainty is discovered too late. Discovery compresses that uncertainty into a short, structured window.

SmartDev’s 3-week discovery program focuses on hypothesis validation, data feasibility, and value definition within 21 days, significantly reducing downstream execution risk. During this phase, teams align on the business problem, test whether data can realistically support the use case, and clarify what success actually means.

Typical outcomes include a validated AI hypothesis tied to a business KPI, a clear assessment of data readiness and gaps, and a prioritized backlog aligned to business value rather than technical novelty. By keeping the phase intentionally short, organizations avoid analysis paralysis while eliminating the most common mistakes in executing AI projects. Discovery does not guarantee success, but it ensures that only viable initiatives move forward.

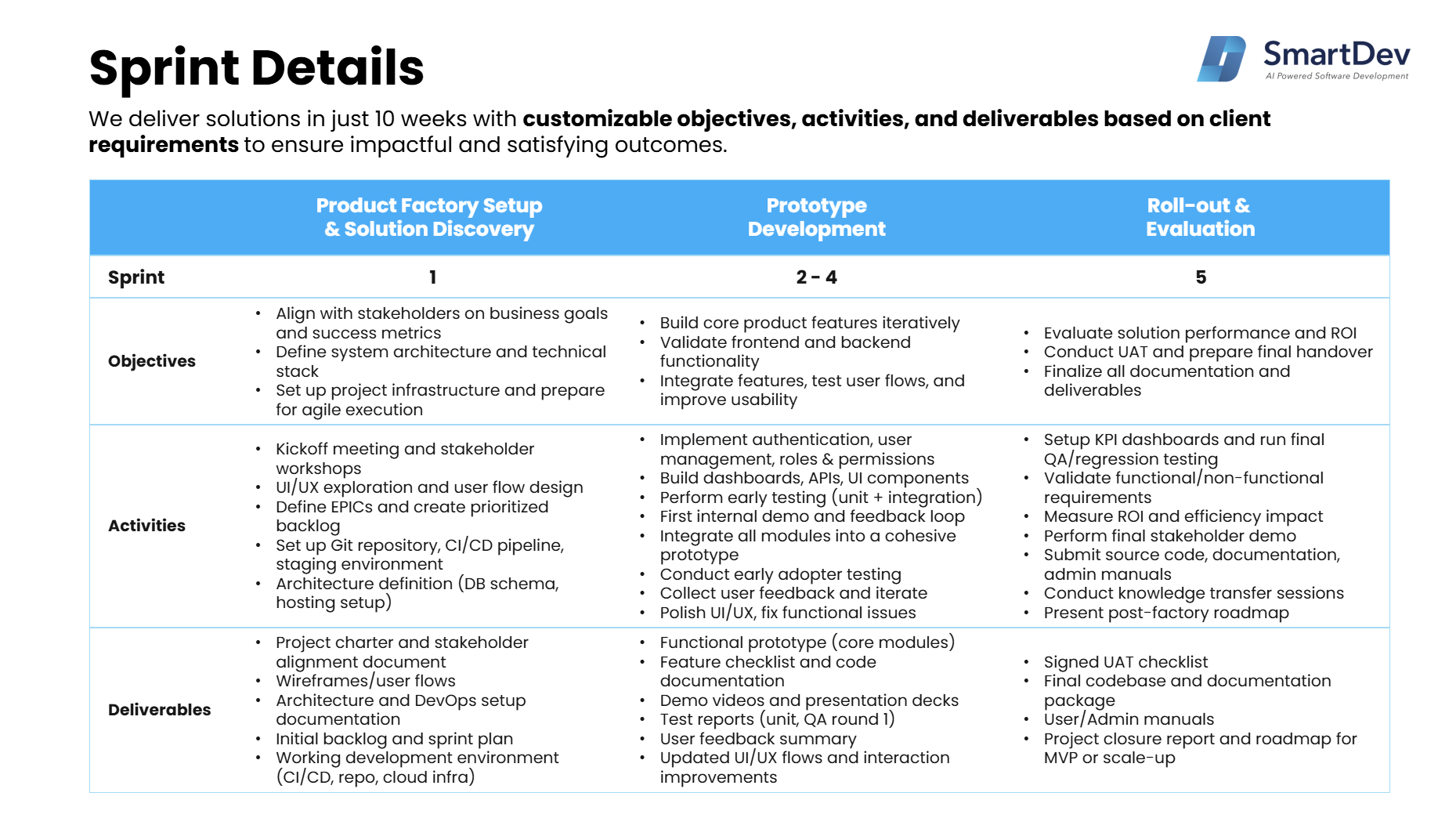

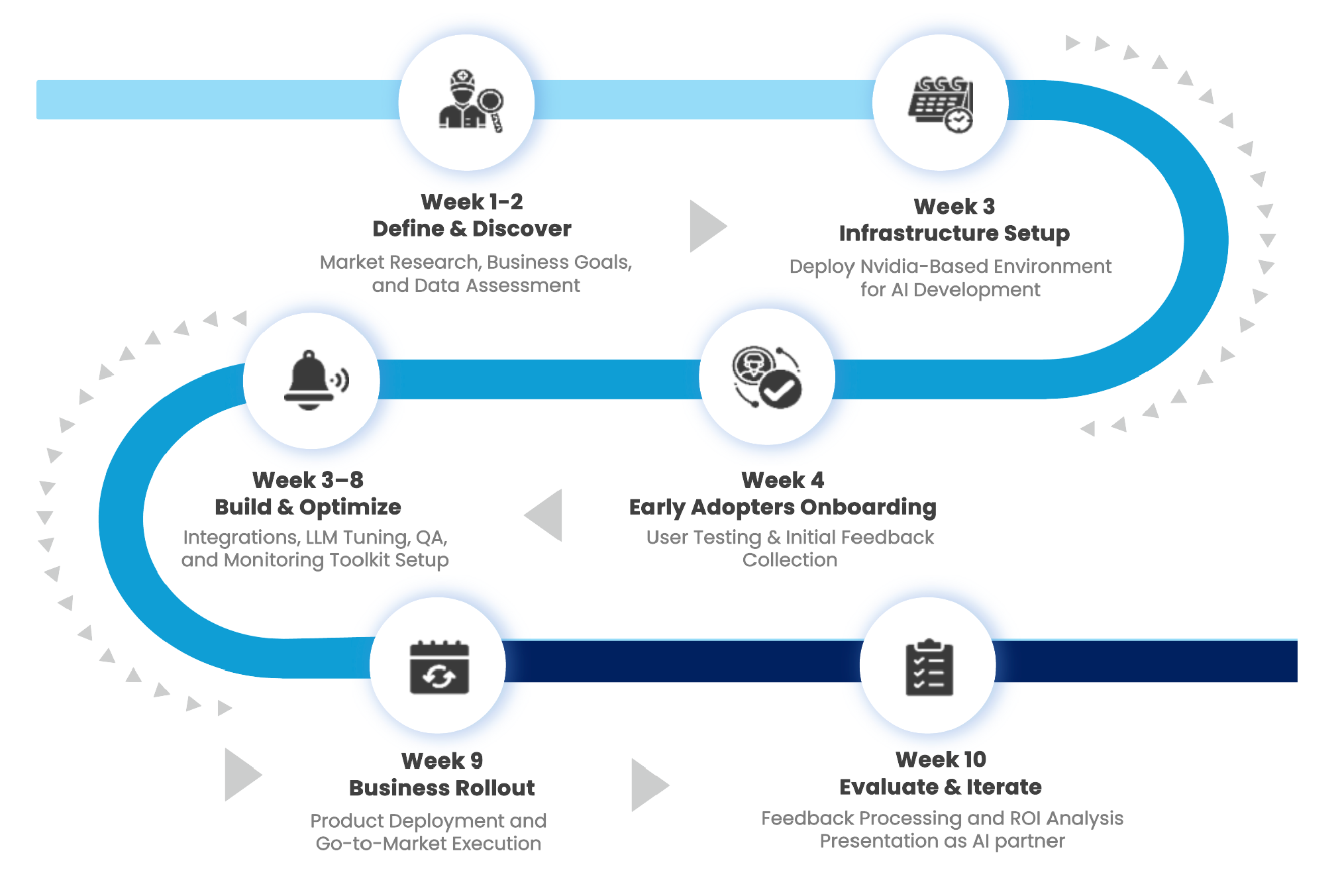

The 10-Week AI Product Factory. Designed to Fix AI Execution Issues and Prove ROI Early

The 10-week AI Product Factory is built specifically to address the root causes of AI execution issues. Instead of optimizing for technical demos, it is designed to produce early, decision-ready evidence of business value. This matters because many organizations fail not at building AI, but at proving why an AI initiative deserves to scale.

SmartDev’s AI Product Factory helps organizations validate AI use cases faster, reduce delivery risk, and shorten time-to-market compared to traditional AI development cycles. More importantly, it operationalizes early AI ROI validation by structuring experimentation around business outcomes, not just technical feasibility. This directly tackles the main reason AI proofs of concept fail to scale: pilots succeed technically but fail to justify continued investment.

Each phase of the factory is intentionally aligned to:

- Measuring AI pilot success using real operational and financial metrics

- Defining AI business impact metrics before development begins

- Translating AI experiments into decision-ready business evidence

As a result, AI initiatives generate insight quickly, long before large-scale investment is required.

Phase 1. Define & Discover (Weeks 1–2). Framing AI Proof of Concept ROI

Phase 1 focuses on eliminating ambiguity. Instead of starting with models or data, teams start with execution clarity. This phase transforms AI ideas into testable business hypotheses, directly addressing early AI execution issues caused by vague objectives.

During this phase, teams:

- Align on business objectives and concrete success criteria

- Define AI business impact metrics tied to cost reduction, efficiency, or revenue

- Establish an initial AI proof of concept ROI hypothesis

- Identify assumptions and risks that could undermine ROI

By the end of Phase 1, AI initiatives are no longer abstract experiments. They are clearly scoped around measurable outcomes, creating a credible foundation for ROI evaluation and avoiding common mistakes in executing AI projects.

Phase 2. Prototype Development (Weeks 3–8). Measuring AI Pilot Success in Practice

Phase 2 is where execution discipline matters most. This phase reflects a critical principle: measuring AI pilot success must happen during execution, not after delivery.

Development is deliberately focused on:

- High-impact workflows that directly move AI business impact metrics, ensuring every feature contributes to measurable value

- AI models embedded and tested within real operational environments, exposing adoption, performance, and integration risks early

- Continuous validation of AI ROI assumptions using live pilot data

Through iterative demos and tight feedback loops, stakeholders can already see where value is emerging, where friction exists, and whether expected ROI remains realistic. This turns experimentation into measurable business evidence, not speculative promise. It directly reduces AI execution issues related to late-stage surprises.

Phase 3. Rollout & Evaluation (Weeks 9–10). From Metrics to Scale Decisions

The final phase converts metrics into clear execution decisions. This is where early ROI evidence replaces optimism.

During this phase, teams:

- Validate performance against predefined success criteria

- Translate operational metrics into financial impact

- Compare conservative, expected, and upside ROI scenarios
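Translating operational metrics into financial impact is mostly arithmetic once the assumptions are explicit. The sketch below converts a hypothetical pilot metric (minutes saved per case) into annual benefit and compares conservative, expected, and upside scenarios using a simple undiscounted three-year ROI. Every number, including the build and run costs, is an illustrative assumption; a real business case would typically add discounting and an adoption ramp-up.

```python
def roi(annual_benefit, annual_run_cost, build_cost, years=3):
    """Simple undiscounted ROI: (total benefit - total cost) / total cost."""
    total_cost = build_cost + annual_run_cost * years
    total_benefit = annual_benefit * years
    return (total_benefit - total_cost) / total_cost


# Hypothetical pilot results: minutes saved per processed case under each scenario.
minutes_saved_per_case = {"conservative": 4, "expected": 8, "upside": 12}
cases_per_year = 50_000
cost_per_hour = 45.0  # assumed fully loaded labor cost

results = {}
for name, minutes in minutes_saved_per_case.items():
    # Operational metric -> annual financial benefit.
    benefit = minutes / 60 * cases_per_year * cost_per_hour
    results[name] = roi(benefit, annual_run_cost=60_000, build_cost=150_000)
    print(f"{name:>12}: benefit {benefit:,.0f}/yr, 3-yr ROI {results[name]:.0%}")
```

If even the conservative scenario stays positive under honest assumptions, the case for scaling is strong; if only the upside scenario pays off, a pivot or stop decision deserves serious consideration.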

The outcome is an evidence-based view of AI proof of concept ROI. Leaders can confidently decide whether to scale, pivot, or stop the initiative. This ensures AI investments are guided by data and execution reality, not hope, and directly addresses long-standing AI project planning and execution failures.

Conclusion

Most AI initiatives fail not because the technology is immature, but because AI execution issues are left unresolved. Unclear hypotheses, weak AI project planning, and the absence of structured execution frameworks repeatedly cause promising pilots to stall. This is why so many AI proofs of concept fail to scale.

The path forward is not more experimentation. It is better execution. Organizations that succeed treat AI as a delivery discipline, not a research exercise. They define business outcomes before building, validate value early, and structure execution so learning feeds production rather than replacing it. This approach eliminates the most common mistakes in executing AI projects long before large investments are made.

By adopting clear discovery phases, disciplined execution models, and factory-style delivery, leaders finally learn how to structure AI projects for success. AI stops being a collection of isolated pilots and becomes a repeatable capability that produces measurable business impact. In an environment where AI investment continues to rise, execution is the true competitive advantage.