In today’s fast-paced digital world, businesses are increasingly turning to artificial intelligence (AI) to enhance their operations and stay competitive. However, integrating AI into existing enterprise systems can be complex and disruptive, especially when legacy infrastructure wasn’t built with modern AI technologies in mind. This is where the API-first approach comes into play. By treating APIs (Application Programming Interfaces) as the main integration layer, businesses can connect AI models with their legacy systems and let data and functionality flow across platforms.

What is the API-First Approach?

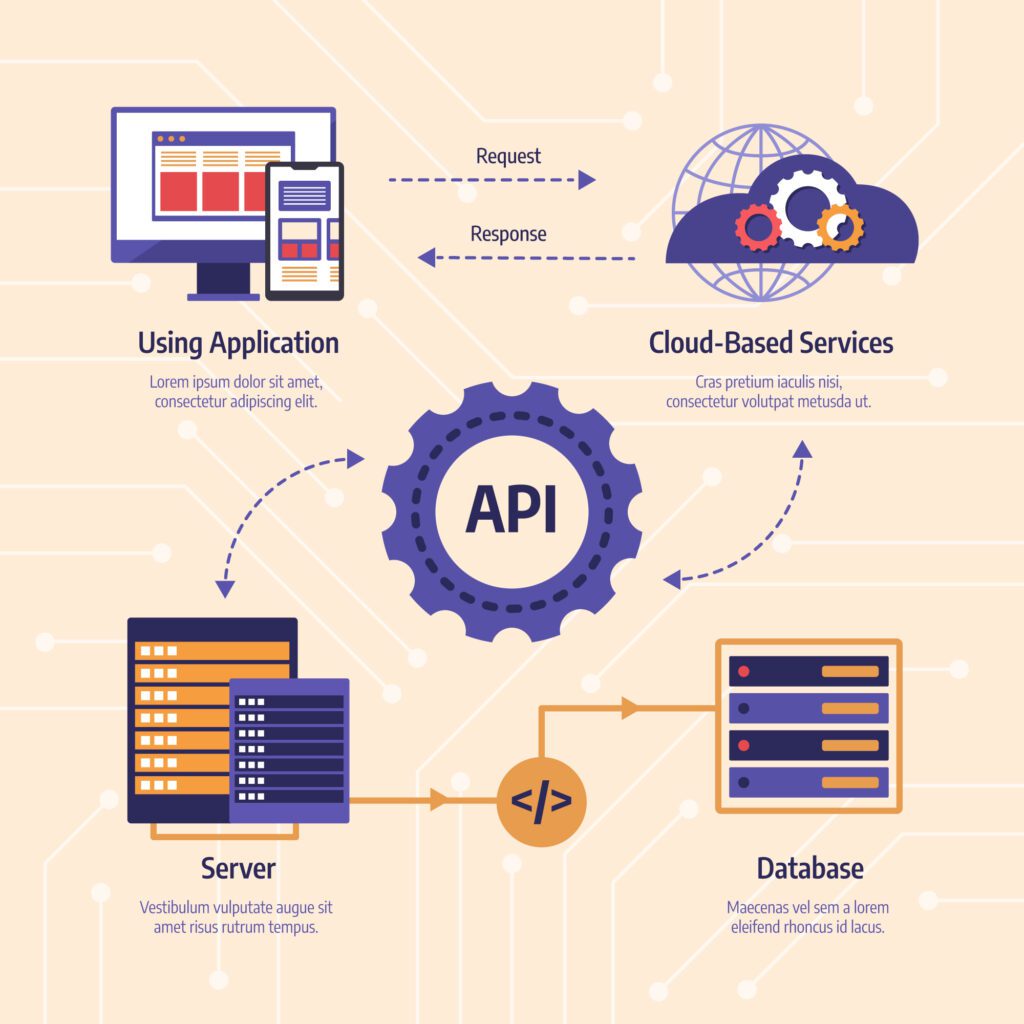

The API-first approach is a modern integration strategy where Application Programming Interfaces (APIs) are designed first, before any application code is written. This ensures that APIs become the standard method through which data flows between systems, enabling modular and scalable connectivity for AI models.

Key Characteristics of the API-First Approach:

- Designing APIs at the Forefront: APIs are created at the beginning of the process, ensuring that all systems and applications interact through standardized interfaces.

- Decoupling AI from Infrastructure: By using APIs, businesses can decouple their AI models from the underlying infrastructure, enabling AI components to be more modular, reusable, and adaptable to future changes.

- Scalable Communication: APIs act as the standardized communication layer, allowing custom AI models to easily interface with existing on-premise or cloud-based systems.
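To make the decoupling idea concrete, here is a minimal Python sketch. It assumes a hypothetical `Predictor` interface and two stand-in models (`ThresholdModel`, `WeightedModel`); none of these names come from a real library. The point is only that calling code depends on the interface, so the model behind it can be swapped.

```python
from typing import Protocol, Sequence


class Predictor(Protocol):
    """Hypothetical contract every model must satisfy; callers depend only on this."""

    def predict(self, features: Sequence[float]) -> float: ...


class ThresholdModel:
    """Stand-in model: returns 1.0 when the mean of the inputs exceeds a cutoff."""

    def __init__(self, cutoff: float) -> None:
        self.cutoff = cutoff

    def predict(self, features: Sequence[float]) -> float:
        mean = sum(features) / len(features)
        return 1.0 if mean > self.cutoff else 0.0


class WeightedModel:
    """A replacement model with different internals but the same interface."""

    def __init__(self, weights: Sequence[float]) -> None:
        self.weights = list(weights)

    def predict(self, features: Sequence[float]) -> float:
        return sum(w * x for w, x in zip(self.weights, features))


def score_order(model: Predictor, features: Sequence[float]) -> dict:
    """Caller code: knows the interface, not which model sits behind it."""
    return {"score": model.predict(features)}
```

Swapping `ThresholdModel` for `WeightedModel` requires no change to `score_order`, which is the property the API-first approach aims for at the system level.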

This approach has become increasingly important for organizations integrating AI models with legacy systems. According to Postman’s 2024 State of the API Report, 85% of organizations using an API-first approach report increased speed in development and integration, demonstrating the tangible benefits of API-first strategies.

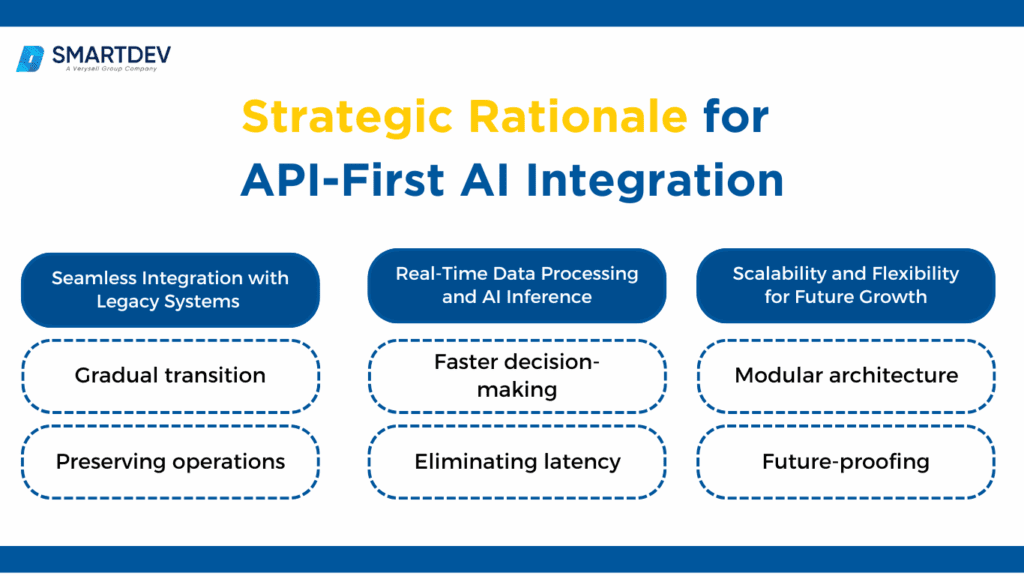

The Strategic Rationale for API-First AI Integration

The API-first approach offers many advantages when integrating AI models with existing enterprise systems, especially in environments with limited resources, like edge computing. This approach allows businesses to incorporate AI technologies without disrupting current operations or requiring extensive infrastructure overhauls.

Here’s why the API-first strategy is essential for businesses scaling their AI capabilities:

1. Seamless Integration with Legacy Systems

Many organizations still use legacy systems that were not built to handle modern AI technologies. Replacing these systems entirely is expensive and disruptive. The API-first approach helps businesses avoid this by enabling incremental integration of AI models into their existing systems.

- Gradual Transition: APIs allow businesses to connect new AI models to legacy systems without completely overhauling them. This means that AI models can be added gradually over time, ensuring smooth transitions.

- Preserving Operations: By adding AI capabilities via APIs, businesses can modernize their systems without interrupting their daily operations. For instance, an AI model for predictive analytics can be integrated into one department at a time, like inventory management, and later expanded to other functions.

This method reduces downtime and costs associated with migration. According to a report by Postman, organizations using an API-first approach experienced 45% faster deployment of new technologies, including AI solutions, compared to those using traditional methods.

2. Real-Time Data Processing and AI Inference

In environments like manufacturing and IoT, edge devices need to process data locally and make decisions in real-time. This is where APIs play a critical role.

- Faster Decision-Making: APIs allow real-time communication between edge devices (like sensors or cameras) and enterprise systems, without relying on centralized servers. This helps in making quick decisions, which is crucial in operational environments.

- Reducing Latency: By processing data locally, edge devices avoid the round-trip delay of sending data to the cloud. This leads to faster AI inference, which is especially important in industries like manufacturing, where machine failures can be costly if not detected quickly.

For example, in a smart factory, sensors continuously monitor machine health. APIs can send data from these sensors to AI models for processing, which then provide real-time insights to managers. This allows for predictive maintenance, helping to avoid downtime.
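As a rough illustration of the smart factory flow, the sketch below parses a JSON payload from a sensor and flags readings that sit far above a recent baseline. The payload fields (`machine_id`, `vibration_mm_s`), the z-score rule, and the limit of 3.0 are all assumptions chosen for the example; a real predictive maintenance model would be considerably more involved.

```python
import json
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass(frozen=True)
class SensorReading:
    machine_id: str
    vibration_mm_s: float


def parse_reading(payload: str) -> SensorReading:
    """Turn the JSON body posted by a sensor into a typed reading."""
    data = json.loads(payload)
    return SensorReading(
        machine_id=str(data["machine_id"]),
        vibration_mm_s=float(data["vibration_mm_s"]),
    )


def needs_maintenance(history: list[float], reading: SensorReading,
                      z_limit: float = 3.0) -> bool:
    """Flag a reading more than z_limit standard deviations above the baseline."""
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # A perfectly flat history: any increase counts as unusual.
        return reading.vibration_mm_s > baseline
    return (reading.vibration_mm_s - baseline) / spread > z_limit
```

An API endpoint would call `parse_reading` on each incoming request and pass the result to `needs_maintenance`, returning the flag to the system that schedules maintenance.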

3. Scalability and Flexibility for Future Growth

The API-first approach enables businesses to scale their AI solutions as their operations grow. APIs are flexible, making it easy to integrate new AI models and devices into existing systems.

- Modular Architecture: APIs allow businesses to add or update AI models without disrupting their entire system. As business needs change or new devices are added, APIs provide a flexible way to accommodate these changes.

- Future-Proofing: With APIs, businesses can upgrade their AI models over time, incorporating newer algorithms or features without replacing the entire system. This ensures long-term adaptability and keeps the organization ready for future technology advancements.

For instance, as new IoT devices are deployed, APIs make it easy to integrate them into the existing AI ecosystem. This scalability makes it easier for businesses to continue expanding their AI capabilities.

According to API7.ai, companies using an API-first strategy report 30% better scalability compared to those using traditional integration methods.

Step-by-Step Guide to Integrating AI Models into Enterprise Systems Using APIs

Integrating AI into business applications is a transformative process that can help companies automate tasks, enhance decision-making, and provide personalized customer experiences. However, the challenge lies in connecting AI models with existing enterprise systems without disrupting operations. The API-first approach simplifies this process, offering a seamless and flexible way to connect AI models to enterprise systems. In this guide, we’ll walk through the steps of integrating AI models into enterprise systems using APIs, from understanding business requirements to real-time data processing and ongoing optimization.

1. Assessing Business Requirements and Identifying AI Use Cases

Before diving into the technical implementation, it’s essential to clearly define the business problem you want to solve with AI. This step involves understanding the specific needs and determining where AI will add the most value within the organization.

- Understanding Business Needs:

The first step is to identify which areas of your business could benefit from AI. For example, if you’re in retail, AI can optimize inventory management, recommend personalized products to customers, or improve customer service with chatbots. Similarly, in finance, AI can enhance fraud detection and automate data entry tasks. The goal is to find AI use cases that align with your company’s strategic objectives.

- Mapping Out Potential AI Applications:

Once you’ve identified key areas, break down potential AI applications by department (e.g., sales, marketing, operations). This helps in understanding the scope and potential impact of AI across your enterprise. Prioritize the use cases that will provide the most value with the least disruption, ensuring you’re focusing on projects that align with business goals and have a clear ROI.

By understanding your business needs and mapping potential AI applications, you create a strong foundation for the successful integration of AI models into your enterprise systems.

2. Designing a Robust API Architecture for AI Integration

After identifying AI use cases, the next step is to design the API architecture that will integrate the AI models into your existing enterprise systems. API-first architecture is crucial for ensuring that AI services can be easily accessed and interacted with by other systems within your organization.

- Structuring APIs for Real-Time AI Inference:

Many AI applications require real-time processing, such as fraud detection, recommendation engines, or dynamic pricing models. Your API architecture must support low-latency, real-time communication to ensure that the AI models can process data and return predictions without delay. RESTful APIs are commonly used for their simplicity and efficiency in such applications.

- Ensuring Scalability, Flexibility, and Reliability:

Your API design should support scalability to handle increasing data loads as the business grows. Cloud-based infrastructure or microservices architectures allow for easier scaling and flexibility. By designing APIs that can handle spikes in traffic and ensure uptime, you prevent bottlenecks and system failures. Additionally, an API-first approach helps isolate AI models from the core system, enabling you to replace or update AI models without impacting the rest of your infrastructure.

This robust architecture is essential for ensuring that AI models can be accessed and used efficiently without causing disruptions to the business.
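Designing the API first usually means writing down the request and response contract before any model code exists. The following sketch shows one possible contract in Python. The field names (`request_id`, `features`, `score`, `model_version`) are assumptions for illustration, not a standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PredictionRequest:
    request_id: str
    features: list[float]


@dataclass(frozen=True)
class PredictionResponse:
    request_id: str
    score: float
    model_version: str


def parse_request(body: str) -> PredictionRequest:
    """Validate a JSON body against the contract; raise ValueError if it does not fit."""
    try:
        data = json.loads(body)
    except json.JSONDecodeError as exc:
        raise ValueError(f"body is not valid JSON: {exc.msg}") from exc
    if not isinstance(data, dict):
        raise ValueError("body must be a JSON object")
    request_id = data.get("request_id")
    features = data.get("features")
    if not isinstance(request_id, str) or not request_id:
        raise ValueError("request_id must be a non-empty string")
    if not isinstance(features, list) or not features:
        raise ValueError("features must be a non-empty list of numbers")
    if not all(isinstance(x, (int, float)) and not isinstance(x, bool) for x in features):
        raise ValueError("features must contain only numbers")
    return PredictionRequest(request_id, [float(x) for x in features])


def render_response(response: PredictionResponse) -> str:
    """Serialize the response with stable key order so clients and tests can rely on it."""
    return json.dumps(asdict(response), sort_keys=True)
```

Including `model_version` in every response is a design choice that makes it easier to trace a prediction back to the model that produced it once models start being replaced.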

3. Developing and Deploying Custom AI Models

With a solid API architecture in place, the next step is to develop and deploy the custom AI models that address the specific business needs you identified earlier. This phase involves both model creation and packaging them as APIs for seamless integration.

- Training and Customizing AI Models:

AI models must be trained on high-quality, domain-specific data to deliver accurate results. For instance, if you’re working in healthcare, your models might need to be trained on medical records, lab results, and imaging data to make predictions. Depending on the use case, this could involve training supervised models for classification tasks or unsupervised models for clustering data. The key here is to tailor the models to meet the specific challenges of your industry.

- Packaging AI Models as APIs for Easy Integration:

Once the AI model is trained, you’ll need to wrap it in an API to ensure seamless communication with other systems. This can be done using RESTful APIs, GraphQL, or other protocols, depending on your needs. Packaging AI models as APIs makes them modular and reusable, allowing other teams or applications to easily access them. The goal is to create a standardized interface that abstracts the complexities of the model and makes it easy for developers to integrate it into business workflows.

By developing custom models that address specific business needs and packaging them for API access, companies can ensure that the AI solutions are both relevant and scalable.
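As a minimal sketch of wrapping a model in a REST-style endpoint, the example below uses only Python’s standard library `http.server`. The `predict` function is a placeholder that averages its inputs, and the `/v1/predict` path and port are assumptions; a production service would typically use a dedicated web framework and a real trained model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features: list[float]) -> float:
    """Placeholder for a trained model; here simply the average of the inputs."""
    return sum(features) / len(features)


def handle_predict(body: bytes) -> tuple[int, dict]:
    """Pure request logic: returns (HTTP status, JSON-serializable payload)."""
    try:
        features = json.loads(body)["features"]
        score = predict([float(x) for x in features])
    except (ValueError, KeyError, TypeError, ZeroDivisionError):
        return 400, {"error": 'expected JSON like {"features": [1.0, 2.0]}'}
    return 200, {"score": score}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        # Hypothetical versioned route; anything else is rejected.
        if self.path != "/v1/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000) -> None:
    """Start the server on localhost; call this explicitly when you want it running."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Keeping the request logic in `handle_predict`, separate from the HTTP handler, means the same function can be tested without a network and reused if the transport changes later.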

4. Integrating AI APIs with Legacy Enterprise Systems

Integrating AI into legacy systems can be a daunting task, but the API-first approach minimizes disruptions and ensures smooth interaction between new and old technologies.

- Connecting AI Models to Existing Systems:

Integrating AI with legacy systems often requires ensuring compatibility without overhauling the entire infrastructure. APIs provide an ideal solution, as they allow AI models to be added as separate services that interact with the core systems without requiring significant changes to them. This modular approach ensures that both the legacy systems and new AI models work together without causing downtime.

- Using Middleware Solutions for Data Exchange:

Middleware solutions play a vital role in bridging the gap between legacy systems and new AI models. Middleware allows for smooth data exchange and communication between systems that might use different technologies or protocols. It can also handle data transformations, ensuring that AI models receive data in the right format, while maintaining compatibility with legacy applications.

This integration approach ensures that AI can be leveraged without disrupting ongoing business operations.
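The data transformation role of middleware can be sketched as a small adapter. The example assumes a hypothetical fixed-width record layout from a legacy inventory system (8 characters of SKU, 6 of zero-padded quantity, 8 of date as YYYYMMDD); real layouts will differ, but the shape of the translation is similar.

```python
import json
from datetime import datetime

# Hypothetical fixed-width layout of a legacy inventory record:
# columns 0-7 SKU, 8-13 zero-padded quantity, 14-21 date as YYYYMMDD.
FIELDS = {"sku": (0, 8), "quantity": (8, 14), "date": (14, 22)}
RECORD_LENGTH = 22


def legacy_to_json(record: str) -> str:
    """Translate one fixed-width legacy record into the JSON an AI API expects."""
    if len(record) != RECORD_LENGTH:
        raise ValueError(f"expected {RECORD_LENGTH} characters, got {len(record)}")
    raw = {name: record[start:end].strip() for name, (start, end) in FIELDS.items()}
    # strptime validates the date and raises ValueError on impossible values.
    iso_date = datetime.strptime(raw["date"], "%Y%m%d").date().isoformat()
    return json.dumps(
        {"sku": raw["sku"], "quantity": int(raw["quantity"]), "date": iso_date},
        sort_keys=True,
    )
```

For instance, the record `AB-1234 00004220240315` becomes a JSON object with `sku` set to `AB-1234`, `quantity` set to 42, and `date` set to `2024-03-15`, while the legacy system itself stays untouched.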

5. Real-Time Data Processing and AI Inference

Real-time data processing is critical for many AI applications, such as fraud detection, predictive analytics, and personalized recommendations. The API-first strategy enables seamless real-time communication between AI models and enterprise systems.

- Ensuring Smooth, Real-Time Communication:

AI models often need to interact with enterprise systems in real-time, processing incoming data and delivering insights immediately. For instance, a recommendation engine for an e-commerce website might need to process user behavior data and generate product suggestions in seconds. APIs facilitate this real-time communication by allowing data to flow seamlessly between systems and AI models.

- Implementing APIs for Continuous AI Inference:

To keep predictions current as new data arrives, it’s important to implement APIs that support ongoing inference. This allows enterprise systems to query AI models for predictions as new data becomes available. Inference alone does not update the model itself, so the model still needs periodic retraining, which is covered in the monitoring step below. These continuous interactions keep the AI system responsive, making it a valuable tool for decision-making.

Real-time data processing and inference enable businesses to stay agile and responsive in fast-moving markets.

6. Monitoring and Optimizing AI Integration

AI integration doesn’t end once the system is live. Continuous monitoring and optimization are necessary to ensure that AI models continue to perform well and meet evolving business needs.

- Tracking API Performance and AI Outcomes:

It’s important to monitor the performance of both the AI models and the APIs. This includes tracking the response times of API calls, ensuring minimal latency, and checking that the models are making accurate predictions. Analytics tools can provide insights into the effectiveness of AI models, helping to identify potential issues early on.

- Fine-Tuning AI Models Based on Feedback:

AI models should be continuously improved based on new data and feedback. As business requirements evolve, AI models may need to be retrained or adjusted to better align with new goals. APIs allow businesses to update models without disrupting other systems, making it easier to implement changes over time.

By actively monitoring and optimizing the AI models and APIs, businesses can ensure that their AI solutions continue to deliver value over the long term.
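Tracking API response times can start very simply. The sketch below records call latencies and reports a nearest-rank percentile; the `LatencyTracker` class is a hypothetical helper written for this example, and most teams would eventually rely on a dedicated monitoring stack instead.

```python
import math
import time
from contextlib import contextmanager


class LatencyTracker:
    """Collects call durations in milliseconds and summarizes them."""

    def __init__(self) -> None:
        self.samples_ms: list[float] = []

    def record(self, millis: float) -> None:
        self.samples_ms.append(millis)

    @contextmanager
    def timed(self):
        """Time the enclosed block and record its duration, even if it raises."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.record((time.perf_counter() - start) * 1000.0)

    def percentile(self, pct: float) -> float:
        """Nearest-rank percentile of the recorded samples."""
        if not self.samples_ms:
            raise ValueError("no samples recorded")
        ordered = sorted(self.samples_ms)
        rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
        return ordered[rank - 1]
```

Wrapping each model call in `with tracker.timed():` and alerting when `tracker.percentile(95)` crosses an agreed threshold is one straightforward way to catch latency regressions after a model update.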

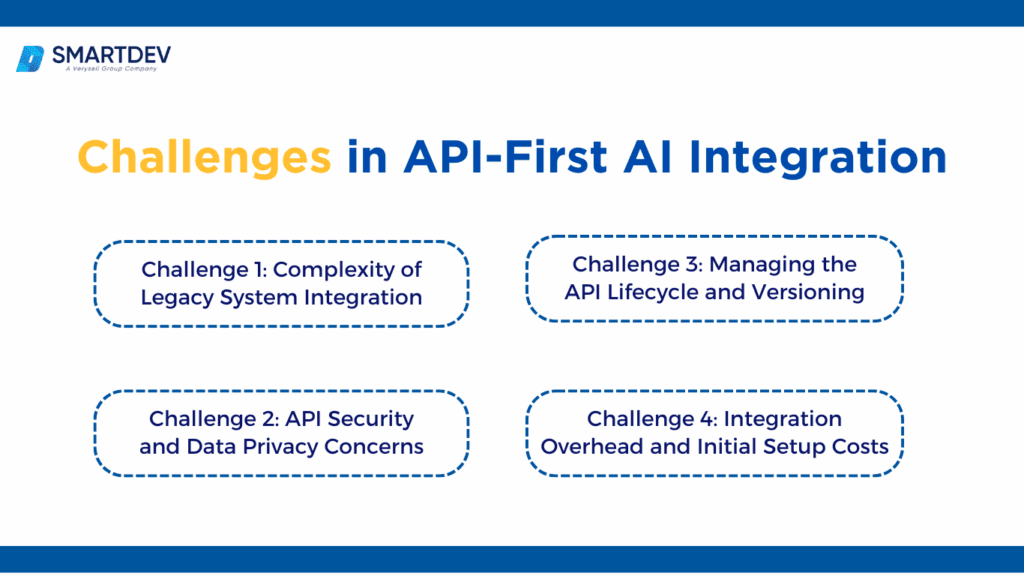

Challenges in API-First AI Integration

While the API-first approach offers many benefits for integrating AI models with existing systems, there are several challenges that organizations must address. These challenges can make the implementation process more complex. Below, we discuss the key obstacles businesses face when adopting API-first AI integration and how to approach them.

1. Complexity of Legacy System Integration

One of the primary challenges organizations face is integrating AI with legacy systems. These older systems often have data storage formats and architectures that were not designed to support modern technologies like AI.

- Data Compatibility: Legacy systems typically store data in outdated formats that may not align with the structured data required by AI models. This can create significant barriers when trying to make legacy data accessible for AI processing. Organizations must spend time and resources ensuring that data from older systems can be properly translated and formatted for AI consumption.

- System Constraints: Many legacy systems also have limitations in terms of processing power, memory, and storage. AI models often require significant computing resources, which older systems may not be able to provide. For example, AI models that process large amounts of real-time data, such as sensor data in manufacturing, may overwhelm the system’s capabilities if not carefully optimized.

- Gradual Integration: The API-first approach can help overcome some of these obstacles by allowing companies to gradually integrate AI. APIs can provide an intermediary layer between legacy systems and new AI models, easing the transition without the need for a full system overhaul. However, significant effort is needed to ensure compatibility between old and new technologies.

2. API Security and Data Privacy Concerns

As APIs become the primary method of communication between AI models and enterprise systems, security becomes a critical issue. APIs are designed to enable easy communication and data sharing, but they also expose systems to potential vulnerabilities.

- Unauthorized Access: APIs can be a target for hackers or unauthorized users. If not properly secured, they can give access to sensitive data or allow attackers to manipulate AI models. This is particularly concerning in industries like finance and healthcare, where personal, financial, or medical data is involved.

- Data Breaches: As AI models often require access to large amounts of sensitive data, the risk of data breaches increases. For example, a security flaw in an API could expose a patient’s health information in a healthcare system or customer financial data in a banking system. These breaches can have serious legal and reputational consequences.

- Privacy Regulations: In industries that handle personal or sensitive data, businesses must comply with data privacy regulations like GDPR in Europe or HIPAA in the U.S. APIs must be designed to meet these standards, ensuring that data is encrypted and that the flow of data through APIs is strictly controlled.

- Securing APIs: To mitigate these risks, businesses must implement strong API security measures. This includes using OAuth, SSL/TLS encryption, rate limiting, and authentication mechanisms to ensure only authorized users can access sensitive data. Regular API audits and penetration testing should also be conducted to identify and fix vulnerabilities.
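Of the measures listed above, rate limiting is easy to illustrate in a few lines. The sketch below implements a token bucket, one common rate-limiting technique; the class name and parameters are assumptions, and API gateways usually provide this as a built-in feature.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts up to `capacity` requests, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        """Return True and spend a token if the caller is within its limit."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Add the tokens earned since the last call, never exceeding capacity.
        self.tokens = min(float(self.capacity),
                          self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

In practice there would be one bucket per API key or client, and a request for which `allow()` returns `False` would be answered with HTTP status 429 (Too Many Requests).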

3. Managing the API Lifecycle and Versioning

As AI models evolve, it’s essential for APIs to remain compatible with newer versions of the models. Managing API versions is a critical challenge that must be addressed to ensure the smooth operation of the system.

- Backward Compatibility: New API versions must be backward compatible with older versions to avoid disruptions. If a business updates its AI model but doesn’t properly manage the API versions, it could break communication between AI systems and other applications. This may cause errors or loss of functionality.

- Versioning Complexity: As APIs evolve, businesses may end up with multiple versions of the same API. Managing these versions and ensuring that each one is functioning as expected can be time-consuming and complex. It’s important to establish a clear versioning strategy and guidelines for transitioning between versions without causing downtime.

- Regular Updates and Testing: Updating APIs regularly is crucial to keep up with new AI advancements and system requirements. However, businesses must also ensure that these updates don’t affect the stability or security of the system. Continuous testing and monitoring of APIs are necessary to ensure their reliability and compatibility.

- Automated Testing: To streamline versioning management, automated testing frameworks should be adopted. These can quickly identify issues in new versions and ensure that updates won’t break existing functionality. Proper testing can reduce the risk of errors during the integration process.
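One automated check that fits this situation is a comparison of response schemas between API versions. The sketch below assumes each schema is described as a simple mapping from field name to type name, which is a simplification of what formats like OpenAPI express.

```python
def breaking_changes(old_fields: dict[str, str], new_fields: dict[str, str]) -> list[str]:
    """List changes in new_fields that would break clients built against old_fields.

    Adding a field is treated as safe; removing one or changing its type is not.
    """
    problems = []
    for name, old_type in sorted(old_fields.items()):
        if name not in new_fields:
            problems.append(f"removed field: {name}")
        elif new_fields[name] != old_type:
            problems.append(f"type changed: {name} ({old_type} -> {new_fields[name]})")
    return problems
```

Running a check like this in the build pipeline and failing the build when the list is non-empty gives teams an early warning before an updated model’s API reaches consumers.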

4. Integration Overhead and Initial Setup Costs

Setting up API-first AI integration requires a significant upfront investment. Businesses must allocate time, resources, and expertise to successfully design, test, and integrate APIs.

- Time and Resources: The process of designing APIs and integrating them with existing systems can take time. This includes tasks like customizing the APIs to meet specific business needs, testing for compatibility, and ensuring security. In addition, businesses may need to hire external consultants or experts with knowledge of both AI and API development.

- Development Expertise: Developing API-first systems requires specialized knowledge, especially when integrating AI models. IT and development teams must be familiar with both API design and AI technologies to ensure the integration is smooth and effective. Without this expertise, the process can be delayed, resulting in higher costs.

- Long-Term Maintenance: After the initial setup, businesses must also plan for ongoing maintenance of the APIs. APIs must be regularly updated and monitored to ensure they continue to meet the needs of the business. This adds to the long-term cost and effort of API-first integration.

- Budgeting for Integration: To reduce integration costs, businesses should start small and prioritize the most impactful areas for AI deployment. By focusing on areas with the highest ROI, such as predictive maintenance in manufacturing or fraud detection in finance, businesses can prove the value of AI integration before investing in full-scale deployment.

Tips and Best Practices for Successful API-First AI Integration

While API-first integration offers numerous benefits, businesses must also navigate several challenges. Fortunately, there are practical steps organizations can take to overcome these hurdles and ensure successful implementation. Below are some tips to address common obstacles and streamline the process of integrating AI models through APIs.

1. Middleware Solutions for Legacy Systems

One of the biggest challenges businesses face when adopting an API-first approach is integrating AI models with legacy systems. These older systems often use outdated data formats that are incompatible with modern AI models. Fortunately, middleware solutions can help bridge this gap.

- What is Middleware? Middleware acts as an intermediary layer between legacy systems and AI models. It helps convert or translate data into formats that AI models can process. This allows organizations to connect AI models to their legacy infrastructure without needing to rewrite or replace outdated systems.

- API Gateways: An API gateway can also act as middleware, managing requests between AI models and backend systems. It helps route traffic, ensures security, and monitors data flow. By using middleware or an API gateway, businesses can simplify data translation and communication between legacy systems and new AI technologies.

- Simplifying Integration: Middleware tools allow businesses to integrate AI incrementally. They reduce the complexity of working with disparate systems and make the integration process smoother, helping AI models work with legacy systems without disrupting operations.
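The routing role of an API gateway can be illustrated with a small lookup. The route prefixes and backend names below are hypothetical, and real gateways add authentication, throttling, and logging on top of this step.

```python
from typing import Mapping, Optional

# Hypothetical routing table: path prefix -> backend service name.
ROUTES = {
    "/ai/": "ai-inference-service",
    "/ai/fraud/": "fraud-model-service",
    "/erp/": "legacy-erp-adapter",
}


def resolve_backend(path: str, routes: Mapping[str, str] = ROUTES) -> Optional[str]:
    """Pick the backend whose prefix is the longest match for the request path."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return None
    return routes[max(matches, key=len)]
```

Choosing the longest matching prefix lets a specialized model service take over one part of the path space while everything else continues to reach the general backend.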

2. Robust API Security Practices

As APIs become the primary method for AI models to communicate with other systems, securing these APIs is essential. APIs, if not properly secured, can become vulnerable to cyberattacks or unauthorized access. To mitigate these risks, businesses must implement strong security measures.

- OAuth and JWT: Use OAuth 2.0 (Open Authorization) and JWT (JSON Web Tokens) to control access to APIs. OAuth is an authorization framework that lets a user or service grant a client limited access to specific data or services without sharing credentials. A JWT is a compact, signed token that carries claims such as the caller’s identity and an expiry time, so the receiving API can verify who is calling and that the token has not been altered.

- TLS Encryption: TLS (Transport Layer Security) encrypts the data transmitted through APIs. It is the successor to SSL (Secure Sockets Layer), which is deprecated and should be disabled. TLS protects data in transit between systems against interception and tampering.

- API Management Tools: Use API management tools like Apigee or AWS API Gateway to monitor and secure your APIs in real-time. These tools help track API performance, manage access control, and prevent unauthorized access. They can also automatically detect security breaches and offer real-time alerts, helping businesses maintain a secure API ecosystem.

- Regular Security Audits: It’s crucial to conduct regular security audits and penetration tests to ensure that your API integrations remain safe and secure. Regular testing can help identify vulnerabilities early and mitigate risks before they impact operations.
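To show what verifying a JWT involves, here is an illustrative sketch of HS256 signing and verification using only Python’s standard library. It is meant to explain the mechanism under simplifying assumptions (a shared secret, only the `exp` claim checked); production systems should use a maintained JWT library rather than hand-written code.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    # Restore the padding that the JWT encoding strips.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _signature(signing_input: str, secret: bytes) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def sign_token(claims: dict, secret: bytes) -> str:
    """Build header.payload.signature using HMAC-SHA256."""
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}, separators=(",", ":")).encode())
    payload = _b64url(json.dumps(claims, separators=(",", ":")).encode())
    return f"{header}.{payload}.{_b64url(_signature(f'{header}.{payload}', secret))}"


def verify_token(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Return the claims if signature and expiry check out; raise ValueError otherwise."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    expected = _signature(f"{header_b64}.{payload_b64}", secret)
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims
```

An AI endpoint would call `verify_token` on the bearer token of each request and refuse to run inference when it raises.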

3. Version Control and Testing

Managing the API lifecycle and ensuring that APIs remain compatible with evolving AI models is a major challenge. As AI models and other systems change over time, businesses must ensure that their APIs continue to function smoothly without causing disruptions.

- Semantic Versioning: Use semantic versioning for APIs. Version numbers take the form MAJOR.MINOR.PATCH, and each part communicates the scope of a change. For example, 1.0.0 marks the first stable release, 1.1.0 adds backward-compatible functionality, 1.1.1 is a backward-compatible bug fix, and 2.0.0 signals a breaking change. Semantic versioning helps developers track changes and maintain compatibility between API versions.

- Backward Compatibility: Ensure that each new minor or patch version of the API is backward compatible with previous versions, and reserve breaking changes for a new major version. This allows older systems or versions of the AI model to continue working while newer versions are being implemented. Proper version control prevents the disruption of services when updating the API.

- Testing in Staging Environments: Always test new versions of APIs in a staging environment before deploying them in production. Staging environments replicate the production environment, allowing teams to test API behavior and identify any issues before they impact the live system.

- Automated Testing: Implement automated testing frameworks to check for compatibility issues with new versions of the API. Automated tests help identify bugs early in the development process, making the transition between versions smoother and reducing the risk of downtime.
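The versioning rules above can be expressed as a short helper. This sketch handles only plain MAJOR.MINOR.PATCH strings (no pre-release or build tags) and assumes major version 1 or higher; the function names are illustrative.

```python
import re

_SEMVER = re.compile(r"(\d+)\.(\d+)\.(\d+)")


def parse_version(text: str) -> tuple[int, int, int]:
    """Split 'MAJOR.MINOR.PATCH' into integers; raise ValueError on anything else."""
    match = _SEMVER.fullmatch(text)
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def bump(version: str, change: str) -> str:
    """Compute the next version for a 'breaking', 'feature', or 'fix' change."""
    major, minor, patch = parse_version(version)
    if change == "breaking":
        return f"{major + 1}.0.0"
    if change == "feature":
        return f"{major}.{minor + 1}.0"
    if change == "fix":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")


def can_serve(client_built_against: str, server_version: str) -> bool:
    """A server can serve a client if majors match and it has at least the client's minor."""
    client = parse_version(client_built_against)
    server = parse_version(server_version)
    return server[0] == client[0] and server[1] >= client[1]
```

A check such as `can_serve` can run in staging to confirm that every known client version is still supported before a new API version is promoted to production.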

4. Pilot Projects for Low-Cost Testing

Starting with a pilot project is a great way to test the API-first integration in a low-risk, cost-effective environment. Pilot projects allow businesses to evaluate the approach before fully scaling up and committing significant resources.

- Controlled Environment: In a pilot project, you can implement API-first AI integration in a smaller, controlled environment. This allows you to test the feasibility of the integration without the risks associated with larger-scale deployments. You can monitor performance, identify challenges, and refine the approach before scaling it to the entire organization.

- Validation of AI Models: Pilot projects also provide an opportunity to validate the effectiveness of the AI models. By testing them in a real-world scenario, businesses can evaluate how well the AI models perform and whether they deliver the desired outcomes. This allows teams to make adjustments or improvements before broader deployment.

- Gradual Rollout: Once the pilot project proves successful, businesses can gradually expand the integration across departments or systems. This approach minimizes the risk of disruption and helps ensure that the AI integration scales smoothly over time.

- Proof of Concept: Pilot projects serve as a proof of concept, demonstrating the value of API-first AI integration to stakeholders. This can help secure buy-in for larger-scale adoption by clearly showing the benefits of the approach.
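A gradual rollout is often implemented by routing a fixed percentage of units (stores, departments, users) to the new AI-backed path. The sketch below shows one way to do this with a stable hash; the `in_rollout` name and the use of SHA-256 for bucketing are choices made for this example.

```python
import hashlib


def in_rollout(unit_id: str, percent: int) -> bool:
    """Decide deterministically whether a unit is part of the pilot.

    Each unit hashes to a stable bucket from 0 to 99, so raising `percent`
    only adds units and never removes ones already included.
    """
    digest = hashlib.sha256(unit_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent
```

Starting with a small percentage and increasing it as the pilot results come in gives the gradual expansion described above, while each unit sees consistent behavior throughout the rollout.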

Real-World Case Studies of API-First AI Integration

The API-first approach has proven to be a game-changer for businesses across various industries. By integrating AI models into existing systems via APIs, companies can scale their AI capabilities, drive innovation, and optimize operations without the need for massive system overhauls. Below are real-world examples from different industries where API-first integration has enabled businesses to enhance their AI capabilities while maintaining smooth operations.

1. Financial Services: Fraud Detection with PayPal

In the financial services sector, PayPal uses AI-powered fraud detection to protect its customers and manage risks. By utilizing an API-first approach, PayPal integrates its AI models seamlessly into its payment processing system to detect fraudulent activity in real-time without slowing down transactions.

Here’s how it works:

- Real-Time Analysis: PayPal’s AI models continuously analyze transactions as they occur, identifying unusual or suspicious patterns that might suggest fraudulent activity. This ensures that every transaction, regardless of volume, is scrutinized promptly and effectively.

- Immediate Action: When a potentially fraudulent transaction is flagged, PayPal’s system immediately blocks the transaction and alerts both the customer and the merchant. This swift response minimizes potential losses and protects users from unauthorized activities.

- API Integration: PayPal uses APIs to integrate the AI-driven fraud detection system with its existing payment infrastructure. This enables smooth communication between different systems, making it possible to update and refine AI algorithms without disrupting the underlying infrastructure. As a result, PayPal can implement new fraud detection models or improve existing ones, all while keeping the transaction flow uninterrupted.

By adopting an API-first approach, PayPal can scale its fraud detection system globally, allowing seamless updates and continuous improvements to its AI models without disrupting transactions. This approach ensures real-time fraud detection, reduces system downtime, and allows PayPal to rapidly respond to emerging threats while maintaining fast and secure payment processes. The flexibility of APIs also helps PayPal efficiently enhance its fraud prevention capabilities across various regions and transaction volumes.

2. Manufacturing: Predictive Maintenance at General Electric (GE)

In the manufacturing sector, General Electric (GE) uses AI-powered predictive maintenance to reduce unplanned downtime and extend the life of industrial equipment. By leveraging an API-first approach, GE integrates its AI models into the Predix platform, which monitors the health of machines and factory equipment in real-time.

Here’s how it works:

- Real-Time Data Collection: GE’s Predix platform connects to machines, sensors, and other equipment via APIs to collect real-time data. AI models analyze this data to predict when machines might fail, allowing maintenance teams to intervene before a breakdown occurs.

- Proactive Maintenance: Using AI-driven insights, GE helps its clients make better decisions about when to schedule maintenance, reducing unexpected repairs and minimizing downtime. This proactive approach leads to significant cost savings and more efficient operations.

- API Integration: GE uses APIs to connect its AI models to the existing machinery and equipment infrastructure. This seamless integration ensures that the system can be continuously updated without requiring a complete overhaul of the platform. As new AI models or insights emerge, they can be integrated into the system, enhancing performance over time.

By using an API-first approach, GE can scale its predictive maintenance system across various manufacturing sites, providing real-time insights that improve operational efficiency. The ability to continuously update and refine AI models through APIs ensures the platform remains effective in predicting equipment failures, reducing costs, and minimizing disruptions to production.

3. Healthcare: AI Diagnostics at Mount Sinai Health System

In healthcare, Mount Sinai Health System in New York City uses AI-powered diagnostic tools to improve patient care and enhance diagnostic accuracy. The system integrates AI models with existing Electronic Health Record (EHR) systems through an API-first approach, allowing healthcare providers to leverage real-time insights into patient health.

Here’s how it works:

- AI Diagnostics: Mount Sinai uses AI models to analyze patient data, such as medical history and lab results, to predict risks for chronic diseases like heart disease and diabetes. These insights help doctors make more informed decisions about treatment plans.

- Real-Time Alerts: The integration of AI through APIs allows healthcare providers to receive real-time alerts when the AI models detect abnormal patterns in patient data. This enables early intervention, helping doctors identify patients who require immediate attention.

- Seamless Integration: By using APIs, Mount Sinai integrates AI diagnostics into its existing EHR system, enabling continuous updates to the AI models without disrupting the entire healthcare system. This modular approach ensures the platform can evolve with new AI capabilities over time.

By adopting an API-first approach, Mount Sinai can improve its diagnostic tools and continuously update its AI models, enhancing patient care while maintaining operational continuity. The ability to integrate and scale AI capabilities without replacing the entire system ensures better outcomes for patients, while keeping the healthcare provider flexible and adaptable to future advancements.

4. Retail: Personalized Recommendations at Amazon

In retail, Amazon uses AI to deliver personalized shopping experiences through product recommendations and dynamic pricing. By adopting an API-first strategy, Amazon integrates these AI models into its e-commerce platform, ensuring that customers receive relevant suggestions and competitive pricing in real-time.

Here’s how it works:

- Personalized Recommendations: Amazon’s AI models analyze vast amounts of customer data, such as browsing history and past purchases, to generate personalized recommendations. These suggestions are delivered to customers in real-time as they browse the site, creating a tailored shopping experience.

- Dynamic Pricing: Amazon also uses APIs to integrate dynamic pricing models, which adjust product prices based on demand, competition, and stock levels. This ensures that Amazon remains competitive while maximizing sales and inventory efficiency.

- API Integration: By using APIs, Amazon can continuously update its recommendation algorithms and pricing models, without disrupting the core e-commerce platform. This enables Amazon to scale its AI-powered services quickly and efficiently, adding new capabilities or enhancing existing features as needed.

By adopting an API-first approach, Amazon can continually refine its AI models, offering an increasingly personalized shopping experience for millions of customers worldwide. The flexibility of APIs allows Amazon to make updates without affecting the user experience, ensuring that the e-commerce platform remains innovative and competitive.

Conclusion

The API-first approach is a game-changer for businesses wanting to integrate AI models into existing systems without large-scale overhauls. By using APIs as the main integration tool, companies can modularize their AI capabilities, allowing them to introduce AI solutions gradually and scale them seamlessly. This approach lets businesses adopt advanced AI technologies while keeping their existing systems stable and performing well. From fraud detection in finance to predictive maintenance in manufacturing and personalized recommendations in retail, APIs help businesses stay competitive and agile.

As AI adoption continues to grow, companies that embrace the API-first strategy will have an advantage in scaling AI effectively. This approach ensures smoother integration and the flexibility to update AI models, adapt to new technologies, and improve business processes without major disruptions. Additionally, API-first integration offers better security, scalability, and cost-effectiveness. By adopting this strategy, businesses can navigate the complexities of AI adoption, grow, innovate, and minimize risks while maximizing returns.