1. Introduction to AI Agents

AI agents are no longer just futuristic concepts—they’re already here, changing the way we work, live, and make decisions. From automating customer service to managing complex business workflows, AI agents are shifting the role of technology from passive tools to proactive problem-solvers.

In this section, you’ll learn:

- What AI agents really are, in simple, practical terms.

- Why they matter, and how they’re helping individuals and businesses do more with less.

- Where they’re already making an impact, through real-world use cases across industries.

- How they’ve evolved, from basic rule-following bots to intelligent, autonomous systems.

Whether you’re just getting started or looking to sharpen your understanding, this section will give you the essential knowledge to see where AI agents fit into the bigger picture—and how you can start using them to your advantage.

What Is an AI Agent?

Artificial Intelligence (AI) agents are software entities that can perceive their environment, make decisions, and take actions autonomously to achieve specific goals. In simpler terms, an AI agent serves as a digital “agent” on your behalf, processing information and performing tasks without constant human direction.

For business owners, AI agents represent a transformative technology that can automate complex processes, enhance customer interactions, and drive decision-making with data-driven insights.


Why Are AI Agents Important? Real Cases in Today’s World

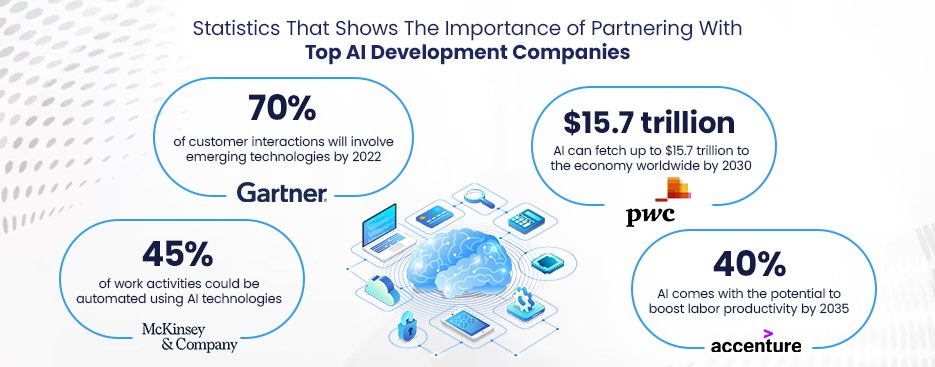

The importance of AI agents for businesses today cannot be overstated. In customer service, for example, AI agents (like intelligent chatbots) are handling a growing share of inquiries, providing instant support 24/7. In fact, Gartner projects that by 2025 about 70% of customer interactions will be managed by AI technologies, underscoring how pervasive and critical these agents have become in delivering efficient customer experience.

Real-world applications of AI agents span industries: e-commerce giants deploy AI agents for personalized recommendations (which account for 35% of Amazon’s sales according to McKinsey), banks use AI trading agents for algorithmic trading and fraud detection, and hospitals employ AI agents to assist in diagnostics and patient triage.

These examples reflect a clear shift from early, static rule-based programs to adaptive agents that learn and improve over time, which makes them invaluable assets in the modern business toolkit.

Evolution of AI Agents: From Rule-Based to Autonomous

From SmartDev’s perspective, this evolution is something we’ve witnessed firsthand. When we started developing AI solutions years ago, many business automation systems were essentially hard-coded decision trees. They could handle predictable scenarios but failed when faced with nuance.

Today, our AI agents not only follow instructions but can also understand context, adapt to new information, and operate with a degree of autonomy that was science fiction just a decade ago.

In the sections that follow, we’ll share our industry insights and experience at SmartDev on how to create an AI agent that can bring these capabilities into your business.

2. Understanding the Components of an AI Agent

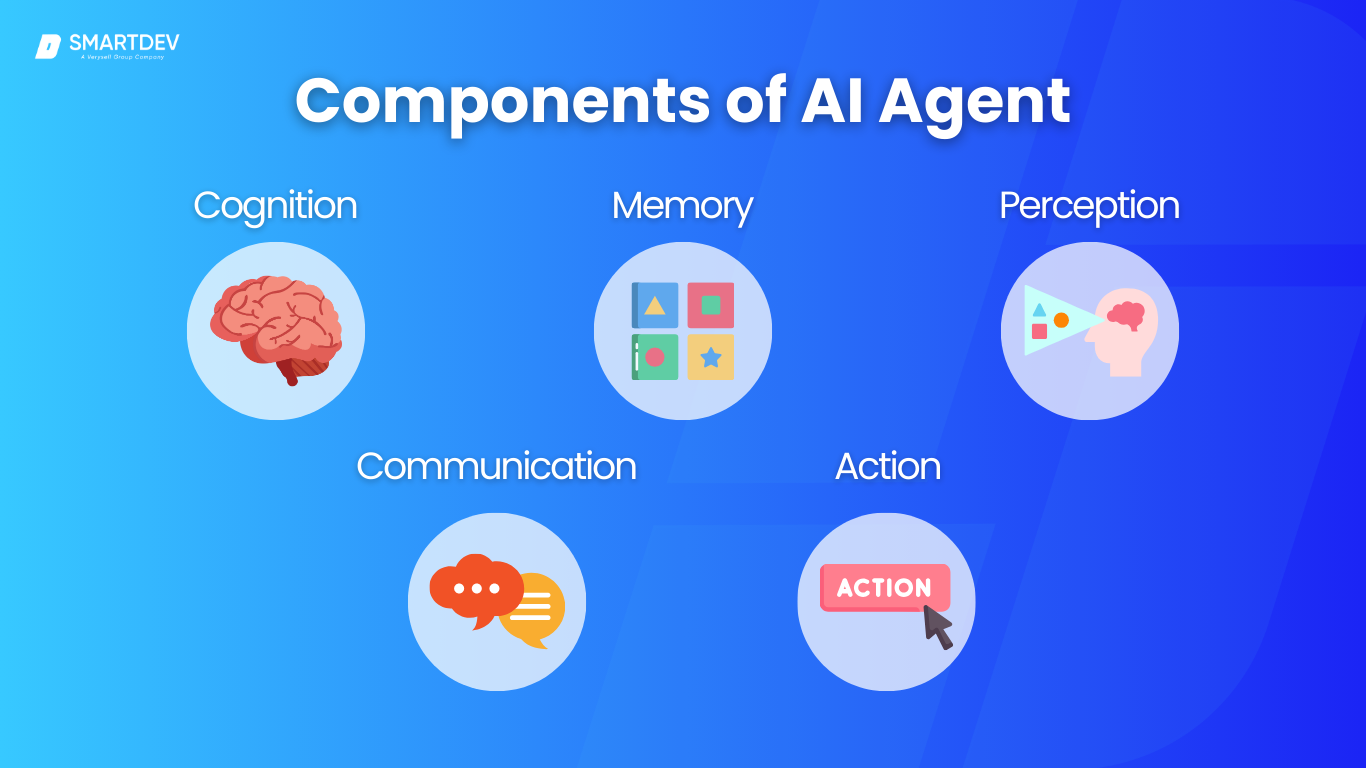

To build a robust AI agent, it’s important to understand its core components – essentially the mind and senses of the agent. Each of these plays a distinct role in how the agent operates:

2.1. Cognition – How AI Thinks

This is the “brain” of the AI agent. Cognition is how the agent thinks, analyzes data, and makes decisions. It involves the algorithms or models that enable reasoning, such as deciding the best course of action to achieve a goal.

For instance, an AI agent might use a planning algorithm to determine the optimal delivery route for a logistics company or employ a machine learning model to decide whether a transaction is fraudulent.

Cognitive processes often involve evaluating options (using logic or learned patterns) and selecting actions that maximize success according to the agent’s goals (this could be a utility function, a reward signal in reinforcement learning, etc.).

2.2. Memory – How AI Remembers

Memory is how the agent remembers and stores information. Just as humans have short-term and long-term memory, AI agents have fast caches and long-term knowledge bases. Memory could be a database of facts, a vector store of embedded knowledge, or any repository the agent can query.

For example, a customer support AI agent might have a knowledge base of FAQs and past interactions to draw from when answering a question. Memory is critical for maintaining context over time – if a user tells a chatbot their name or preferences, the agent’s memory enables it to recall that information later in the conversation.

2.3. Perception – How AI Gathers Data

Perception is how the AI agent gathers data from its environment. Depending on its role, an agent’s “sensors” could be physical (cameras, microphones, IoT sensors) or virtual (APIs, real-time data feeds, user input text).

For instance, an AI security agent might take in video feeds and network logs to detect anomalies, while a shopping assistant agent perceives user queries and browsing behavior as input. This input stage is analogous to human senses – it’s the raw information that feeds into the agent’s cognition.

High-quality perception is vital; if your agent can’t accurately understand its inputs, the decisions it makes will be flawed. Modern AI agents often integrate technologies like computer vision for image/video perception, natural language processing (NLP) for text/audio perception, and other sensor integrations to comprehensively “sense” what’s going on.

2.4. Communication – How AI Interacts

Communication is how the agent interacts and converses with users or other systems. For many business applications, this means understanding human language and responding in kind. Through Natural Language Processing, an AI agent can parse user questions or commands, and then generate an appropriate response.

Communication can also involve structured messaging, such as an agent calling another software’s API and interpreting the response. However, since a lot of AI agents serve as front-line interfaces for users, strong NLP capabilities are often crucial.

At SmartDev, we ensure our agents are equipped with advanced language models or dialog management systems so they can not only understand queries but also respond clearly and helpfully. Whether it’s via text chat, email, or voice, an AI agent’s communication module is the face (or voice) it presents to the world.

2.5. Action – How AI Acts

Finally, action is how the AI agent acts upon decisions. Once the cognition module decides what to do, the action functionality executes it. This could mean performing a transaction, updating a database record, calling an external API, or even controlling a physical device.

For example, if a smart factory agent detects a machine anomaly (perception) and decides it’s critical (cognition), the action component might trigger an emergency shutdown or alert.

In essence, the action component is the agent’s set of “effectors” – analogous to how a robot moves its arms, an AI software agent uses its integration hooks to effect change. Effective AI agents often integrate with various systems: they might have API connectors to enterprise software (CRM, ERP systems), the ability to send emails or notifications, or even control IoT devices.

In our experience, the best AI agents are those designed holistically with all five functionalities in mind, ensuring they can sense, think, communicate, remember, and act in harmony.
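The five components described above can be sketched as a single loop. This is a minimal illustration, not a production design: the class name `SimpleAgent`, the numeric sensor input, and the threshold of 80.0 are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleAgent:
    """Toy agent wiring the five components together (illustrative only)."""
    threshold: float = 80.0                      # hypothetical alert threshold
    memory: list = field(default_factory=list)   # memory: past readings

    def perceive(self, raw: str) -> float:
        # Perception: turn raw input text into a number.
        return float(raw.strip())

    def decide(self, reading: float) -> str:
        # Cognition: choose an action from the current reading.
        return "shutdown" if reading > self.threshold else "continue"

    def act(self, action: str) -> str:
        # Action and communication: a real agent would call an API here.
        return f"action={action}"

    def step(self, raw: str) -> str:
        reading = self.perceive(raw)
        self.memory.append(reading)
        return self.act(self.decide(reading))


agent = SimpleAgent()
print(agent.step(" 72.5 "))   # action=continue
print(agent.step("91.0"))     # action=shutdown
```

Keeping each component in its own method makes it easier to swap one out later, for example replacing `decide` with a call to a language model.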

3. Types of AI Agents & Their Use Cases

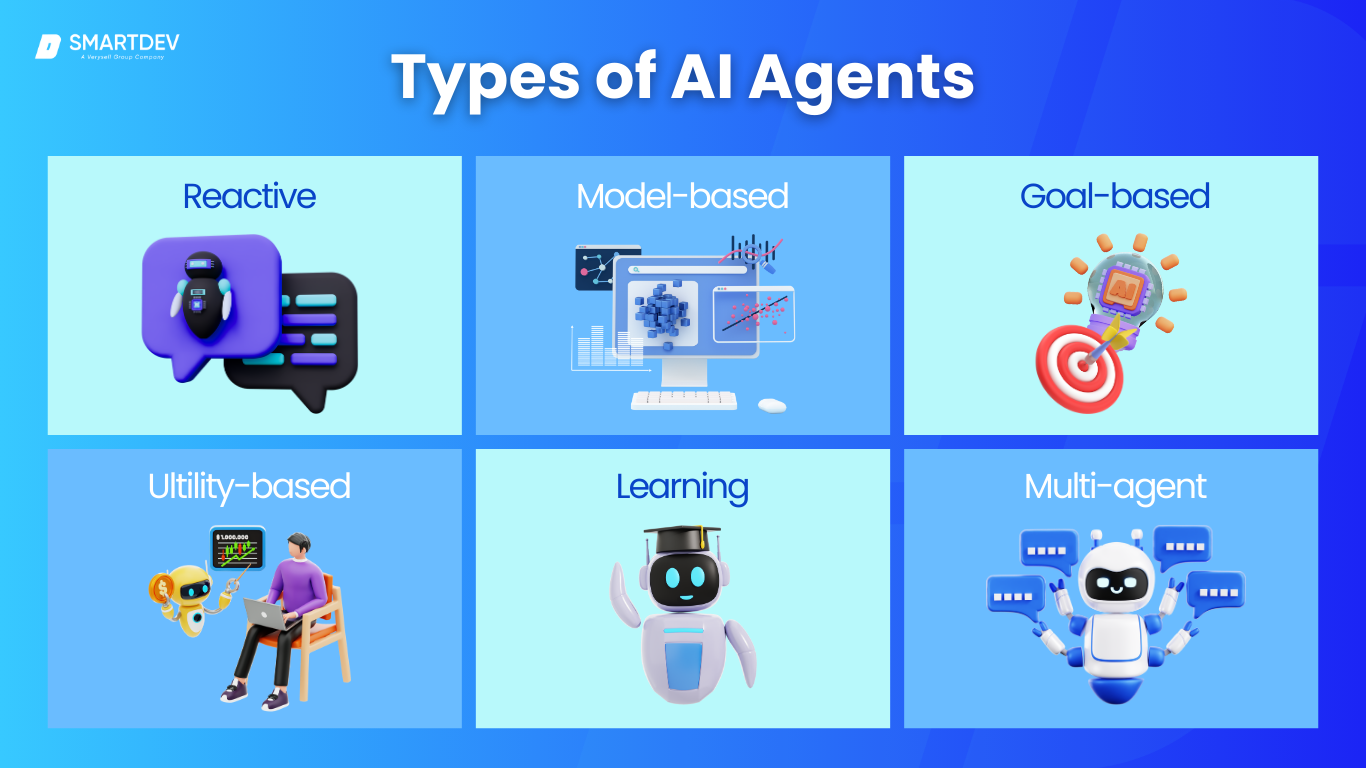

Not all AI agents are created equal – in fact, there are several types of AI agents, each with different levels of sophistication and ideal use cases. In classical AI theory (as described in Russell & Norvig’s Artificial Intelligence: A Modern Approach), agents are often categorized by their internal architecture and behavior.

3.1. Reactive Agents

Reactive agents operate on a stimulus-response basis without relying on internal memory of past states. They perceive an input and react immediately according to a set of rules or learned responses. These agents are the simplest form of AI agent – think of them like a thermostat that turns on the heater if the temperature drops below a threshold. In business, purely reactive agents might include basic chatbots that use predefined rules to answer FAQs or route inquiries.

Use case: In customer support, a reactive agent could handle common questions (store hours, order status) instantly, deflecting routine tasks from human agents. In manufacturing, a reactive AI might control a machine: if a sensor detects overheating, the agent immediately shuts it down – no memory or deep planning needed, just an instant reflex to a condition.
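The thermostat example can be written as a single stateless function. This is a sketch only; the function name and the 20 °C default threshold are assumptions.

```python
def reactive_thermostat(temperature_c: float, threshold_c: float = 20.0) -> str:
    """Map the current reading straight to an action; no state is kept."""
    if temperature_c < threshold_c:
        return "heater_on"
    return "heater_off"


print(reactive_thermostat(18.5))  # heater_on
```

Because nothing is remembered between calls, the same input always produces the same action, which is the defining trait of a reactive agent.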

3.2. Model-Based Agents

A step up in intelligence, model-based agents maintain an internal state or model of the world that goes beyond the immediate input. This means they consider how the world evolves or they remember past inputs to inform current decisions. The “model” could be as simple as “the last known location of an object” or as complex as an internal simulation of the environment.

For instance, a robot vacuum is a model-based agent when it keeps track of which areas of a room it has cleaned (memory of past actions) to decide where to go next.

Use case: In finance, a model-based trading agent might maintain a state representing market trends or its own portfolio holdings to decide trades (it doesn’t just react to the latest price, but also remembers historical trends). In healthcare, an AI diagnostic assistant could keep track of patient symptoms over time – instead of reacting only to the latest test result, it compares it with previous results to detect changes or patterns.
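The robot vacuum example might look roughly like this in code. The class `VacuumAgent` and the cell names are hypothetical; the point is only that the agent's next move depends on what it remembers having done.

```python
class VacuumAgent:
    """Tracks cleaned cells so the next move depends on history."""

    def __init__(self, cells):
        self.cells = list(cells)   # ordered cell names for a hypothetical room
        self.cleaned = set()       # internal model: what has been cleaned

    def next_cell(self):
        # Pick the first cell not yet cleaned; None when the room is done.
        for cell in self.cells:
            if cell not in self.cleaned:
                return cell
        return None

    def clean(self, cell):
        self.cleaned.add(cell)


vacuum = VacuumAgent(["A", "B", "C"])
order = []
while (cell := vacuum.next_cell()) is not None:
    vacuum.clean(cell)
    order.append(cell)
print(order)  # ['A', 'B', 'C']
```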

3.3. Goal-Based Agents

Goal-based agents are driven by objectives. They not only consider “What is the state?” but also “What do I want to achieve?” and then plan actions to reach that goal. This often involves search and planning algorithms: the agent evaluates potential sequences of actions against its goal and chooses a path that gets it closer to the goal state. This type of agent can navigate more complex decision spaces by focusing on end results.

Use case: In e-commerce or marketing, a goal-based agent could be tasked with increasing user engagement – it might plan a sequence of personalized offers or messages to achieve that goal for each customer segment. In project management, an AI agent could be given the goal of optimizing team scheduling and then evaluate different assignment combinations to meet deadlines.
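One simple way to illustrate goal-based planning is breadth-first search over a graph of states. The sketch below assumes a made-up set of customer-engagement states; a real agent would use a richer state space and possibly a different search algorithm.

```python
from collections import deque


def plan(graph, start, goal):
    """Breadth-first search: return a shortest list of states from start to goal."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        state = path[-1]
        if state == goal:
            return path
        for nxt in graph.get(state, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None  # goal unreachable


# Hypothetical engagement states and the moves available from each.
graph = {
    "new_visitor": ["welcome_email", "discount_offer"],
    "welcome_email": ["product_tour"],
    "discount_offer": ["first_purchase"],
    "product_tour": ["first_purchase"],
}
print(plan(graph, "new_visitor", "first_purchase"))
# ['new_visitor', 'discount_offer', 'first_purchase']
```

The agent evaluates sequences of actions against the goal and returns the shortest one it finds, rather than reacting to a single input.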

3.4. Utility-Based Agents

Utility-based agents extend goal-based agents by introducing the concept of optimization. Instead of simply achieving a goal in any way, they consider multiple possible outcomes and evaluate which outcome is most preferred (has the highest utility). They have a utility function – a measure of satisfaction – that they try to maximize. This allows them to handle trade-offs.

Use case: In finance, consider an investment AI agent that not only has a goal “maximize return” but also factors in risk tolerance as a utility. It will strive for the best risk-adjusted returns, effectively optimizing a utility function that balances profit against risk. These agents are powerful for complex decision-making where there isn’t a single clear goal, but rather a need to evaluate the quality of outcomes.
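The risk-versus-return trade-off can be sketched with a very simple utility function. The linear form, the `risk_aversion` parameter, and the numbers below are all illustrative assumptions, not a recommended investment model.

```python
def utility(expected_return, risk, risk_aversion=2.0):
    """Higher return raises utility; risk lowers it, scaled by risk_aversion."""
    return expected_return - risk_aversion * risk


# Hypothetical options as (expected_return, risk); the numbers are made up.
options = {
    "bonds": (0.03, 0.01),
    "index_fund": (0.07, 0.02),
    "single_stock": (0.12, 0.08),
}
best = max(options, key=lambda name: utility(*options[name]))
print(best)  # index_fund
```

With `risk_aversion=0.0` the same code would pick `single_stock`, which shows how the utility function, not a fixed goal, drives the choice.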

3.5. Learning Agents

Learning agents are designed to improve their performance over time by learning from experience. A learning agent typically has a performance element, which selects actions, and a learning element, which uses feedback to improve those selections. Almost any of the above agent types can be augmented with learning capabilities – for example, you could have a learning reactive agent that adjusts its reflex rules based on feedback, or a learning goal-based agent that gets better at planning as it experiences more scenarios.

Use case: In healthcare, a learning agent might improve its diagnostic suggestions as it gets more patient data and feedback from doctors, learning from cases where its suggestions were right or wrong. Over time, a learning agent in an e-commerce setting could refine its product recommendations by learning from each customer’s clicks and purchases, getting more personalized and accurate. The key benefit here is adaptability – learning agents can start with baseline knowledge and become more effective as they accumulate data.
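As a rough sketch of the e-commerce case, the agent below keeps a click-rate estimate per product and recommends the best one seen so far. The class name and the sample feedback are hypothetical, and the sketch deliberately omits exploration of untried products, which a real recommender would need.

```python
from collections import defaultdict


class RecommendationLearner:
    """Keeps a click-rate estimate per product and recommends the best."""

    def __init__(self):
        self.shown = defaultdict(int)
        self.clicked = defaultdict(int)

    def record(self, product, was_clicked):
        # Learning element: update statistics from feedback.
        self.shown[product] += 1
        self.clicked[product] += int(was_clicked)

    def recommend(self, products):
        # Performance element: pick the product with the highest observed rate.
        def rate(p):
            return self.clicked[p] / self.shown[p] if self.shown[p] else 0.0
        return max(products, key=rate)


learner = RecommendationLearner()
feedback = [("shoes", True), ("hat", False), ("shoes", True), ("hat", True)]
for product, clicked in feedback:
    learner.record(product, clicked)
print(learner.recommend(["shoes", "hat"]))  # shoes
```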

3.6. Multi-Agent Systems

Sometimes one agent isn’t enough for a complex problem, and that’s where multi-agent systems come in. In a multi-agent system, you have multiple AI agents that may work collaboratively or competitively within an environment. Each agent might have its own role or specialization, and together they accomplish tasks via interaction. This is analogous to a team of employees: each member (agent) has certain duties, but they coordinate to achieve an overall objective. Multi-agent systems can be particularly powerful in simulating complex environments or in large-scale optimization.

Use case: In manufacturing or logistics, multi-agent systems can manage different parts of a workflow – one agent schedules production, another manages supply chain orders, and another handles delivery routing, all communicating to keep the operations smooth.

At SmartDev, we’ve explored multi-agent architectures where, for example, an “executive” agent can delegate subtasks to other sub-agents (a concept we’ll revisit with AutoGPT in an upcoming section). This approach mimics organizational behavior and can solve problems that are too vast for a single agent by dividing and conquering.
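The "executive" pattern can be illustrated with plain functions standing in for agents. This is only a sketch of the delegation idea; the agent names and task kinds are invented, and real sub-agents would be models or services rather than string formatters.

```python
def scheduling_agent(task):
    return f"scheduled: {task}"


def routing_agent(task):
    return f"routed: {task}"


# The executive maps each subtask kind to a specialist (hypothetical roles).
SPECIALISTS = {"schedule": scheduling_agent, "route": routing_agent}


def executive(subtasks):
    """Delegate each (kind, task) pair to the matching specialist agent."""
    results = []
    for kind, task in subtasks:
        handler = SPECIALISTS.get(kind)
        if handler is None:
            results.append(f"unhandled: {task}")
        else:
            results.append(handler(task))
    return results


print(executive([("schedule", "batch 42"), ("route", "order 7"), ("bill", "invoice 3")]))
# ['scheduled: batch 42', 'routed: order 7', 'unhandled: invoice 3']
```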

4. Choosing the Right Technology Stack

With a clear vision of what your AI agent should do, the next step is deciding how to build it – and that means selecting the right technology stack.

Let’s demystify this by breaking it down into key components of the stack and discussing options for each, along with why you might choose them.

| Component | Options | Key Considerations |
| --- | --- | --- |
| Programming Languages | Python, JavaScript, C++/Rust, R | Python for AI/ML. JavaScript for web AI. C++/Rust for speed. R for data-heavy tasks. |
| AI Frameworks & Libraries | TensorFlow/PyTorch, LangChain, OpenAI API | TensorFlow/PyTorch for training. LangChain for LLM agents. OpenAI API for quick AI integration. |
| LLMs & NLP Models | GPT-4/Claude, Llama 2/Mistral | GPT-4/Claude for general AI. Llama 2/Mistral for self-hosted, private AI. |
| Databases | Vector DBs, SQL, Graph DBs | Vector DBs for AI search. SQL for transactions. Graph DBs for complex relationships. |
| APIs & Tools for Deployment | OpenAI/Azure, Hugging Face, Cloud AI | OpenAI/Azure for fast LLM use. Hugging Face for custom models. Cloud AI for enterprise AI. |
| Infrastructure & Hosting | Cloud, On-prem, Serverless, Edge | Cloud for scale. On-prem for security. Serverless for microservices. Edge for IoT/low-latency AI. |

5. Step-by-Step Guide to Building an AI Agent

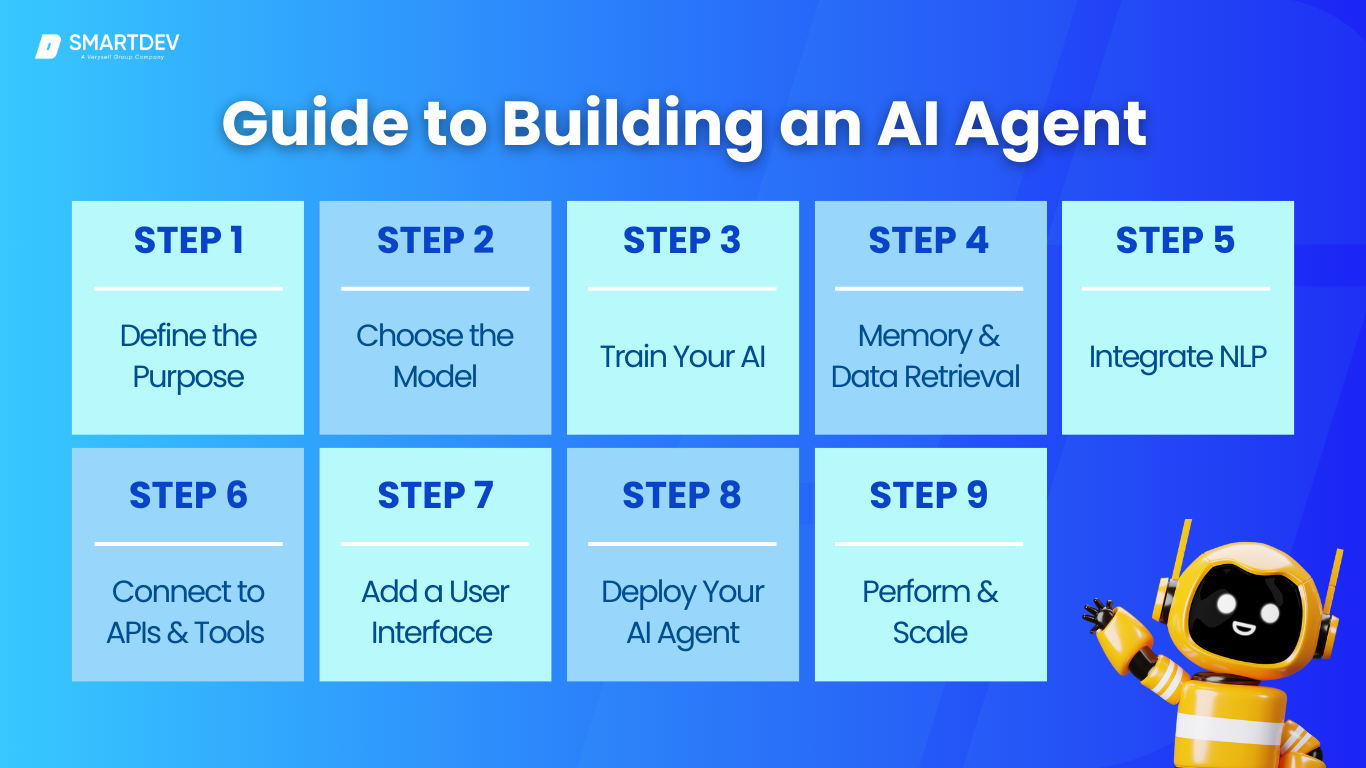

Here’s a step-by-step guide, distilled from our SmartDev playbook, to take you from idea to a deployed AI agent. This guide keeps things high-level (no code required for understanding) and is tailored for business owners working with development teams.

Step 1: Define the Purpose of Your AI Agent

Every successful project starts with a clear purpose. Begin by pinpointing what problem or opportunity the AI agent will tackle in your business.

- Is it to automate customer service queries?

- To act as a virtual sales assistant?

- To analyze large datasets and generate reports?

- Or to handle some other task entirely?

Defining a specific use case and objectives will guide all subsequent decisions. At this stage, engage stakeholders (e.g. the customer support manager if it’s a support agent, or IT if it’s internal automation) to outline requirements.

Key questions to answer:

- What tasks should the agent perform?

- Who will interact with it?

- What are the success metrics (e.g. reduce response time by 50%, handle 1000 queries/day, etc.)?

By clearly defining the purpose, you ensure that you and your development team have a unified vision.

For example, SmartDev once worked with a healthcare company to create an AI agent for patient appointment scheduling – the purpose was narrowly defined as “automate routine scheduling calls and free up staff time.” With that clarity, we knew exactly what features the agent needed. Write down the purpose and expected benefits – this will serve as your north star.

Step 2: Choose the Right Model

With the purpose in mind, decide on the brain of the agent. There are a few approaches:

Large Language Model (LLM)

You might use a pre-trained Large Language Model (LLM) if your agent needs sophisticated language understanding or general intelligence. For instance, for a conversational agent, a model like GPT-4 or Claude can be a great starting brain because it’s already learned how to converse and reason in natural language.

Retrieval-Augmented Generation (RAG)

If your agent needs to frequently fetch or refer to a lot of external information (like documents, knowledge base content), consider a Retrieval-Augmented Generation (RAG) approach.

RAG isn’t a specific model but a design: it combines an LLM with a retrieval mechanism. The agent uses a knowledge store (like a vector database or search index) to pull in relevant information for each query and the LLM uses that info to craft its answer. This is ideal when you want up-to-date or company-specific knowledge without training a giant model on all of it.
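The retrieve-then-generate flow can be sketched without any external service. In the example below, word counts stand in for real embeddings and the final LLM call is left out; `embed`, `retrieve`, `build_prompt`, and the sample documents are all assumptions made for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': lowercase word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query, documents):
    # The retrieved text is placed in the prompt that would go to the LLM.
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge-base snippets.
docs = [
    "Refunds are processed within 5 business days.",
    "Our support line is open from 9am to 5pm on weekdays.",
]
print(build_prompt("How long do refunds take?", docs))
```

In a real system, `embed` would call an embedding model and the documents would live in a vector database, but the control flow stays the same.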

Custom ML model

In some cases, you might need a custom ML model (or a collection of models) trained for a specific task. Custom models are appropriate when you have proprietary data and a well-defined prediction or classification task that generic models wouldn’t know.

Often, an AI agent will actually involve a combination: maybe a custom model for one part and an LLM for another. But at this step, decide on the core approach. If opting to train a model, plan how you’ll gather training data. If using an existing model, evaluate which one fits best (accuracy, cost, speed). The decision also ties back to the tech stack from the previous section (are you using OpenAI’s model via API, fine-tuning an open model, etc.?).

Step 3: Train Your AI (or Fine-Tune)

Now it’s time to get your AI model ready for the task. If you chose a pre-trained LLM and it performs well out-of-the-box on your needs, you might skip heavy training and go straight to integration. But often, some fine-tuning or training is required:

- Fine-Tuning: This means taking a pre-trained model and further training it on your specific data so it better handles your domain or use case. Fine-tuning can dramatically improve performance but requires training expertise and careful validation (to avoid overfitting or loss of the model’s general abilities).

- Supervised Learning: If you’re training a model from scratch or a smaller model, you’ll need labeled data. This could be historical records or knowledge pairs. You’ll feed this data to machine learning algorithms to train the model.

- Reinforcement Learning: In some cases, especially for agents that need to make sequential decisions or where feedback is a success/failure signal, reinforcement learning (RL) is used.

At SmartDev, we often do a proof-of-concept training at this stage – train a model quickly on a subset of data just to validate that the approach works. This step might also involve data augmentation (creating more training examples), and definitely involves testing the model on some hold-out data to estimate its performance.

The outcome of Step 3 is a brain that is ready – either a trained model file or a thoroughly configured external model – that can perform the core task of your agent.

Step 4: Implement Memory & Data Retrieval

Next, give your agent a memory. As discussed earlier, memory could be short-term and long-term. For a chatbot agent, this might mean setting up a mechanism to store the conversation context so the agent can reference earlier messages – this could be as simple as passing the last N messages into the model each time, or as complex as maintaining a vector database of the conversation so far.

For an agent that needs business knowledge, this is where you implement a retrieval system. You’ll index your documents or data into a vector database or search engine. The result is that when the agent gets a query it can’t answer from its own model knowledge, it will fetch relevant info from the index to include in its response. If your agent is more process-oriented (say an automation agent that executes tasks), memory might be maintaining state of a workflow. This step is all about ensuring the agent isn’t operating blind each time – it can recall what’s needed.

Concretely, set up your databases here, load initial data. If you have an FAQ, load it into the knowledge base. If the agent should recall user preferences, decide how those are stored and retrieved. It might involve writing some code to query your CRM or database whenever the agent gets a user ID, for instance.
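The simplest option mentioned above, passing only the last N messages to the model, can be sketched with a bounded queue. The class name `ConversationMemory` and the message format are assumptions for the example.

```python
from collections import deque


class ConversationMemory:
    """Short-term memory: keeps only the most recent max_messages entries."""

    def __init__(self, max_messages=4):
        self.messages = deque(maxlen=max_messages)

    def add(self, role, text):
        self.messages.append({"role": role, "content": text})

    def context(self):
        # This list is what would be passed to the model on each turn.
        return list(self.messages)


memory = ConversationMemory(max_messages=2)
memory.add("user", "My name is Ana.")
memory.add("assistant", "Nice to meet you, Ana.")
memory.add("user", "What is my name?")
print([m["content"] for m in memory.context()])
# ['Nice to meet you, Ana.', 'What is my name?']
```

Note that the first message has already been dropped here. A small window is cheap but forgetful, which is one reason to pair it with a longer-term store for facts such as user preferences.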

Step 5: Integrate NLP for Conversations

Most business AI agents need to process and generate human language, even if they’re not chatbots. This step involves integrating an NLP model (from Step 3) or an API to handle user inputs and generate responses. If it’s a dialogue agent, you’ll define how it processes questions and formulates answers. For voice-based agents, this includes speech-to-text and text-to-speech integration. Custom vocabulary, regex for structured data, and post-processing (e.g., refining tone, formatting responses) ensure accuracy and brand consistency.

At SmartDev, we fine-tune these elements to create a seamless, professional user experience. By the end of this step, your AI should be capable of basic interaction.
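As an example of using regex for structured data, the sketch below pulls order IDs out of free text before the message reaches the model. The `ORD-` plus six digits format is a hypothetical convention chosen for illustration.

```python
import re

# Hypothetical order-ID format: "ORD-" followed by exactly six digits.
ORDER_ID = re.compile(r"\bORD-\d{6}\b")


def extract_order_ids(message):
    """Return every order ID mentioned in a user message."""
    return ORDER_ID.findall(message)


print(extract_order_ids("Where is ORD-123456? I also returned ORD-654321."))
# ['ORD-123456', 'ORD-654321']
```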

Step 6: Connect the Agent to APIs & External Tools

AI agents need to interact with external systems to fetch data or take actions. Identify required integrations:

- Sales agent: Queries CRM or inventory (“We have 3 in stock” or “Your last order was in January”).

- Finance agent: Pulls stock prices from a financial API.

- IT support agent: Creates tickets or restarts servers via cloud APIs.

Use APIs/SDKs to enable these functions. Modern frameworks allow AI models to call functions dynamically, like fetching order details when a user asks about a shipment. This keeps responses grounded in real data. If the agent performs actions (e.g., sending emails, processing refunds), ensure strict permissions to prevent security risks.

By the end of this step, your AI will be able to interact with business systems, making it more than just a chatbot.
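A minimal sketch of tool calling with a permission check might look like this. The tool functions, the `TOOLS` registry, and the `ALLOWED` set are hypothetical; in practice the tool name and arguments would come from the model's function-calling output.

```python
def get_order_status(order_id):
    # Stand-in for a real CRM or order-system lookup.
    return {"order_id": order_id, "status": "shipped"}


def issue_refund(order_id):
    return {"order_id": order_id, "refunded": True}


TOOLS = {"get_order_status": get_order_status, "issue_refund": issue_refund}
ALLOWED = {"get_order_status"}   # refunds are deliberately not permitted here


def call_tool(name, **kwargs):
    """Run a tool the model asked for, but only if it exists and is allowed."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    if name not in ALLOWED:
        raise PermissionError(f"tool not permitted: {name}")
    return TOOLS[name](**kwargs)


print(call_tool("get_order_status", order_id="A17"))
# {'order_id': 'A17', 'status': 'shipped'}
```

Keeping the allow-list separate from the registry means a sensitive action such as a refund can exist in code without being reachable by the agent until someone explicitly enables it.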

Step 7: Add a User Interface

Now, make the AI accessible via an interface:

- Chatbot: Embed in a website using platforms like Drift or Intercom. Ensure branding and smooth UX (e.g., typing indicators, human escalation).

- Voice assistant: Integrate with Alexa, Google Assistant, or a mobile app. Focus on clear speech synthesis and interruption handling.

- Internal tools: Slack/Teams bots or a simple web dashboard for employees.

SmartDev has built custom web apps for AI-powered dashboards (e.g., financial analysts generating reports with interactive charts). Design the UI to be intuitive and visually engaging, guiding users with example prompts. By this step, your AI is now accessible for real-world use.

Step 8: Deploy Your AI Agent

Deploying your AI agent involves choosing the right hosting environment. Cloud platforms (AWS, Azure, GCP) offer quick setup and scalability, while on-premise deployment provides full control for sensitive data. Edge deployment is ideal for AI that needs real-time, offline processing.

For smooth deployment, package the AI and its dependencies in a Docker container so it is easy to scale. If hosting a model in-house, GPU-enabled servers or cloud AI instances are necessary. SmartDev often uses TorchServe or TensorFlow Serving for efficient model hosting.

Security is key—store API keys and credentials securely using environment variables. A beta deployment allows controlled testing before full rollout. By the end of this step, your AI is live, integrated, and ready for real-world use.
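Reading credentials from environment variables can be as simple as the sketch below. The variable name `AGENT_API_KEY` is an assumption; use whatever name your deployment defines.

```python
import os


def load_api_key(name="AGENT_API_KEY"):
    """Read a secret from the environment and fail loudly if it is missing."""
    key = os.environ.get(name)
    if not key:
        raise RuntimeError(f"environment variable {name} is not set")
    return key
```

Failing at startup when the key is missing is usually preferable to discovering the problem on the first user request.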

Step 9: Optimize for Performance & Scalability

Once deployed, ensure your AI is fast, scalable, and cost-efficient:

- Monitor performance: Track response times, API usage, and failure rates (use tools like Datadog or New Relic).

- Reduce latency: Cache frequent queries, optimize prompts, and use smaller models where possible.

- Scale efficiently: Auto-scale infrastructure (Kubernetes, AWS ECS) to handle traffic spikes.

- Cost optimization: Adjust AI usage based on demand—switch to smaller models during off-peak hours.

- Fine-tune for accuracy: Improve responses based on real-world usage data.

In a SmartDev project, we distilled a large model into a smaller, faster version, cutting costs while maintaining high-quality answers. By completing this step, your AI agent is stable, efficient, and ready to scale with your business needs.
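Caching frequent queries, one of the latency tips above, can be sketched with Python's built-in `functools.lru_cache`. The `answer` function is a stand-in for an expensive model call, and the normalization rule is an assumption.

```python
from functools import lru_cache

calls = {"count": 0}


@lru_cache(maxsize=1024)
def answer(question):
    """Stand-in for an expensive model call; counted to show cache hits."""
    calls["count"] += 1
    return f"answer to: {question}"


def cached_answer(question):
    # Normalize so trivially different phrasings share one cache entry.
    return answer(question.strip().lower())


cached_answer("What are your opening hours?")
cached_answer("  what are your opening hours?  ")
print(calls["count"])  # 1
```

An in-process cache like this is lost on restart and is not shared between servers, so a shared cache is the usual next step once traffic grows.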

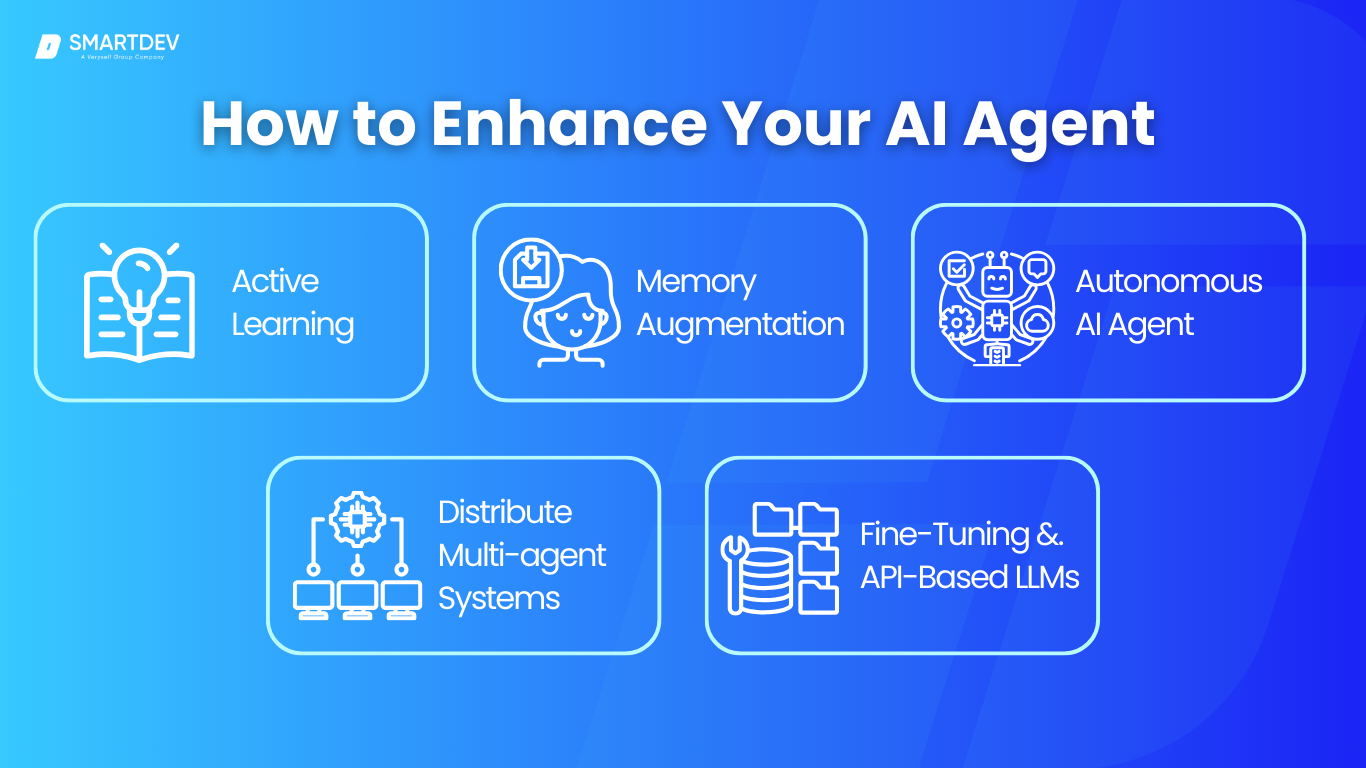

6. Advanced Techniques to Enhance Your AI Agent

Once deployed, your AI agent can be optimized and enhanced to improve performance, accuracy, and adaptability. These advanced techniques ensure that AI continues to deliver business value over time.

6.1. Smarter Responses

AI should not remain static—continuous learning ensures it stays relevant as data, trends, and business needs evolve. Active learning allows AI to refine itself based on user interactions, improving accuracy over time.

For instance, SmartDev implemented feedback loops in an AI-powered HR recruitment system, where hiring managers could review AI-suggested candidates and fine-tune its recommendations. This real-time learning helped improve talent matching significantly.

6.2. Memory Augmentation (Long-Term Memory)

By default, AI models treat each query as independent, which can lead to repetitive or inconsistent responses. Context retention helps AI agents remember past interactions, improving customer experience and operational efficiency.

Techniques like vector databases (Pinecone, Weaviate) and Retrieval-Augmented Generation (RAG) allow AI to recall previous interactions, which makes them well suited to customer support, virtual assistants, and knowledge management systems. Users no longer need to repeat information, and the AI can provide more personalized and efficient responses.

6.3. Autonomous AI Agents

The next evolution of AI involves autonomous agents that take proactive actions rather than just responding to user inputs. These AI systems can plan, execute tasks, and make decisions with minimal human intervention. Technologies like BabyAGI and AutoGPT enable AI to break down complex objectives into subtasks and execute them automatically.

SmartDev is actively exploring autonomous AI for business automation and decision support systems.

6.4. Multi-Agent Collaboration

Instead of a single AI handling all tasks, multi-agent systems distribute workloads between specialized AI agents. Using orchestration frameworks like AutoGen and LangChain, businesses can enable AI agents to collaborate, improving efficiency.

For example, in a SmartDev logistics project, we built an AI-driven shipment tracking system where one AI agent retrieved delivery statuses, another handled customer queries, and a third optimized supply chain decisions. This specialization of AI agents led to faster response times and better decision-making.

6.5. Fine-Tuning vs. API-Based LLMs

Pre-trained models like GPT-4 or Llama 2 provide a solid foundation, but they lack domain-specific expertise. Fine-tuning AI models using business-specific data improves precision and relevance. Transfer learning allows businesses to refine a model without training from scratch, reducing time and costs.

At SmartDev, we’ve fine-tuned AI models for finance (fraud detection), healthcare (diagnostics), and retail (personalized recommendations), helping businesses achieve more accurate and context-aware AI outputs.

7. Ethical Considerations & AI Safety

Deploying AI in business requires a strong focus on ethics, safety, and compliance. AI agents can enhance efficiency but, if not governed properly, they can also introduce risks like bias, misinformation, and privacy breaches. At SmartDev, we prioritize fairness, transparency, and security to ensure AI solutions are both effective and responsible.

7.1. Bias in AI & How to Mitigate It

AI learns from historical data, which may contain biases. This can lead to discriminatory outputs, such as favoring certain candidates in hiring or unfairly rejecting loan applications.

To prevent this, businesses should audit training data for fairness, apply bias mitigation techniques like data balancing and re-weighting, and ensure transparency by allowing human oversight.

Example: When SmartDev built an AI for loan recommendations, we explicitly blocked location-based biases to prevent unfair lending decisions.
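The re-weighting technique mentioned above can be illustrated with inverse-frequency sample weights, so that each group contributes equally to training. This is a sketch of one common scheme, not necessarily the one used in the project described; the group labels are made up.

```python
from collections import Counter


def balancing_weights(groups):
    """Weight each sample inversely to its group's frequency."""
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]


# Hypothetical group labels for six training examples.
groups = ["a", "a", "a", "a", "b", "b"]
print(balancing_weights(groups))
# [0.75, 0.75, 0.75, 0.75, 1.5, 1.5]
```

Each group's weights sum to 3.0 here, so the under-represented group "b" carries as much total weight as group "a".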

7.2. AI Hallucinations & Error Handling

AI sometimes generates plausible but false information, which can mislead users. This is particularly risky in areas like finance, healthcare, and customer support, where accuracy is critical.

To reduce hallucinations, businesses should ground AI responses in real data using retrieval-augmented generation (RAG). AI should also verify critical facts by cross-checking information with external sources before providing an answer.

Another key approach is to use UI indicators that highlight confidence levels or reference sources. If the AI is unsure, it should defer to human review rather than risk providing misleading information.

Example: SmartDev developed an AI agent that cross-checks facts before responding, ensuring reliability and reducing misinformation.
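Deferring to a human when the agent is unsure can be sketched as a simple gate in front of the response. The 0.75 threshold, the response format, and the idea that a confidence score is available are all assumptions; how confidence is estimated depends on the model and is not shown here.

```python
def respond(answer, confidence, sources, threshold=0.75):
    """Return the answer with its sources, or defer when confidence is low."""
    if confidence < threshold or not sources:
        return {"type": "handoff", "message": "Routing this question to a human reviewer."}
    return {"type": "answer", "message": answer, "sources": sources}


print(respond("Refunds take 5 business days.", 0.92, ["refund-policy.md"]))
print(respond("Probably 3 days?", 0.40, []))
```

Returning the sources alongside the answer gives the UI what it needs to show references or a confidence indicator.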

7.3. Data Privacy & Security Concerns

AI agents often process sensitive customer and business data, making privacy and security top priorities. Businesses must ensure AI does not store, leak, or misuse confidential information.

To mitigate risks, companies should follow data minimization principles, ensuring AI only collects what is strictly necessary. Encryption and strict access controls should be applied to protect data in storage and transit.

Businesses must also guard against AI data leaks, preventing models from inadvertently exposing sensitive details in responses. Additionally, AI security hardening is necessary to prevent cyber threats like prompt injection attacks, where users attempt to manipulate AI into revealing restricted information.

Best Practice: At SmartDev, we treat AI agents like human employees when it comes to security: restricting access, monitoring activity, and enforcing strict security protocols.
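One small, concrete form of data minimization is redacting obvious identifiers before text is logged or sent to an external model. The sketch below handles only email addresses with a deliberately simple pattern; it is an illustration, not a complete PII filter.

```python
import re

# Simplified email pattern; real-world addresses can be more varied.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL.sub("[EMAIL]", text)


print(redact_emails("Contact ana@example.com about the invoice."))
# Contact [EMAIL] about the invoice.
```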

7.4. Regulatory Compliance

AI systems must comply with global and industry-specific regulations to avoid legal risks.

- GDPR (EU) requires AI to protect user data, ensure transparency in automated decisions, and allow users to request data deletion.

- The EU AI Act introduces risk-based AI regulations, requiring documentation and explainability for high-risk AI applications.

- CCPA (California) mandates businesses to provide users access to personal data collected by AI and delete it upon request.

- Healthcare AI must follow HIPAA, and financial AI must comply with SEC and FINRA regulations.

Proactive compliance is essential. Businesses should document AI decision-making and regularly review legal requirements to stay ahead of evolving regulations.

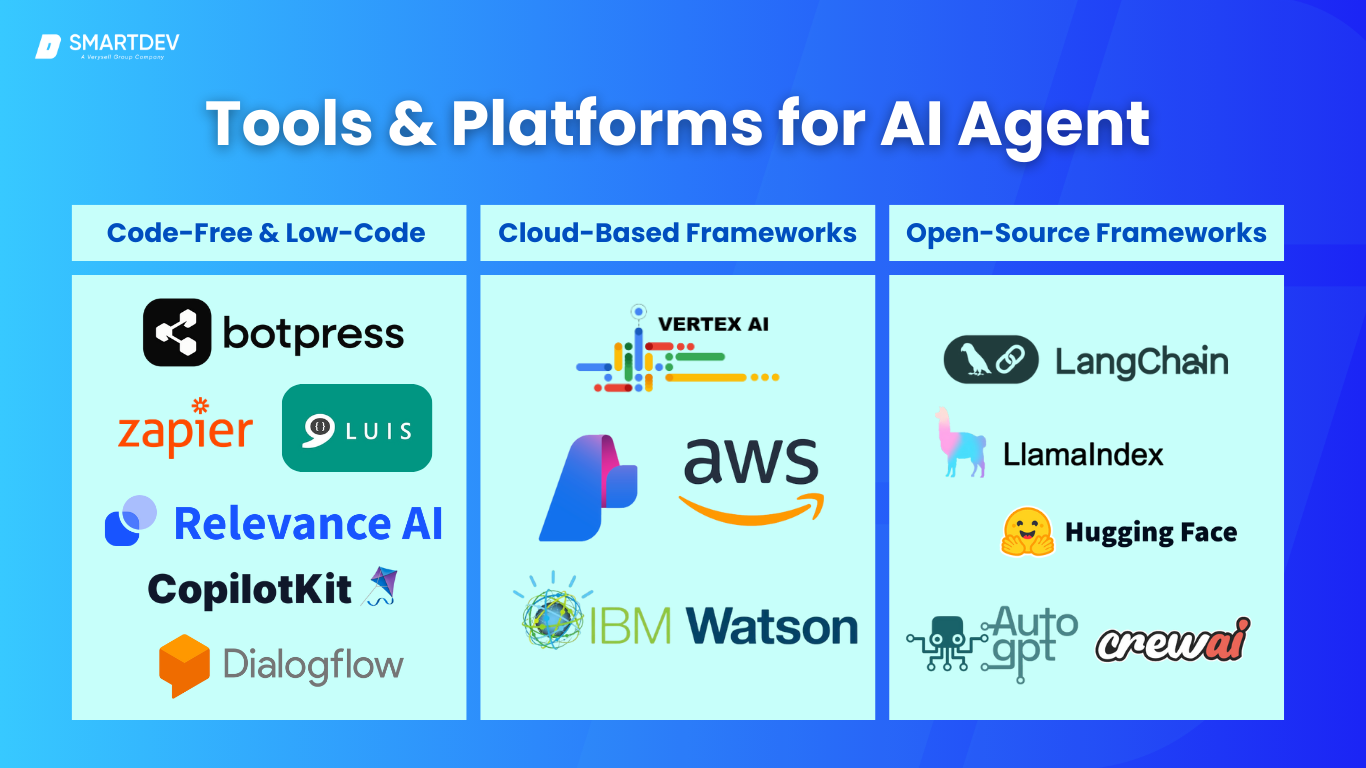

8. Tools & Platforms for AI Agent Development

In this section, we’ll explore a spectrum of options, from no-code/low-code platforms that let you craft AI agents with minimal coding, to major cloud frameworks offered by tech giants, and powerful open-source frameworks that give you full control and community support.

We’ll also touch on how contributing to open-source can be beneficial if you have a developer team keen on being at the cutting edge.

8.1. Code-Free & Low-Code AI Agent Builders

If you’re looking for speed and ease, or if you’re not a developer by trade, low-code platforms can be a boon. These are tools where much of the heavy lifting (NLP, integrations, UI) is handled through visual interfaces or simple configuration, so you can focus on designing the conversation flow or logic. Examples include:

- BotPress: A popular open-source platform for building chatbots and AI assistants. It provides a visual flow editor, supports multiple channels (web, Messenger, etc.), and you can integrate your own AI models or use built-in NLP. BotPress allows some coding for custom actions, but many things are doable with drag-and-drop.

- Zapier with AI integrations: Zapier is known for connecting different apps (if this, then that style). With their recent AI features, you can route inputs to AI services and then onward to other apps, essentially creating an agent workflow without writing code. For example, Zapier could take an email, send it to OpenAI’s API for analysis via a Zap, then based on the result, trigger different actions (like scheduling a meeting or replying).

- Relevance AI: A platform focusing on building AI-driven search and insight engines. If your agent is about retrieving information (like a smart search assistant for your company data), Relevance AI offers a UI to ingest data and enable semantic search, letting you set up an intelligent Q&A system quickly.

- CopilotKit: An open-source framework for embedding AI copilots and assistants inside applications. It is less widely known than the other tools listed here and expects more development work, but it offers building blocks for in-app copilots in products such as IDEs or productivity software.

- Dialogflow (Google) and LUIS (Microsoft): These low-code platforms offer an easy way to build conversational AI by defining intents and training phrases, with machine learning handling NLP. Pros include quick setup, built-in analytics, and no need to manage infrastructure. However, they have limitations in customization—advanced needs may require migration to custom solutions.

8.2. Cloud-Based AI Agent Frameworks

The big cloud providers offer robust services to build and deploy AI agents at scale:

- Google Cloud Agent Builder (Vertex AI): Google has integrated conversational AI into its Vertex AI platform. It’s often referred to as “Agent Builder” and is designed to help create generative AI applications. It provides tools to design dialogue, integrate with data sources (like Google Search or your own knowledge base), and deploy on Google’s infrastructure. If you’re already in Google’s ecosystem, this can be powerful, leveraging Google’s models (such as PaLM and, more recently, Gemini) with enterprise features.

- Azure AI (including Bot Service and Azure OpenAI): Microsoft’s Azure offers a Bot Service that works with the Bot Framework SDK. It also has the Azure OpenAI service, which lets you use OpenAI’s models in the Azure cloud with enterprise security. Microsoft’s Power Virtual Agents (since renamed Microsoft Copilot Studio) is another no-code builder for chatbots that ties into Azure’s AI under the hood. Azure’s advantage is seamless integration with the Microsoft stack (Teams, Office 365, etc.), which can be great for internal agents: imagine an AI agent in Teams that can pull data from SharePoint and generate reports via Power BI.

- AWS AI Services: AWS has Amazon Lex (for conversational interfaces, similar to Dialogflow), AWS Lambda for serverless logic, and a host of AI services such as Comprehend (NLP) and Rekognition (vision) if your agent needs multi-modal skills. There’s also Amazon SageMaker if you want to train and deploy custom models. AWS’s breadth is huge, but it often requires a bit more assembly. That said, AWS has introduced higher-level orchestration for generative AI: Amazon Bedrock, its managed service for foundation models, can plug into an agent easily.

- IBM Watson Assistant: IBM has long offered Watson Assistant, a cloud service for building conversational agents that focuses on enterprise clients. It’s worth considering if you’re in industries like healthcare or finance where IBM has tailored solutions (and you need strong data governance).

Using these cloud frameworks typically means you get scalability and integration out-of-the-box. They are built to handle things like scaling up for peak loads, monitoring, and connecting to other cloud services.

8.3. Open-Source AI Agent Frameworks

For maximum flexibility and avoiding vendor constraints, open-source is the way to go. The AI community is vibrant and many frameworks have arisen:

- LangChain: Arguably one of the most popular libraries in 2023-2024 for building applications with LLMs. LangChain provides a way to chain together prompts, models, and arbitrary logic (including tool use). It’s great for creating agent behavior like “If user asks for X, first do Y then answer”. It also has integrations for memory (such as vector stores) and can manage dialogues. We’ve used LangChain at SmartDev to quickly prototype complex behaviors (like an agent that can do math by invoking a calculator tool, then answer).

- LlamaIndex (GPT Index): This is a tool to connect LLMs with external data. It complements LangChain often – focusing on the data side, making it easier to do retrieval (as we described in RAG). LlamaIndex lets you structure your data (documents, databases) into an index that an LLM can query via natural language. It’s great for Q&A or knowledge-based agents.

- Hugging Face Transformers: Not an agent framework per se, but the core library to bring in any model you want. If you’re going fully open-source on models (like running a Flan-T5 for QA, or a Llama for conversation), this library is essential. It gives you the model implementations and pipelines that simplify using them. Hugging Face also has Accelerate for multi-GPU, and the Datasets library to manage training data.

- AutoGPT and related: These started as open-source projects on GitHub demonstrating autonomous agents. AutoGPT is itself open-source (Python) and many have forked/extended it. If you want to tinker with autonomous behaviors or multi-agent setups, exploring these repos can be enlightening (though they can be experimental).

- CrewAI: As found in our research, CrewAI is an open-source framework specifically for multi-agent systems, emphasizing orchestrating various agents in a workflow and integrating with different LLMs or tools. If your project leans that way, an open framework like CrewAI might save you from reinventing that coordination logic.
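To make the "invoke a calculator tool, then answer" pattern mentioned above concrete, here is a library-free sketch of a single tool-use step. It deliberately does not use LangChain's API: `run_agent`, `TOOLS`, and `fake_decide` are hypothetical names, and `fake_decide` is a stand-in for the LLM call that would normally choose the tool.

```python
import ast
import operator

# Arithmetic operators the calculator tool is allowed to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str):
    """Safely evaluate +, -, *, / on numeric literals without using eval()."""
    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        raise ValueError("unsupported expression")
    return _eval(ast.parse(expression, mode="eval").body)


TOOLS = {"calculator": calculator}


def fake_decide(question: str):
    """Hypothetical stand-in for a model call: sends anything with digits to the calculator."""
    expr = question.rstrip("?").replace("What is", "").strip()
    return ("calculator", expr) if any(ch.isdigit() for ch in expr) else (None, "")


def run_agent(decide, question: str) -> str:
    """One tool-use step: `decide` returns (tool_name, tool_input), then the tool runs."""
    tool_name, tool_input = decide(question)
    if tool_name is None:
        return "I can answer that directly."
    result = TOOLS[tool_name](tool_input)
    return f"The answer is {result}."


print(run_agent(fake_decide, "What is 12 * (3 + 4)?"))
```

Agent frameworks wrap this same loop (decide, call tool, compose answer) with prompt templates, retries, and memory, which is the coordination logic you avoid rewriting by adopting one.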

Beyond these, the ecosystem is rich: for example, Rasa (an open-source conversational AI framework that is powerful for dialogues and offers machine learning-based dialogue management) and Open Assistant. Using open-source frameworks means you can customize everything. You can also self-host, which is good for privacy. And the community contributions are invaluable: you’ll find lots of examples, and if you face a problem, someone else has likely hit it and shared a solution.

Read more: Open Source vs. Proprietary AI

How to Contribute to Open-Source AI Agent Development

This is a slight tangent but worthwhile if you have a capable dev team. By engaging with open-source projects:

- You can steer features that matter to you. If your team builds a cool plugin for LangChain to integrate with a unique database, contributing it back can help ensure compatibility and maintenance by the community.

- You gain reputation and insight. Active contributors often get early knowledge of upcoming changes, and your company can be seen as a leader in the space which is good PR.

- It’s cost-sharing. By fixing a bug and sharing it, you save others the headache and likewise benefit from their bug fixes, a communal effort that improves stability.

To contribute, you can start by reporting issues on GitHub for these projects, then perhaps making pull requests for small changes. Some companies sponsor open-source maintainers, which can be another route to support the ecosystem that you rely on.

In summary, whether you choose low-code platforms, cloud solutions, or open-source frameworks (or a mix of these), there’s no shortage of tools to help build your AI agent. The choice depends on how much you want to customize vs. how much you want provided for you.

9. Testing, Debugging & Performance Optimization

A well-tested and optimized AI agent is more reliable, accurate, and scalable. AI can be unpredictable, so catching issues before deployment prevents user frustration and costly mistakes. This section covers testing strategies, debugging AI issues, and performance optimization to ensure efficiency and cost-effectiveness.

9.1. Testing AI Agents: Ensuring Reliability

AI testing requires a mix of traditional software testing and AI-specific evaluation due to its probabilistic nature.

Unit & Functional Testing

For deterministic components (e.g., API calls, output formatting), standard unit tests ensure functionality. However, since AI responses vary, functional tests should verify that responses are logically correct rather than word-for-word identical.
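A functional check of this kind can assert on required facts instead of exact wording. The sketch below is one simple way to do that; the `check_response` helper, the example answers, and the 30-day refund policy are all hypothetical.

```python
def check_response(response: str, required: list[str], forbidden: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the response passes."""
    text = response.lower()
    problems = [f"missing: {term}" for term in required if term.lower() not in text]
    problems += [f"forbidden: {term}" for term in forbidden if term.lower() in text]
    return problems


# Two differently worded answers to "What is your refund window?"
answer_a = "You can request a refund within 30 days of purchase."
answer_b = "Refunds are available for 30 days after you buy."

for answer in (answer_a, answer_b):
    # Both pass because each states the required facts, despite different phrasing.
    print(check_response(answer, required=["30 days", "refund"], forbidden=["guarantee"]))
```

Keyword checks are crude. They catch missing or prohibited content cheaply, while judgments about tone or reasoning quality still need human or model-based review.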

Simulation & A/B Testing

Simulating user interactions helps identify weaknesses in multi-turn conversations. AI-vs-AI testing, where one AI simulates the user, can expose gaps in handling adversarial inputs. A/B testing compares different AI versions in real use cases to see which performs best, based on user ratings, resolution rates, and response times.

Human-in-the-Loop & Continuous Monitoring

For subjective factors like tone or helpfulness, human evaluation is key. Beta testers and annotators can rate AI responses, guiding further improvements. Real-time monitoring also helps – log AI failures, review unknown queries, and improve responses based on live usage.

9.2. Debugging Common AI Issues

AI debugging can be complex, as errors may not always have clear causes. However, systematic troubleshooting helps resolve the most common issues:

Hallucinations (False Information)

Hallucinations occur when the AI generates plausible but incorrect responses. They can be mitigated by grounding responses in real data using Retrieval-Augmented Generation (RAG). Lowering the temperature setting makes the AI more deterministic, which can reduce the likelihood of fabricated answers. Another approach is to implement a verification layer, where the AI cross-checks critical facts against a reliable database before responding.

Context Loss

Context loss is another frequent issue in AI-driven conversations. If an AI forgets previous messages, the cause is often that the conversation exceeded the model’s context window or that session tracking is ineffective. Businesses can summarize key details within the conversation to preserve important information while staying within memory limits. Additionally, debugging the AI’s session history logs can reveal where context breaks occur.
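One way to stay within the context window is to keep the most recent messages verbatim and fold everything older into a single summary line. The sketch below assumes a word count as a rough token estimate and takes the summarizer as a parameter. In practice `summarize` would be a model call and the estimate would come from the model's tokenizer. Note that the summary line itself is not counted against the budget here.

```python
def approx_tokens(text: str) -> int:
    """Crude token estimate (whitespace-separated words); a real tokenizer would differ."""
    return len(text.split())


def fit_context(messages: list[str], budget: int, summarize) -> list[str]:
    """Keep the newest messages that fit in `budget`; fold older ones into one summary line."""
    kept: list[str] = []
    used = 0
    for msg in reversed(messages):
        cost = approx_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()
    older = messages[: len(messages) - len(kept)]
    if older:
        kept.insert(0, "Summary of earlier conversation: " + summarize(older))
    return kept


history = ["my order id is 123", "it arrived damaged", "can I get a refund"]
# Hypothetical summarizer: simply joins the older messages.
print(fit_context(history, budget=8, summarize=lambda older: "; ".join(older)))
```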

Slow Processing

Slow processing can result from large model sizes, inefficient API calls, or unnecessary computational steps. Latency profiling lets developers pinpoint where the delays occur. Solutions include caching frequent queries, reducing API dependencies, or using optimized model versions.
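Latency profiling can start with nothing more than a timer around each stage of a request. The following sketch uses only the standard library; the stage names are hypothetical and the `time.sleep` calls stand in for real retrieval and model calls.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Seconds recorded per stage, across all requests seen so far.
timings: dict[str, list[float]] = defaultdict(list)


@contextmanager
def timed(stage: str):
    """Record wall-clock seconds spent inside the `with` block under `stage`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage].append(time.perf_counter() - start)


def slowest_stage() -> str:
    """Return the stage with the largest total recorded time."""
    return max(timings, key=lambda s: sum(timings[s]))


# Simulated pipeline stages.
with timed("retrieval"):
    time.sleep(0.01)
with timed("model_call"):
    time.sleep(0.05)

print(slowest_stage())
```

Once the slowest stage is known, the fixes listed above (caching, fewer API hops, a smaller model) can be applied where they actually matter.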

9.3. Optimizing Performance

To ensure smooth performance, businesses should continuously optimize their AI agents for speed, scalability, and cost-effectiveness.

Latency Reduction

Reducing latency is crucial for real-time AI interactions. Optimizations include quantizing models (reducing precision to speed up inference), enabling parallel processing for simultaneous data retrieval, and using edge caching to serve responses faster for global users.

Cost Optimization

Businesses should minimize expensive API calls by routing simple queries to smaller, cheaper models and reserving powerful models for complex tasks. Cloud-based AI agents should be set up with auto-scaling, adjusting resources based on demand to avoid unnecessary compute costs. Selecting the right infrastructure, whether CPU- or GPU-based, keeps efficiency high.
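Routing simple queries to a cheaper model can begin with a plain heuristic. The sketch below is an assumption-laden illustration: the model names, per-call costs, keyword list, and word-count threshold are invented for the example, and many teams would replace the heuristic with a small classifier.

```python
# Hypothetical model tiers and per-call costs, for illustration only.
MODELS = {"small": 0.001, "large": 0.03}
COMPLEX_HINTS = ("why", "compare", "explain", "analyze", "step by step")


def choose_model(query: str, max_simple_words: int = 12) -> str:
    """Route short queries without reasoning cues to the small model."""
    q = query.lower()
    is_long = len(q.split()) > max_simple_words
    needs_reasoning = any(hint in q for hint in COMPLEX_HINTS)
    return "large" if (is_long or needs_reasoning) else "small"


def estimated_cost(queries: list[str]) -> float:
    """Sum the per-call cost of whichever model each query is routed to."""
    return sum(MODELS[choose_model(q)] for q in queries)


print(choose_model("What are your opening hours?"))
print(choose_model("Compare the premium and basic plans for a team of 20"))
```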

Managing memory and resource usage is also essential. Large AI models consume high amounts of RAM, so sharing a central model instead of duplicating processes can significantly cut resource usage. Optimizing vector database indexing and clearing unnecessary data further enhances efficiency.

10. Deploying & Scaling

Choosing the right hosting approach, implementing CI/CD pipelines, and preparing for high traffic are essential for making your AI agent a sustainable business solution.

10.1. Hosting Options: On-Premise vs. Cloud Deployment

Where your AI agent runs directly impacts performance, cost, and compliance.

On-Premise Deployment

On-premise deployment is ideal for businesses handling sensitive data, such as banks, hospitals, or government agencies, where regulations prohibit data from leaving company servers. It also offers long-term cost savings if the infrastructure is already in place. However, on-prem comes with challenges: manual scaling, hardware maintenance, and the need for IT expertise to manage security, redundancy, and high availability. Businesses adopting on-prem often use Kubernetes to automate scaling and improve resilience.

Cloud Deployment (AWS, Azure, GCP)

Cloud deployment offers flexibility and scalability, allowing businesses to start small and scale on demand without hardware constraints. Cloud platforms provide managed AI services, on-demand GPUs, and pre-built monitoring tools, making it easy to deploy and maintain AI agents. However, costs can rise unexpectedly with high usage, and businesses must ensure compliance with data protection laws when handling customer information in the cloud.

A hybrid approach is often a practical solution, keeping sensitive components on-premise while leveraging cloud services for scalability and complex computations. This setup allows AI agents to process critical data locally while using cloud AI models for resource-intensive tasks.

10.2. CI/CD Pipelines for AI Agents

Continuous Integration/Continuous Deployment (CI/CD) ensures seamless updates to both AI models and code, preventing downtime and minimizing deployment risks.

Version Control for Code & Models

Code changes should go through automated testing in CI pipelines, while AI models should be versioned and stored in a model registry (e.g., MLflow, DVC). Versioning makes every deployed model traceable, so production runs a known, validated model and any rollback is deliberate rather than accidental.

Automated Testing

Automated tests should include AI-specific validation. Since AI outputs are probabilistic, tests should verify that responses are logically correct, contain the required information, and do not degrade in quality between versions.

Safe Deployment Strategies

Strategies such as blue-green deployments and canary releases help mitigate risks when rolling out updates. New AI versions should be tested with a small percentage of traffic before full deployment to prevent business disruptions.
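A canary split is often implemented by hashing a stable identifier so that each user consistently sees the same version. Here is a minimal sketch of that idea; the function names and the choice of SHA-256 are assumptions for illustration, and in practice the split usually lives in the load balancer or service mesh.

```python
import hashlib


def bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in [0, 100) using a hash."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def pick_version(user_id: str, canary_percent: int) -> str:
    """Send roughly `canary_percent`% of users to the new version, consistently per user."""
    return "canary" if bucket(user_id) < canary_percent else "stable"


# Roughly 10% of these hypothetical users land on the canary.
share = sum(pick_version(f"user-{i}", 10) == "canary" for i in range(1000))
print(f"{share} of 1000 users routed to canary")
```

Raising `canary_percent` step by step, while watching error rates and user ratings, turns the rollout into a sequence of small, reversible decisions.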

Rollback Plans

If a new AI model or feature causes performance issues, having the ability to quickly revert to the previous version ensures system stability. Using containerized deployments (Docker, Kubernetes) makes rollback management easier.

10.3. Scaling for High-Traffic Environments

As your AI agent gains traction, scalability becomes critical to handle increasing users and queries efficiently.

Load Balancing

Load balancing is essential for distributing requests across multiple instances, ensuring consistent response times and redundancy. Cloud-based load balancers (AWS ELB, Azure Load Balancer) or Kubernetes Ingress Controllers allow businesses to scale horizontally without service disruption.

Stateless Architecture

A stateless architecture improves scalability by storing user context externally (e.g., Redis, session databases) instead of within the AI instance. This allows multiple AI instances to handle user queries interchangeably without loss of conversation history.
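The effect of externalizing state can be shown with two agent instances sharing one store. In this sketch the `SessionStore` class is an in-memory stand-in for an external store such as Redis, and both class names are hypothetical. The point is only that neither instance keeps conversation history itself.

```python
import json


class SessionStore:
    """In-memory stand-in for an external store such as Redis (an assumption for this sketch)."""

    def __init__(self):
        self._data: dict[str, str] = {}

    def load(self, session_id: str) -> list[str]:
        return json.loads(self._data.get(session_id, "[]"))

    def save(self, session_id: str, history: list[str]) -> None:
        self._data[session_id] = json.dumps(history)


class AgentInstance:
    """Holds no conversation state; history is read from and written to the store."""

    def __init__(self, name: str, store: SessionStore):
        self.name = name
        self.store = store

    def handle(self, session_id: str, message: str) -> str:
        history = self.store.load(session_id)
        history.append(message)
        self.store.save(session_id, history)
        return f"{self.name} sees {len(history)} message(s)"


store = SessionStore()
a, b = AgentInstance("A", store), AgentInstance("B", store)
print(a.handle("s1", "hi"))      # first request lands on instance A
print(b.handle("s1", "again"))   # follow-up lands on instance B, history intact
```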

Scaling AI Models Efficiently

Scaling model inference is challenging because of its GPU resource demands. Businesses often separate the AI inference layer from the API layer, allowing requests to be queued and processed by dedicated GPU instances. This approach prevents bottlenecks and improves cost efficiency.

Autoscaling Mechanisms

Kubernetes Horizontal Pod Autoscaler or cloud-native auto-scaling ensures that instances increase during peak hours and scale down when demand drops, reducing operational costs.
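The core scaling rule documented for the Kubernetes Horizontal Pod Autoscaler is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below reproduces only that arithmetic with min/max clamping; the real controller also applies a tolerance band and stabilization windows, which are omitted here, and the default bounds are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """ceil(current_replicas * current_metric / target_metric), clamped to [min, max]."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 pods averaging 90% CPU against a 60% target: scale up.
print(desired_replicas(4, 90, 60))
# 4 pods averaging 20% CPU against a 60% target: scale down.
print(desired_replicas(4, 20, 60))
```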

Caching & Response Optimization

Frequently requested AI responses or database queries can be cached to reduce processing time. This is particularly useful for AI agents answering repetitive queries or FAQ-style questions.
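For FAQ-style traffic, a small time-limited cache keyed on a normalized query can avoid repeated model calls. This is a minimal in-process sketch: the `TTLCache` class and the normalization rule (lowercase, collapsed whitespace) are assumptions, and a shared cache service would be needed once several instances are running.

```python
import time


class TTLCache:
    """Tiny time-based cache keyed on a normalized query string."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: dict[str, tuple[float, str]] = {}

    @staticmethod
    def _key(query: str) -> str:
        # Lowercase and collapse whitespace so trivially different phrasings share an entry.
        return " ".join(query.lower().split())

    def get_or_compute(self, query: str, compute) -> str:
        key = self._key(query)
        hit = self._entries.get(key)
        now = self.clock()
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        answer = compute(query)
        self._entries[key] = (now, answer)
        return answer


calls = []

def fake_model(query: str) -> str:
    """Hypothetical stand-in for an expensive model call."""
    calls.append(query)
    return "We open at 9am."


cache = TTLCache(ttl_seconds=60)
cache.get_or_compute("What are your hours?", fake_model)
cache.get_or_compute("  what are YOUR hours? ", fake_model)
print(len(calls))  # the second lookup is served from the cache
```

The expiry time bounds how stale an answer can get, so it should be short for content that changes often, such as prices or stock levels.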

10.4. Serverless AI Agent Deployment

Serverless AI (AWS Lambda, Google Cloud Functions, Azure Functions)

Serverless platforms let businesses run AI agents without managing infrastructure. The approach suits unpredictable traffic because it scales automatically with incoming requests.

However, serverless AI comes with cold start delays and runtime limitations, making it less suitable for real-time AI processing. Businesses can keep AI models warm by maintaining a minimum number of active instances to avoid delays.

Edge AI Deployment

Edge deployment lets AI models run closer to users at edge locations, reducing latency for real-time processing. Cloudflare Workers, Fly.io, and AWS Wavelength allow businesses to deploy AI logic at multiple global edge locations, improving speed for geographically distributed users.

11. Future Trends in AI Agents

From increasing AI autonomy to self-learning models and immersive AI experiences, the future of AI agents promises greater intelligence, adaptability, and integration into our daily lives.

11.1. AGI (Artificial General Intelligence) & AI Autonomy

Artificial General Intelligence (AGI) refers to AI that can reason, learn, and apply knowledge across different domains, much like a human. While today’s AI agents are task-specific, advancements in models like GPT-4, Gemini, and Claude show progress toward more generalized AI capabilities.

Even before reaching AGI, autonomous AI agents are becoming more powerful. Tools like AutoGPT and BabyAGI already allow AI to break down complex tasks into multi-step action plans. In the future, businesses could deploy AI agents that manage entire operations, building on recent model releases such as OpenAI’s GPT-4.5 and xAI’s Grok 3.

However, increased autonomy also raises concerns about alignment, ethical oversight, and workforce impact. Businesses should plan for AI governance frameworks, ensuring AI systems are used responsibly while maximizing productivity.

11.2. The Rise of Multi-Agent Systems

The concept of multi-agent systems, where multiple AI agents interact to perform complex tasks, is gaining traction. Google DeepMind’s SIMA (Scalable Instructable Multiworld Agent) shows how capable the individual agents in such systems are becoming. SIMA is a generalist agent that can understand and follow natural language instructions to complete tasks across various 3D virtual environments, adapting to new tasks and settings without requiring access to game source code or APIs.

11.3. Self-Learning AI & AutoML

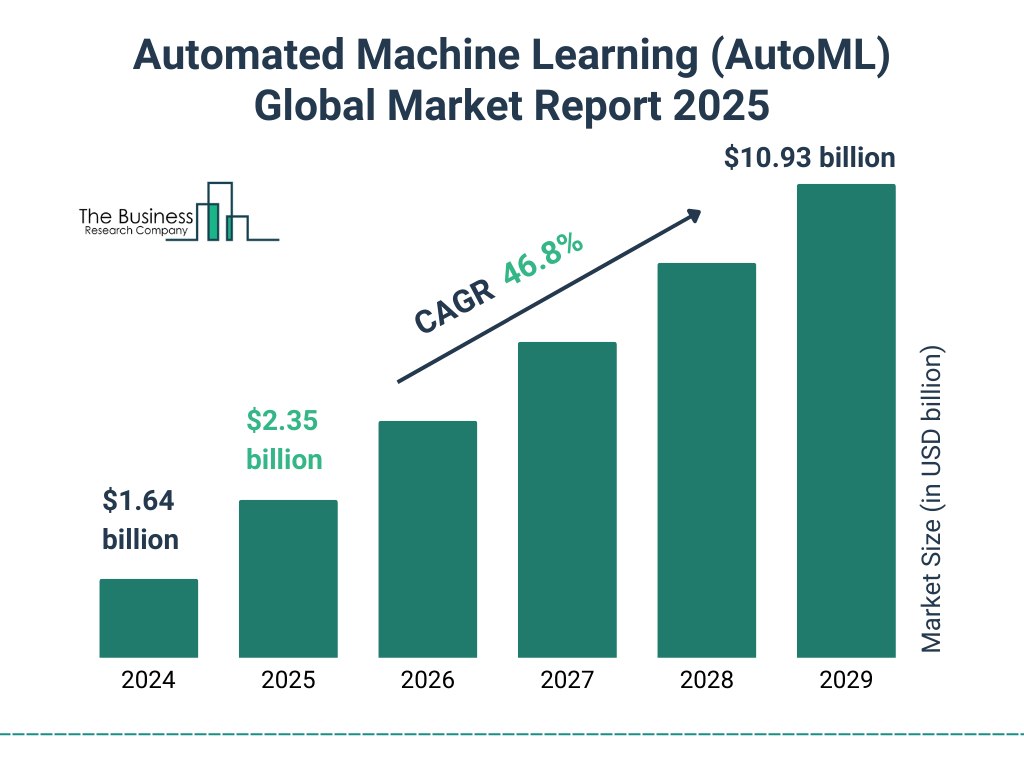

AI systems are increasingly capable of self-improvement through mechanisms like reflection and self-learning. The Business Research Company predicts that “the automated machine learning (AutoML) market size is expected to see exponential growth in the next few years. It will grow to $10.93 billion in 2029 at a compound annual growth rate (CAGR) of 46.8%.”

OpenAI’s development of models such as o1-preview and o1, which exhibit enhanced reasoning abilities, highlights this progression. These models can solve multi-step problems by identifying and correcting their own errors, leading to improved coherence and reduced inaccuracies.

11.4. AI Agents for the Metaverse, AR & VR

As augmented reality (AR) and virtual reality (VR) technologies mature, AI agents will play a key role in immersive digital experiences.

In AR, AI agents could function as visual assistants, appearing through smart glasses or mobile cameras to provide contextual guidance. For example, an AI-powered technician assistant could overlay repair instructions on machinery, walking users through maintenance procedures.

In VR and the metaverse, AI agents could serve as virtual sales representatives, digital trainers, or interactive brand ambassadors. Businesses may use AI-driven VR simulations for customer service training, allowing employees to practice realistic interactions with AI-driven customers.

With digital twins, businesses could replicate factories, offices, or retail spaces in virtual environments, using AI agents to simulate real-world operations, detect inefficiencies, and optimize processes.

12. Key Takeaways

- AI agents can perceive, process, and act, handling tasks like customer support, data analysis, and automation with minimal human input.

- A structured approach—choosing the right tech stack, integrating APIs, optimizing NLP, and ensuring scalability—leads to reliable AI solutions.

- From memory-enhanced agents to autonomous AI and multi-agent collaboration, the potential of AI is expanding rapidly.

- Responsible AI development ensures fairness, transparency, and compliance, safeguarding both businesses and users.

- Businesses must adapt to AI advancements—leveraging self-learning models, AI-driven automation, and immersive technologies like AR and VR.

Let’s Build the Future Together!

AI is not just a tool—it’s a business enabler that enhances efficiency, decision-making, and customer engagement. The journey starts with one step—whether it’s automating a routine process, launching an AI-powered assistant, or integrating AI insights into business strategy.

SmartDev is here to help. We work alongside businesses, providing AI expertise, tailored solutions, and strategic guidance to drive digital transformation. Whether you’re exploring AI for the first time or looking to scale and optimize existing AI solutions, our team is ready to support you.

📌 Next Steps

- Identify a Pilot Project – Find a business process where AI can add value.

- Develop a Proof of Concept – Start small, test, and refine your AI agent.

- Integrate & Scale – Implement AI into your workflow and optimize based on real-world use.

- Stay Ahead – Keep up with AI advancements to remain competitive.

The AI revolution is here, and those who embrace it now will shape the future of business. Let’s innovate together and build AI-driven solutions that transform operations, enhance customer experiences, and drive success.

🚀 Ready to start your AI journey? Contact SmartDev today and let’s bring AI-powered automation to your business!

—

References

- Gartner Reveals Three Technologies That Will Transform Customer Service and Support By 2028 | Gartner

- How retailers can keep up with consumers | McKinsey & Company

- With GPT-4.5, OpenAI Trips Over Its Own AGI Ambitions | Wired

- A generalist AI agent for 3D virtual environments | Google Deepmind

- In AI Agent Battle, Meta Seeks Total Dominance as OpenAI Plans to Charge $20K for Some Models | Inc.