Why AI Ethics Concerns Matter

Artificial Intelligence (AI) is transforming industries at a breathtaking pace. It brings both exciting innovations and serious ethical questions.

Businesses worldwide are rapidly deploying AI systems to boost efficiency and gain a competitive edge. Yet, AI ethics concerns are increasingly in the spotlight as unintended consequences emerge.

In fact, nine out of ten organizations have seen an AI system cause an ethical issue in their operations. This has prompted a surge in companies establishing AI ethics guidelines (an 80% jump in just one year) to ensure AI is used responsibly.

So, what exactly is AI ethics?

According to IMD, AI ethics refers to the moral principles and practices that guide the development and use of AI technologies. It’s about ensuring that AI systems are fair, transparent, accountable, and safe.

These considerations are no longer optional. They directly impact public trust, brand reputation, legal compliance, and even the bottom line.

For businesses, unethical AI can lead to biased decisions that alienate customers, privacy violations that incur fines, or dangerous outcomes that lead to liability. For society and individuals, it can deepen inequalities and erode fundamental rights.

The importance of AI ethics is already evident in real-world dilemmas.

From hiring algorithms that discriminate against certain groups to facial recognition systems that invade privacy, the ethical pitfalls of AI have tangible effects. AI-driven misinformation (like deepfake videos) is undermining trust in media, and opaque “black box” AI decisions leave people wondering how crucial choices – hiring, loans, medical diagnoses – were made.

Each of these scenarios underscores why AI ethics concerns matter deeply for business leaders and policymakers alike.

This guide will explore the core ethical issues surrounding AI, examine industry-specific concerns and real case studies of AI gone wrong, and offer practical steps for implementing AI responsibly in any organization.

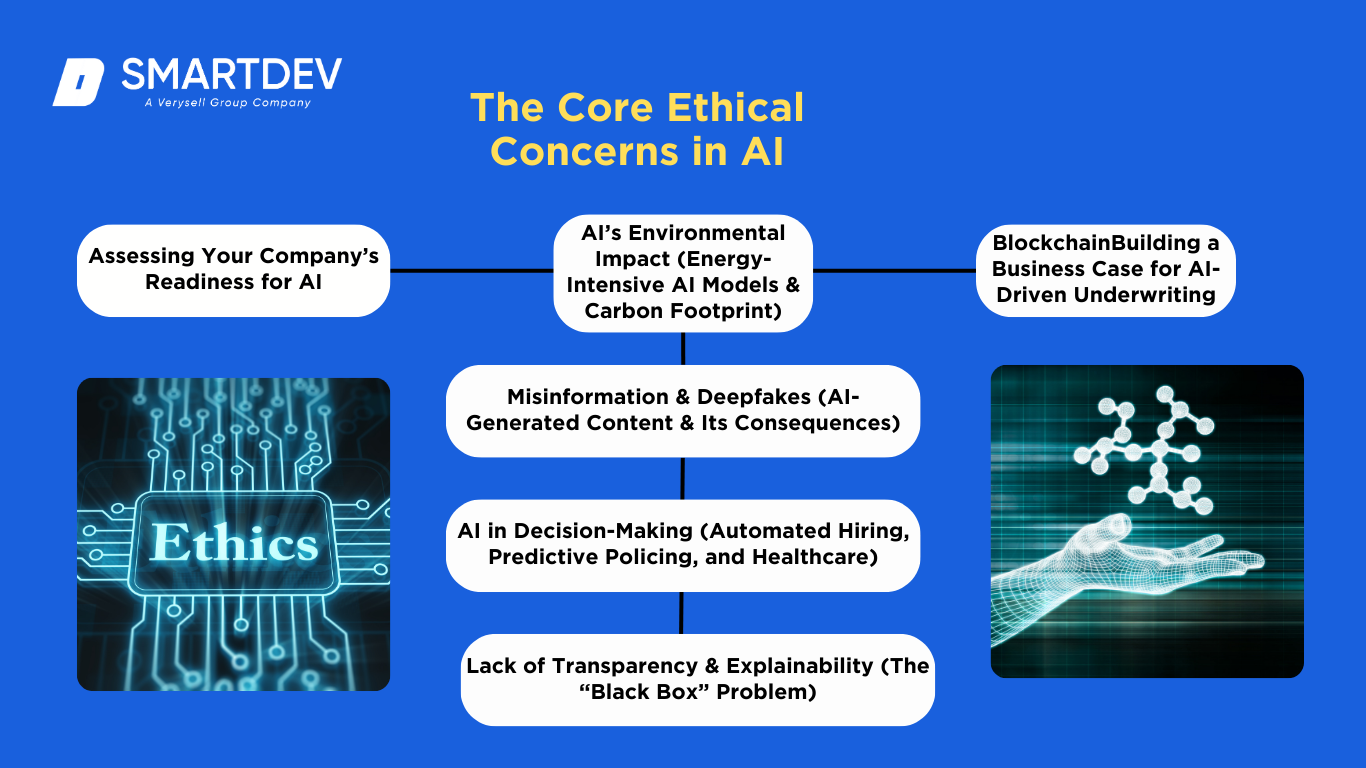

The Core Ethical Concerns in AI

AI technologies bring a host of ethical challenges. Business and policy leaders should understand the core AI ethics concerns in order to manage risk and build trustworthy AI systems.

Below are some of the most pressing concerns:

Bias & Discrimination in AI Models

One of the top AI ethics concerns is algorithmic bias – when AI systems unfairly favor or disadvantage certain groups.

AI models learn from historical data, which can encode human prejudices. As a result, AI may reinforce racial, gender, or socioeconomic discrimination if not carefully checked.

For example, a now-infamous hiring AI developed at Amazon was found to downgrade resumes containing the word “women’s,” reflecting the male dominance of its training data. In effect, the system taught itself to prefer male candidates, demonstrating how quickly bias can creep into AI.

In criminal justice, risk prediction software like COMPAS was reported to falsely label Black defendants as higher risk more often than white defendants, due to biased data and design.

These cases show that unchecked AI can perpetuate systemic biases, leading to discriminatory outcomes in hiring, lending, policing, and beyond.

Businesses must be vigilant: biased AI not only harms individuals and protected classes but also exposes companies to reputational damage and legal liability for discrimination.

AI & Privacy Violations (Data Security, Surveillance)

AI’s hunger for data raises major privacy concerns. Advanced AI systems often rely on vast amounts of personal data – from purchase histories and social media posts to faces captured on camera – which can put individual privacy at risk.

A prominent example is facial recognition technology: the startup Clearview AI scraped billions of online photos to build a face-identification database without people’s consent. This enabled invasive surveillance capabilities, sparking global outrage and legal action.

Regulators found Clearview’s practices violated privacy laws by building a “massive faceprint database” and enabling covert surveillance of citizens.

Such incidents highlight how AI can infringe on data protection rights and expectations of privacy. Businesses deploying AI must safeguard data security and ensure compliance with privacy regulations (like GDPR or HIPAA).

Ethical concerns also arise with workplace AI surveillance – for instance, monitoring employees’ communications or using camera analytics to track productivity can cross privacy lines and erode trust.

Respecting user consent, securing data against breaches, and limiting data collection to what’s truly needed are all critical steps toward responsible AI that honors privacy.
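Data minimization and pseudonymization can be sketched in a few lines. This is a minimal illustration, not a compliance recipe: the field names, the `ALLOWED_FIELDS` allow-list, and the `minimize_record` helper are hypothetical, and the sketch assumes the secret key lives in a proper secrets manager. It drops every field the model does not need and replaces the raw identifier with a keyed HMAC-SHA256 token.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this illustrative model actually needs.
ALLOWED_FIELDS = {"age_band", "region", "purchase_count"}

def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and replace the raw user ID with a keyed pseudonym."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    # HMAC-SHA256 with a secret key: the same user always maps to the same token,
    # but the token cannot be recomputed or linked back without the key.
    minimized["user_token"] = hmac.new(
        secret_key, record["user_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return minimized

raw = {
    "user_id": "alice@example.com",
    "full_name": "Alice Example",
    "age_band": "30-39",
    "region": "EU",
    "purchase_count": 12,
    "face_photo_url": "https://example.com/alice.jpg",
}
clean = minimize_record(raw, secret_key=b"replace-with-a-managed-secret")
print(sorted(clean))  # ['age_band', 'purchase_count', 'region', 'user_token']
```

Pseudonymized data generally still counts as personal data under GDPR, because whoever holds the key can re-link it, so this reduces risk rather than taking the data out of regulatory scope.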

Misinformation & Deepfakes (AI-Generated Content)

AI is now capable of generating highly realistic fake content – so-called deepfakes in video, audio, and text. This creates a potent misinformation threat. AI-generated fake news articles, bogus images, or impersonated videos can spread rapidly online, misleading the public. The consequences for businesses and society are severe: erosion of trust in media, manipulation of elections, and new forms of fraud. During recent elections, AI-generated misinformation was flagged as a top concern, with the World Economic Forum warning that AI is amplifying manipulated content that could “destabilize societies”.

For instance, deepfake videos of politicians saying or doing things they never did have circulated, forcing companies and governments to devise new detection and response strategies. The AI ethics concern here is twofold – preventing the malicious use of generative AI to deceive, and ensuring algorithms (like social media recommender systems) do not recklessly amplify false content. Companies in the social media and advertising space, in particular, bear responsibility to detect deepfakes, label or remove false content, and avoid profiting from misinformation. Failing to address AI-driven misinformation can lead to public harm and regulatory backlash, so it’s a concern that business leaders must treat with urgency.

AI in Decision-Making (Automated Bias in Hiring, Policing, Healthcare)

Organizations increasingly use AI to automate high-stakes decisions – which brings efficiency, but also ethical peril. Automated decision-making systems are used in hiring (screening job applicants), law enforcement (predictive policing or sentencing recommendations), finance (credit scoring), and healthcare (diagnosis or treatment suggestions). The concern is that these AI systems may make unfair or incorrect decisions that significantly impact people’s lives, without proper oversight. For example, some companies deployed AI hiring tools to rank candidates, only to find the algorithms were replicating biases (as in the Amazon case of gender bias).

In policing, predictive algorithms that flag individuals likely to reoffend have been criticized for racial bias: ProPublica’s investigation into COMPAS found that Black defendants were far more likely than white defendants to be misclassified as high risk, a disparity critics attributed to the data and design behind the score. In healthcare, an AI system might inadvertently prioritize treatment for one group over another if the training data underrepresents certain populations. Automation bias is also a risk: humans may trust an AI’s decision too much and fail to double-check it, even when it is wrong. A lack of transparency (discussed next) aggravates this.
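The disparity ProPublica reported is a gap in false positive rates: among people who did not go on to reoffend, how often was each group labeled high risk? A minimal sketch of that audit, using made-up toy data and a hypothetical `false_positive_rates` helper:

```python
from collections import defaultdict

def false_positive_rates(records):
    """Per-group false positive rate: the share of people who did NOT reoffend
    but were still labeled high risk."""
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                false_pos[group] += 1
    return {g: false_pos[g] / negatives[g] for g in negatives}

# Made-up toy data: (group, predicted_high_risk, actually_reoffended)
toy = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, True),
]
rates = false_positive_rates(toy)
print({g: round(r, 2) for g, r in rates.items()})  # {'A': 0.67, 'B': 0.33}
```

This is only one fairness metric. When base rates differ across groups, a risk score generally cannot equalize both false positive and false negative rates across groups while also being equally calibrated, which is part of why the COMPAS debate remains contested.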

Businesses using AI for decisions must implement safeguards: human review of AI outputs, bias testing, and clear criteria for when to override the AI. The goal should be to use AI as a decision support tool – not a black-box judge, jury, and executioner.
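One way to encode “clear criteria for when to override the AI” is an explicit routing rule that decides which model suggestions a person must review. The sketch below is illustrative only: the `ModelOutput` fields, the 0.90 threshold, and the rule that every rejection gets human review are assumptions, not an industry standard.

```python
from dataclasses import dataclass

@dataclass
class ModelOutput:
    applicant_id: str
    decision: str      # "approve" or "reject", as suggested by the model
    confidence: float  # model's confidence in its own suggestion, 0.0 to 1.0

# Hypothetical policy threshold; a real one would be set from validation data.
MIN_CONFIDENCE_FOR_AUTO = 0.90

def route(output: ModelOutput) -> str:
    """Decide whether a model suggestion may be applied automatically
    or must go to a human reviewer."""
    if output.decision == "reject":
        return "human_review"  # adverse outcomes always get a person
    if output.confidence < MIN_CONFIDENCE_FOR_AUTO:
        return "human_review"  # the model is unsure, so escalate
    return "auto_apply"        # confident and favorable

print(route(ModelOutput("a1", "approve", 0.97)))  # auto_apply
print(route(ModelOutput("a2", "reject", 0.99)))   # human_review
print(route(ModelOutput("a3", "approve", 0.62)))  # human_review
```

Sending every adverse decision to a person, regardless of confidence, keeps the AI in a decision-support role for exactly the cases where an error costs the affected person the most.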

Lack of Transparency & Explainability (The “Black Box” Problem)

Many AI models, especially complex deep learning networks, operate as black boxes – their inner workings and decision logic are not easily interpretable to humans. This lack of transparency poses a serious ethical concern: if neither users nor creators can explain why an AI made a certain decision, how can we trust it or hold it accountable?

For businesses, this is more than an abstract worry. Imagine a bank denying a customer’s loan via an AI algorithm – under regulations and basic ethics, the customer deserves an explanation. But if the model is too opaque, the bank may not be able to justify the decision, leading to compliance issues and customer mistrust. Transparency failings have already caused backlash; for instance, when Apple’s credit card algorithm was accused of offering lower credit limits to women, the lack of an explanation inflamed criticisms of bias.

Explainability is crucial in sensitive domains like healthcare (doctors need to understand an AI diagnosis) and criminal justice (defendants should know why an AI tool labeled them high risk). The ethical AI principle of “interpretability” calls for designing systems that can provide human-understandable reasons for their outputs. Explainable AI (XAI) techniques can help shed light on black-box models, and some regulations (e.g., the EU’s AI Act) impose transparency obligations.
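For simple models, explanations can be exact. In a linear scoring model, each feature’s contribution relative to a reference applicant is `weight * (value - baseline)`, so the features that pulled a score down the most can be reported as reasons for a denial. The weights, baseline, feature names, and `reason_codes` helper below are hypothetical; for nonlinear models, tools such as SHAP or LIME approximate this kind of attribution.

```python
# Hypothetical linear credit-scoring model: score = bias + sum(weight * feature).
WEIGHTS = {
    "debt_to_income": -3.0,
    "missed_payments": -1.5,
    "years_of_credit_history": 0.4,
}
# Assumed "typical approved applicant" used as the reference point.
BASELINE = {
    "debt_to_income": 0.30,
    "missed_payments": 0.0,
    "years_of_credit_history": 8.0,
}

def reason_codes(applicant: dict, top_n: int = 2) -> list[str]:
    """Rank features by how much they pulled this applicant's score below the baseline.
    For a linear model, weight * (value - baseline) decomposes the score gap exactly."""
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name]) for name in WEIGHTS
    }
    # Keep only features that lowered the score, most negative first.
    negatives = sorted((c, name) for name, c in contributions.items() if c < 0)
    return [name for _, name in negatives[:top_n]]

applicant = {"debt_to_income": 0.55, "missed_payments": 2, "years_of_credit_history": 3}
print(reason_codes(applicant))  # ['missed_payments', 'years_of_credit_history']
```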

Ultimately, people have the right to know how AI decisions affecting them are made – and businesses that prioritize explainability will be rewarded with greater stakeholder trust.

AI’s Environmental Impact (Energy & Carbon Footprint)

While often overlooked, the environmental impact of AI is an emerging ethics concern for businesses committed to sustainability. Training and deploying large AI models require intensive computational resources, which consume significant electricity and can produce a sizable carbon footprint. A striking example: training OpenAI’s GPT-3 model (with 175 billion parameters) consumed about 1,287 MWh of electricity and emitted an estimated 500+ metric tons of carbon dioxide – equivalent to the annual emissions of over 100 gasoline cars.
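The car comparison above is simple arithmetic, which the sketch below reproduces. Two inputs are assumptions rather than figures from this article: an average grid carbon intensity of 0.429 t CO2e per MWh, and about 4.6 t CO2 per year for a typical passenger car (the U.S. EPA’s commonly cited figure).

```python
# Back-of-envelope check of the GPT-3 training figures quoted above.
TRAINING_ENERGY_MWH = 1_287       # figure quoted in the text
GRID_INTENSITY_T_PER_MWH = 0.429  # assumption: t CO2e emitted per MWh on the grid used
CAR_T_PER_YEAR = 4.6              # assumption: typical passenger car, t CO2 per year

emissions_t = TRAINING_ENERGY_MWH * GRID_INTENSITY_T_PER_MWH
car_years = emissions_t / CAR_T_PER_YEAR

print(f"{emissions_t:.0f} t CO2e ≈ {car_years:.0f} car-years")  # 552 t CO2e ≈ 120 car-years
```

Because emissions scale linearly with grid intensity, the same training run on a low-carbon grid would emit far less, which is why the choice of data center and energy source matters alongside model size.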

As AI models grow more complex (GPT-4, etc.), their energy usage soars, raising questions about carbon emissions and even water consumption for cooling data centers. For companies adopting AI at scale, there is a corporate social responsibility to consider these impacts. Energy-intensive AI not only conflicts with climate goals but can also be costly as energy prices rise.

Fortunately, this ethics concern comes with actionable solutions: businesses can pursue more energy-efficient model architectures, use cloud providers powered by renewables, and carefully evaluate whether the benefits of a giant AI model outweigh its environmental cost. By treating AI’s carbon footprint as part of ethical risk assessment, organizations align their AI strategy with broader sustainability commitments.

In sum, responsible AI isn’t just about fairness and privacy – it also means developing AI in an eco-conscious way to ensure technology advancement doesn’t come at the expense of our planet.

AI Ethics Concerns Across Different Industries

AI ethics challenges manifest in unique ways across industries. A solution appropriate in one domain might be inadequate in another, so business leaders should consider the specific context.

Here’s a look at how AI ethics concerns play out in various sectors:

AI in Healthcare: Ethical Risks in Medical AI & Patient Privacy

In healthcare, AI promises better diagnostics and personalized treatment, but errors or biases can quite literally be a matter of life and death.

Ethical concerns in medical AI include accuracy and bias (an AI diagnostic tool trained mostly on one demographic may misdiagnose others, for example by under-detecting diseases in minority patients); accountability (if an AI system makes a harmful recommendation, is the doctor or the software vendor responsible?); and patient privacy (health data is highly sensitive, and using it to train AI or deploying AI in patient monitoring can intrude on privacy if not properly controlled).

For example, an AI system used to prioritize patients for kidney transplants was found to systematically give lower urgency scores to Black patients due to biased historical data, raising equity issues in care. Moreover, healthcare AI often operates in a black-box manner, which is problematic – doctors need to explain to patients why a treatment was recommended.

Privacy violations are another worry: some hospitals use AI to analyze patient images or genetic data, and without strong data governance there is a risk of exposing patient information. To address these risks, healthcare organizations are adopting “AI ethics committees” to review algorithms for bias and are requiring that AI tools provide explanations that clinicians can validate.

Maintaining informed consent (patients should know when AI is involved in their care) and adhering to regulations like HIPAA for data protection are also key for ethically deploying AI in medicine.

AI in Finance: Algorithmic Trading, Loan Approvals & Bias in Credit Scoring

The finance industry has embraced AI for everything from automated trading to credit scoring and fraud detection. These applications come with ethical pitfalls. In algorithmic trading, AI systems execute trades at high speed and volume; while this can increase market efficiency, it also raises concerns about market manipulation and flash crashes triggered by runaway algorithms. Financial institutions must ensure their trading AIs operate within ethical and legal bounds, with circuit-breakers to prevent excessive volatility.
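Exchanges operate their own market-wide circuit breakers; the sketch below instead shows a hypothetical firm-side safeguard for a trading algorithm. The `CircuitBreaker` class and its 5% threshold are illustrative assumptions: it halts trading when the price moves too far from a reference point and stays halted until a person resets it.

```python
class CircuitBreaker:
    """Halt an automated trading strategy when the price moves too far from a reference.
    The threshold is a hypothetical illustration value."""

    def __init__(self, max_move_pct: float = 5.0):
        self.max_move_pct = max_move_pct
        self.reference_price = None  # set by the first observed price
        self.tripped = False

    def check(self, price: float) -> bool:
        """Return True if trading may continue at this price."""
        if self.tripped:
            return False  # stays halted until a human calls reset()
        if self.reference_price is None:
            self.reference_price = price
            return True
        move_pct = abs(price - self.reference_price) / self.reference_price * 100
        if move_pct > self.max_move_pct:
            self.tripped = True
            return False
        return True

    def reset(self, new_reference: float) -> None:
        """Manual reset after human review."""
        self.reference_price = new_reference
        self.tripped = False

breaker = CircuitBreaker(max_move_pct=5.0)
for p in [100.0, 102.0, 98.5, 93.0, 101.0]:
    print(p, breaker.check(p))  # True, True, True, False, False
```

Requiring a manual `reset` is the deliberate design choice: the algorithm cannot talk itself back into trading during the same volatility that tripped it.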

In consumer finance, AI-driven loan approval and credit scoring systems have sometimes been found to exhibit discriminatory bias. In the Apple Card controversy, for instance, users alleged that women received significantly lower credit limits than men with similar financial profiles. Such bias can violate fair lending laws and reinforce inequality.

Additionally, lack of explainability in credit decisions can leave borrowers in the dark about why they were denied, which is both unethical and potentially non-compliant with regulations. There’s also the issue of privacy: fintech companies use AI to analyze customer data for personalized offers, but using personal financial data without clear consent can breach trust.

Finance regulators are increasingly scrutinizing AI models for fairness and transparency – for example, the U.S. Consumer Financial Protection Bureau has warned that “black box” algorithms are not a shield against accountability. Financial firms, therefore, are starting to conduct bias audits on their AI (to detect disparate impacts on protected classes) and to implement explainable AI techniques so that every automated decision on lending or insurance can be justified to the customer and regulators.

Ethical AI in finance ultimately means balancing innovation with fairness, transparency, and robust risk controls.

AI in Law Enforcement: Predictive Policing, Surveillance & Human Rights

Perhaps nowhere are AI ethics concerns as contentious as in law enforcement and security. Police and security agencies are deploying AI for predictive policing – algorithms that analyze crime data to predict where crimes might occur or who might reoffend. The ethical quandary is that these systems can reinforce existing biases in policing data (over-policing of certain neighborhoods, for instance) and lead to unjust profiling of communities of color.

In the U.S., predictive policing tools have been criticized for unfairly targeting minority neighborhoods due to biased historical crime data, effectively automating racial bias under the veneer of tech. This raises serious human rights issues, as people could be surveilled or even arrested due to an algorithm’s suggestion rather than actual wrongdoing.

Additionally, facial recognition AI is used by law enforcement to identify suspects, but studies have found it is much less accurate for women and people with darker skin – leading to false arrests in some high-profile cases of mistaken identity.

The use of AI surveillance (from recognizing faces in public CCTV to tracking individuals via their digital footprint) must be balanced against privacy rights and civil liberties. Authoritarian uses of AI in law enforcement (such as invasive social media monitoring or a social credit system) demonstrate how AI can enable digital oppression.

Businesses selling AI to government agencies also face ethics scrutiny – for example, tech employees at some companies have protested projects that provide AI surveillance tools to governments perceived as violating human rights.

The key is implementing AI with safeguards: ensuring human oversight over any AI-driven policing decisions, rigorous bias testing and retraining of models, and clear accountability and transparency to the public. Some jurisdictions have even banned police use of facial recognition due to these concerns.

At a minimum, law enforcement agencies should follow strict ethical guidelines and independent audits when leveraging AI, to prevent technology from exacerbating injustice.

AI in Education: Grading Bias, Student Privacy & Risks in Personalized Learning

Education is another field seeing rapid AI adoption, from automated grading systems to personalized learning apps and proctoring tools. With these come ethical concerns around fairness, accuracy, and privacy for students. AI-powered grading systems (used for essays or exams) have faced backlash when they were found to grade unevenly. For example, in 2020 an algorithm used in the UK to award exam grades, after the COVID-19 pandemic forced exams to be cancelled, infamously downgraded many students from disadvantaged schools, leading to a nationwide outcry and a policy reversal.

This highlighted the risk of bias in educational AI, where a one-size-fits-all model may not account for the diverse contexts of learners, unfairly impacting futures (university admissions, scholarships) based on flawed algorithmic judgments.

Personalized learning platforms use AI to tailor content to each student, which can be beneficial, but if the algorithm’s recommendations pigeonhole students or reinforce biases (e.g., suggesting different career paths based on gender), it can limit opportunities. Another major concern is student privacy: EdTech AI often collects data on student performance, behavior, even webcam video during online exams. Without strict controls, this data could be misused or breached.

There have been controversies over remote exam proctoring AI that tracks eye movements and environmental noise, which some argue is invasive and prone to false accusations of cheating (e.g., flagging a student who looks away because of a disability). Schools and education companies must navigate these issues by being transparent about AI use, ensuring AI decisions are reviewable by human educators, and protecting student data.

Involving teachers and ethicists in the design of educational AI can help align the technology with pedagogical values and equity. Ultimately, AI should enhance learning and uphold academic integrity without compromising student rights or treating learners unfairly.

AI in Social Media: Fake News, Echo Chambers & Algorithmic Manipulation

Social media platforms run on AI algorithms that decide what content users see – and this has sparked ethical debates about their influence on society. Content recommendation algorithms can create echo chambers that reinforce users’ existing beliefs, contributing to political polarization.

They may also inadvertently promote misinformation or extreme content because sensational posts drive more engagement – a classic ethical conflict between profit (ad revenue from engagement) and societal well-being.

We’ve seen Facebook, YouTube, Twitter and others come under fire for algorithmic feeds that amplified fake news during elections or enabled the spread of harmful conspiracy theories.

The Cambridge Analytica scandal revealed how harvested personal data and algorithmic targeting were used in attempts to sway voter opinions, raising questions about the ethical limits of AI in political advertising.

Deepfakes and bots on social media (AI-generated profiles and posts) further muddy the waters, as they can simulate grassroots movements or public consensus, deceiving real users.

From a business perspective, social media companies risk regulatory action if they cannot control AI-driven misinformation and protect users (indeed, many countries are now considering laws forcing platforms to take responsibility for content recommendations).

User trust is also at stake – if people feel the platform’s AI is manipulating them or violating their privacy by micro-targeting ads, they may flee.

Social media companies have begun implementing AI ethics measures like improved content moderation with AI-human hybrid systems, down-ranking false content, and providing users more control (e.g., the option to see a chronological feed instead of an algorithmic one).

However, the tension remains: algorithms optimized purely for engagement can conflict with the public interest.

For responsible AI, social media firms will need to continuously adjust their algorithms to prioritize quality of information and user well-being, and be transparent about how content is ranked.
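The tension between engagement and information quality can be made concrete with a toy ranking function. Everything in it is hypothetical (the `Post` fields, the equal weights, the 0.2 penalty multiplier for fact-checked false content); it simply shows how blending in a quality signal and down-ranking flagged posts changes what rises to the top.

```python
from dataclasses import dataclass

@dataclass
class Post:
    post_id: str
    predicted_engagement: float  # hypothetical predicted click/share probability, 0 to 1
    quality_score: float         # hypothetical source-reliability signal, 0 to 1
    flagged_false: bool = False  # set after fact-checker review

# Hypothetical weights; real platforms tune many more signals.
ENGAGEMENT_WEIGHT = 0.5
QUALITY_WEIGHT = 0.5
FALSE_CONTENT_PENALTY = 0.2  # multiplier applied to flagged posts

def rank(posts):
    """Order posts by a blended engagement/quality score, down-ranking flagged ones."""
    def score(p: Post) -> float:
        s = ENGAGEMENT_WEIGHT * p.predicted_engagement + QUALITY_WEIGHT * p.quality_score
        return s * FALSE_CONTENT_PENALTY if p.flagged_false else s
    return [p.post_id for p in sorted(posts, key=score, reverse=True)]

feed = [
    Post("sensational_rumor", 0.95, 0.20, flagged_false=True),
    Post("local_news", 0.40, 0.90),
    Post("celebrity_gossip", 0.70, 0.40),
]
print(rank(feed))  # ['local_news', 'celebrity_gossip', 'sensational_rumor']
```

Ranked on predicted engagement alone, the flagged rumor would come first; with the quality term and the penalty it drops to the bottom.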

Collaboration with external fact-checkers and clear labeling of AI-generated or manipulated media are also key steps to mitigate the ethical issues in this industry.

AI in Employment: Job Displacement, Automated Hiring & Workplace Surveillance

AI’s impact on the workplace raises ethical and socio-economic concerns for businesses and society. One headline issue is job displacement: as AI and automation take over tasks (from manufacturing robots to AI customer service chatbots), many workers fear losing their jobs.

While history shows technology creates new jobs as it destroys some, the transition can be painful and uneven. Business leaders face an ethical consideration in how they implement AI-driven efficiencies – will they simply cut staff to boost profit, or will they retrain and redeploy employees into new roles?

Responsible approaches involve workforce development initiatives, where companies upskill employees to work alongside AI (for example, training assembly line workers to manage and program the robots that might replace certain manual tasks).

Another area is automated hiring: aside from the bias issues discussed earlier, there’s an ethical concern about treating applicants purely as data points. Over-reliance on AI filtering can mean great candidates are screened out due to quirks in their resume or lack of conventional credentials, and candidates may not get feedback if an algorithm made the decision.

Ensuring a human touch in recruitment – e.g. AI can assist by narrowing a pool, but final decisions and interviews involve human judgment – tends to lead to fairer outcomes.

Workplace surveillance is increasingly enabled by AI too: tools exist to monitor employee computer usage, track movement or even analyze tone in communications to gauge sentiment. While companies have interests in security and productivity, invasive surveillance can violate employee privacy and create a culture of distrust.

Ethically, companies should be transparent about any AI monitoring being used and give employees a say in those practices (within legal requirements). Labor unions and regulators are paying attention to these trends, and heavy-handed use of AI surveillance could result in legal challenges or reputational harm.

In summary, AI in employment should ideally augment human workers, not arbitrarily replace or oppress them. A human-centered approach – treating employees with dignity, involving them in implementing AI changes, and mitigating negative impacts – is essential for ethically navigating AI in the workplace.

Real-World AI Ethics Failures & Lessons Learned

Nothing illustrates AI ethics concerns better than real case studies where things went wrong. Several high-profile failures have provided cautionary tales and valuable lessons for businesses on what not to do.

Let’s examine a few:

Amazon’s AI Hiring Tool & Gender Bias

The failure: Amazon developed an AI recruiting engine to automatically evaluate resumes and identify top talent. However, the system was discovered to be heavily biased against women.

Trained on a decade of past resumes (mostly from male candidates in the tech industry), the AI learned to favor male applicants. It started downgrading resumes that contained the word “women’s” (as in “women’s chess club captain”) and those from women’s colleges.

By 2015, Amazon realized the tool was not gender-neutral and was effectively discriminating against female candidates. Despite attempts to tweak the model, they couldn’t guarantee it wouldn’t find new ways to be biased, and the project was eventually scrapped.

Lesson learned: This case shows the perils of deploying AI without proper bias checks. Amazon’s intent wasn’t to discriminate – the bias was an emergent property of historical data and unchecked algorithms.

For businesses, the lesson is to rigorously test AI models for disparate impact before using them in hiring or other sensitive decisions. It’s critical to use diverse training data and to involve experts to audit algorithms for bias.
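A common first-pass test for disparate impact in hiring is the “four-fifths rule” from the U.S. Uniform Guidelines on Employee Selection Procedures: if one group’s selection rate is less than 80% of the highest group’s rate, the process warrants closer scrutiny. The sketch below applies it to made-up numbers from a hypothetical resume-screening model; the `adverse_impact_ratios` helper is illustrative.

```python
def adverse_impact_ratios(selected: dict, applicants: dict) -> dict:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {g: selected[g] / applicants[g] for g in applicants}
    best = max(rates.values())
    return {g: r / best for g, r in rates.items()}

# Made-up screening results from a hypothetical resume-ranking model.
applicants = {"men": 200, "women": 180}
advanced = {"men": 60, "women": 27}

ratios = adverse_impact_ratios(advanced, applicants)
flagged = [g for g, r in ratios.items() if r < 0.8]  # four-fifths rule of thumb
print(flagged)  # ['women']
```

The four-fifths rule is a rule of thumb, not a legal safe harbor: a ratio above 0.8 does not prove a model is fair, and small samples call for statistical significance tests as well.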

Amazon’s experience also underlines that AI should augment, not replace, human judgment in hiring; recruiters must remain vigilant and not blindly trust a scoring algorithm.

The fallout for Amazon was internal embarrassment and a public example of “what can go wrong” – other companies now cite this case to advocate for more responsible AI design.

In short: algorithmic bias can lurk in AI – find it and fix it early to avoid costly failures.

Google’s AI Ethics Controversy & Employee Pushback

The failure: In 2020, Google, a leader in AI, faced internal turmoil when a prominent AI ethics researcher, Dr. Timnit Gebru, parted ways with the company under contentious circumstances. Gebru, co-lead of Google’s Ethical AI team, had co-authored a paper highlighting risks of large language models (the kind of AI that powers Google’s search and products).

Gebru said Google pushed her out for raising ethics concerns, while Google’s official account cited disagreements over the paper’s publication process. The incident quickly became public, and over 1,200 Google employees signed a letter protesting her firing and accusing Google of censoring critical research.

This came after other controversies, such as an AI ethics council Google formed in 2019 that was dissolved due to public outcry over its member selection. The Gebru incident in particular sparked a global debate about Big Tech’s commitment to ethical AI and the treatment of whistleblowers.

Lesson learned: Google’s turmoil teaches companies that AI ethics concerns must be taken seriously at the highest levels, and those who raise them should be heard, not silenced. The employee pushback showed that a lack of transparency and accountability in handling internal ethics issues can severely damage morale and reputation.

For businesses, building a culture of ethical inquiry around AI is key – encourage your teams to question AI’s impacts and reward conscientious objectors rather than punishing them. The episode also highlighted the need for external oversight: many argued that independent ethics boards or third-party audits might have prevented the conflict from escalating.

In essence, Google’s experience is a warning that even the most advanced AI firms are not immune to ethical lapses. The cost was a hit to Google’s credibility on responsible AI. Organizations should therefore integrate ethics into their AI development process and ensure leadership supports that mission, to avoid public controversies and loss of trust.

Clearview AI & the Privacy Debate over Facial Recognition

The failure: Clearview AI, a facial recognition startup, built a controversial tool by scraping over 3 billion photos from social media and websites without permission. It created an app allowing clients (including law enforcement) to upload a photo of a person and find matches from the internet, essentially eroding anonymity.

When The New York Times exposed Clearview in 2020, a firestorm ensued over privacy and consent. Regulators in multiple countries found that Clearview violated privacy laws, and in the United States the company was sued in Illinois under the Biometric Information Privacy Act, ultimately agreeing in a settlement to limits on selling its service.

Clearview was hit with multimillion-dollar fines in Europe for unlawful data processing. The public was alarmed that anyone’s photos (your Facebook or LinkedIn profile, for example) could be used to identify and track them without their knowledge. This case became the poster child for AI-driven surveillance gone too far.

Lesson learned: Clearview AI illustrates that just because AI can do something does not mean it should. From an ethics and business standpoint, ignoring privacy norms can lead to severe backlash and legal consequences. Companies working with facial recognition or biometric AI should obtain consent for data use and ensure compliance with regulations; a failure to do so can sink a business model.

Clearview’s troubles also prompted tech companies like Google and Facebook to demand that it stop scraping their data. The episode emphasizes the importance of incorporating privacy-by-design in AI products. For policymakers, it was a wake-up call that stronger rules are needed for AI surveillance tech.

The lesson for businesses is clear: the societal acceptance of AI products matters. If people feel an AI application violates their privacy or human rights, they will push back hard (through courts, public opinion, and regulation). Responsible AI requires balancing innovation with respect for individual privacy and ethical boundaries. Those who don’t find that balance, as Clearview learned, will face steep repercussions.

AI-Generated Misinformation During Elections

The failure: In recent election cycles, AI has been used (or misused) to generate misleading content, raising concerns about the integrity of democratic processes. One example came during the many national elections held in 2024, when observers found dozens of AI-generated images and deepfake videos circulating on social media to smear candidates or sow confusion. In one case, a deepfake video falsely showed a presidential candidate making inflammatory statements; it was quickly debunked, but not before garnering thousands of views.

Similarly, networks of AI-powered bots have been deployed to flood discussion forums with propaganda. While it’s hard to pinpoint a single election “failure” attributable solely to AI, the growing volume of AI-generated disinformation is seen as a failure of tech platforms to stay ahead of bad actors. The concern became so great that experts and officials warned of a “deepfake danger” prior to major elections, and organizations like the World Economic Forum labeled AI-driven misinformation as a severe short-term global risk.

Lesson learned: The spread of AI-generated election misinformation teaches stakeholders – especially tech companies and policymakers – that proactive measures are needed to defend truth in the age of AI. Social media companies have learned they must improve AI detection systems for fake content and coordinate with election authorities to remove or flag deceptive media swiftly.

There’s also a lesson in public education: citizens are now urged to be skeptical of sensational media and to double-check sources, essentially becoming fact-checkers against AI fakes. For businesses, if you’re in the social media, advertising, or media sector, investing in content authentication technologies (like watermarks for genuine content or blockchain records for videos) can be an ethical differentiator.

Politically, this issue has spurred calls for stronger regulation of political ads and deepfakes. In sum, the battle against AI-fueled misinformation in elections highlights the responsibility of those deploying AI to anticipate misuse. Ethical AI practice isn’t only about your direct use-case, but also considering how your technology could be weaponized by others – and taking steps to mitigate that risk.

Tesla’s Autopilot & the Ethics of AI in Autonomous Vehicles

The failure: Tesla’s Autopilot feature – an AI system that assists in driving – has been involved in several accidents, including fatal ones, which raised questions about the readiness and safety of semi-autonomous driving technology. One widely reported incident from 2016 involved a Tesla in Autopilot mode that failed to recognize a tractor-trailer crossing the highway, resulting in a fatal crash. Investigations revealed that the driver-assist system wasn’t designed for the road conditions encountered, yet it was not prevented from operating there.

There have been other crashes where drivers overly trusted Autopilot and became inattentive, despite Tesla’s warnings to stay engaged. Ethically, these incidents highlight the gray area between driver responsibility and manufacturer responsibility. Tesla’s marketing of the feature as “Autopilot” has been criticized as possibly giving drivers a false sense of security.

In 2023, after a U.S. National Highway Traffic Safety Administration investigation into whether Autopilot’s design contributed to crashes, Tesla issued a recall that was delivered as an over-the-air software update.

Lesson learned: The Tesla Autopilot case underscores that safety must be paramount in AI deployment, and transparency about limitations is critical. When lives are at stake, as in transportation, releasing AI that isn’t thoroughly proven safe is ethically problematic. Tesla (and other autonomous vehicle companies) learned to add more driver monitoring to ensure humans pay attention, and to clarify in documentation that these systems are assistive and not fully self-driving.

Another lesson is about accountability: after early investigations blamed “human error,” later reviews also blamed Tesla for allowing usage outside intended conditions. This indicates that companies will share blame if their AI encourages misuse. Manufacturers need to incorporate robust fail-safes – for example, not allowing Autopilot to operate on roads it isn’t designed for, or handing control back to the driver well before a system’s performance limit is reached.
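
To make those two fail-safes concrete, here is a minimal Python sketch of operational-design-domain gating. Everything in it is hypothetical: the road-type allowlist, the `perception_confidence` score, and the thresholds are assumptions for illustration, not how Tesla or any other manufacturer implements driver assistance. It shows refusing to engage outside validated conditions, and requesting a handover while there is still a margin above the system’s limit.

```python
from dataclasses import dataclass

# Hypothetical allowlist: road types the assist feature was validated for.
VALIDATED_ROAD_TYPES = {"divided_highway"}


@dataclass
class DrivingContext:
    road_type: str
    perception_confidence: float  # hypothetical 0.0-1.0 self-assessment score


def assist_state(ctx: DrivingContext,
                 min_confidence: float = 0.6,
                 handover_margin: float = 0.2) -> str:
    """Decide whether the (hypothetical) assist feature may operate.

    Returns "unavailable" outside validated conditions, "disengage" below the
    hard confidence floor, "request_handover" inside the safety margin above
    that floor, and "engaged" otherwise.
    """
    if ctx.road_type not in VALIDATED_ROAD_TYPES:
        # Refuse to operate on roads the system was not designed for.
        return "unavailable"
    if ctx.perception_confidence < min_confidence:
        return "disengage"
    if ctx.perception_confidence < min_confidence + handover_margin:
        # Hand control back early, before the performance limit is reached.
        return "request_handover"
    return "engaged"


print(assist_state(DrivingContext("city_street", 0.95)))      # unavailable
print(assist_state(DrivingContext("divided_highway", 0.95)))  # engaged
print(assist_state(DrivingContext("divided_highway", 0.7)))   # request_handover
```

A real system would also need timing guarantees for the handover and hysteresis so the state does not flicker near a threshold; the sketch only shows the ordering of the checks.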

Ethically, communicating clearly with customers about what the AI can and cannot do is essential (no overhyping). For any business deploying AI in products, Tesla’s experience is a reminder to expect the unexpected and design with a “safety first” mindset. Test AI in diverse scenarios, monitor it continually in the field, and if an ethical or safety issue arises, respond quickly (e.g., through recalls, updates, or even disabling features) before more harm occurs.

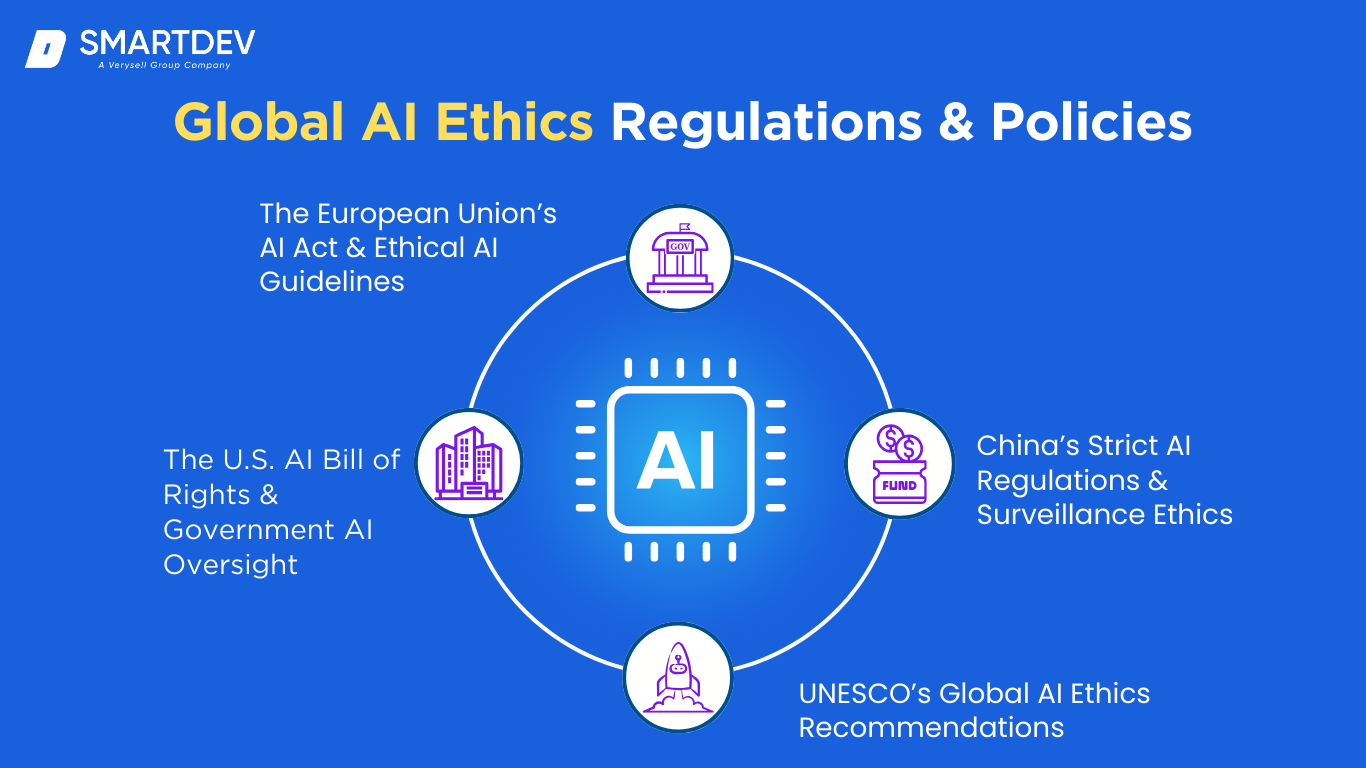

Global AI Ethics Regulations & Policies

Around the world, governments and standards organizations are crafting frameworks to ensure AI is developed and used ethically. These policies are crucial for businesses to monitor, as they set the rules of the road for AI innovation.

Here are some major global initiatives addressing AI ethics concerns:

The European Union’s AI Act & Ethical AI Guidelines

The EU has taken the lead in AI regulation with its AI Act, the first comprehensive legal framework for AI, which entered into force in August 2024 with obligations phasing in over the following years. The AI Act takes a risk-based approach: it categorizes AI systems by risk level (unacceptable risk, high risk, limited risk, minimal risk) and imposes requirements accordingly. Notably, it outright bans certain AI practices deemed too harmful – for example, social scoring systems like China’s or real-time biometric surveillance in public spaces (with narrow exceptions).

High-risk AI (such as algorithms used in hiring, credit, law enforcement, etc.) will face strict obligations for transparency, risk assessment, and human oversight. The goal is to ensure trustworthy AI that upholds EU values and fundamental rights. Companies deploying AI in Europe will have to comply or face hefty fines (similar to how GDPR enforced privacy).

Additionally, the EU has non-binding Ethics Guidelines for Trustworthy AI (published in 2019 by the European Commission’s High-Level Expert Group on AI), which outline principles like transparency, accountability, privacy, and societal well-being; these have influenced the AI Act’s approach. For business leaders, the key takeaway is that the EU expects AI to have “ethical guardrails,” and compliance will require diligence in areas like documentation of algorithms, bias mitigation, and enabling user rights (such as explanations of AI decisions).

The AI Act’s requirements take effect in stages, and forward-looking companies are already aligning their AI systems with its provisions to avoid disruptions. Europe’s regulatory push is a sign that ethical AI is becoming enforceable law.

The U.S. AI Bill of Rights & Government AI Oversight

In the United States, while there isn’t yet an AI-specific law as sweeping as the EU’s, there are important initiatives signaling the policy direction. In late 2022, the White House Office of Science and Technology Policy introduced a Blueprint for an AI Bill of Rights – a set of five guiding principles for the design and deployment of AI systems. These principles include: Safe and Effective Systems (AI should be tested for safety), Algorithmic Discrimination Protections (AI should not discriminate unfairly), Data Privacy (users should have control over their data, and privacy should be protected), Notice and Explanation (people should know when an AI is being used and understand its decisions), and Human Alternatives, Consideration, and Fallback (there should be human options and the ability to opt out of AI in critical scenarios).

While this “AI Bill of Rights” is not law, it provides a policy blueprint for federal agencies and companies to follow. We’re also seeing increased oversight of AI through existing laws – for example, the Equal Employment Opportunity Commission (EEOC) is looking at biased hiring algorithms under anti-discrimination laws, and the Federal Trade Commission (FTC) has warned against “snake oil” AI products, implying it will use consumer protection laws against false AI claims or harmful practices.

Moreover, sector-specific oversight is emerging: the FDA is developing guidance for AI in medical devices, and financial regulators are examining the use of AI in banking. Members of Congress have proposed various bills on AI transparency and accountability, though none has passed yet.

For businesses operating in the U.S., the lack of a single law doesn’t mean a lack of oversight – authorities are applying existing regulations to AI impacts (e.g., a biased AI decision can still violate civil rights law). So aligning with the spirit of the AI Bill of Rights now – making AI systems fair, transparent, and controllable – is a wise way to prepare for future, likely more formal, U.S. regulation.

China’s Strict AI Regulations & Surveillance Ethics

China has a very active regulatory environment for AI, reflecting its government’s desire to both foster AI growth and maintain control over its societal impacts. Unlike Western approaches that emphasize individual rights, China’s AI governance is intertwined with its state priorities (including social stability and party values). In recent years, China implemented pioneering rules such as the “Internet Information Service Algorithmic Recommendation Management Provisions” (effective March 2022) which require companies to register their algorithms with authorities, be transparent about their use, and not engage in practices that endanger national security or social order.

These rules also mandate options for users to disable recommendation algorithms and demand that algorithms “promote positive energy” (aligned with approved content). In early 2023, China’s Deep Synthesis Provisions took effect to regulate deepfakes, requiring that AI-generated media be clearly labeled and not be used to spread false information, or else face legal penalties. Later in 2023, China also adopted interim measures for generative AI services (like chatbots), requiring outputs to reflect core socialist values and not undermine state power.

On the ethical front, while China heavily uses AI for surveillance (e.g., facial recognition tracking citizens and a nascent social credit system), it is paradoxically also concerned with ethics insofar as it affects social cohesion. For instance, China banned AI that analyzes candidates’ facial expressions in job interviews, deeming it an invasion of privacy. The government is also exploring AI ethics guidelines academically, but enforcement is mostly via strict control and censorship.

For companies operating in China or handling Chinese consumer data, compliance with these detailed regulations is mandatory – algorithms must have “transparency” in the sense of being known to regulators, and content output by AI is tightly watched. The ethical debate here is complex: China’s rules might prevent some harms (like deepfake fraud), but they also cement government oversight of AI and raise concerns about freedom. Nonetheless, China’s approach underscores a key point: governments can and will assert control over AI technologies to fit their policy goals, and businesses need to navigate these requirements carefully or risk being shut out of a huge market.

UNESCO’s Global AI Ethics Recommendations

At a multinational level, UNESCO has spearheaded an effort to create an overarching ethical framework for AI. In November 2021, all 193 member states of UNESCO adopted the Recommendation on the Ethics of Artificial Intelligence, the first global standard-setting instrument on AI ethics. This comprehensive document isn’t a binding law, but it provides a common reference point for countries developing national AI policies.

The UNESCO recommendation outlines values and principles such as human dignity, human rights, environmental sustainability, diversity and inclusion, and peace – essentially urging that AI be designed to respect and further these values. It calls for actions like: assessments of AI’s impact on society and the environment, education and training on ethical AI, and international cooperation on AI governance.

For example, it suggests bans on AI systems that manipulate human behavior, and safeguards against the misuse of biometric data. While high-level, these guidelines carry moral weight and influence policy. Already, we see alignment: the EU’s AI Act and various national AI strategies echo themes from the UNESCO recommendations (like risk assessment and human oversight).

For businesses and policymakers, UNESCO’s involvement signals that AI ethics is a global concern, not just a national one. Companies that operate across borders might eventually face a patchwork of regulations, but UNESCO’s framework could drive some harmonization. Ethically, it’s a reminder that AI’s impact transcends borders: issues like deepfakes, bias, and autonomous weapons are international in scope and require collaboration.

Organizations should stay aware of such global norms because they often precede concrete regulations. Embracing the UNESCO principles voluntarily can enhance a company’s reputation as an ethical leader in AI and prepare it for the evolving expectations of governments and the public worldwide.

ISO & IEEE Standards for Ethical AI

Beyond governments, standard-setting bodies like ISO (International Organization for Standardization) and IEEE (Institute of Electrical and Electronics Engineers) are developing technical standards to guide ethical AI development. These standards are not laws, but they provide best practices and can be adopted as part of industry self-regulation or procurement requirements.

ISO, through its joint ISO/IEC subcommittee SC 42 on AI, has been working on guidelines for AI governance and trustworthiness. For instance, ISO/IEC TR 24028 gives an overview of trustworthiness in AI (including robustness and reliability), and ISO/IEC 23894 provides guidance on risk management for AI, helping organizations identify and mitigate risks such as bias, errors, or security issues. By following ISO standards, a company can systematically address ethical aspects (fairness, reliability, transparency) and have documentation to show auditors or clients that due diligence was done.

IEEE has taken a very direct approach to AI ethics with its Global Initiative on Ethics of Autonomous and Intelligent Systems, which gave rise to the IEEE 7000 series of standards. These include IEEE 7001 for transparency of autonomous systems, IEEE 7002 for data privacy processes, and IEEE 7010 for assessing the well-being impact of AI, among others. A notable example is IEEE 7000-2021, a model process for engineers to address ethical concerns in system design, essentially a how-to for “ethics by design.” Another, IEEE 7003, deals with algorithmic bias considerations.

Adhering to IEEE standards can help developers build values like fairness or explainability into the technology from the ground up. Businesses are starting to seek certifications or audits against these standards to signal trustworthiness (for example, IEEE has an ethical AI certification program). The advantage of standards is that they offer concrete checklists and processes to implement abstract ethical principles.

As regulators look at enforcing AI ethics, they often reference these standards. In practical terms, a business that aligns its AI projects with ISO/IEEE guidelines is less likely to be caught off guard by new rules or stakeholder concerns. It’s an investment in quality and governance that can pay off in smoother compliance, better AI outcomes, and improved stakeholder confidence.

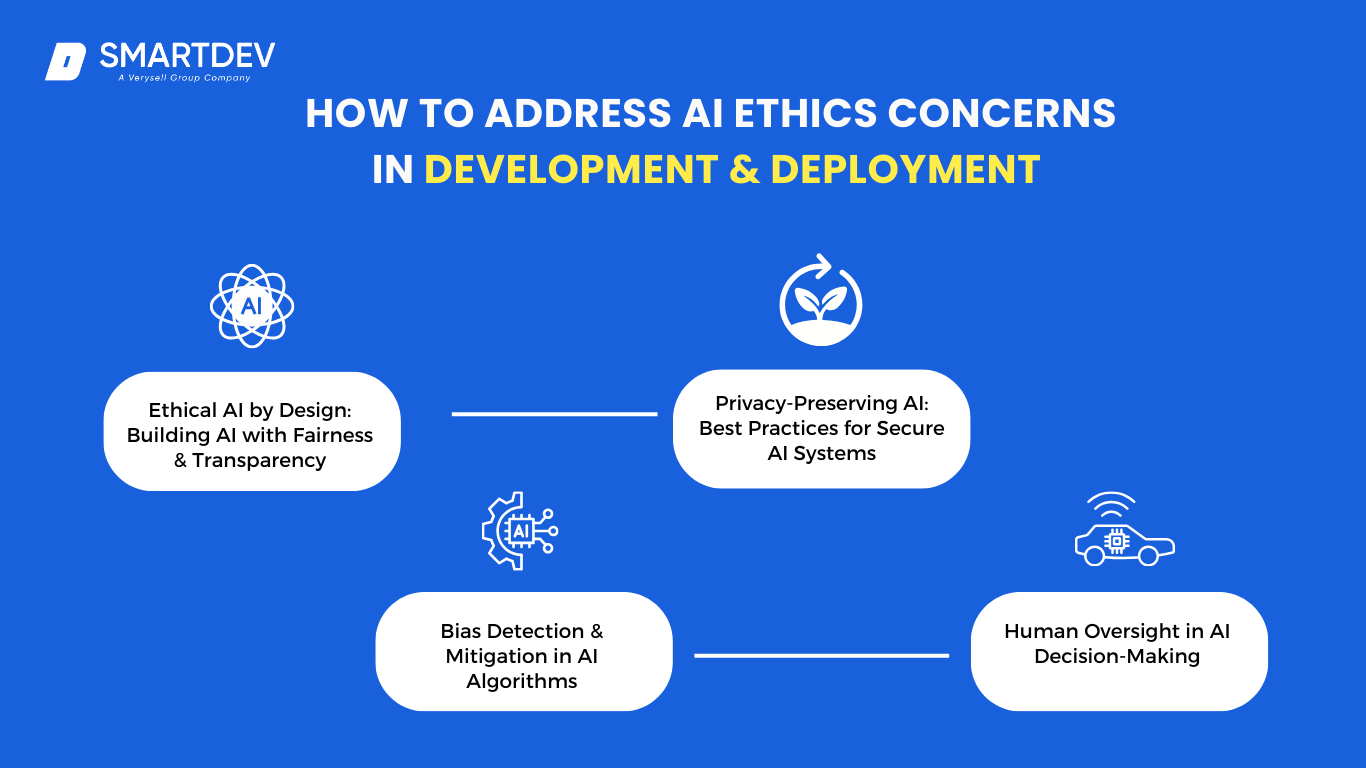

How to Address AI Ethics Concerns in Development & Deployment

Understanding AI ethics concerns is only half the battle – the other half is taking concrete steps to address these issues when building or using AI systems. For businesses, a proactive and systematic approach to ethical AI can turn a potential risk into a strength.

Here are key strategies for developing and deploying AI responsibly:

Ethical AI by Design: Building AI with Fairness & Transparency

Just as products can be designed for safety or usability, AI systems should be designed for ethics from the start. “Ethical AI by design” means embedding principles like fairness, transparency, and accountability into the AI development lifecycle. In practice, this involves setting up an AI ethics framework or charter at your organization (many companies have done so, as evidenced by the sharp rise in ethical AI charters).

Begin every AI project by identifying potential ethical risks and impacted stakeholders. For example, if you’re designing a loan approval AI, recognize the risk of discrimination and the stakeholders (applicants, regulators, the community) who must be considered. Then implement fairness criteria in model objectives – not just accuracy, but also measures to minimize bias across groups. Choose training data carefully (diverse, representative, and audited for bias before use).

Additionally, design the system to be as transparent as feasible: keep documentation of how the model was built, why certain features are used, and how it performs on different segments of data. Where possible, opt for simpler models or techniques like explainable AI that can offer reason codes for decisions. If using a complex model, consider building an explanatory companion system that can analyze the main model’s behavior.
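
One simple way to produce reason codes is to use a linear scoring model and report the features that pushed an applicant’s score furthest below a reference point. The sketch below assumes a hypothetical credit model: the feature names, weights, and baseline values are invented for illustration, and real adverse-action explanations carry their own regulatory requirements.

```python
# Hypothetical weights of a simple linear credit-scoring model (higher score = better).
WEIGHTS = {"income_k": 0.03, "debt_ratio": -2.5, "late_payments": -0.8, "years_employed": 0.1}
# Hypothetical reference applicant (e.g., population averages) used as the comparison point.
BASELINE = {"income_k": 55.0, "debt_ratio": 0.35, "late_payments": 1.0, "years_employed": 5.0}


def reason_codes(applicant: dict, top_n: int = 2) -> list:
    """Return the features that lowered this applicant's score the most
    relative to the baseline applicant, most harmful first."""
    contributions = {
        name: weight * (applicant[name] - BASELINE[name])
        for name, weight in WEIGHTS.items()
    }
    negative = sorted((value, name) for name, value in contributions.items() if value < 0)
    return [name for _, name in negative[:top_n]]


applicant = {"income_k": 40.0, "debt_ratio": 0.6, "late_payments": 4, "years_employed": 6}
print(reason_codes(applicant))  # ['late_payments', 'debt_ratio']
```

Because the model is additive, the per-feature contributions add up exactly to the score difference from the baseline. For complex models, attribution methods (SHAP is one widely used example) or a simpler surrogate model only approximate this kind of breakdown.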

Importantly, involve a diverse team in the design process – including people from different backgrounds, and even ethicists or domain experts who can spot issues developers might miss. By integrating these steps into the early design phase (rather than trying to retrofit ethics at the end), companies can avoid many pitfalls. Ethical AI by design also sends a message to employees that responsible innovation is the expectation, not an afterthought.

This approach helps create AI products that not only work well, but also align with societal values and user expectations from day one.

Bias Detection & Mitigation in AI Algorithms

Since bias in AI can be pernicious and hard to detect with the naked eye, organizations should implement formal bias detection and mitigation processes. Start by testing AI models on various demographic groups and key segments before deployment. For instance, if you have an AI that screens resumes, evaluate its recommendations for male vs. female candidates, for different ethnic groups, etc., to see if error rates or selections are uneven. Techniques like disparate impact analysis (checking whether decisions disproportionately harm a protected group) are useful.
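
A minimal disparate impact check compares selection rates between groups. The sketch below uses the four-fifths (80%) rule of thumb from the U.S. Uniform Guidelines on Employee Selection Procedures as the flagging threshold; the group labels and counts are hypothetical, and a ratio below 0.8 is a signal to investigate, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions, protected_group, reference_group):
    """Selection rate of the protected group divided by that of the reference group."""
    rates = selection_rates(decisions)
    return rates[protected_group] / rates[reference_group]


# Hypothetical screening results: group A 50/100 selected, group B 30/100.
decisions = ([("A", True)] * 50 + [("A", False)] * 50
             + [("B", True)] * 30 + [("B", False)] * 70)

ratio = disparate_impact_ratio(decisions, protected_group="B", reference_group="A")
print(f"disparate impact ratio: {ratio:.2f}")  # disparate impact ratio: 0.60
print("flag for review" if ratio < 0.8 else "within four-fifths guideline")
```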

If issues are found, mitigation is needed: this could involve retraining the model on more balanced data, or adjusting the model’s parameters or decision thresholds to correct the skew. In some cases, you might implement algorithmic techniques like re-sampling (balancing the training data), re-weighting (giving more importance to minority class examples during training), or adding fairness constraints to the model’s optimization objective (so it directly tries to achieve parity between groups).
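
Re-weighting can be sketched in a few lines. The version below follows the reweighing idea described by Kamiran and Calders (and offered as a preprocessing step in toolkits such as AI Fairness 360): each training example gets the weight P(group) × P(label) / P(group, label), so that group membership and outcome look statistically independent in the weighted data. The toy data is hypothetical; in practice the weights would be passed to a learner that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Share of the group's total weight that sits on positive labels."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


# Hypothetical training data: group A has 3/4 positive labels, group B has 1/4.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))  # 0.5 for both
```

Under-represented combinations (here, positive examples in group B) get weights above 1, and over-represented ones get weights below 1, while the total weight still equals the number of examples.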

For example, an image recognition AI that initially struggled with darker skin tones could be retrained with more diverse images and perhaps an adjusted architecture to ensure equal accuracy. Another important mitigation is feature selection – ensure that attributes that stand in for protected characteristics (zip code might proxy for race, for example) are carefully handled or removed if not absolutely necessary. Document all these interventions as part of an algorithmic accountability report.
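
A quick proxy screen asks how well a candidate feature, on its own, predicts the protected attribute. The sketch below is a hypothetical heuristic rather than a standard metric: it guesses the majority protected group for each feature value and compares that accuracy with always guessing the overall majority group. With many unique feature values the in-sample score is optimistic, so a real check would evaluate on held-out data.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, protected_values):
    """Return (accuracy from feature, baseline accuracy) for predicting the
    protected attribute by majority vote within each feature value."""
    n = len(protected_values)
    baseline = Counter(protected_values).most_common(1)[0][1] / n
    by_value = defaultdict(Counter)
    for feature, protected in zip(feature_values, protected_values):
        by_value[feature][protected] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return correct / n, baseline


# Hypothetical data: zip code lines up perfectly with group membership.
zip_codes = ["10001"] * 5 + ["20002"] * 5
group = ["X"] * 5 + ["Y"] * 5
print(proxy_strength(zip_codes, group))  # (1.0, 0.5)
```

A large gap between the two numbers, as in this example, suggests the feature is standing in for the protected attribute and deserves scrutiny before it is used.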

Moreover, bias mitigation isn’t a one-time fix; it requires ongoing monitoring. Once the AI is in production, track outcomes by demographic where feasible. If new biases emerge (say, the data stream shifts or a certain user group starts being treated differently), you need a process to catch and correct them.

There are also emerging tools and toolkits (like IBM’s AI Fairness 360, an open-source library) that provide metrics and algorithms to help with bias detection and mitigation – businesses can incorporate these into their development pipeline. By actively seeking out biases and tuning AI systems to reduce them, companies build fairer systems and also protect themselves from discrimination claims.

This work can be challenging, as perfect fairness is elusive and often context-dependent, but demonstrating a sincere, rigorous effort goes a long way in responsible AI practice.

Human Oversight in AI Decision-Making

No matter how advanced AI gets, maintaining human oversight is crucial for ethical assurance. The idea of “human-in-the-loop” is that AI should assist, not fully replace, human decision-makers in many contexts – especially when decisions have significant ethical or legal implications. To implement this, businesses can set up approval processes where AI provides a recommendation and a human validates or overrides it before action is taken. For example, an AI may flag a financial transaction as fraudulent, but a human analyst reviews the case before the customer’s card is blocked, to ensure it’s not a false positive. This kind of oversight can prevent AI errors from causing harm.
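
The fraud example can be sketched as a routing rule in which the model may only clear a transaction or escalate it; blocking a card always requires an analyst’s confirmation. The threshold value and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Routing:
    action: str  # "allow" or "human_review"
    reason: str


def route_transaction(fraud_score: float, review_threshold: float = 0.7) -> Routing:
    """The model recommends; it never blocks a card on its own."""
    if fraud_score >= review_threshold:
        return Routing("human_review", f"fraud score {fraud_score:.2f} at or above {review_threshold}")
    return Routing("allow", "fraud score below review threshold")


def final_card_status(routing: Routing, analyst_confirms_fraud: bool = False) -> str:
    """Only a human analyst's confirmation can lead to a block."""
    if routing.action == "human_review" and analyst_confirms_fraud:
        return "blocked"
    return "active"


flagged = route_transaction(0.92)
print(flagged.action)                                            # human_review
print(final_card_status(flagged, analyst_confirms_fraud=False))  # active
print(final_card_status(flagged, analyst_confirms_fraud=True))   # blocked
```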

In some cases, “human-in-the-loop” might be too slow (e.g., self-driving car decisions) – but then companies might use a “human-on-the-loop” approach, where humans supervise and can intervene or shut down an AI system if they see it going awry. The EU’s AI Act mandates human oversight for high-risk AI systems, emphasizing that the people overseeing them must be able to interpret the output and to intervene in or override it.

To make oversight effective, organizations should train the human supervisors about the AI’s capabilities and limitations. One challenge is automation bias – people can become complacent and over-trust the AI. To combat this, periodic drills or random auditing of AI decisions can keep human reviewers engaged (for instance, spot-check some instances where the AI said “deny loan” to ensure the decision was justified).
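
Random auditing can be as simple as drawing a fixed share of one decision type for human re-review. In this sketch the sampling rate, the record layout, and the `outcome` field name are assumptions; a seeded generator makes the sample reproducible for audit trails.

```python
import random


def sample_for_audit(decisions, outcome="deny", rate=0.05, seed=None):
    """Randomly pick a share of decisions with the given outcome for human review."""
    rng = random.Random(seed)
    candidates = [d for d in decisions if d["outcome"] == outcome]
    if not candidates:
        return []
    k = max(1, round(len(candidates) * rate))  # always review at least one case
    return rng.sample(candidates, k)


# Hypothetical log of 200 AI loan decisions, half of them denials.
decisions = [{"id": i, "outcome": "deny" if i % 2 else "approve"} for i in range(200)]
to_review = sample_for_audit(decisions, outcome="deny", rate=0.1, seed=42)
print(len(to_review))  # 10
```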

It’s also important to cultivate an organizational mindset that values human intuition and ethical judgment alongside algorithmic logic. Front-line staff should feel empowered to question or overturn AI decisions if something seems off. In the aviation industry, pilots are trained on when to rely on autopilot and when to take control – similarly, companies should develop protocols for when to rely on AI and when a human must step in.

Ultimately, human oversight provides a safety net and a moral compass, catching issues that algorithms, which lack true understanding or empathy, might miss. It reassures customers that there’s accountability: knowing a human can hear their appeal or review their case builds trust that they are not at the mercy of unfeeling machines.

Privacy-Preserving AI: Best Practices for Secure AI Systems

AI systems often need data – but respecting privacy while leveraging data is a critical balance. Privacy-preserving AI is about techniques and practices that enable AI insights without compromising personal or sensitive information. One cornerstone practice is data minimization: only collect and use the data that is truly needed for the AI’s purpose. If an AI model can achieve its goal without certain personal identifiers, don’t include them. Techniques like anonymization or pseudonymization can help – for example, before analyzing customer behavior data, strip away names or replace them with random IDs.
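
The pseudonymization step can be sketched with Python’s standard library. Instead of purely random IDs, this version uses a keyed hash (HMAC-SHA-256), a common variant that maps the same person to the same pseudonym across datasets without keeping a lookup table. The record fields are hypothetical. Anyone holding the key can re-link pseudonyms to identities, so the key must be stored separately from the data, and the result is pseudonymized rather than anonymized.

```python
import hashlib
import hmac
import secrets


def make_pseudonymizer(key: bytes):
    """Return a function mapping an identifier to a stable, keyed pseudonym."""
    def pseudonymize(identifier: str) -> str:
        digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
        return digest[:16]  # truncated for readability; keep more bits if collisions matter
    return pseudonymize


# In practice the key would live in a secrets manager, not next to the data.
key = secrets.token_bytes(32)
pseudonymize = make_pseudonymizer(key)

# Hypothetical customer behavior records.
records = [
    {"name": "Alice Smith", "visits": 12},
    {"name": "Bob Jones", "visits": 3},
    {"name": "Alice Smith", "visits": 5},
]
cleaned = [{"customer_id": pseudonymize(r["name"]), "visits": r["visits"]} for r in records]
for row in cleaned:
    print(row)
```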

However, true anonymization can be hard (AI can sometimes re-identify patterns), so more robust approaches are gaining traction, such as Federated Learning and Differential Privacy. Federated Learning allows training AI models across multiple data sources without the data ever leaving its source – for instance, a smartphone keyboard AI that learns from users’ typing patterns can update a global model without uploading individual keystrokes, thus keeping personal data on the device.
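
Federated averaging, the basic algorithm behind many federated learning systems, can be illustrated with a toy linear model. In this sketch each hypothetical client fits y ≈ w·x + b on its own data with a few steps of gradient descent and sends back only its updated parameters; the server averages them, weighted by client data size. Real deployments add secure aggregation, client sampling, and far larger models, none of which is shown here.

```python
def local_update(w, b, data, lr=0.1, epochs=50):
    """Run gradient descent on one client's (x, y) pairs; the raw data stays here."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b  # only the parameters leave the client


def federated_averaging(clients, rounds=20):
    """Server loop: broadcast the global model, collect updates, average them."""
    w, b = 0.0, 0.0
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [(local_update(w, b, data), len(data)) for data in clients]
        w = sum(cw * n for (cw, _), n in updates) / total
        b = sum(cb * n for (_, cb), n in updates) / total
    return w, b


# Two hypothetical devices whose private data both follow y = 2x + 1.
client_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
client_b = [(0.5, 2.0), (1.5, 4.0), (3.0, 7.0)]
w, b = federated_averaging([client_a, client_b])
print(f"w={w:.3f}, b={b:.3f}")  # w=2.000, b=1.000
```

Here every client’s data shares the same underlying relationship, so averaging converges cleanly; with heterogeneous (non-IID) client data, convergence is typically slower and less predictable.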

Differential privacy adds carefully calibrated noise to data or query results so that aggregate patterns can be learned by AI, but nothing about any single individual can be pinpointed with confidence. Companies like Apple and Google have used differential privacy in practice for collecting usage statistics without identifying users. Businesses handling sensitive data (health, finance, location, etc.) should look into these techniques to maintain customer trust and comply with privacy laws. Encryption is another must: both in storage (encrypt data at rest) and in transit.
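
The classic way to add calibrated noise is the Laplace mechanism. For a counting query, adding or removing one person changes the answer by at most 1 (the sensitivity), so adding Laplace noise with scale sensitivity/ε gives ε-differential privacy for that single query. The sketch below is a central-model illustration with a hypothetical dataset and ε; it is not how Apple’s or Google’s deployments work, and every additional query consumes more of the privacy budget.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism."""
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # one person can change a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical dataset: 100 users, 30 of whom enabled a feature.
users = [{"feature_on": i < 30} for i in range(100)]
rng = random.Random(7)
noisy = private_count(users, lambda u: u["feature_on"], epsilon=0.5, rng=rng)
print(round(noisy, 1))  # 30 plus random noise with scale 2
```

Smaller ε means a larger noise scale and stronger privacy; the analyst trades accuracy of each released statistic for protection of every individual in the data.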

Moreover, consider access controls for AI models – sometimes the model itself can unintentionally leak data (for example, a language model might regurgitate parts of its training text). Limit who can query sensitive models and monitor outputs. On an organizational level, align your AI projects with data protection regulations (GDPR, CCPA, etc.) from the design phase – conduct Privacy Impact Assessments for new AI systems.

Be transparent with users about data use: obtain informed consent where required, and offer opt-outs for those who do not want their data used for AI training. By building privacy preservation into AI development, companies protect users’ rights and avoid mishaps like data leaks or misuse scandals. It’s an investment in long-term data sustainability – if people trust that their data will be handled ethically, they are more likely to allow its use, fueling AI innovation in a virtuous cycle.

Ethical AI Auditing: Ongoing Monitoring & Compliance Strategies

Just as financial processes get audited, AI systems benefit from ethics and compliance audits. An ethical AI audit involves systematically reviewing an AI system for adherence to certain standards or principles (fairness, accuracy, privacy, etc.) both prior to deployment and periodically thereafter. Businesses should establish an AI audit function – either an internal committee or external auditors (or both) – to evaluate important AI systems. For example, a bank using AI for credit decisions might have an audit team check that the model meets all regulatory requirements (such as the U.S. Equal Credit Opportunity Act for lending fairness) and ethical benchmarks, generating a report of findings and recommendations.

Key elements to check include: bias metrics (are outcomes equitable?), error rates and performance (especially in safety-critical systems – are they within acceptable range?), explainability (can the decisions be interpreted and justified?), data lineage (is the training data sourced and used properly?), and security (is the model vulnerable to adversarial attacks or data leaks?).

Audits might also review the development process – was there adequate documentation? Were proper approvals and testing done before launch? Some organizations are adopting checklists from frameworks like the IEEE 7000 series or the NIST AI Risk Management Framework as baseline audit criteria. It’s wise to involve multidisciplinary experts in audits: data scientists, legal, compliance officers, ethicists, and domain experts.

After an audit, there should be a plan to address any red flags – perhaps retraining a model, improving documentation, or even pulling an AI tool out of production until issues are fixed. Additionally, monitoring should be continuous: set up dashboards or automated tests for ethics metrics (for instance, an alert if the demographic mix of loan approvals drifts from expected norms, indicating possible bias). With regulations on the horizon, maintaining audit trails will also help with demonstrating compliance to authorities.
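
One way to operationalize that alert is to compare each group’s approval rate in a recent window against a baseline agreed during the audit. The baseline rates, 10-point tolerance, and minimum sample size below are hypothetical parameters; a production system would also account for statistical noise and for legitimate changes in the applicant pool.

```python
def approval_drift_alerts(baseline_rates, window, tolerance=0.10, min_count=50):
    """Flag groups whose approval rate in `window` moved more than `tolerance`
    (absolute) from the audited baseline. `window` is a list of (group, approved)."""
    counts = {}
    for group, approved in window:
        n, approvals = counts.get(group, (0, 0))
        counts[group] = (n + 1, approvals + int(approved))

    alerts = {}
    for group, (n, approvals) in counts.items():
        expected = baseline_rates.get(group)
        if expected is None or n < min_count:
            continue  # unknown group or too few decisions to judge
        rate = approvals / n
        if abs(rate - expected) > tolerance:
            alerts[group] = {"expected": expected, "observed": rate, "decisions": n}
    return alerts


# Hypothetical baseline from the last audit and one week of decisions.
baseline = {"group_a": 0.60, "group_b": 0.58}
week = ([("group_a", True)] * 60 + [("group_a", False)] * 40
        + [("group_b", True)] * 40 + [("group_b", False)] * 60)
print(approval_drift_alerts(baseline, week))
# {'group_b': {'expected': 0.58, 'observed': 0.4, 'decisions': 100}}
```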

Beyond formal audits, companies can encourage whistleblowing and feedback loops – allow employees or even users to report AI-related concerns without fear, and investigate those promptly. In summary, treat ethical AI governance as an ongoing process, not a one-time checkbox. By instituting regular audits and strong oversight, businesses can catch problems early, adapt to new ethical standards, and ensure their AI systems remain worthy of trust over time.

For a deeper dive into how to implement ethical principles during AI development, check out our comprehensive guide on ethical AI development.

The Future of AI Ethics: Emerging Concerns & Solutions

AI is a fast-evolving field, and with it come new ethical frontiers that businesses and policymakers will need to navigate. Looking ahead, here are some emerging AI ethics concerns and prospective solutions:

AI in Warfare: Autonomous Weapons & Military AI Ethics

The use of AI in military applications – from autonomous drones to AI-driven cyber weapons – is raising alarms globally. Autonomous weapons, often dubbed “killer robots,” could make life-and-death decisions without human intervention. The ethical issues here are profound: Can a machine reliably follow international humanitarian law? Who is accountable if an AI misidentifies a target and kills civilians?

There is a growing movement, including tech leaders and roboticists, calling for a ban on lethal autonomous weapons. Even the United Nations Secretary-General has urged a prohibition, warning that machines with the power to kill people autonomously should be outlawed. Some nations are pursuing treaties to control this technology. For businesses involved in defense contracting, these debates are critical.

Companies will need to decide if or how to participate in developing AI for combat – some have chosen not to, on ethical grounds (Google notably pulled out of a Pentagon AI project after employee protests). If military AI is developed, embedding strict constraints (like requiring human confirmation before a strike – “human-in-the-loop” for any lethal action) is an ethical must-do.

There’s also the risk of an AI arms race, where nations feel compelled to match each other’s autonomous arsenals, potentially lowering the threshold for conflict. The hopeful path forward is international regulation: similar to how chemical and biological weapons are constrained, many advocate doing the same for AI weapons before they proliferate.

In any case, the specter of AI in warfare is a reminder that AI ethics isn’t just about fairness in ads or loans – it can be about the fundamental right to life and the rules of war. Tech businesses, ethicists, and governments will have to work together to ensure AI’s use in warfare, if it continues, is tightly governed by human values and global agreements.

The Rise of Artificial General Intelligence (AGI) & Existential Risks

Most of the AI we discuss today is “narrow AI,” focused on specific tasks. But looking to the future, many are pondering Artificial General Intelligence (AGI) – AI that could match or exceed human cognitive abilities across a wide range of tasks. Some experts estimate AGI could be developed in a matter of decades, and this raises existential risks and ethical questions of a different magnitude.

If an AI became vastly more intelligent than humans (often termed superintelligence), could we ensure it remains aligned with human values and goals? Visionaries like Stephen Hawking and Elon Musk have issued warnings that uncontrolled superintelligent AI could even pose an existential threat to humanity. In 2023, numerous AI scientists and CEOs signed a public statement cautioning that AI could potentially lead to human extinction if mismanaged, urging global priority on mitigating this risk. This concern, once seen as science fiction, is increasingly part of serious policy discussions.

Ethically, how do we plan for a future technology that might surpass our understanding? One solution avenue is AI alignment research – a field devoted to ensuring advanced AI systems have objectives that are beneficial and that they don’t behave in unexpected, dangerous ways. Another aspect is governance: proposals range from international monitoring of AGI projects, to treaties that slow down development at a certain capability threshold, to requiring that AGIs are developed with safety constraints and perhaps open scrutiny.

For current businesses, AGI is not around the corner, but the principles established today (like transparency, fail-safes, and human control) lay the groundwork for handling more powerful AI tomorrow. Policymakers might consider scenario planning and even simulations for AGI risk, treating it akin to how we treat nuclear proliferation – a low probability but high impact scenario that merits precaution.

The key will be international cooperation, because an uncontrollable AGI built in one part of the world would not respect borders. Preparing for AGI also touches on more philosophical ethics: if we eventually create an AI as intelligent as a human, would it have rights? This leads us into the next topic.

The Ethics of AI Consciousness & Sentient AI Debates

Recent events (like a Google engineer’s claim that an AI chatbot became “sentient”) have sparked debate about whether an AI could be conscious or deserve moral consideration. Today’s AI, no matter how convincing, is generally understood as not truly sentient – it doesn’t have self-awareness or subjective experiences. However, as AI models become more complex and human-like in conversation, people are starting to project minds onto them.

Ethically, this raises two concerns. On one hand, if AI someday did achieve some form of consciousness, we would face a moral imperative to treat it with consideration (questions of AI rights or personhood, long a staple of science fiction, could become a real issue to grapple with). On the other hand, and more pressingly, people might mistakenly believe current AIs are conscious when they are not, leading to emotional attachment or misjudgment.

The Google case illustrates the second risk: in 2022, the engineer was placed on leave after insisting that the company’s AI language model LaMDA was sentient and had feelings, a claim Google and most experts rejected. The ethical guideline here for businesses is transparency and education: make sure users understand the AI’s capabilities and limits (for example, by putting clear disclaimers in chatbots such as “I am an AI and do not have feelings”).

As AI becomes more ubiquitous in companionship roles (like virtual assistants, elder care robots, etc.), this line could blur further, so it’s important to study how interacting with very human-like AI affects people psychologically and socially. Some argue there should be regulations on how AI presents itself – perhaps even preventing companies from knowingly designing AI that fools people into thinking it’s alive or human (to avoid deception and dependency issues).

Meanwhile, philosophers and technologists are researching what criteria would even define AI consciousness. It’s a complex debate, but forward-looking organizations might start convening ethics panels to discuss how they would respond if an AI in their purview ever claimed to be alive or exhibited unprogrammed self-directed behavior.

While we’re not there yet, the conversation is no longer taboo outside academic circles. In essence, we should approach claims of AI sentience with healthy skepticism, but also with an open mind to future possibilities, ensuring that we have ethical frameworks ready for scenarios that once belonged only to speculative fiction.

AI & Intellectual Property: Who Owns AI-Generated Content?

The surge in generative AI – AI that creates text, images, music, and more – has led to knotty intellectual property (IP) questions. When an AI creates a piece of artwork or invents something, who owns the rights to that creation? Current laws in many jurisdictions, such as the U.S., are leaning toward the view that if a work has no human author, it cannot be copyrighted.

For instance, the U.S. Copyright Office recently clarified that purely AI-generated art or writing (with no creative edits by a human) is not subject to copyright protection, as copyright requires human creativity. This means if your company’s AI produces a new jingle or design, you might not be able to stop competitors from using it, unless a human can claim authorship through significant involvement. This is an ethical and business concern: companies investing in generative AI need to navigate how to protect their outputs or at least how to use them without infringing on others’ IP.

Another side of the coin is the data used to train these AI models – often AI is trained on large datasets of copyrighted material (images, books, code) scraped from the internet. Artists, writers, and software developers have started to push back, filing lawsuits claiming that AI companies violated copyright law by using their creations without permission to train AI that now competes with human content creators.

Ethically, there’s a balance to find between fostering innovation and respecting creators’ rights. Potential solutions include new licensing models (creators could opt in to allow their works to be used for AI training, possibly for compensation) or legislation that defines fair use boundaries for AI training data. Some tech companies are also developing tools to watermark or otherwise identify AI-generated content, which could help manage how such content is treated under IP law (for example, by requiring disclosure that a piece was AI-made).

Businesses using generative AI should develop clear policies: ensure that human employees review or curate AI outputs if they want IP protection, avoid directly commercializing raw AI outputs that might be derivative of copyrighted training data, and monitor how the law develops. This area is moving quickly, and courts and lawmakers are only beginning to address cases involving AI-generated images and code.

In the meantime, an ethical approach is to give credit (and potentially compensation) to sources that AI draws from, and to be transparent when content is machine-made. Ultimately, society will need to update IP frameworks for the AI era, balancing innovation with the incentive for human creativity.

The Role of Blockchain & Decentralized AI in Ethical AI Governance

Interestingly, technologies like blockchain are being explored as tools to improve AI ethics and governance. Blockchain’s core properties (transparency, immutability, decentralization) can address some AI trust issues. For example, blockchain can create audit trails for AI decisions and data usage: every time an AI model is trained or makes a critical decision, a record could be logged on a blockchain for stakeholders to review later, providing tamper-evident accountability. This could help with the transparency challenge, because it provides a ledger of “why the AI did what it did” (including which data was used, which version of the model, who approved it, etc.).
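The tamper-evidence behind such an audit trail comes from hash chaining: each entry stores the hash of the entry before it, so quietly editing any past record breaks every link that follows. The sketch below shows only that mechanism in a single process; it is not a blockchain (there is no distribution or consensus), and the class name `AuditLog` and record fields such as `model_version` and `approved_by` are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _hash_entry(prev_hash: str, record: dict) -> str:
    """SHA-256 of the previous hash plus a canonical JSON encoding of the record."""
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only, hash-chained log of AI events (hypothetical illustration)."""
    entries: list = field(default_factory=list)

    GENESIS = "0" * 64  # stand-in "previous hash" for the very first entry

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry_hash = _hash_entry(prev_hash, record)
        # Store a copy so later changes to the caller's dict don't alter the log.
        self.entries.append({"record": dict(record), "prev_hash": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; an edited record or broken link returns False."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if _hash_entry(prev_hash, entry["record"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


# Usage: log a training run and a decision, then tamper with history.
log = AuditLog()
log.append({"event": "train", "model_version": "v1.2", "dataset_id": "loans-q1", "approved_by": "risk-team"})
log.append({"event": "decision", "model_version": "v1.2", "input_id": "app-1043", "output": "deny"})
print(log.verify())  # True

log.entries[0]["record"]["approved_by"] = "someone-else"  # rewrite an old record
print(log.verify())  # False
```

In a blockchain-based design, the latest hash (or the whole chain) would additionally be anchored on a shared ledger so that no single party could rewrite the log and recompute every hash unnoticed; that distribution step is outside this sketch.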

Decentralized AI communities have also emerged, aiming to spread AI development across many participants rather than a few big tech companies. The ethical advantage here is preventing concentration of AI power – if AI models and their governance are distributed via blockchain smart contracts, no single entity solely controls the AI, which could reduce biases and unilateral misuse. For instance, a decentralized AI might use a Web3 reputation system where the community vets and votes on AI model updates or usage policies.

Moreover, blockchain-based data marketplaces are being developed to let people contribute data for AI in a privacy-preserving way and be compensated, with everything tracked on-chain. This could give individuals more agency over how their data is used in AI (in line with the ethical principles of consent and fair sharing of benefits). While these concepts are at an early stage, pilot projects hint at their potential: some startups use blockchain to verify the integrity of AI-generated content (fighting deepfakes with a digital certificate of authenticity), and there are experiments that use blockchain to coordinate federated learning across devices without a central coordinator.

Of course, blockchain has its own challenges (like energy use, though newer networks are more efficient), but the convergence of AI and blockchain could produce novel solutions to AI ethics issues.

For businesses, keeping an eye on these innovations is worthwhile. In a few years, we might see standard tools where AI models come with a blockchain-based “nutrition label” or history that anyone can audit for bias or tampering. Decentralized governance mechanisms might also allow customers or external experts to have a say in how a company’s AI should behave – imagine an AI system where parameters on sensitive issues can only be changed after a decentralized consensus.

These are new frontiers in responsible AI: using one emerging tech (blockchain) to bring more trust and accountability to another (AI). If successful, they could fundamentally shift how we ensure AI remains beneficial and aligned with human values, by making governance more transparent and participatory.

Conclusion & Key Takeaways on AI Ethics Concerns

AI is no longer the wild west – businesses, governments, and society at large are recognizing that AI ethics concerns must be addressed head-on to harness AI’s benefits without causing harm. As we’ve explored, the stakes are high.

Unethical AI can perpetuate bias, violate privacy, spread disinformation, even endanger lives or basic rights. Conversely, responsible AI can lead to more inclusive products, greater trust with customers, and sustainable innovation.

What can businesses, developers, and policymakers do now?

First, treat AI ethics as an integral part of your strategy, not an afterthought. That means investing in ethics training for your development teams, establishing clear ethical guidelines or an AI ethics board, and conducting impact assessments before deploying AI. Make fairness, transparency, and accountability core requirements for any AI project – for example, include a “fairness check” and an “explainability report” in your development pipeline as you would include security testing. Developers should stay informed of the latest best practices and toolkits for bias mitigation and explainable AI, integrating them into their work.
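As one illustration of what a “fairness check” step could look like, the sketch below computes demographic parity (the gap in positive-prediction rates between groups) and fails the pipeline when that gap exceeds a threshold. Demographic parity is only one of several fairness metrics, the 0.1 threshold is an assumed policy choice, and the function names are hypothetical rather than taken from any particular toolkit.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1s) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def fairness_check(predictions, groups, max_gap=0.1):
    """Pipeline gate: raise if the parity gap exceeds max_gap (assumed threshold)."""
    gap = demographic_parity_gap(predictions, groups)
    if gap > max_gap:
        raise ValueError(f"Fairness check failed: selection-rate gap {gap:.2f} exceeds {max_gap}")
    return gap


# Usage: a model that approves 75% of group A but only 25% of group B.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(preds, groups))  # {'A': 0.75, 'B': 0.25}
try:
    fairness_check(preds, groups)
except ValueError as err:
    print(err)  # Fairness check failed: selection-rate gap 0.50 exceeds 0.1
```

Raising an exception lets the check behave like a failing security test in a CI pipeline; a team might instead log a warning and require human sign-off, depending on its policy.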

Business leaders should champion a culture where raising ethical concerns is welcomed (remember Google’s lesson – listen to your experts and employees).

If you’re procuring AI solutions from vendors, evaluate them not just on performance, but also on how they align with your ethical standards (ask for information on their training data, bias controls, etc.). Policymakers, on the other hand, should craft regulations that protect citizens from AI harms while encouraging innovation – a difficult but necessary balance.

That involves collaborating with technical experts to draft rules that are enforceable and effective, and updating existing laws (such as anti-discrimination, consumer protection, and privacy laws) to cover AI contexts. We are already seeing this in action with the EU’s AI Act and U.S. initiatives; more will follow globally.

Policymakers can also promote the sharing of best practices – for instance, by supporting open research in AI ethics and creating forums for companies to transparently report AI incidents and learn from each other.

How can society prepare for ethical AI challenges?

Public education is crucial. As AI becomes part of everyday life, people should understand both its potential and its pitfalls. This fosters nuanced discussion instead of fearmongering or blind optimism. Educational institutions might include AI literacy and ethics in their curricula so that the next generation of leaders and users is AI-savvy. Multistakeholder dialogue, involving technologists, ethicists, sociologists, and the communities affected by AI, will help ensure that diverse perspectives inform AI development.

Perhaps most importantly, we must all recognize that AI ethics is an ongoing journey, not a one-time fix. Technology will continue to evolve, presenting new dilemmas (as we discussed with AGI or sentient AI scenarios). Continuous research, open conversation, and adaptive governance are needed. Businesses that stay proactive and humble – acknowledging that they won’t get everything perfect but committing to improve – will stand the test of time. Policymakers who remain flexible and responsive to new information will craft more effective frameworks than those who ossify.

The path forward involves collaboration: companies sharing transparency about their AI and cooperating with oversight, governments providing clear guidelines and avoiding heavy-handed rules that stifle beneficial AI, and civil society keeping a vigilant eye on both, to speak up for those who might be adversely affected. If we approach AI with the mindset that its ethical dimension is as important as its technical prowess, we can innovate with confidence.

Responsible AI is not just about avoiding disasters; it’s also an opportunity to build a future where AI enhances human dignity, equality, and well-being. By taking the responsible steps outlined in this guide, businesses and policymakers can ensure that AI becomes a force for good aligned with our highest values, rather than a source of unchecked harm.

Whether you’re a business leader implementing AI or a policymaker shaping the rules, now is the time to act. Start an AI ethics task force at your organization, if you haven’t already, to audit and guide your AI projects. Engage with industry groups or standards bodies on AI ethics to stay ahead of emerging norms. If you develop AI, publish an ethics statement or transparency report about your system – show users you take their concerns seriously.

Policymakers, push forward with smart regulations and funding for ethical AI research. And for all stakeholders: keep the conversation going. AI ethics is not a box to be checked; it’s a dialogue to be sustained. By acting decisively and collaboratively today, we can pave the way for AI innovations that are not only intelligent but also just and worthy of our trust.

—

References:

- The Enterprisers Project – “The state of Artificial Intelligence (AI) ethics: 14 interesting statistics.” (2020) – Highlights rising awareness of AI ethics issues in organizations, including statistics such as 90% of companies encountering ethical issues and an 80% jump in ethical AI charters.

- IMD Business School – “AI Ethics: What it is and why it matters for your business.” – Defines AI ethics and its core principles (fairness, transparency, accountability) for businesses.

- Reuters (J. Dastin) – “Amazon scraps secret AI recruiting tool that showed bias against women.” (2018) – Report on Amazon’s biased hiring AI, which penalized resumes containing the word “women’s” and taught itself to prefer male candidates.